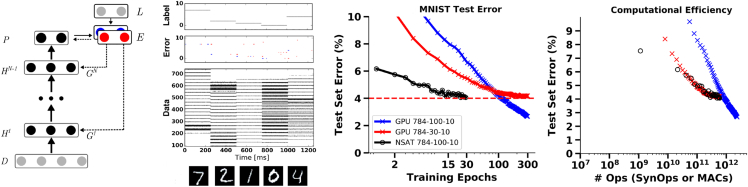

Figure 1.

Supervised Deep Learning in Spiking Neurons

Event-driven Random Back-Propagation (eRBP) is an event-based synaptic plasticity rule that approximates back-propagation (BP) in spiking neural networks.

(Left) The network performing eRBP consists of feedforward layers (H1,…,HN) for prediction and feedback layers for supervised training with labels (targets) L. Full arrows indicate synaptic connections, thick full arrows indicate plastic synapses, and dashed arrows indicate synaptic plasticity modulation. In this example, the digits 7, 2, 1, 0, 4 were presented to the network in sequence after being transformed into spike trains (layer D). Neurons indicated by black circles were implemented as two-compartment spiking neurons. The first compartment follows standard Integrate-and-Fire (I&F) dynamics, whereas the second integrates top-down errors and multiplicatively modulates learning. The error is the difference between labels (L) and predictions (P) and is implemented with a pair of neurons, one coding for positive error (blue) and one for negative error (red). Each hidden neuron receives inputs from a random combination of the pair of error neurons to implement random BP. Output neurons receive inputs from the pair of error neurons in a one-to-one fashion. (Middle) MNIST classification error on the test set for a fully connected 784-100-10 network, using limited-precision states (8-bit fixed-point weights, 16-bit state components) and on GPU (TensorFlow, 32-bit floating point). (Right) Learning efficiency, expressed as the number of operations needed to reach a given accuracy. The spiking neural network needs as many or fewer operations (SynOps) than the artificial neural network (MACs). On this small MNIST task, the spiking neural network required about three times more neurons than the artificial neural network to achieve the same accuracy, but the same number of respective operations. Figures adapted from Neftci et al. (2017) and Detorakis et al. (2017).
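The error feedback wiring described for the left panel can be illustrated with a minimal NumPy sketch. It is a simplified, single-time-step illustration under stated assumptions, not the authors' implementation: the I&F membrane dynamics are omitted, the negative error neuron is assumed to project with the opposite sign of the positive one, and the names `error_channels`, `modulated_update`, `G`, and the learning rate are hypothetical.

```python
import numpy as np

def error_channels(labels, predictions):
    """Split the error L - P into a positive and a negative channel,
    mimicking the pair of error neurons (blue / red in the figure)."""
    err = labels - predictions
    return np.maximum(err, 0.0), np.maximum(-err, 0.0)

def modulated_update(pre_spikes, modulation, lr):
    """Event-driven update: only synapses whose presynaptic neuron
    spiked change, scaled multiplicatively by the error signal held
    in the postsynaptic neuron's second compartment."""
    return lr * np.outer(modulation, pre_spikes)

rng = np.random.default_rng(0)
n_in, n_hid, n_out = 784, 100, 10  # layer sizes from the caption

# Fixed random feedback weights (assumption: uniform in [-1, 1]).
G = rng.uniform(-1.0, 1.0, size=(n_hid, n_out))

# Toy label and prediction vectors (illustrative values only).
labels = np.zeros(n_out)
labels[7] = 1.0
predictions = np.full(n_out, 0.1)

pos, neg = error_channels(labels, predictions)
U_out = pos - neg          # output neurons: one-to-one error input
U_hid = G @ (pos - neg)    # hidden neurons: random combination of errors

# Binary spike vectors for one time step (illustrative firing rates).
in_spikes = (rng.random(n_in) < 0.05).astype(float)
hid_spikes = (rng.random(n_hid) < 0.10).astype(float)

dW_hid = modulated_update(in_spikes, U_hid, lr=1e-3)   # shape (100, 784)
dW_out = modulated_update(hid_spikes, U_out, lr=1e-3)  # shape (10, 100)
```

Because the update is an outer product with a binary spike vector, synapses from silent presynaptic neurons receive no update at all, which is the sense in which the rule is event-driven in this sketch.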