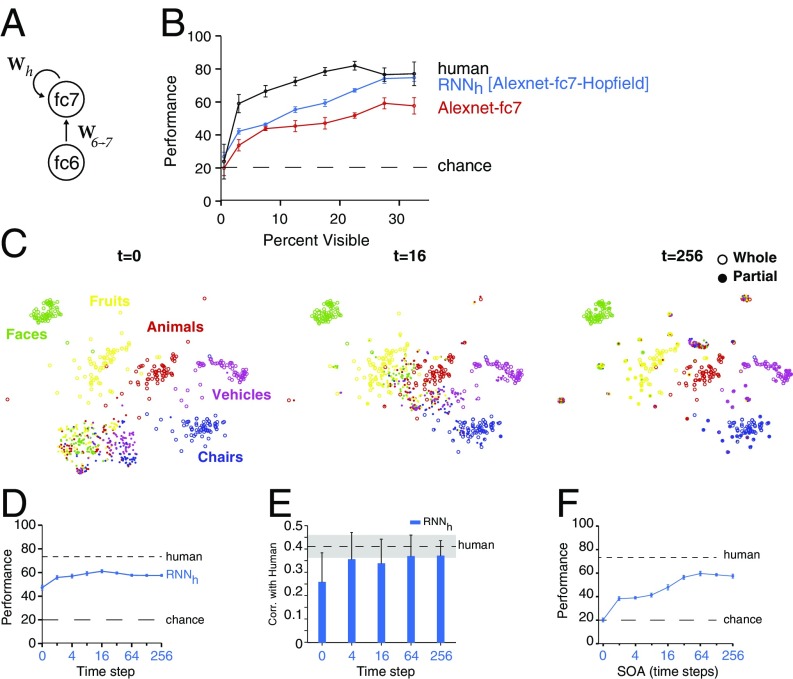

Fig. 4.

Dynamic RNN showed improved performance over time and was impaired by backward masking. (A) The top-level representation in AlexNet (fc7) receives inputs from fc6 through the weights $W_{6\to7}$. We added a recurrent loop within this top-level representation (RNN). The weight matrix $W_h$ governs the temporal evolution of the fc7 representation (Methods). (B) Performance of RNN$_h$ (blue) as a function of visibility. RNN$_h$ approached human performance (black curve) and was a significant improvement over the original fc7 layer (red curve). The red and black curves are copied from Fig. 3A for comparison. Error bars denote SEM. (C) Temporal evolution of the RNN$_h$ feature representation, visualized with stochastic neighbor embedding. Over time, the representations of partial objects move toward the cluster of whole images from the correct category. (D) Overall performance of RNN$_h$ as a function of recurrent time step, compared with humans (top dashed line) and chance (bottom dashed line). Error bars denote SEM (five-way cross-validation; Methods). (E) Correlation (Corr.) between RNN$_h$ and humans in the classification of each object. The dashed line indicates the upper bound set by human–human similarity, obtained by correlating half of the subject pool with the other half. Regressions were computed separately for each category, and the resulting correlation coefficients were averaged across categories. Over time, the model becomes more human-like (SI Appendix, Fig. S6). Error bars denote SD across categories. (F) Effect of backward masking. The same backward mask used in the psychophysics experiments was fed to RNN$_h$ at different SOA values (x axis). Error bars denote SEM (five-way cross-validation). Performance improved with increasing SOA (SI Appendix, Fig. S10).
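The caption defers the exact form of the $W_h$ recurrence to Methods, so the following is only a hedged toy illustration of how recurrent attractor dynamics can complete a partial pattern and how a backward mask can interrupt that process. It *assumes* Hopfield-style binary units with $W_h$ set by a Hebbian outer-product rule over stored whole-object patterns, and a constant input drive standing in for the fc6 input through $W_{6\to7}$. A mask is modeled by swapping that drive for a mask pattern from time step SOA onward. The function names, the `gain` parameter, and the 16-unit patterns are hypothetical and are not taken from the paper.

```python
# Toy sketch (assumption): Hopfield-style attractor dynamics standing in for
# the fc7 recurrence governed by W_h. Not the authors' model or code.

def sign(x):
    # Binary threshold unit; exact ties map to +1.
    return 1 if x >= 0 else -1

def hebbian_weights(patterns):
    # Hypothetical choice of W_h: outer-product (Hebbian) rule over stored
    # whole-object patterns, W[i][j] = sum_p p[i] * p[j] / n, zero diagonal.
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / n
    return w

def run(w, cue, steps, mask=None, soa=None, gain=0.7):
    # Iterate h_{t+1} = sign(gain * x_t + W_h h_t). The drive x_t is the
    # (partial) cue before time step `soa`, and the mask from `soa` onward.
    # `gain` is a hypothetical input strength, not a value from the paper.
    n = len(cue)
    h = list(cue)  # start from the feedforward (partial) representation
    for t in range(steps):
        x = mask if (mask is not None and t >= soa) else cue
        h = [sign(gain * x[i] + sum(w[i][j] * h[j] for j in range(n)))
             for i in range(n)]
    return h

# Toy example: two stored 16-unit "whole object" patterns, a mask pattern,
# and a cue whose second half is occluded (set to zero).
whole_a = [1] * 8 + [-1] * 8
whole_b = [1, -1] * 8
noise_mask = [1, 1, -1, -1] * 4
cue = whole_a[:8] + [0] * 8
w_h = hebbian_weights([whole_a, whole_b])

print(run(w_h, cue, steps=6) == whole_a)                          # True
print(run(w_h, cue, steps=6, mask=noise_mask, soa=0) == whole_a)  # False
print(run(w_h, cue, steps=6, mask=noise_mask, soa=3) == whole_a)  # True
```

In this toy setting the occluded cue settles into the stored pattern after one update. A mask arriving at SOA = 0 instead pulls the state into the mask pattern, while a later mask is outweighed by the recurrent input. This only loosely mirrors the trends in panels D and F and says nothing about the model's actual parameters.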
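The panel E analysis correlates model and human scores for each object within a category, averages the per-category coefficients, and compares the result with a split-half human–human ceiling. A minimal sketch of that procedure follows. It assumes that the per-object score is a recognition accuracy stored in a dictionary keyed by object, and that the ceiling is averaged over repeated random splits of the subject pool. The data layout, the repetition over splits, and all names and toy values are hypothetical.

```python
import random

def pearson(x, y):
    # Pearson correlation coefficient of two equal-length sequences.
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5

def mean_category_corr(scores_a, scores_b, category):
    # Correlate per-object scores within each category, then average the
    # per-category correlation coefficients across categories.
    rs = []
    for c in sorted(set(category.values())):
        objs = sorted(o for o in category if category[o] == c)
        rs.append(pearson([scores_a[o] for o in objs],
                          [scores_b[o] for o in objs]))
    return sum(rs) / len(rs)

def average_subjects(responses, idx):
    # Mean per-object score over the subjects whose indices are in idx.
    objs = responses[idx[0]].keys()
    return {o: sum(responses[i][o] for i in idx) / len(idx) for o in objs}

def split_half_ceiling(responses, category, n_splits=100, seed=0):
    # Human-human upper bound: correlate one half of the subject pool with
    # the other half. Averaging over random splits is an assumption here.
    rng = random.Random(seed)
    subjects = list(range(len(responses)))
    vals = []
    for _ in range(n_splits):
        rng.shuffle(subjects)
        half = len(subjects) // 2
        a = average_subjects(responses, subjects[:half])
        b = average_subjects(responses, subjects[half:])
        vals.append(mean_category_corr(a, b, category))
    return sum(vals) / len(vals)

# Hypothetical toy data: two categories with three objects each.
category = {"a1": "cat", "a2": "cat", "a3": "cat",
            "b1": "dog", "b2": "dog", "b3": "dog"}
human = {"a1": 0.9, "a2": 0.5, "a3": 0.2, "b1": 0.8, "b2": 0.4, "b3": 0.6}
model = {"a1": 0.7, "a2": 0.6, "a3": 0.1, "b1": 0.9, "b2": 0.3, "b3": 0.5}
print(mean_category_corr(model, human, category))
```

Averaging within-category coefficients keeps between-category differences in overall difficulty from inflating the model–human correlation.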