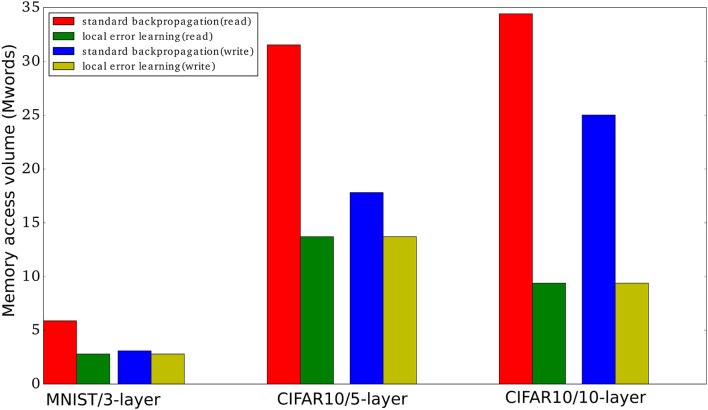

Figure 6.

Memory access volume (reads and writes) when training with local errors and when training with standard backpropagation. We report the memory access volume for each of the three networks used in this paper when training on one mini-batch of 100 examples. The numbers of parameters, $|P_i|$, for the MNIST network, the 5-layer convnet, and the 10-layer convnet are 2,784,000, 13,716,512, and 9,402,048, respectively. The corresponding numbers of mini-batch activations, $|A_i|$, are 3000 × 100, 40960 × 100, and 156160 × 100, where 100 is the mini-batch size. We obtained the read/write volumes from Table 1.
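The sizes quoted in the caption can be checked with a little arithmetic. The sketch below copies the parameter counts and per-example activation counts from the caption and multiplies the activations by the mini-batch size to get $|A_i|$. It also converts both counts to MiB, assuming 32-bit floats. That precision is an assumption, because the caption does not state it. The sketch does not reproduce the read/write formulas of Table 1, which are not shown here. The names `networks`, `minibatch_activations`, and `footprint_mib` are hypothetical helpers used only for illustration.

```python
# Parameter and per-example activation counts copied from the Figure 6 caption.
BATCH_SIZE = 100

networks = {
    "MNIST network": {"params": 2_784_000, "acts_per_example": 3_000},
    "5-layer convnet": {"params": 13_716_512, "acts_per_example": 40_960},
    "10-layer convnet": {"params": 9_402_048, "acts_per_example": 156_160},
}

# Assumption: 32-bit floating-point values; the caption does not state the precision.
BYTES_PER_VALUE = 4


def minibatch_activations(acts_per_example: int, batch_size: int = BATCH_SIZE) -> int:
    """|A_i| for one mini-batch: per-example activations times the batch size."""
    return acts_per_example * batch_size


def footprint_mib(num_values: int, bytes_per_value: int = BYTES_PER_VALUE) -> float:
    """Storage for num_values values, in MiB (2**20 bytes)."""
    return num_values * bytes_per_value / 2**20


if __name__ == "__main__":
    for name, net in networks.items():
        acts = minibatch_activations(net["acts_per_example"])
        print(
            f"{name:>17}: |P_i| = {net['params']:>11,}  |A_i| = {acts:>11,}  "
            f"(~{footprint_mib(net['params']):.1f} MiB params, "
            f"~{footprint_mib(acts):.1f} MiB activations)"
        )
```

The printout shows that $|A_i|$ grows much faster than $|P_i|$ in the deeper convnets. For example, the 10-layer convnet has fewer parameters than the 5-layer one but about 3.8 times as many activations per mini-batch.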