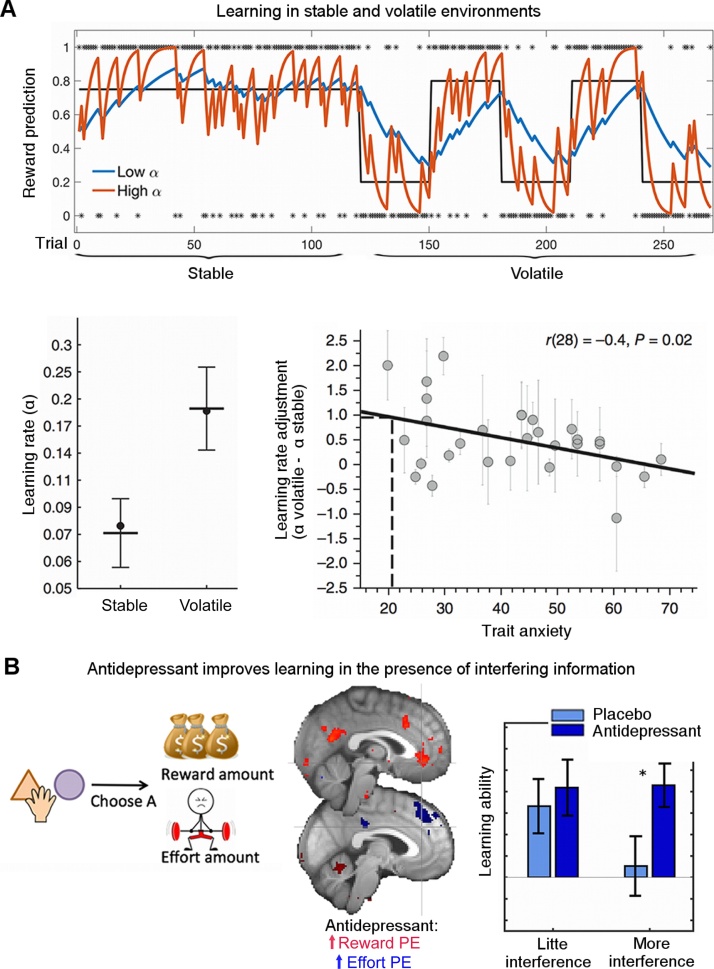

Fig. 3.

Environmental context and adaptive learning.

A) Effects of different simulated learning rates (α) on learning in stable (trials 1–120) and volatile (trials 120–270) environments. In stable environments, a lower α (blue) produces predictions that are closer to the true underlying probability of a stimulus (black) and less affected by random reward omissions. In volatile environments, by contrast, a lower α causes predictions to lag behind the rapidly changing underlying probabilities, whereas a higher α (red) produces predictions that track the true probabilities more closely. Behrens et al. [51] (bottom left) found that human participants do indeed modulate their learning rate, learning more slowly in stable environments and faster in volatile ones. Browning et al. [53] (bottom right) found that this ability to adjust α between volatile and stable environments was related to trait anxiety, with more anxious participants less able to adjust their learning rates. B) We [56] designed an experiment to test whether serotonergic antidepressants, which have been proposed to increase plasticity in animal models, also improve learning in humans. In the task (simplified here to its relevant features), participants repeatedly chose between two options on the basis of their reward and effort magnitudes, which had to be learnt from experience. Neurally, the antidepressant increased learning signals, i.e. prediction errors, for both reward (left, red) and effort (right, blue). Importantly, participants had to learn two dimensions (reward and effort) simultaneously, so learning about one could interfere with learning about the other, making learning more difficult. Learning well could therefore mean learning more robustly, i.e. being less affected by interference. Indeed, we found that, compared with placebo, the antidepressant improved how well participants learnt (i.e. used prediction errors to guide future choices) when there was more interference.
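The trade-off in panel A can be reproduced with a minimal simulation. It assumes a Rescorla–Wagner (delta-rule) learner, V ← V + α(r − V), where the prediction error r − V is the kind of learning signal referred to in panel B. Everything else is an illustrative assumption, not the parameters behind the figure or the studies in [51], [53] and [56]: a reward probability of 0.75 in the stable phase, reversals between 0.2 and 0.8 every 25 trials in the volatile phase, an initial prediction of 0.5, and the two learning rates 0.05 and 0.3. The function names are also hypothetical.

```python
import random
from statistics import mean


def true_probabilities(n_stable=120, n_volatile=150, p_stable=0.75,
                       p_volatile=(0.2, 0.8), block_len=25):
    """Hypothetical schedule: a fixed reward probability, then periodic reversals."""
    probs = [p_stable] * n_stable
    for t in range(n_volatile):
        # Alternate between the two volatile probabilities every block_len trials.
        probs.append(p_volatile[(t // block_len) % 2])
    return probs


def rescorla_wagner(rewards, alpha, v0=0.5):
    """Delta-rule learner: V <- V + alpha * (r - V).

    Returns the prediction held *before* each outcome is observed.
    """
    v = v0
    predictions = []
    for r in rewards:
        predictions.append(v)
        delta = r - v        # prediction error
        v += alpha * delta   # update scaled by the learning rate
    return predictions


def phase_errors(alpha, probs, n_stable, n_runs=200, seed=0):
    """Mean squared error between prediction and true probability in each phase.

    The same seed is used for every alpha, so all learners see identical outcomes.
    """
    rng = random.Random(seed)
    stable, volatile = [], []
    for _ in range(n_runs):
        # Binary outcomes: 1 = reward delivered, 0 = reward omitted.
        rewards = [1 if rng.random() < p else 0 for p in probs]
        preds = rescorla_wagner(rewards, alpha)
        sq = [(v - p) ** 2 for v, p in zip(preds, probs)]
        stable.append(mean(sq[:n_stable]))
        volatile.append(mean(sq[n_stable:]))
    return mean(stable), mean(volatile)


probs = true_probabilities()
results = {alpha: phase_errors(alpha, probs, n_stable=120) for alpha in (0.05, 0.3)}
for alpha, (mse_stable, mse_volatile) in results.items():
    print(f"alpha={alpha}: stable MSE={mse_stable:.3f}, volatile MSE={mse_volatile:.3f}")
```

Under these assumptions, the low learning rate should give the smaller error in the stable phase, because random omissions barely move its predictions. The high learning rate should give the smaller error in the volatile phase, because it catches up with each reversal within a few trials. This mirrors the blue and red traces described in panel A.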