Abstract

Photoreceptor ellipsoid zone (EZ) defects visible on optical coherence tomography (OCT) are important imaging biomarkers for the onset and progression of macular diseases. Accurate quantification of EZ defects is therefore essential for monitoring disease progression and treatment efficacy over time. We developed and trained a novel deep learning-based method called Deep OCT Atrophy Detection (DOCTAD) to automatically segment EZ defect areas by classifying 3-dimensional A-scan clusters as normal or defective. Furthermore, we introduce a longitudinal transfer learning paradigm in which the algorithm learns from segmentation errors on images obtained at one time point to segment subsequent images with higher accuracy. We evaluated the performance of this method on 134 eyes of 67 subjects enrolled in a clinical trial of a novel macular telangiectasia type 2 (MacTel2) therapeutic agent. Our method compared favorably to other deep learning-based and non-deep learning-based methods in matching expert manual segmentations. To the best of our knowledge, this is the first automatic segmentation method developed for EZ defects on OCT images of MacTel2.

OCIS codes: (110.4500) Optical coherence tomography; (100.0100) Image processing; (150.0150) Machine vision; (100.4996) Pattern recognition, neural networks; (100.6890) Three-dimensional image processing

1. Introduction

Macular telangiectasia type 2 (MacTel2) is a progressive retinal disease of unknown cause that affects the juxtafoveolar region of both eyes with varying severity. Clinical signs of MacTel2 include loss of retinal transparency, crystalline deposits, telangiectatic vessels, and pigment plaques, and the disease results in a slow decline in visual acuity [1–5]. The early signs are often subtle and difficult to identify with ophthalmoscopy [3].

With optical coherence tomography (OCT), it is possible to obtain high-resolution retinal images [6] on which retinal layer boundaries can be delineated with micron (and even submicron [7]) accuracy. OCT has become a valuable tool for diagnosing MacTel2 [2]. Signs of MacTel2 visible on OCT include hypo-reflective spaces in the inner and outer retina, thinning and defects of the retina temporal to the foveal center, and atrophy of the hyper-reflective layer or band located external to the external limiting membrane (ELM) and internal to a band thought to represent the cone photoreceptor tips [2–5, 8, 9]. There is an ongoing debate regarding the exact cellular structure that corresponds to this hyper-reflective band and the nomenclature used to describe it. Recent publications [10–16], including one from an International Nomenclature consensus group [17], refer to this band as the ellipsoid zone (EZ), as it is thought to represent the ellipsoid region of the photoreceptor inner segments, whose densely packed mitochondria are likely hyper-reflective on OCT [18]. However, other studies, including a recent one that used adaptive optics to characterize this structure [9], refer to it as the junction between the inner and outer segments (IS/OS) of the photoreceptors [2–4, 8, 19–22]. In this paper, without making a judgment about the true nature of this band, we use the EZ terminology, as it is more common in the recent MacTel2 clinical trial literature [13, 23–25].

The 3-dimensional (3-D) information contained within OCT images can be projected to create a 2-D en face summed voxel projection (SVP) image. The SVP is useful for assessing topographic locations and for quantifying retinal lesion areas, including those caused by EZ atrophy or defects [2–4, 23].
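As an illustration of the projection described above, the sketch below collapses a volume along the axial axis. It assumes the volume is stored as a NumPy array ordered (B-scan, depth, A-scan); the function name and the optional `depth_range` slab restriction are hypothetical conveniences, not part of the cited methods.

```python
import numpy as np

def summed_voxel_projection(volume, depth_range=None):
    """Sum voxel intensities along depth to form a 2-D en face image.

    volume: array of shape (n_bscans, depth, n_ascans) (assumed layout).
    depth_range: optional (top, bottom) row slab to restrict the sum (hypothetical option).
    Returns an array of shape (n_bscans, n_ascans).
    """
    if depth_range is not None:
        top, bottom = depth_range
        volume = volume[:, top:bottom, :]
    return volume.sum(axis=1)
```

Each pixel of the resulting en face image corresponds to one A-scan, which is why lesion areas can be measured directly on it.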

Correlation between EZ defects and loss of retinal function has been established in previous MacTel2 studies [3, 4, 19, 20, 23, 26], in which segmentation of EZ defects has been achieved manually [3, 4] or semi-automatically [21, 23]. Of note, the semi-automatic method by Mukherjee et al. [23] used a popular graph search algorithm [27, 28] to automatically segment retinal layer boundaries on individual OCT B-scans, which were then assessed and manually corrected by expert graders. An en face thickness map was generated from these segmentations and thresholded to determine EZ defect areas. Subsequently, Gattani et al. [21] developed an iterative semi-automatic method to segment EZ defect boundaries based on manual initialization of seed locations by the user on the en face image.

EZ defects are also observed in other retinal diseases such as age-related macular degeneration (AMD) [22], macular edema (ME) [10], and diabetic retinopathy (DR) [11]. Semi-automatic EZ defect analysis has also been performed in these diseases [10, 12, 29].

Automatic segmentation and quantification of EZ defects would be a very useful tool for analyzing EZ defects in clinical trials, especially in longitudinal studies in which patients are observed over time to monitor disease progression or treatment efficacy. Most recently, Wang et al. [11] developed an automatic method to detect EZ defects in OCT images of DR using graph search and fuzzy c-means. In general, these automatic and semi-automatic methods involve a two-step process: first, the retinal layer boundaries of diseased eyes are segmented (e.g., using graph search [30–32], random forest classifiers [33], or active contours [34]); then, EZ thicknesses or pixel intensities are projected onto an en face image on which EZ defects can be identified.

Deep learning is a powerful approach that has been used, especially in the past few years, in computer vision for object recognition, classification, and semantic segmentation [35–40]. Deep learning methods have been successfully used in many areas of medical imaging; for example, to detect and classify lesions, to segment organs and sub-structures, and to register and enhance medical images [41]. Convolutional neural networks (CNNs) are particularly suitable for image analysis. A CNN generally consists of several layers of filters, learned from labeled training data, that extract multi-scale features from an input and then map the extracted features to the associated label. Deep learning models have also been applied to a variety of ophthalmic image processing applications [42–47], including OCT layer segmentation. Specifically, Fang et al. [48] were the first to use a CNN to segment inner retinal layer boundaries on OCT images of diseased eyes. Roy et al. [49], Xu et al. [50], and Venhuizen et al. [51] adopted variants of the fully convolutional network (FCN) [37] and U-net [52] CNN models to delineate the boundaries of fluid masses and pigment epithelium detachment. Many other CNN variants have recently been employed to segment a variety of anatomic and pathologic features on OCT images [53–59].

Quantification of targeted biomarkers at different time points (e.g., the growth of EZ defect areas over time) is a key method for evaluating treatment efficacy in clinical trials, as well as in clinical care. As such, patients enrolled in a clinical trial are frequently imaged with OCT over multiple visits. The accuracy of classic automatic image segmentation techniques (e.g., graph search [27]) is similar across all these visits, and at each visit, segmentation errors must be manually corrected [60–62]. Accordingly, with these classic techniques, the human workload required to correct these errors remains relatively constant from visit to visit. However, despite disease progression and treatment effects, it is reasonable to assume that OCT images of the same eye of the same patient acquired at different visits share strong similarities in their anatomical and pathological structures. Deep learning frameworks are well suited to analyzing temporal data, such as electronic health records [63–65]. For medical images, we will show how the algorithm can learn from its errors on previous images of a specific subject, thereby reducing the need for manual correction at each visit.

In this paper, we describe a novel deep learning-based method that uses a CNN to automatically segment 2-D en face EZ defect areas from 3-D OCT volumes of eyes with MacTel2, without the need to segment retinal layer boundaries as an intermediate step. We further developed a transfer learning paradigm that learns from mistakes in segmenting the baseline images of a particular subject and fine-tunes our CNN to segment that subject’s subsequent OCT images with higher accuracy. We show the efficacy of our deep learning-based method with longitudinal transfer learning, which we call Deep OCT Atrophy Detection (DOCTAD), in segmenting images obtained from a clinical trial of a novel therapeutic agent to inhibit the progression of EZ defects in eyes with MacTel2.

2. Methods

We developed and trained DOCTAD to classify the EZ on individual OCT A-scans as normal or defective (atrophied) and automatically estimate the EZ defect areas in OCT volumes. In addition, a transfer learning procedure was utilized to demonstrate the benefits of learning from a subject’s past scan information to improve the segmentation at future time points. The performance of DOCTAD was evaluated using the Dice similarity coefficient and errors in the predicted EZ defect areas.

2.1 Data set

The study data set consisted of retinal spectral domain (SD)-OCT volumes of 134 eyes from 67 subjects from the international, multicenter, randomized phase 2 trial of ciliary neurotrophic factor for MacTel2 (NCT01949324; NTMT02; Neurotech, Cumberland, RI, USA). This study complied with the Health Insurance Portability and Accountability Act (HIPAA) and Clinical Trials (United States and Australia) guidelines, adhered to the tenets of the Declaration of Helsinki and was approved by the institutional ethics committees at each participating center.

We analyzed data from two time points, six months apart, at which subjects were imaged on Spectralis SD-OCT units (Heidelberg Engineering GmbH, Heidelberg, Germany) at different imaging centers. We refer to the SD-OCT volumes obtained at the first time point as the baseline volumes and those obtained at the second time point as the 6-month volumes. The data set consisted of a total of 25,876 B-scans. Most SD-OCT volumes consisted of 97 B-scans with 1024 A-scans each, covering a 20° × 20° (approximately 6 mm × 6 mm) retinal area. The exceptions were two 6-month volumes with 37 B-scans, and twelve baseline and four 6-month volumes with 512 A-scans per B-scan. All B-scans had a height of 496 pixels with an axial pixel pitch of 3.87 µm/pixel. We removed no subject or eye from the data set regardless of image quality or defect size, and we even included eyes that were eventually excluded from the clinical trial, to be as faithful as possible to a real-world clinical trial scenario in which the segmentation outcome determines eligibility for trial enrollment.
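As a quick consistency check using only the counts stated above, the B-scan total follows from 268 volumes (134 eyes at two time points), of which two have 37 B-scans and the rest 97, and the axial extent follows from the B-scan height and pixel pitch. The variable names are illustrative.

```python
# Counts taken from the data set description above.
n_volumes = 134 * 2                      # one baseline and one 6-month volume per eye
n_bscans = (n_volumes - 2) * 97 + 2 * 37  # two 6-month volumes had only 37 B-scans

# Axial extent of a full B-scan: 496 pixels at 3.87 um/pixel.
depth_mm = 496 * 3.87 / 1000.0

print(n_bscans)            # 25876
print(round(depth_mm, 2))  # 1.92
```

The total matches the 25,876 B-scans reported, and each B-scan spans roughly 1.92 mm in depth.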

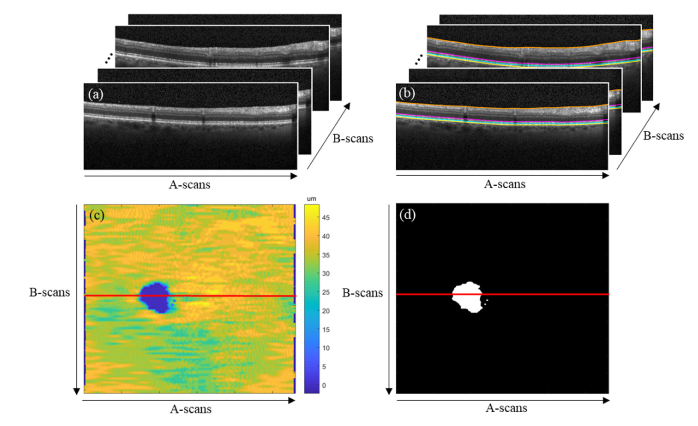

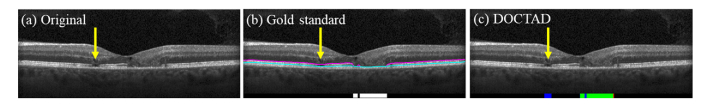

The process used to obtain the gold standard EZ defect segmentation is described in our previous publication [23]. In brief, for each B-scan, the inner limiting membrane (ILM), inner EZ, inner retinal pigment epithelium (RPE), and Bruch’s membrane (BrM) layer boundaries were first segmented by graph search [27, 28] using the Duke OCT Retinal Analysis Program (DOCTRAP; Duke University, Durham, NC, USA) software. The automatic segmentation was reviewed and manually corrected by an expert Reader at the Duke Reading Center. A second, more senior Reader reviewed the layers delineated by the first Reader and corrected them as needed. An EZ thickness map was generated by axially projecting the EZ thicknesses, defined by the inner EZ and inner RPE layer boundaries, onto a 2-D en face image. This image was then resampled using bicubic interpolation to a pixel pitch of 10 µm in each direction. EZ thicknesses of less than 12 µm were classified as EZ defects [23], and the EZ thickness map was thresholded accordingly to obtain a binary map of EZ defects. The resulting binary map of EZ defects was used as the gold standard in this study. Figure 1 illustrates this process, and Fig. 2 shows a representative B-scan with EZ defects.
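The thickness-and-threshold step can be sketched as follows, assuming the inner EZ and inner RPE boundaries are given as row positions (in pixels, increasing with depth) for every A-scan. The function names are hypothetical, and the bicubic resampling to 10 µm is omitted for brevity. Note that the 12 µm threshold corresponds to only about 3.1 axial pixels (12 / 3.87).

```python
import numpy as np

AXIAL_UM_PER_PIXEL = 3.87      # axial pixel pitch of the Spectralis B-scans
EZ_DEFECT_THRESHOLD_UM = 12.0  # EZ thinner than this is labeled defective

def ez_thickness_map(inner_ez, inner_rpe, axial_um=AXIAL_UM_PER_PIXEL):
    """En face EZ thickness in micrometers.

    inner_ez, inner_rpe: arrays of shape (n_bscans, n_ascans) holding boundary
    row indices; rows grow with depth, so the RPE boundary has larger values.
    """
    ez = np.asarray(inner_ez, dtype=float)
    rpe = np.asarray(inner_rpe, dtype=float)
    return (rpe - ez) * axial_um

def ez_defect_map(thickness_um, threshold_um=EZ_DEFECT_THRESHOLD_UM):
    """Binary en face map: True where the EZ is thinner than the threshold."""
    return np.asarray(thickness_um) < threshold_um
```

For example, a 3-pixel EZ (11.61 µm) is labeled defective, whereas a 5-pixel EZ (19.35 µm) is not.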

Fig. 1.

(a) Retinal OCT volume. (b) Gold standard (manual) segmentation of the ILM (orange), inner EZ (magenta), inner RPE (cyan), and BrM (yellow) layer boundaries by expert Readers. The EZ thickness is defined by the inner EZ and inner RPE layer boundaries. (c) En face EZ thickness map. (d) Gold standard (manual) binary map of EZ defects. EZ thicknesses of less than 12µm were classified as EZ defects.

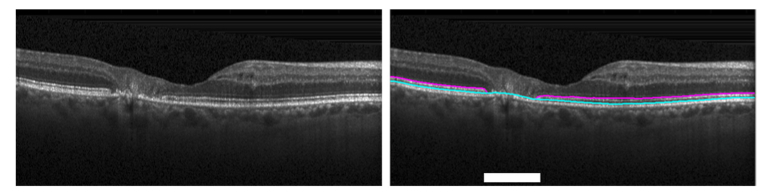

Fig. 2.

B-scan from the position marked by the red line on Fig. 1(c-d) showing the gold standard (manual) segmentation of the inner EZ (magenta) and inner RPE (cyan) layer boundaries by expert Readers and the EZ defects identified (white).

2.2 Cluster extraction

Since EZ defects are usually spatially contiguous within a local region, it is natural to assume that information from adjacent A-scans and B-scans is useful for determining the presence or absence of EZ defects. Thus, DOCTAD was trained on a set of normal and defective A-scan clusters, which were sampled from the OCT volumes as follows.

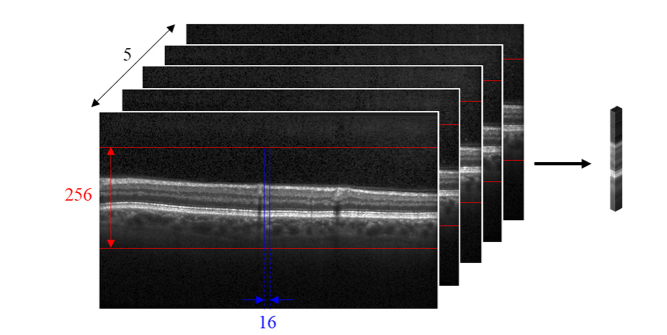

As a pre-processing step, we first used a simple method to quickly estimate the location of the retina in the OCT volume and to remove as much of the background as possible while retaining a full view of the retina. For each volume, the 20th B-scan was smoothed with a Gaussian filter (11 × 11 pixels, σ = 11 pixels) and thresholded at 0.4 of the maximum intensity of the smoothed image to obtain estimates of the retinal nerve fiber layer (RNFL), the innermost retinal layer, and the RPE layer, which lies just external to the outer retinal boundary. These layers often appear as the brightest layers in the image. For each A-scan, the mean position of the RNFL and RPE was calculated, and the median of these values across all A-scans was taken as the estimated center of the retina for the volume. All images in the volume were then cropped to a height of 256 pixels about the estimated center.
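A minimal NumPy sketch of this centering and cropping step follows. The text does not say exactly how the RNFL and RPE positions are read off the thresholded image; here we assume they are the first and last suprathreshold rows in each column, so the per-A-scan mean is their midpoint. The separable zero-padded Gaussian and all function names are our own choices for illustration.

```python
import numpy as np

def gaussian_kernel_1d(size=11, sigma=11.0):
    x = np.arange(size) - (size - 1) / 2.0
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()

def smooth(img, size=11, sigma=11.0):
    """Separable 11 x 11 Gaussian smoothing with zero padding ('same' output size)."""
    k = gaussian_kernel_1d(size, sigma)
    tmp = np.apply_along_axis(lambda c: np.convolve(c, k, mode="same"), 0, img)
    return np.apply_along_axis(lambda r: np.convolve(r, k, mode="same"), 1, tmp)

def estimate_center(bscan, thresh_frac=0.4):
    """Median over A-scans of the midpoint between the assumed RNFL and RPE rows."""
    sm = smooth(np.asarray(bscan, dtype=float))
    mask = sm >= thresh_frac * sm.max()
    centers = []
    for col in mask.T:                  # iterate over A-scans (columns)
        rows = np.flatnonzero(col)
        if rows.size:                   # assumption: first row ~ RNFL, last row ~ RPE
            centers.append(0.5 * (rows[0] + rows[-1]))
    return float(np.median(centers))

def crop_volume(volume, center, height=256):
    """Crop a (n_bscans, depth, n_ascans) volume to `height` rows about `center`."""
    depth = volume.shape[1]
    top = int(round(center - height / 2))
    top = min(max(top, 0), depth - height)  # keep the window inside the image
    return volume[:, top:top + height, :]
```

In use, `estimate_center` would be applied to the 20th B-scan (index 19) and the resulting center passed to `crop_volume` for the whole volume.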

For every A-scan, a cluster of A-scans (256 × 16 × 5 pixels) centered on that A-scan was extracted and labeled according to the gold standard manual segmentation. Any portion of a cluster that fell outside the lateral field of view was mirrored about the center A-scan. Figure 3 illustrates the dimensions of such a cluster. Although we used data from a carefully designed clinical trial, some of the volumes had different scan densities. Accordingly, to make our algorithm robust to image acquisition inconsistencies that resulted in volumes with varying scan densities, we used the same cluster dimensions for all volumes, training the CNN to be invariant to scan density. Additionally, CNNs for classification are often trained most efficiently with approximately equal numbers of samples per class. Because the EZ defect areas in the volumes were very small compared to the normal EZ areas, for each volume, normal clusters were randomly sampled with a probability equal to the ratio of the EZ defect area to the normal area.
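The sketch below illustrates one reading of the cluster extraction and class-balanced sampling described above, for a volume stored as (B-scan, depth, A-scan). Several details are assumptions: the 16 A-scans use offsets −8 to +7 (the text does not say how an even-width cluster is centered), the same mirroring about the center is applied along the B-scan direction, and all names are hypothetical.

```python
import numpy as np

B_OFFSETS = range(-2, 3)  # 5 adjacent B-scans
A_OFFSETS = range(-8, 8)  # 16 adjacent A-scans (assumed centering for an even width)

def mirrored_indices(center, offsets, size):
    """Cluster member indices; members outside [0, size) are mirrored about the center."""
    idx = []
    for off in offsets:
        i = center + off
        if i < 0 or i >= size:
            i = center - off  # reflect about the center A-scan (or B-scan)
        idx.append(i)
    return idx

def extract_cluster(volume, b, a):
    """Return a (5, depth, 16) cluster centered on A-scan a of B-scan b."""
    n_b, depth, n_a = volume.shape
    b_idx = mirrored_indices(b, B_OFFSETS, n_b)
    a_idx = mirrored_indices(a, A_OFFSETS, n_a)
    return volume[np.ix_(b_idx, np.arange(depth), a_idx)]

def sample_indices(defect_map, rng):
    """Keep all defective A-scans; keep each normal one with p = defect area / normal area."""
    defect_map = np.asarray(defect_map, dtype=bool)
    n_def = int(defect_map.sum())
    n_norm = defect_map.size - n_def
    p_keep = n_def / n_norm if n_norm else 0.0
    keep = defect_map | (rng.random(defect_map.shape) < p_keep)
    return np.argwhere(keep)  # (n_samples, 2) array of (B-scan, A-scan) indices
```

With this sampling rule, the expected number of normal clusters equals the number of defective ones, giving roughly balanced classes per volume.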

Fig. 3.

Clusters of dimensions 256 × 16 × 5 pixels were extracted from the OCT volumes.

2.3 CNN architecture

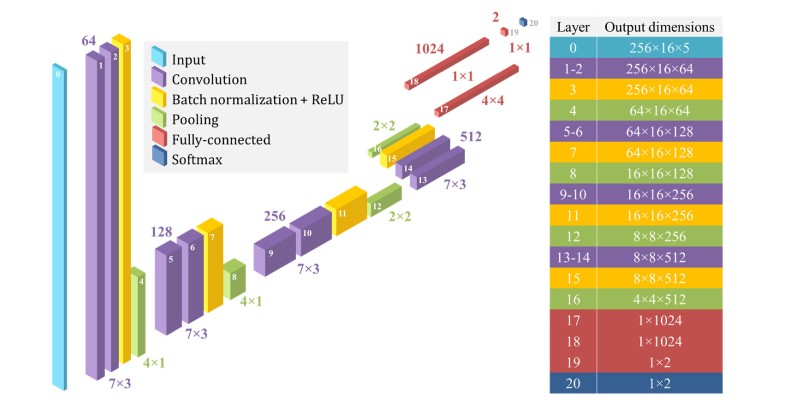

The CNN architecture used in DOCTAD is shown in Fig. 4. It consists of 20 layers in total, comprising convolutional, pooling, batch normalization, fully connected, and softmax layers. It was constructed using standard CNN design principles, with certain aspects modified to suit the structure of our data. In the convolutional layers, rectangular (7 × 3 pixels) rather than square (3 × 3 pixels) filters were used to extract features, as the retinal images have greater variation in the vertical direction. A batch normalization layer, which has been shown to improve training [66], was added after the convolutional layers, before the rectified linear unit (ReLU) operation was applied. In the first two pooling layers, we used 4 × 1 max-pooling instead of the conventional 2 × 2 max-pooling to efficiently downsample the input as it propagated through the network. At the end of the network, a softmax layer performs classification. In this case, our architecture performed binary classification (normal or defective), and the final output was a two-element vector.
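To show why 4 × 1 pooling suits the tall, narrow clusters, the NumPy sketch below applies non-overlapping max-pooling to a tensor shaped (height, width, channels). Treating the 5 B-scans of a cluster as input channels, and assuming the convolutions preserve spatial size, are our assumptions for illustration only; the actual layer sizes are those given in Fig. 4.

```python
import numpy as np

def max_pool(x, ph, pw):
    """Non-overlapping ph x pw max-pooling over the first two axes of (H, W, C)."""
    h, w, c = x.shape
    x = x[: h - h % ph, : w - w % pw]  # drop rows/columns that do not fill a window
    return x.reshape(h // ph, ph, w // pw, pw, c).max(axis=(1, 3))

# A 256 x 16 cluster with its 5 B-scans treated as channels (assumption).
cluster = np.random.default_rng(0).random((256, 16, 5))
after_first = max_pool(cluster, 4, 1)       # (64, 16, 5)
after_second = max_pool(after_first, 4, 1)  # (16, 16, 5)
print(after_first.shape, after_second.shape)
```

Two 4 × 1 pooling steps shrink only the axial dimension, turning the 256 × 16 input into a square 16 × 16 feature map; two 2 × 2 pooling steps would instead give 64 × 4 and discard lateral resolution.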

Fig. 4.

DOCTAD CNN architecture showing the number of features (top) and filter sizes (bottom) of the convolutional and fully-connected layers, pooling sizes of the pooling layers, and the output dimensions of each layer as indicated by the layer number.

2.4 Training the CNN

The CNN was trained on the clusters and labels extracted from the baseline volumes of subjects in the training set. The parameters of the CNN were randomly initialized using Xavier initialization [67] and optimized using Adam optimization [68] to minimize the binary cross-entropy loss, defined as

$$L = -\frac{1}{N}\sum_{i=1}^{N}\left[y_i \log p_i + (1 - y_i)\log(1 - p_i)\right] \tag{1}$$

where $y_i$ is the gold standard class label (0 for normal, 1 for defective), $p_i$ is the predicted probability of cluster $i$ being defective, and $N$ is the number of clusters per mini-batch (the mini-batch size). The value of $p_i$ was taken from the final output of the softmax layer. A mini-batch size of 250 and a learning rate of 0.0001 were used during training, without any weight regularization. The network was trained until the best performance was achieved on a hold-out validation set, for a maximum of 10 epochs; in our experiments, this usually took between 3 and 10 epochs. Performance metrics are detailed in Section 2.7.
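Equation (1) can be evaluated directly, as in the plain-Python sketch below. The clipping constant `eps` is our addition to avoid taking log(0), and `softmax` illustrates how $p_i$ is read as the "defective" element of the two-element output; the function names are illustrative.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [v / total for v in exps]

def binary_cross_entropy(labels, probs, eps=1e-7):
    """Mean binary cross-entropy of Eq. (1) over a mini-batch.

    labels: gold standard y_i (0 = normal, 1 = defective)
    probs:  predicted p_i that each cluster is defective
    eps:    clipping constant (assumption) to keep log() finite
    """
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(labels)

p_defective = softmax([0.2, 1.5])[1]  # second element = probability of "defective"
print(binary_cross_entropy([1, 0], [0.5, 0.5]))
```

A maximally uncertain prediction (p = 0.5) costs ln 2 ≈ 0.693 per cluster regardless of the label.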

2.5 Prediction

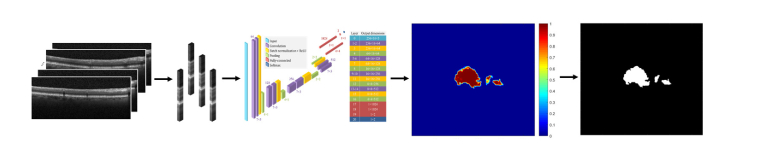

Once trained, DOCTAD was used to predict a binary map of EZ defects from a given OCT volume of an eye. During prediction, clusters centered on every A-scan were extracted and passed as inputs to the trained CNN to obtain the probability of each cluster being defective. An en face probability map was generated and interpolated to a pixel pitch of 10 µm in each direction. Locations with a probability greater than 0.5 were considered defective, and the probability map was thresholded accordingly to obtain the final predicted binary map of EZ defects. Figure 5 illustrates this process.
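The post-processing of the en face probability map can be sketched as below. The interpolation method is not specified in the text, so bilinear interpolation (via `np.interp` along each axis) is an assumption, as is a nominal 6 mm field of view, which gives a 600 × 600 map at 10 µm pitch. Function names are hypothetical.

```python
import numpy as np

def resize_bilinear(img, out_h, out_w):
    """Separable bilinear resampling of a 2-D array to (out_h, out_w)."""
    h, w = img.shape
    ys = np.linspace(0, h - 1, out_h)
    xs = np.linspace(0, w - 1, out_w)
    # Interpolate along each row (A-scan direction), then along each column (B-scan direction).
    tmp = np.array([np.interp(xs, np.arange(w), row) for row in img])       # (h, out_w)
    return np.array([np.interp(ys, np.arange(h), col) for col in tmp.T]).T  # (out_h, out_w)

def predict_defect_map(prob, field_mm=6.0, pitch_um=10.0, threshold=0.5):
    """prob: (n_bscans, n_ascans) CNN probabilities -> boolean en face defect map."""
    n = int(round(field_mm * 1000.0 / pitch_um))  # 600 pixels for 6 mm at 10 um
    prob_resampled = resize_bilinear(np.asarray(prob, dtype=float), n, n)
    return prob_resampled > threshold
```

Resampling both the 97-B-scan and 37-B-scan volumes onto the same 10 µm grid is what allows areas to be compared across volumes with different scan densities.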

Fig. 5.

During prediction, clusters of every A-scan were extracted from the given OCT volume and passed as inputs to the trained CNN to generate an en face probability map which was thresholded to obtain the predicted binary map of EZ defects.

2.6 Longitudinal transfer learning

As previously mentioned, deep learning frameworks are well-suited to take advantage of the correlation between the OCT images from the same eye of the same patient at different time points. Thus, we expect that fine-tuning a trained CNN on a specific subject’s scan information from a previous time point would improve its performance when making a prediction on the same subject’s scans at a future time point.

In this subsection, we describe a variant of the general transfer learning approach [69], which we call longitudinal transfer learning. In longitudinal transfer learning, we fine-tune the proposed CNN model on the semi-automatically corrected segmentations acquired at a previous time point and use the fine-tuned model to automatically segment the EZ defects in the same eye at a later time point. Specifically, we first trained the CNN with the baseline volumes as described in Section 2.4. Then, for each eye in our data set, we fine-tuned the trained CNN with clusters extracted from that eye’s baseline volume and evaluated its performance on the 6-month volume of the same eye. For fine-tuning, we used a smaller mini-batch size of 100, lowered the learning rate to 0.00001, and trained until the best performance was achieved on the baseline volume, for a maximum of 10 epochs. For some subjects, fine-tuning may not improve the performance on the baseline volume. In these cases, the CNN was not updated, and the performance before and after fine-tuning was unchanged.
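The control flow of this procedure, including the rule that the CNN is left unchanged when fine-tuning never beats the starting model on the baseline volume, can be sketched framework-independently. Here `train_one_epoch` and `evaluate_dsc` are hypothetical callables standing in for the actual TensorFlow training and DSC evaluation, and the model is any deep-copyable object.

```python
import copy

def longitudinal_fine_tune(model, baseline_data, train_one_epoch, evaluate_dsc,
                           max_epochs=10, batch_size=100, learning_rate=1e-5):
    """Fine-tune a copy of `model` on one eye's baseline volume.

    Returns the best model seen (by DSC on the baseline volume) and its DSC.
    If no epoch improves on the starting model, the original weights are kept.
    """
    best_model = copy.deepcopy(model)
    best_dsc = evaluate_dsc(best_model, baseline_data)
    current = copy.deepcopy(model)  # the caller's model is never modified
    for _ in range(max_epochs):
        train_one_epoch(current, baseline_data,
                        batch_size=batch_size, learning_rate=learning_rate)
        dsc = evaluate_dsc(current, baseline_data)
        if dsc > best_dsc:
            best_dsc, best_model = dsc, copy.deepcopy(current)
    return best_model, best_dsc
```

The returned model would then be applied to the same eye’s 6-month volume, as in Section 2.5.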

2.7 Performance metrics

Two metrics were used to evaluate the performance of DOCTAD – the Dice similarity coefficient (DSC) [70] and errors in the predicted EZ defect areas.

The DSC was calculated between the gold standard and predicted binary map of EZ defects as

$$\mathrm{DSC} = \frac{2\,TP}{2\,TP + FP + FN} \tag{2}$$

where TP was the number of true positives, FP was the number of false positives, and FN was the number of false negatives (in pixels) in the predicted binary map of EZ defects. False positives, or “over-prediction,” indicated a scenario in which DOCTAD predicted EZ defects where the gold standard identified the area as normal. False negatives, or “under-prediction,” indicated a scenario in which DOCTAD failed to predict EZ defects where the gold standard identified the area as defective. The DSC ranged from 0 to 1, where a value of 1 indicated complete agreement between the gold standard and predicted binary maps of EZ defects. This metric was also used to monitor the performance on the hold-out validation set during training.

The DSC is a relative measure, and for volumes with small EZ defect areas, it is drastically affected by small errors. In the extreme case, for example, a volume with no EZ defects will have a DSC of 0 if even one pixel is predicted as defective. Thus, we also calculated the total ($E_t$) and net ($E_n$) errors of the predicted EZ defect areas as

$$E_t = k\,(FP + FN) \tag{3}$$

$$E_n = k\,\lvert FP - FN \rvert \tag{4}$$

where k = 0.0001 mm²/pixel is the conversion factor from pixels to mm² (each 10 µm × 10 µm pixel covers 0.0001 mm²) and |·| denotes the absolute value. Because these errors are absolute areas rather than relative measures, they are more robust than the DSC, especially for volumes with small EZ defect areas.
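The three metrics can be computed from a pair of boolean en face maps on the 10 µm grid as sketched below. Returning a DSC of 1.0 when both maps are empty (0/0) is our convention and is not specified in the text; function names are illustrative. Since the predicted area is TP + FP and the gold standard area is TP + FN, $E_n$ equals the absolute difference between the predicted and gold standard areas.

```python
import numpy as np

K_MM2_PER_PIXEL = 1e-4  # one 10 um x 10 um pixel = 0.0001 mm^2

def confusion_counts(gold, pred):
    """TP, FP, FN pixel counts between two boolean en face maps."""
    gold = np.asarray(gold, dtype=bool)
    pred = np.asarray(pred, dtype=bool)
    tp = int(np.sum(gold & pred))
    fp = int(np.sum(~gold & pred))
    fn = int(np.sum(gold & ~pred))
    return tp, fp, fn

def dice(tp, fp, fn):
    """Eq. (2); 1.0 when both maps are empty (our convention for 0/0)."""
    denom = 2 * tp + fp + fn
    return 1.0 if denom == 0 else 2 * tp / denom

def total_error(fp, fn, k=K_MM2_PER_PIXEL):
    """Eq. (3): total mis-segmented area in mm^2."""
    return k * (fp + fn)

def net_error(fp, fn, k=K_MM2_PER_PIXEL):
    """Eq. (4): |predicted area - gold standard area| in mm^2."""
    return k * abs(fp - fn)
```

For example, shifting a 40-pixel defect by one row out of four yields TP = 30, FP = 10, FN = 10, so DSC = 0.75 and $E_t$ = 0.002 mm², while $E_n$ = 0 because the predicted and gold standard areas are equal.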

2.8 Implementation

DOCTAD was implemented in Python using the TensorFlow [71] (Version 1.2.1) library. On a desktop computer equipped with an Intel Core i7-6850K CPU and four NVIDIA GeForce GTX 1080Ti GPUs, the average prediction time was approximately 12 seconds per SD-OCT volume. For longitudinal transfer learning, the average deployment time to fine-tune the CNN was approximately 5 minutes per SD-OCT volume.

3. Results

We report the average performance metrics of DOCTAD and alternative methods on all volumes, as well as on the subset of clinically significant (CS) volumes. CS volumes were defined as volumes with a gold standard EZ defect area greater than 0.16 mm², consistent with the lower limit of EZ defect area required for enrollment in the MacTel2 clinical trial [23].

3.1 Comparison to alternative methods on baseline volumes

We compared DOCTAD to the alternative approach in which the layer boundaries are first segmented and the EZ thicknesses are then projected onto an en face image on which EZ defects can be identified. To segment the layer boundaries, we used two popular retinal layer boundary segmentation algorithms: DOCTRAP [27, 28], a graph search-based algorithm, and CNN-GS [48], a deep learning-based algorithm. We compared the performance of DOCTAD to DOCTRAP and CNN-GS on the baseline volumes in our data set.

DOCTRAP automatically segments 9 layer boundaries. The inner EZ and inner RPE correspond to boundaries 7 and 8, respectively. To account for any biases due to different conventions in marking the boundaries, we calculated the pixel shift that minimized the absolute difference between the DOCTRAP and gold standard boundary segmentations across all baseline volumes, and found that no pixel shift was necessary.
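The bias check can be sketched as a search over integer axial shifts that minimizes the mean absolute difference between automatic and gold standard boundary positions. The search range of ±5 pixels and the use of `np.nanmean` (to skip A-scans with missing boundary values) are assumptions for illustration.

```python
import numpy as np

def best_pixel_shift(auto, gold, max_shift=5):
    """Integer axial shift s minimizing mean |(auto + s) - gold| over all A-scans.

    auto, gold: arrays of boundary row positions (NaN where undefined).
    max_shift: half-width of the searched shift range (assumed value).
    """
    auto = np.asarray(auto, dtype=float)
    gold = np.asarray(gold, dtype=float)
    shifts = list(range(-max_shift, max_shift + 1))
    errors = [np.nanmean(np.abs(auto + s - gold)) for s in shifts]
    return shifts[int(np.argmin(errors))]
```

A result of 0, as reported here for DOCTRAP, means the two marking conventions already agree on average.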

To train both CNN-GS and DOCTAD, we used 6-fold cross validation to ensure independence of the training and testing sets. The 67 subjects were divided into six folds (groups), each consisting of 11 or 12 subjects. The baseline volumes of the subjects in five folds were used as the training set, while the baseline volumes of the remaining fold were used as the testing set. Within the training set, the volumes of the subjects in one fold were set aside as the hold-out validation set. In the original work, CNN-GS was trained to segment 9 layer boundaries. However, because our data set included only 4 manually segmented layer boundaries, as shown in Fig. 1(b), we modified the CNN-GS architecture to predict only these 4 layer boundaries and trained CNN-GS using the methodology and parameters described in the original work [48]. We trained DOCTAD as described in Section 2.4.
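A subject-level split keeps both eyes of a subject in the same fold, so no eye in the testing set shares a subject with the training set. The sketch below is one way to do this; the shuffling seed and the choice of the next fold as the validation fold are assumptions, not details from the study.

```python
import random

def subject_folds(subject_ids, n_folds=6, seed=0):
    """Shuffle subjects and deal them round-robin into n_folds folds."""
    ids = sorted(subject_ids)
    random.Random(seed).shuffle(ids)
    return [ids[i::n_folds] for i in range(n_folds)]

def split(folds, test_fold):
    """Return (train, validation, test) subject lists for one cross-validation round.

    The fold after the test fold is used for validation (assumed convention).
    """
    val_fold = (test_fold + 1) % len(folds)
    test = list(folds[test_fold])
    val = list(folds[val_fold])
    train = [s for i, f in enumerate(folds) if i not in (test_fold, val_fold) for s in f]
    return train, val, test
```

With 67 subjects, dealing round-robin into six folds yields one fold of 12 and five folds of 11, matching the fold sizes stated above.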

For DOCTRAP and CNN-GS, an EZ thickness map was generated for each volume by axially projecting the EZ thicknesses onto an en face image and interpolating to obtain a pixel pitch of 10µm in each direction as in the gold standard. EZ thicknesses of less than 12µm were classified as EZ defects and the EZ thickness map was thresholded to obtain a predicted binary map of EZ defects. For DOCTAD, the predicted binary maps of EZ defects were directly obtained as described in Section 2.5. Table 1 shows the average performance metrics of DOCTRAP, CNN-GS, and DOCTAD on the baseline volumes.

Table 1. Performance metrics (mean ± standard deviation, median) of DOCTRAP [27, 28], CNN-GS [48], and our new DOCTAD method on 134 baseline volumes using 6-fold cross validation.

| Volumes | Performance metric | Gold standard (Manual) | DOCTRAP | CNN-GS | DOCTAD |
|---|---|---|---|---|---|
| All | EZ defect areas (mm²) | 0.68 ± 0.69, 0.51 | 0.03 ± 0.04, 0.01 | 0.32 ± 0.40, 0.19 | 0.71 ± 0.66, 0.55 |
| | DSC | - | 0.06 ± 0.15, 0.02 | 0.50 ± 0.23, 0.53 | 0.79 ± 0.22, 0.87 |
| | $E_t$ (mm²) | - | 0.67 ± 0.68, 0.52 | 0.46 ± 0.51, 0.32 | 0.19 ± 0.21, 0.13 |
| | $E_n$ (mm²) | - | 0.66 ± 0.67, 0.49 | 0.40 ± 0.44, 0.28 | 0.11 ± 0.17, 0.06 |
| CS | EZ defect areas (mm²) | 0.84 ± 0.68, 0.67 | 0.03 ± 0.05, 0.01 | 0.39 ± 0.41, 0.25 | 0.87 ± 0.64, 0.71 |
| | DSC | - | 0.05 ± 0.06, 0.03 | 0.52 ± 0.18, 0.54 | 0.86 ± 0.12, 0.89 |
| | $E_t$ (mm²) | - | 0.83 ± 0.68, 0.63 | 0.56 ± 0.52, 0.38 | 0.22 ± 0.22, 0.15 |
| | $E_n$ (mm²) | - | 0.81 ± 0.67, 0.62 | 0.49 ± 0.44, 0.33 | 0.12 ± 0.18, 0.07 |

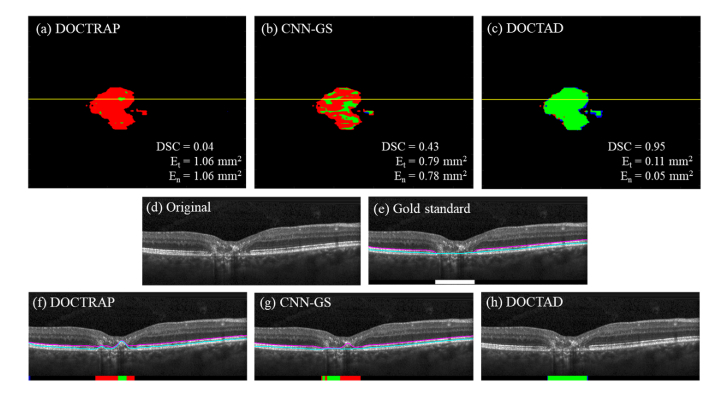

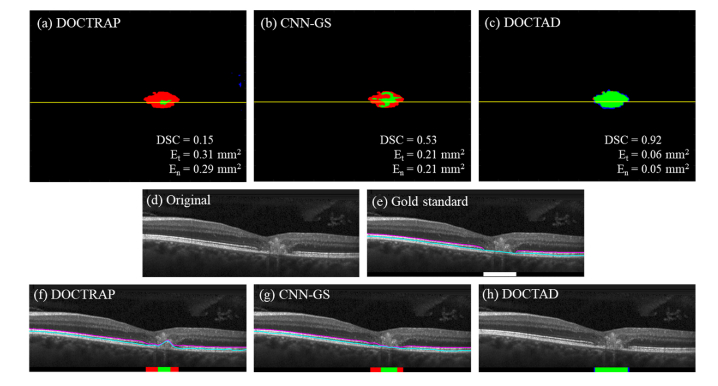

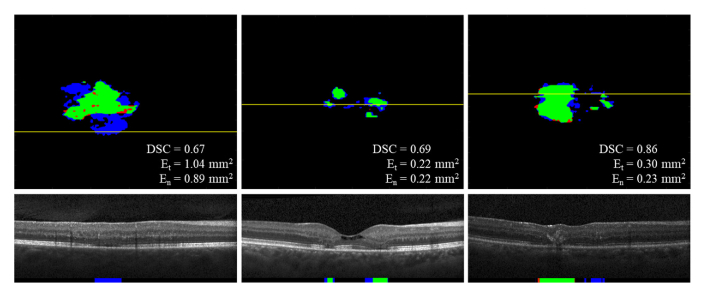

The overall performance of both DOCTRAP and CNN-GS was poor, with small DSC values and large errors. DOCTAD identified EZ defect areas with high accuracy, achieving a mean DSC of 0.86 on the 107 CS volumes. Figures 6 and 7 show examples of the boundary segmentations and predicted EZ defect areas by DOCTRAP, CNN-GS, and DOCTAD. The errors made by DOCTAD occurred mostly around the boundaries of the EZ defect areas, some of which are difficult to classify even for expert Readers, as further discussed in Section 3.3.

Fig. 6.

(a – c) Overlay of gold standard (manual) and predicted binary maps of EZ defects by DOCTRAP, CNN-GS, and our new DOCTAD method showing TP (green), FP (blue) and FN (red). (d) B-scan from the position marked by the yellow line on (a-c). (e) Gold standard (manual) boundary segmentations and EZ defect areas (white). (f – h) Boundary segmentations and predicted EZ defect areas by DOCTRAP, CNN-GS, and DOCTAD showing TP (green), FP (blue) and FN (red). DOCTRAP and CNN-GS correctly identified some EZ defects despite errors in the boundary segmentations.

Fig. 7.

(a – c) Overlay of gold standard (manual) and predicted binary maps of EZ defects by DOCTRAP, CNN-GS, and our new DOCTAD method showing TP (green), FP (blue) and FN (red). (d) B-scan from the position marked by the yellow line on (a-c). (e) Gold standard (manual) boundary segmentations and EZ defect areas (white). (f – h) Boundary segmentations and predicted EZ defect areas by DOCTRAP, CNN-GS, and DOCTAD showing TP (green), FP (blue) and FN (red). DOCTRAP correctly identified some EZ defects despite errors in the boundary segmentations whereas CNN-GS correctly identified more EZ defects with more accurate boundary segmentations.

3.2 Improvements with longitudinal transfer learning

Next, we studied the effect of fine-tuning DOCTAD for a specific subject’s eye as described in Section 2.6. For each subject’s eye, we fine-tuned the CNN that had been trained on baseline volumes from the cross-validation folds that did not include that subject. To demonstrate that any improvement in performance was due to the use of the subject’s baseline volume during fine-tuning rather than simply to extended training, we also fine-tuned the CNN on the initial training set using the same methodology and parameters as in the proposed longitudinal transfer learning procedure. Performance was evaluated on the 6-month volume of the corresponding eye both before and after fine-tuning. We used the Wilcoxon signed-rank test to determine the statistical significance of the observed differences. Table 2 shows the average performance metrics of DOCTAD on the 6-month volumes before and after fine-tuning.
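For readers who want to see how the paired comparison works, the pure-Python sketch below computes the Wilcoxon signed-rank statistic for per-volume metrics before and after fine-tuning. It is a simplified illustration: zero differences are dropped, tied magnitudes get average ranks, and the two-sided p-value uses the plain normal approximation without tie or continuity corrections. In practice, a library routine such as `scipy.stats.wilcoxon` would normally be used instead.

```python
import math

def wilcoxon_signed_rank(before, after):
    """Return (W+, two-sided p) for paired samples, using the normal approximation."""
    diffs = [a - b for b, a in zip(before, after) if a != b]  # drop zero differences
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:  # assign average ranks to runs of tied |differences|
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of 1-based ranks i+1 .. j+1
        for t in range(i, j + 1):
            ranks[order[t]] = avg_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return w_plus, p
```

A large $W^+$ means most of the rank mass lies on volumes whose metric increased after fine-tuning.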

Table 2. Performance metrics (mean ± standard deviation, median) of DOCTAD on 134 6-month volumes before and after fine-tuning both on the initial training set and the subject’s baseline volume using 6-fold cross validation. Statistically significant differences (p-value < 0.05) are shown in bold.

| Volumes | Performance metric | Gold standard (Manual) | Before fine-tuning | After fine-tuning on initial training set | After fine-tuning on baseline volume |
|---|---|---|---|---|---|
| All | EZ defect areas (mm²) | 0.71 ± 0.71, 0.54 | 0.74 ± 0.70, 0.56 | 0.76 ± 0.72, 0.60 | 0.78 ± 0.74, 0.61 |
| | DSC | - | 0.82 ± 0.16, 0.88 | 0.82 ± 0.17, 0.88 | 0.83 ± 0.17, 0.89 |
| | $E_t$ (mm²) | - | 0.18 ± 0.20, 0.12 | 0.18 ± 0.20, 0.12 | 0.17 ± 0.21, 0.11 |
| | $E_n$ (mm²) | - | 0.11 ± 0.17, 0.06 | 0.12 ± 0.17, 0.06 | 0.11 ± 0.20, 0.06 |
| CS | EZ defect areas (mm²) | 0.87 ± 0.71, 0.70 | 0.89 ± 0.69, 0.73 | 0.92 ± 0.71, 0.76 | 0.93 ± 0.73, 0.76 |
| | DSC | - | 0.85 ± 0.12, 0.89 | 0.85 ± 0.12, 0.89 | 0.87 ± 0.10, 0.90 |
| | $E_t$ (mm²) | - | 0.21 ± 0.21, 0.14 | 0.21 ± 0.21, 0.14 | 0.20 ± 0.22, 0.13 |
| | $E_n$ (mm²) | - | 0.13 ± 0.18, 0.07 | 0.13 ± 0.18, 0.08 | 0.12 ± 0.21, 0.07 |

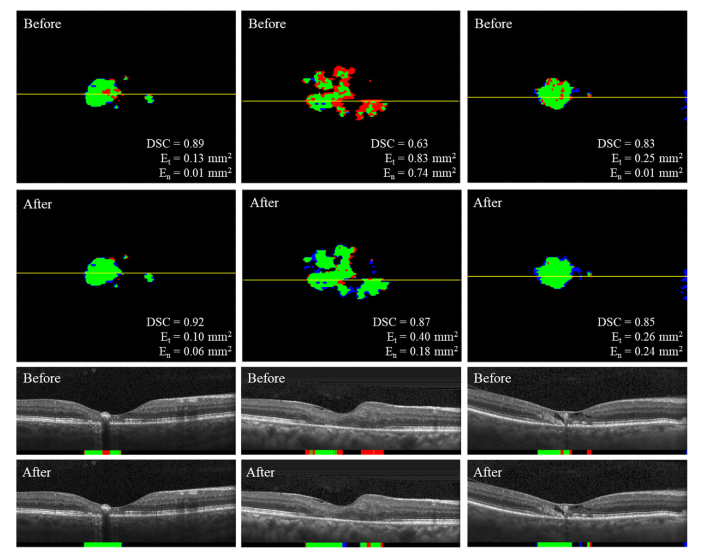

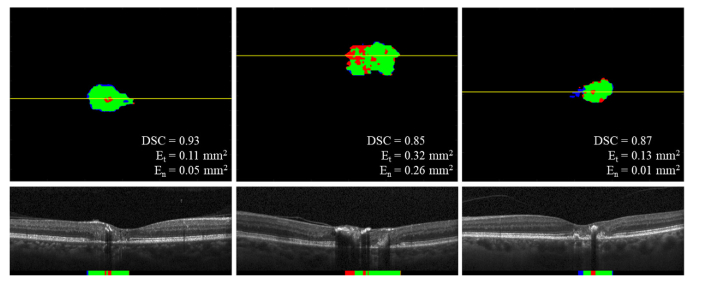

Overall, fine-tuning on the subject’s baseline volume improved performance in predicting EZ defect areas. There was a significant increase in DSC, particularly for the 109 CS volumes, and the errors in the predicted EZ defect areas decreased. Figure 8 shows examples of predicted EZ defect areas on the 6-month volumes before and after fine-tuning on the subject’s baseline volume. In contrast, fine-tuning on the initial training set did not yield a comparable overall improvement.

Fig. 8.

Predicted binary maps of EZ defects on the 6-month volumes by DOCTAD before and after fine-tuning on the subject’s baseline volume showing TP (green), FP (blue), FN (red) and B-scans corresponding to the position marked by the yellow lines. Fine-tuning improved the EZ defects segmentations.

A major challenge in developing transfer learning techniques is to produce positive transfer (improved performance) while avoiding negative transfer (reduced performance), two goals that are, in practice, difficult to achieve simultaneously [72]. In our case, negative transfer may occur when the CNN overfits to features in the baseline volume that do not generalize to the 6-month volume, such as noise patterns in the images. Therefore, although performance improved overall across all volumes, in some instances the performance on the 6-month volume did not improve following the longitudinal transfer learning procedure, either because of overfitting or because the CNN was not updated during fine-tuning, as described in Section 2.6 (unchanged performance). It is also possible for one performance metric to improve while another worsens. Table 3 shows the breakdown of the effect of the longitudinal transfer learning procedure on the performance of individual volumes.

Table 3. Performance breakdown on 134 6-month volumes after fine-tuning on the subject’s baseline volume.

| Volumes | Performance metric | Unchanged performance (% of volumes) | Improved performance (% of volumes) | Reduced performance (% of volumes) |
|---|---|---|---|---|
| All | DSC | 26 | 41 | 33 |
| All | Et (mm²) | 26 | 43 | 31 |
| All | En (mm²) | 26 | 38 | 36 |
| CS | DSC | 22 | 46 | 32 |
| CS | Et (mm²) | 22 | 44 | 34 |
| CS | En (mm²) | 22 | 41 | 37 |
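Table 3 can be read as a per-volume comparison of each metric before and after fine-tuning. The following is a minimal sketch of such a tally, assuming per-volume metric values are available as arrays. For DSC a higher value is better, whereas for the area errors a lower value is better. Here "unchanged" is approximated by a hypothetical tolerance `tol` on the change; in the study, unchanged performance arose when the CNN was not updated during fine-tuning.

```python
import numpy as np

def transfer_breakdown(before, after, higher_is_better=True, tol=0.0):
    """Percentage of volumes whose metric is unchanged, improved, or reduced.

    before, after: per-volume metric values without and with fine-tuning.
    higher_is_better: True for DSC; False for area errors (lower is better).
    tol: hypothetical tolerance below which a change counts as unchanged.
    """
    delta = np.asarray(after, dtype=float) - np.asarray(before, dtype=float)
    if not higher_is_better:
        delta = -delta  # flip sign so a positive delta always means improvement
    n = delta.size
    return {
        "unchanged": 100.0 * np.count_nonzero(np.abs(delta) <= tol) / n,
        "improved": 100.0 * np.count_nonzero(delta > tol) / n,
        "reduced": 100.0 * np.count_nonzero(delta < -tol) / n,
    }

# Hypothetical per-volume DSC values for four volumes (not study data).
dsc_before = [0.80, 0.90, 0.70, 0.85]
dsc_after = [0.80, 0.92, 0.65, 0.85]
print(transfer_breakdown(dsc_before, dsc_after))
# → {'unchanged': 50.0, 'improved': 25.0, 'reduced': 25.0}
```

Because each metric is tallied independently, the same volume can fall in the "improved" column for DSC and the "reduced" column for an area error, which is why the rows of Table 3 differ.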

3.3 Qualitative analysis

On qualitative assessment of DOCTAD's EZ defect segmentations, the gold standard and predicted binary maps of EZ defects agreed well. Some of the false positives (“over-prediction”) could be associated with borderline-defective areas. We define borderline-defective areas as those where the EZ is clearly diseased but it is unclear whether it is completely lost or still in transition to complete loss. These areas are difficult to classify even for expert Readers and require judgment calls, which may be inconsistent among Readers. Figure 9 shows examples of false positives associated with borderline-defective areas.

Fig. 9.

Predicted binary maps of EZ defects by DOCTAD showing TP (green), FP (blue), FN (red), and B-scans corresponding to the positions marked by the yellow lines. The false positives (blue) occurred in borderline-defective areas.

Conversely, some of the false negatives (“under-prediction”) could be associated with the CNN’s limited field of view, as it only “sees” individual clusters. If a cluster came from a region where the retina was partially obscured, usually by shadowing from overlying blood vessels or intra-retinal pigment, DOCTAD was more likely to make a prediction error. Figure 10 shows examples of false negatives associated with regions obscured by intra-retinal pigment.

Fig. 10.

Predicted binary maps of EZ defects by DOCTAD showing TP (green), FP (blue), FN (red), and B-scans corresponding to the positions marked by the yellow lines. The false negatives (red) occurred in regions obscured by intra-retinal pigment.
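To make the CNN's limited field of view concrete, the sketch below extracts, for every A-scan position, the block of neighboring A-scans that a cluster-based classifier would see. It assumes the volume is stored as `(n_bscans, n_ascans, depth)`, a square lateral neighborhood with a hypothetical half-width `r`, and edge replication at the borders; DOCTAD's actual cluster dimensions and border handling are defined in the Methods and may differ. If shadowing darkens every A-scan inside one such block, the classifier has no unobscured context to draw on.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def extract_clusters(volume, half_width=2):
    """Return one (2r+1) x (2r+1) x depth cluster of A-scans per A-scan position.

    volume: array of shape (n_bscans, n_ascans, depth).
    Returns a read-only view of shape (n_bscans, n_ascans, 2r+1, 2r+1, depth),
    where element [b, a] is the neighborhood centered on A-scan (b, a).
    Border A-scans are replicated so that edge positions also get full clusters.
    """
    r = half_width
    padded = np.pad(volume, ((r, r), (r, r), (0, 0)), mode="edge")
    size = 2 * r + 1
    # Sliding windows over the two lateral axes; window axes are appended last.
    windows = sliding_window_view(padded, (size, size), axis=(0, 1))
    # Reorder (n_b, n_a, depth, size, size) -> (n_b, n_a, size, size, depth).
    return np.moveaxis(windows, 2, -1)

# Hypothetical toy volume: 4 B-scans x 5 A-scans x 3 depth samples.
vol = np.arange(4 * 5 * 3).reshape(4, 5, 3)
clusters = extract_clusters(vol, half_width=1)
print(clusters.shape)  # → (4, 5, 3, 3, 3)
```

Using a strided view avoids copying one cluster per A-scan. Note that the number of voxels per cluster grows as (2r+1)² × depth, so enlarging the field of view increases the input size quadratically in `r`.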

One of the main motivations for developing a method to automatically segment EZ defects is to replace the time-consuming and subjective task of manual segmentation. Despite careful review and manual correction of the EZ layer boundaries in thousands of images by the expert Readers, the gold standard manual segmentations contained occasional errors. Figure 11 shows an example of EZ defects that were missed in the gold standard but correctly identified by DOCTAD.

Fig. 11.

(a) B-scan with EZ defects that were missed in the gold standard (manual) segmentation (yellow arrow). (b) Gold standard (manual) boundary segmentations and EZ defect areas (white). (c) EZ defect areas predicted by DOCTAD showing TP (green), FP (blue), and FN (red). The missed EZ defects (yellow arrow) were correctly identified but counted as false positives (blue).

4. Conclusions

We have developed DOCTAD, a novel deep learning-based method to automatically segment EZ defects on SD-OCT images from eyes with MacTel2. We developed and trained DOCTAD to classify clusters of A-scans as normal or defective, producing an en face binary map of EZ defects from an SD-OCT volume of an eye. Our method localizes and quantifies EZ defects accurately relative to the gold standard manual segmentation. It does not require segmentation of retinal layer boundaries as an intermediate step, and it outperforms two popular retinal layer boundary segmentation algorithms, DOCTRAP [27, 28] and CNN-GS [48]. It achieved a mean DSC of 0.86 on 107 CS volumes, compared with mean DSCs of 0.05 and 0.52 for DOCTRAP and CNN-GS, respectively.
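Because a cluster is processed for every A-scan, each A-scan position receives one normal/defective label, so the en face map has one pixel per A-scan and the defect area follows directly from the pixel count. A minimal sketch, assuming per-A-scan defect probabilities arranged as `(n_bscans, n_ascans)`, a hypothetical 0.5 decision threshold, and hypothetical A-scan and B-scan spacings in millimeters (the actual scan protocol is described in the Methods):

```python
import numpy as np

def en_face_defect_map(probs, threshold=0.5):
    """Binary en face map: True where an A-scan's defect probability >= threshold."""
    return np.asarray(probs) >= threshold

def defect_area_mm2(binary_map, ascan_spacing_mm, bscan_spacing_mm):
    """EZ defect area = number of defective A-scan positions x area per position."""
    return np.count_nonzero(binary_map) * ascan_spacing_mm * bscan_spacing_mm

# Hypothetical per-A-scan probabilities for 2 B-scans x 3 A-scans (not study data).
probs = np.array([[0.9, 0.2, 0.6],
                  [0.4, 0.7, 0.1]])
ez_map = en_face_defect_map(probs)
area = defect_area_mm2(ez_map, ascan_spacing_mm=0.01, bscan_spacing_mm=0.05)
print(ez_map.astype(int).tolist())  # → [[1, 0, 1], [0, 1, 0]]
```

Here three positions are defective, so the area is approximately 3 × 0.01 mm × 0.05 mm = 0.0015 mm². Comparing such areas between predicted and gold standard maps gives area-based error measures of the kind reported in Table 3.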

We further demonstrated that when longitudinal information was available, DOCTAD could be fine-tuned for a specific subject to improve segmentation at future time points. In our experiments, subjects were imaged at two time points: baseline and 6 months. With fine-tuning on the baseline volumes, DOCTAD achieved a mean DSC of 0.87 on 109 CS 6-month volumes, compared with 0.85 without fine-tuning. The fine-tuning procedure can be applied repeatedly as more images are collected over time to improve the segmentation performance of DOCTAD. We expect that volumes that did not benefit from the longitudinal transfer learning procedure at the 6-month time point may benefit at a future time point.
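The repeated, visit-by-visit use of fine-tuning described above can be illustrated with a toy model. The sketch below substitutes a logistic-regression classifier for DOCTAD's CNN and synthetic features for OCT clusters; the learning rate, step count, and zero "population" weights are hypothetical, and it omits the safeguards of Section 2.6. It shows only the pattern: predict each visit with the current subject-specific weights, then continue training from those weights on that visit's corrected labels before the next visit.

```python
import numpy as np

def predict_proba(w, X):
    """Logistic-model probability that each sample (row of X) is defective."""
    z = np.clip(X @ w, -30.0, 30.0)  # clip logits to avoid overflow in exp
    return 1.0 / (1.0 + np.exp(-z))

def loss_and_grad(w, X, y):
    """Mean binary cross-entropy and its gradient with respect to w."""
    p = predict_proba(w, X)
    eps = 1e-12  # guards against log(0)
    loss = -np.mean(y * np.log(p + eps) + (1.0 - y) * np.log(1.0 - p + eps))
    grad = X.T @ (p - y) / len(y)
    return loss, grad

def fine_tune(w_init, X, y, lr=0.1, steps=50):
    """Continue gradient descent from existing weights on one visit's labels."""
    w = w_init.copy()
    for _ in range(steps):
        _, grad = loss_and_grad(w, X, y)
        w -= lr * grad
    return w

def longitudinal_segmentation(w_population, visits):
    """visits: chronological list of (X, y_corrected) pairs for one subject.

    Each visit is predicted with the current weights; the weights are then
    fine-tuned on that visit's corrected labels before the next visit.
    """
    w = w_population.copy()
    predictions = []
    for X, y in visits:
        predictions.append(predict_proba(w, X) >= 0.5)
        w = fine_tune(w, X, y)
    return predictions, w

# Synthetic subject: a fixed toy decision rule shared by both visits.
rng = np.random.default_rng(0)
w_true = np.array([1.5, -2.0, 0.5])
baseline_X = rng.normal(size=(200, 3))
baseline_y = (baseline_X @ w_true > 0).astype(float)
followup_X = rng.normal(size=(200, 3))
followup_y = (followup_X @ w_true > 0).astype(float)
w_pop = np.zeros(3)  # stand-in for weights trained on the population data set
preds, w_subject = longitudinal_segmentation(
    w_pop, [(baseline_X, baseline_y), (followup_X, followup_y)])
```

Starting from existing weights rather than from scratch is what makes this transfer learning: the subject's baseline labels only adjust a model that already encodes population-level features. With few labeled samples per visit, the number of fine-tuning steps also controls how strongly the model can overfit to visit-specific noise.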

DOCTAD’s average segmentation time of 12 seconds per volume is fast enough for most clinical applications. However, some niche applications, such as real-time OCT-guided ocular surgery, require even faster execution [73]. Currently, the segmentation time is limited by the need to extract and process a cluster for every A-scan. In the future, we plan to process 3-D SD-OCT volumes as a whole, without cluster extraction, by adapting 3-D CNNs for volumetric segmentation [74–76] to automatically segment EZ defects and project them onto 2-D en face images, which would decrease the segmentation time. Although the longitudinal transfer learning procedure requires 5 minutes, this step can be performed offline during the 6-month interval between imaging time points.

Errors in DOCTAD's predicted EZ defect areas could be largely attributed to borderline-defective areas or to regions obscured by blood vessels or intra-retinal pigment. Larger clusters might partly mitigate the false negatives in regions obscured by blood vessels or intra-retinal pigment, but they would also increase the likelihood of false positives and the computation time. In some cases, such as the first example in Fig. 8, the proposed longitudinal transfer learning procedure corrected some of these false negatives. Moreover, although two expert OCT Readers reviewed and corrected the study data set, we found rare instances of manual segmentation error, which further highlights the need for an objective and consistent automatic segmentation method. In the example shown in Fig. 11, image review confirmed that DOCTAD correctly detected a region of EZ defects missed by the Readers. Such errors are to be expected when manually segmenting large data sets in a multi-center clinical trial. We did not alter the manual segmentations when calculating DOCTAD's overall error, so as not to bias the reported results in favor of our algorithm. Although we expect clinical trials to continue using semi-automatic segmentation in the near future, we expect that our deep learning method will substantially reduce the workload and improve the accuracy of semi-automatic grading.

Acknowledgments

We thank Leon Kwark for his help in the semi-automatic segmentation of OCT images. A portion of this work has been accepted for oral presentation (Paper #1225) at The Association for Research in Vision and Ophthalmology Annual Meeting, Honolulu, HI, May 2018.

Funding

The Lowy Medical Research Institute; National Institutes of Health (NIH) (R01 EY022691 and P30 EY005722); Google Faculty Research Award; 2018 Unrestricted Grant from Research to Prevent Blindness.

Disclosures

The authors declare that there are no conflicts of interest related to this article.

References and links

- 1. Gass J. D. M., Blodi B. A., “Idiopathic juxtafoveolar retinal telangiectasis. Update of classification and follow-up study,” Ophthalmology 100(10), 1536–1546 (1993). 10.1016/S0161-6420(93)31447-8

- 2. Charbel Issa P., Gillies M. C., Chew E. Y., Bird A. C., Heeren T. F. C., Peto T., Holz F. G., Scholl H. P. N., “Macular telangiectasia type 2,” Prog. Retin. Eye Res. 34, 49–77 (2013). 10.1016/j.preteyeres.2012.11.002

- 3. Sallo F. B., Peto T., Egan C., Wolf-Schnurrbusch U. E., Clemons T. E., Gillies M. C., Pauleikhoff D., Rubin G. S., Chew E. Y., Bird A. C., “The IS/OS junction layer in the natural history of type 2 idiopathic macular telangiectasia,” Invest. Ophthalmol. Vis. Sci. 53(12), 7889–7895 (2012). 10.1167/iovs.12-10765

- 4. Sallo F. B., Peto T., Egan C., Wolf-Schnurrbusch U. E. K., Clemons T. E., Gillies M. C., Pauleikhoff D., Rubin G. S., Chew E. Y., Bird A. C., “‘En face’ OCT imaging of the IS/OS junction line in type 2 idiopathic macular telangiectasia,” Invest. Ophthalmol. Vis. Sci. 53(10), 6145–6152 (2012). 10.1167/iovs.12-10580

- 5. Charbel Issa P., Heeren T. F., Kupitz E. H., Holz F. G., Berendschot T. T., “Very early disease manifestations of macular telangiectasia type 2,” Retina 36(3), 524–534 (2016). 10.1097/IAE.0000000000000863

- 6. Huang D., Swanson E. A., Lin C. P., Schuman J. S., Stinson W. G., Chang W., Hee M. R., Flotte T., Gregory K., Puliafito C. A., Fujimoto J., “Optical coherence tomography,” Science 254(5035), 1178–1181 (1991). 10.1126/science.1957169

- 7. DuBose T. B., Cunefare D., Cole E., Milanfar P., Izatt J. A., Farsiu S., “Statistical models of signal and noise and fundamental limits of segmentation accuracy in retinal optical coherence tomography,” IEEE Trans. Med. Imaging, published ahead of print (2018).

- 8. Gaudric A., Ducos de Lahitte G., Cohen S. Y., Massin P., Haouchine B., “Optical coherence tomography in group 2a idiopathic juxtafoveolar retinal telangiectasis,” Arch. Ophthalmol. 124(10), 1410–1419 (2006). 10.1001/archopht.124.10.1410

- 9. Jonnal R. S., Kocaoglu O. P., Zawadzki R. J., Lee S. H., Werner J. S., Miller D. T., “The cellular origins of the outer retinal bands in optical coherence tomography images,” Invest. Ophthalmol. Vis. Sci. 55(12), 7904–7918 (2014). 10.1167/iovs.14-14907

- 10. Banaee T., Singh R. P., Champ K., Conti F. F., Wai K., Bena J., Beven L., Ehlers J. P., “Ellipsoid zone mapping parameters in retinal venous occlusive disease with associated macular edema,” Ophthalmology Retina, in press (2018).

- 11. Wang Z., Camino A., Zhang M., Wang J., Hwang T. S., Wilson D. J., Huang D., Li D., Jia Y., “Automated detection of photoreceptor disruption in mild diabetic retinopathy on volumetric optical coherence tomography,” Biomed. Opt. Express 8(12), 5384–5398 (2017). 10.1364/BOE.8.005384

- 12. Itoh Y., Vasanji A., Ehlers J. P., “Volumetric ellipsoid zone mapping for enhanced visualisation of outer retinal integrity with optical coherence tomography,” Br. J. Ophthalmol. 100(3), 295–299 (2016). 10.1136/bjophthalmol-2015-307105

- 13. Heeren T. F. C., Kitka D., Florea D., Clemons T. E., Chew E. Y., Bird A. C., Pauleikhoff D., Charbel Issa P., Holz F. G., Peto T., “Longitudinal correlation of ellipsoid zone loss and functional loss in macular telangiectasia type 2,” Retina 38(Suppl 1), S20–S26 (2018).

- 14. Scoles D., Flatter J. A., Cooper R. F., Langlo C. S., Robison S., Neitz M., Weinberg D. V., Pennesi M. E., Han D. P., Dubra A., Carroll J., “Assessing photoreceptor structure associated with ellipsoid zone disruptions visualized with optical coherence tomography,” Retina 36(1), 91–103 (2016). 10.1097/IAE.0000000000000618

- 15. Quezada Ruiz C., Pieramici D. J., Nasir M., Rabena M., Avery R. L., “Severe acute vision loss, dyschromatopsia, and changes in the ellipsoid zone on SD-OCT associated with intravitreal ocriplasmin injection,” Retin. Cases Brief Rep. 9(2), 145–148 (2015). 10.1097/ICB.0000000000000120

- 16. Cai C. X., Light J. G., Handa J. T., “Quantifying the rate of ellipsoid zone loss in Stargardt disease,” Am. J. Ophthalmol. 186, 1–9 (2018). 10.1016/j.ajo.2017.10.032

- 17. Staurenghi G., Sadda S., Chakravarthy U., Spaide R. F., “Proposed lexicon for anatomic landmarks in normal posterior segment spectral-domain optical coherence tomography: the IN•OCT consensus,” Ophthalmology 121(8), 1572–1578 (2014). 10.1016/j.ophtha.2014.02.023

- 18. Spaide R. F., Curcio C. A., “Anatomical correlates to the bands seen in the outer retina by optical coherence tomography: Literature review and model,” Retina 31(8), 1609–1619 (2011). 10.1097/IAE.0b013e3182247535

- 19. Paunescu L. A., Ko T. H., Duker J. S., Chan A., Drexler W., Schuman J. S., Fujimoto J. G., “Idiopathic juxtafoveal retinal telangiectasis: New findings by ultrahigh-resolution optical coherence tomography,” Ophthalmology 113(1), 48–57 (2006). 10.1016/j.ophtha.2005.08.016

- 20. Maruko I., Iida T., Sekiryu T., Fujiwara T., “Early morphological changes and functional abnormalities in group 2a idiopathic juxtafoveolar retinal telangiectasis using spectral domain optical coherence tomography and microperimetry,” Br. J. Ophthalmol. 92(11), 1488–1491 (2008). 10.1136/bjo.2007.131409

- 21. Gattani V. S., Vupparaboina K. K., Patil A., Chhablani J., Richhariya A., Jana S., “Semi-automated quantification of retinal IS/OS damage in en-face OCT image,” Comput. Biol. Med. 69, 52–60 (2016). 10.1016/j.compbiomed.2015.11.015

- 22. Landa G., Su E., Garcia P. M., Seiple W. H., Rosen R. B., “Inner segment-outer segment junctional layer integrity and corresponding retinal sensitivity in dry and wet forms of age-related macular degeneration,” Retina 31(2), 364–370 (2011). 10.1097/IAE.0b013e3181e91132

- 23. Mukherjee D., Lad E. M., Vann R. R., Jaffe S. J., Clemons T. E., Friedlander M., Chew E. Y., Jaffe G. J., Farsiu S., “Correlation between macular integrity assessment and optical coherence tomography imaging of ellipsoid zone in macular telangiectasia type 2,” Invest. Ophthalmol. Vis. Sci. 58(6), BIO291 (2017). 10.1167/iovs.17-21834

- 24. Peto T., Heeren T. F. C., Clemons T. E., Sallo F. B., Leung I., Chew E. Y., Bird A. C., “Correlation of clinical and structural progression with visual acuity loss in macular telangiectasia type 2: MacTel Project Report No. 6–The MacTel Research Group,” Retina 38(Suppl 1), S8–S13 (2018).

- 25. Sallo F. B., Leung I., Clemons T. E., Peto T., Chew E. Y., Pauleikhoff D., Bird A. C., M. C. R. Group, “Correlation of structural and functional outcome measures in a phase one trial of ciliary neurotrophic factor in type 2 idiopathic macular telangiectasia,” Retina 38(Suppl 1), S27–S32 (2018).

- 26. Charbel Issa P., Troeger E., Finger R., Holz F. G., Wilke R., Scholl H. P., “Structure-function correlation of the human central retina,” PLoS One 5(9), e12864 (2010). 10.1371/journal.pone.0012864

- 27. Chiu S. J., Li X. T., Nicholas P., Toth C. A., Izatt J. A., Farsiu S., “Automatic segmentation of seven retinal layers in SDOCT images congruent with expert manual segmentation,” Opt. Express 18(18), 19413–19428 (2010). 10.1364/OE.18.019413

- 28. Chiu S. J., Izatt J. A., O’Connell R. V., Winter K. P., Toth C. A., Farsiu S., “Validated automatic segmentation of AMD pathology including drusen and geographic atrophy in SD-OCT images,” Invest. Ophthalmol. Vis. Sci. 53(1), 53–61 (2012). 10.1167/iovs.11-7640

- 29. Francis A. W., Wanek J., Lim J. I., Shahidi M., “Enface thickness mapping and reflectance imaging of retinal layers in diabetic retinopathy,” PLoS One 10(12), e0145628 (2015). 10.1371/journal.pone.0145628

- 30. Chiu S. J., Allingham M. J., Mettu P. S., Cousins S. W., Izatt J. A., Farsiu S., “Kernel regression based segmentation of optical coherence tomography images with diabetic macular edema,” Biomed. Opt. Express 6(4), 1172–1194 (2015). 10.1364/BOE.6.001172

- 31. Srinivasan P. P., Heflin S. J., Izatt J. A., Arshavsky V. Y., Farsiu S., “Automatic segmentation of up to ten layer boundaries in SD-OCT images of the mouse retina with and without missing layers due to pathology,” Biomed. Opt. Express 5(2), 348–365 (2014). 10.1364/BOE.5.000348

- 32. Tian J., Varga B., Somfai G. M., Lee W.-H., Smiddy W. E., DeBuc D. C., “Real-time automatic segmentation of optical coherence tomography volume data of the macular region,” PLoS One 10(8), e0133908 (2015). 10.1371/journal.pone.0133908

- 33. Lang A., Carass A., Hauser M., Sotirchos E. S., Calabresi P. A., Ying H. S., Prince J. L., “Retinal layer segmentation of macular OCT images using boundary classification,” Biomed. Opt. Express 4(7), 1133–1152 (2013). 10.1364/BOE.4.001133

- 34. Farsiu S., Chiu S. J., Izatt J. A., Toth C. A., “Fast detection and segmentation of drusen in retinal optical coherence tomography images,” Proc. SPIE 6844, 68440D (2008).

- 35. Krizhevsky A., Sutskever I., Hinton G. E., “ImageNet classification with deep convolutional neural networks,” Adv. Neural Inf. Process. Syst. 1, 1097–1105 (2012).

- 36. Sermanet P., Eigen D., Zhang X., Mathieu M., Fergus R., LeCun Y., “OverFeat: Integrated recognition, localization and detection using convolutional networks,” arXiv preprint https://arxiv.org/abs/1312.6229 (2013).

- 37. Long J., Shelhamer E., Darrell T., “Fully convolutional networks for semantic segmentation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2015), 3431–3440.

- 38. Ren S., He K., Girshick R., Sun J., “Faster R-CNN: Towards real-time object detection with region proposal networks,” Adv. Neural Inf. Process. Syst. 39, 91–99 (2015).

- 39. Szegedy C., Vanhoucke V., Ioffe S., Shlens J., Wojna Z., “Rethinking the inception architecture for computer vision,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2016), 2818–2826. 10.1109/CVPR.2016.308

- 40. He K., Zhang X., Ren S., Sun J., “Deep residual learning for image recognition,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2016), 770–778.

- 41. Litjens G., Kooi T., Bejnordi B. E., Setio A. A. A., Ciompi F., Ghafoorian M., van der Laak J. A. W. M., van Ginneken B., Sánchez C. I., “A survey on deep learning in medical image analysis,” Med. Image Anal. 42, 60–88 (2017). 10.1016/j.media.2017.07.005

- 42. Gargeya R., Leng T., “Automated identification of diabetic retinopathy using deep learning,” Ophthalmology 124(7), 962–969 (2017). 10.1016/j.ophtha.2017.02.008

- 43. Gulshan V., Peng L., Coram M., Stumpe M. C., Wu D., Narayanaswamy A., Venugopalan S., Widner K., Madams T., Cuadros J., Kim R., Raman R., Nelson P. C., Mega J. L., Webster D. R., “Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs,” JAMA 316(22), 2402–2410 (2016). 10.1001/jama.2016.17216

- 44. Asaoka R., Murata H., Iwase A., Araie M., “Detecting preperimetric glaucoma with standard automated perimetry using a deep learning classifier,” Ophthalmology 123(9), 1974–1980 (2016). 10.1016/j.ophtha.2016.05.029

- 45. Esteva A., Kuprel B., Novoa R. A., Ko J., Swetter S. M., Blau H. M., Thrun S., “Dermatologist-level classification of skin cancer with deep neural networks,” Nature 542(7639), 115–118 (2017). 10.1038/nature21056

- 46. Cunefare D., Fang L., Cooper R. F., Dubra A., Carroll J., Farsiu S., “Open source software for automatic detection of cone photoreceptors in adaptive optics ophthalmoscopy using convolutional neural networks,” Sci. Rep. 7(1), 6620 (2017). 10.1038/s41598-017-07103-0

- 47. Xiao S., Bucher F., Wu Y., Rokem A., Lee C. S., Marra K. V., Fallon R., Diaz-Aguilar S., Aguilar E., Friedlander M., Lee A. Y., “Fully automated, deep learning segmentation of oxygen-induced retinopathy images,” JCI Insight 2(24), 97585 (2017). 10.1172/jci.insight.97585

- 48. Fang L., Cunefare D., Wang C., Guymer R. H., Li S., Farsiu S., “Automatic segmentation of nine retinal layer boundaries in OCT images of non-exudative AMD patients using deep learning and graph search,” Biomed. Opt. Express 8(5), 2732–2744 (2017). 10.1364/BOE.8.002732

- 49. Roy A. G., Conjeti S., Karri S. P. K., Sheet D., Katouzian A., Wachinger C., Navab N., “ReLayNet: Retinal layer and fluid segmentation of macular optical coherence tomography using fully convolutional networks,” Biomed. Opt. Express 8(8), 3627–3642 (2017). 10.1364/BOE.8.003627

- 50. Xu Y., Yan K., Kim J., Wang X., Li C., Su L., Yu S., Xu X., Feng D. D., “Dual-stage deep learning framework for pigment epithelium detachment segmentation in polypoidal choroidal vasculopathy,” Biomed. Opt. Express 8(9), 4061–4076 (2017). 10.1364/BOE.8.004061

- 51. Venhuizen F. G., van Ginneken B., Liefers B., van Grinsven M. J. J. P., Fauser S., Hoyng C., Theelen T., Sánchez C. I., “Robust total retina thickness segmentation in optical coherence tomography images using convolutional neural networks,” Biomed. Opt. Express 8(7), 3292–3316 (2017). 10.1364/BOE.8.003292

- 52. Ronneberger O., Fischer P., Brox T., “U-Net: Convolutional networks for biomedical image segmentation,” in International Conference on Medical Image Computing and Computer-Assisted Intervention (Springer, 2015), 234–241. 10.1007/978-3-319-24574-4_28

- 53. Abràmoff M. D., Lou Y., Erginay A., Clarida W., Amelon R., Folk J. C., Niemeijer M., “Improved automated detection of diabetic retinopathy on a publicly available dataset through integration of deep learning,” Invest. Ophthalmol. Vis. Sci. 57(13), 5200–5206 (2016). 10.1167/iovs.16-19964

- 54. Abdolmanafi A., Duong L., Dahdah N., Cheriet F., “Deep feature learning for automatic tissue classification of coronary artery using optical coherence tomography,” Biomed. Opt. Express 8(2), 1203–1220 (2017). 10.1364/BOE.8.001203

- 55. Karri S. P. K., Chakraborty D., Chatterjee J., “Transfer learning based classification of optical coherence tomography images with diabetic macular edema and dry age-related macular degeneration,” Biomed. Opt. Express 8(2), 579–592 (2017). 10.1364/BOE.8.000579

- 56. Lee C. S., Baughman D. M., Lee A. Y., “Deep learning is effective for classifying normal versus age-related macular degeneration OCT images,” Ophthalmology Retina 1(4), 322–327 (2017). 10.1016/j.oret.2016.12.009

- 57. Lee C. S., Tyring A. J., Deruyter N. P., Wu Y., Rokem A., Lee A. Y., “Deep-learning based, automated segmentation of macular edema in optical coherence tomography,” Biomed. Opt. Express 8(7), 3440–3448 (2017). 10.1364/BOE.8.003440

- 58. Liefers B., Venhuizen F. G., Schreur V., van Ginneken B., Hoyng C., Fauser S., Theelen T., Sánchez C. I., “Automatic detection of the foveal center in optical coherence tomography,” Biomed. Opt. Express 8(11), 5160–5178 (2017). 10.1364/BOE.8.005160

- 59. Liu G. S., Zhu M. H., Kim J., Raphael P., Applegate B. E., Oghalai J. S., “ELHnet: A convolutional neural network for classifying cochlear endolymphatic hydrops imaged with optical coherence tomography,” Biomed. Opt. Express 8(10), 4579–4594 (2017). 10.1364/BOE.8.004579

- 60. Farsiu S., Chiu S. J., O’Connell R. V., Folgar F. A., Yuan E., Izatt J. A., Toth C. A., “Quantitative classification of eyes with and without intermediate age-related macular degeneration using optical coherence tomography,” Ophthalmology 121(1), 162–172 (2014). 10.1016/j.ophtha.2013.07.013

- 61. Folgar F. A., Yuan E. L., Sevilla M. B., Chiu S. J., Farsiu S., Chew E. Y., Toth C. A., “Drusen volume and retinal pigment epithelium abnormal thinning volume predict 2-year progression of age-related macular degeneration,” Ophthalmology 123(1), 39–50 (2016). 10.1016/j.ophtha.2015.09.016

- 62. Simonett J. M., Huang R., Siddique N., Farsiu S., Siddique T., Volpe N. J., Fawzi A. A., “Macular sub-layer thinning and association with pulmonary function tests in Amyotrophic Lateral Sclerosis,” Sci. Rep. 6(1), 29187 (2016). 10.1038/srep29187

- 63. Lipton Z. C., Kale D. C., Elkan C., Wetzel R., “Learning to diagnose with LSTM recurrent neural networks,” arXiv preprint https://arxiv.org/abs/1511.03677 (2015).

- 64. Cheng Y., Wang F., Zhang P., Hu J., “Risk prediction with electronic health records: A deep learning approach,” in Proceedings of the 2016 SIAM International Conference on Data Mining (SIAM, 2016), 432–440. 10.1137/1.9781611974348.49

- 65. Pham T., Tran T., Phung D., Venkatesh S., “Predicting healthcare trajectories from medical records: A deep learning approach,” J. Biomed. Inform. 69, 218–229 (2017). 10.1016/j.jbi.2017.04.001

- 66. Ioffe S., Szegedy C., “Batch normalization: Accelerating deep network training by reducing internal covariate shift,” in International Conference on Machine Learning (2015), 448–456.

- 67. Glorot X., Bengio Y., “Understanding the difficulty of training deep feedforward neural networks,” in Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics (2010), 249–256.

- 68. Kingma D. P., Ba J., “Adam: A method for stochastic optimization,” arXiv preprint https://arxiv.org/abs/1412.6980 (2014).

- 69. Pan S. J., Yang Q., “A survey on transfer learning,” IEEE Trans. Knowl. Data Eng. 22(10), 1345–1359 (2010). 10.1109/TKDE.2009.191

- 70. Dice L. R., “Measures of the amount of ecologic association between species,” Ecology 26(3), 297–302 (1945). 10.2307/1932409

- 71. Abadi M., Barham P., Chen J., Chen Z., Davis A., Dean J., Devin M., Ghemawat S., Irving G., Isard M., “TensorFlow: A system for large-scale machine learning,” in 12th Symposium on Operating Systems Design and Implementation (USENIX, 2016), 265–283.

- 72. Torrey L., Shavlik J., “Transfer learning,” Handbook of Research on Machine Learning Applications and Trends: Algorithms, Methods, and Techniques 1 (IGI, 2009), p. 242.

- 73. Carrasco-Zevallos O. M., Keller B., Viehland C., Shen L., Waterman G., Todorich B., Shieh C., Hahn P., Farsiu S., Kuo A. N., Toth C. A., Izatt J. A., “Live volumetric (4D) visualization and guidance of in vivo human ophthalmic surgery with intraoperative optical coherence tomography,” Sci. Rep. 6(1), 31689 (2016). 10.1038/srep31689

- 74. Çiçek Ö., Abdulkadir A., Lienkamp S. S., Brox T., Ronneberger O., “3D U-Net: Learning dense volumetric segmentation from sparse annotation,” in International Conference on Medical Image Computing and Computer-Assisted Intervention (Springer, 2016), 424–432.

- 75. Milletari F., Navab N., Ahmadi S. A., “V-Net: Fully convolutional neural networks for volumetric medical image segmentation,” in 2016 Fourth International Conference on 3D Vision (3DV) (IEEE, 2016), 565–571. 10.1109/3DV.2016.79

- 76. Chen H., Dou Q., Yu L., Heng P. A., “VoxResNet: Deep voxelwise residual networks for volumetric brain segmentation,” arXiv preprint https://arxiv.org/abs/1608.05895 (2016).