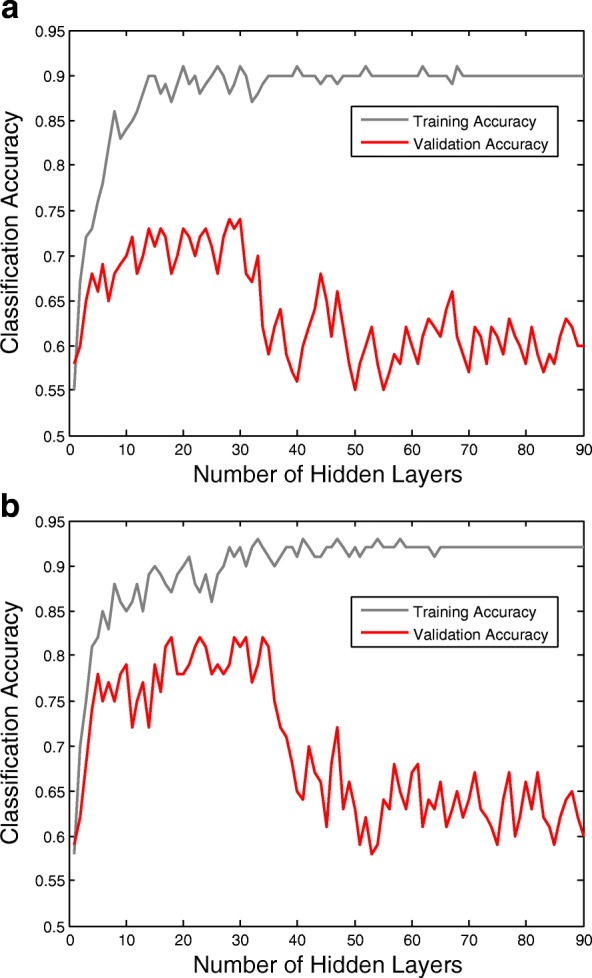

Fig. 10.

Training and validation accuracies of the LSTM-RNN and GRU-RNN as the number of hidden layers increases. The validation accuracies peak at 20–15 hidden layers. The accuracy results are inconsistent with the cross-entropy results, and the classification of EEG signals quickly overfits. The reason is that a deep RNN architecture continues to learn the common components of the EEG sequences while simultaneously learning signal noise and non-discriminative components. There is therefore a trade-off between classification errors and the modeling of long-term patterns. (a) LSTM-RNN; (b) GRU-RNN.