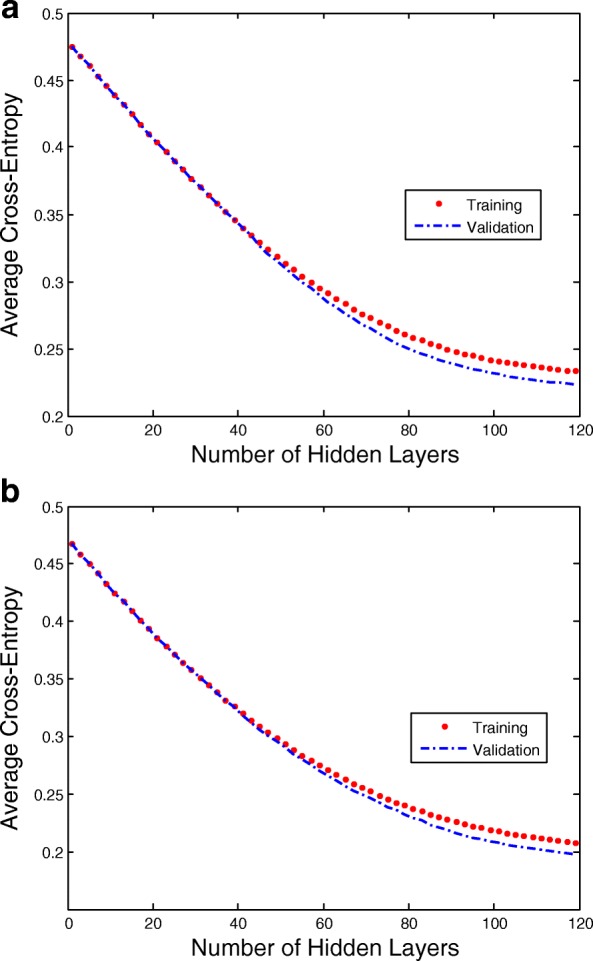

Fig. 9.

Training and validation cross-entropies of LSTM-RNN and GRU-RNN as the number of hidden layers increases. The training and validation cross-entropies begin to separate beyond 20 hidden layers. If the RNN were overfitting the signals, the cross-entropies would stop decreasing. In fact, the cross-entropies do not increase, which indicates that the deep RNN architecture continues to learn signal components common to all of the EEG sequences. (a) LSTM-RNN; (b) GRU-RNN.
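The overfitting argument in this caption rests on comparing training and validation cross-entropy curves. The following is a minimal Python sketch, assuming categorical cross-entropy and a simple rule that flags overfitting when validation cross-entropy rises for several consecutive steps while training cross-entropy keeps falling. The function names, the `patience` parameter, and the example curves are hypothetical illustrations and are not taken from the paper's data or code.

```python
import math

def cross_entropy(probs, label):
    """Categorical cross-entropy for one sample: -log p(true class)."""
    return -math.log(probs[label])

def mean_cross_entropy(batch):
    """Average cross-entropy over a list of (probs, label) pairs."""
    return sum(cross_entropy(p, y) for p, y in batch) / len(batch)

def overfitting_onset(train_ce, val_ce, patience=2):
    """Hypothetical overfitting check on two aligned loss curves.

    Returns the index where validation CE was lowest before it rose for
    `patience` consecutive steps while training CE did not rise.
    Returns None if that pattern never occurs.
    """
    run = 0
    for i in range(1, len(val_ce)):
        val_up = val_ce[i] > val_ce[i - 1]
        train_down = train_ce[i] <= train_ce[i - 1]
        if val_up and train_down:
            run += 1
            if run >= patience:
                return i - patience  # last point before the rise began
        else:
            run = 0
    return None

if __name__ == "__main__":
    # Hypothetical curves, e.g. one value per hidden-layer setting.
    train = [1.0, 0.8, 0.6, 0.5, 0.4, 0.3]
    val_overfit = [1.1, 0.9, 0.8, 0.85, 0.9, 0.95]
    val_ok = [1.1, 0.9, 0.8, 0.75, 0.7, 0.68]
    print(overfitting_onset(train, val_overfit))  # 2
    print(overfitting_onset(train, val_ok))       # None
```

Under this rule, curves like those described in the caption, where the cross-entropies separate but do not increase, would return `None`, which matches the caption's conclusion that the network is not overfitting.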