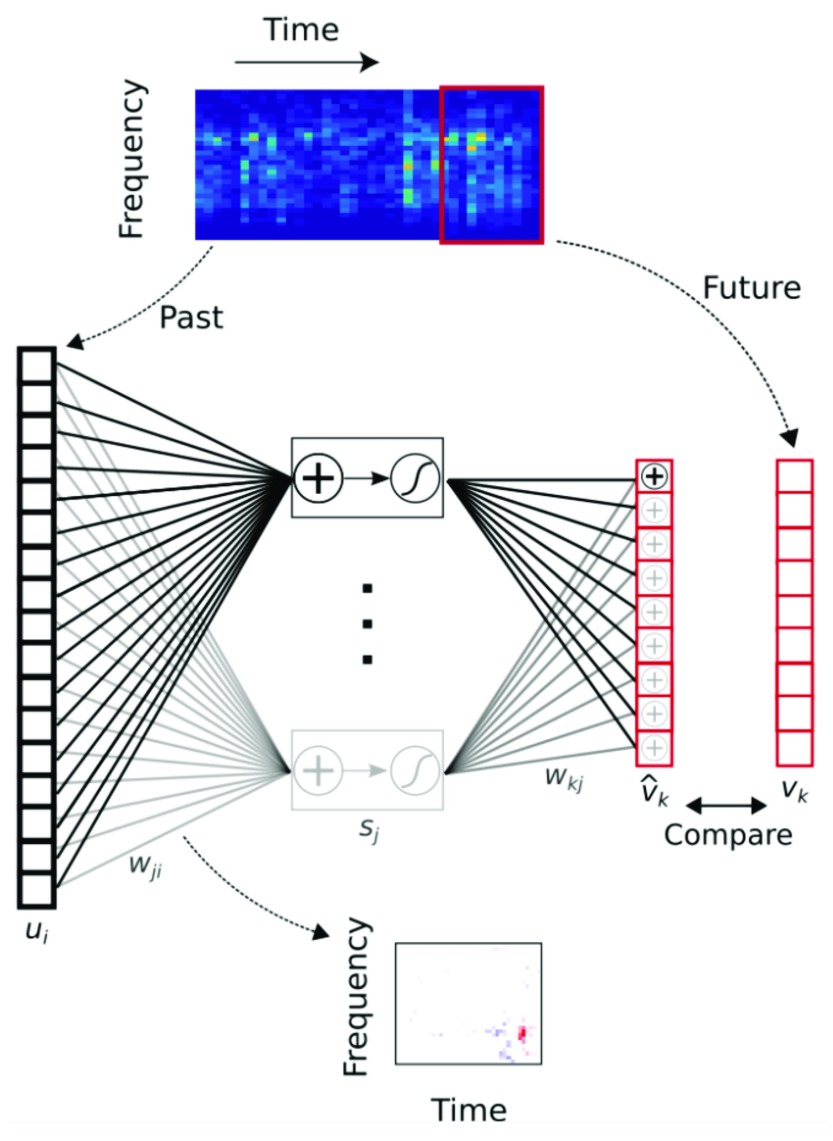

Figure 1. Neuronal selectivity in the auditory cortex is optimized to represent sound features in the recent sensory past that best predict immediate future inputs.

A feedforward artificial neural network was trained to predict the immediate future of natural sounds (represented as cochleagrams describing the spectral content over time) from their recent past. This temporal prediction model developed spectrotemporal receptive fields that closely matched those of real auditory cortical neurons. A similar correspondence was found between the receptive fields produced when the model was trained to predict the next few video frames in clips of natural scenes and the receptive field properties of neurons in primary visual cortex. Model nomenclature: $s_j$, hidden unit output; $u_i$, input (the past); $v_k$, target output (the true future); $\hat{v}_k$, output (the predicted future); $w_{ji}$, input weights (analogous to cortical receptive fields); $w_{kj}$, output weights. Reprinted from Singer et al. 42.
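
To make the nomenclature concrete, the following is a minimal sketch of one forward pass through a network of this shape: the past is mapped through input weights to hidden unit outputs, which are mapped through output weights to a predicted future that is compared with the true future. It is an illustration under stated assumptions, not the implementation of Singer et al.: the `tanh` nonlinearity, the linear readout, the bias terms, the mean squared error, the toy dimensions, and all function and variable names are hypothetical choices made here, and no training step is shown.

```python
import math
import random


def forward(u, w_in, b_hidden, w_out, b_out):
    """Map the past (u) to hidden unit outputs (s) and a predicted future (v_hat)."""
    # Hidden layer: s_j = tanh(sum_i w_ji * u_i + b_j).
    # tanh and the bias terms are assumptions made for this sketch.
    s = [math.tanh(sum(w * x for w, x in zip(row, u)) + b)
         for row, b in zip(w_in, b_hidden)]
    # Output layer: v_hat_k = sum_j w_kj * s_j + b_k (assumed linear readout).
    v_hat = [sum(w * h for w, h in zip(row, s)) + b
             for row, b in zip(w_out, b_out)]
    return s, v_hat


def prediction_error(v_hat, v):
    """Mean squared error between the predicted and the true future (assumed loss)."""
    return sum((p - t) ** 2 for p, t in zip(v_hat, v)) / len(v)


# Toy dimensions (hypothetical): 6 past values, 3 hidden units, 2 future values.
rng = random.Random(0)
n_in, n_hidden, n_out = 6, 3, 2
w_in = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_hidden)]
w_out = [[rng.uniform(-0.1, 0.1) for _ in range(n_hidden)] for _ in range(n_out)]
b_hidden = [0.0] * n_hidden
b_out = [0.0] * n_out

u = [rng.random() for _ in range(n_in)]   # stand-in for a flattened cochleagram window
v = [rng.random() for _ in range(n_out)]  # stand-in for the true next time step

s, v_hat = forward(u, w_in, b_hidden, w_out, b_out)
err = prediction_error(v_hat, v)
```

In a trained model of this kind, each row of `w_in` would play the role of one hidden unit's receptive field over the recent past, which is the quantity the caption compares with cortical receptive fields.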