Abstract

Rigorous evidence of “what works” to improve health care is in demand, but methods for the development of interventions have not been scrutinized in the same ways as methods for evaluation. This article presents and examines intervention development processes of eight malaria health care interventions in East and West Africa. A case study approach was used to draw out experiences and insights from multidisciplinary teams who undertook to design and evaluate these studies. Four steps appeared necessary for intervention design: (1) definition of scope, with reference to evaluation possibilities; (2) research to inform design, including evidence and theory reviews and empirical formative research; (3) intervention design, including consideration and selection of approaches and development of activities and materials; and (4) refining and finalizing the intervention, incorporating piloting and pretesting. Alongside these steps, projects produced theories, explicitly or implicitly, about (1) intended pathways of change and (2) how their intervention would be implemented. The work required to design interventions that meet and contribute to current standards of evidence should not be underestimated. Furthermore, the process should be recognized not only as technical but as the result of micro and macro social, political, and economic contexts, which should be acknowledged and documented in order to infer generalizability. Reporting of interventions should go beyond descriptions of final intervention components or techniques to encompass the development process. The role that evaluation possibilities play in intervention design should be brought to the fore in debates over health care improvement.

Keywords: Africa, Complex intervention design, Evidence based public health, Implementation science, Malaria

INTRODUCTION

In recent decades, demand for evidence of “what works” in health care policy and practice has increased. This has led to intensified efforts to advance and standardize methodologies for the generation of evidence relating to health interventions.1-4 Interventions that aim to improve health care have been classified as “complex,” reflecting their composition of multiple, often interacting, components within dynamic and multifaceted systems.5,6 Significant investment has been made into methodological developments for evaluating such interventions.3,4,7-10 However, less attention has been paid to how such interventions are designed and to reflecting on how this design process works in practice.

The ACT Consortium joined together 45 leading malaria researchers from 26 institutions around the world who were concerned about increasing access to new first-line artemisinin combination therapy (ACT) antimalarial drugs, targeting the use of ACTs to those with malaria infection, the safety of ACTs when used routinely, and the quality of ACTs accessed by malaria-affected communities (www.actconsortium.org). Research had shown that improvement in malaria case management was not amenable to simple interventions and that strategies would be required that worked with different components of the health system, including formal public health care facilities, private drug retailers, and community health workers.11 In particular, the overdiagnosis of malaria was known to be a deeply embedded social practice that persisted despite World Health Organization policy supporting test-based treatment12 and availability of rapid diagnostic tests (RDTs).13 Interventions would need to go beyond the behavior of individuals to shift social expectations and to change structures, attending to health care as a construct of dynamic social, political, and economic processes.

The Medical Research Council's (MRC) guidance on developing and evaluating complex interventions4 recommends building on existing evaluations of behavioral interventions to design complex health interventions. However, literature on existing interventions is difficult to learn from and apply to other settings. Even when interventions and their constituent components are clearly described, the processes undertaken and people, agendas, and disciplines that have influenced the design of complex health care improvement interventions are often not made explicit, either internally within the design process or to external audiences in reporting.14 This reduces the ability to interpret how findings of intervention effects could be inferred from one setting to another or to a scale-up scenario.

Tools and protocols have been developed in the health promotion field to aid intervention development.15,16 However, the focus of these has been on individual health-related behaviors that may be amenable to psychological or environmental change factors. There is limited guidance on how to develop interventions that attend to the social nature of health care. This article aims to discuss the process and challenges faced by evaluation projects developing interventions to improve health care for malaria as an example of a systematic attempt to tackle multiple facets of a problem simultaneously.

We designed eight interventions to improve malaria care in five African countries. The interventions were to be rigorously evaluated according to current evidence-based standards,17 mostly through cluster-randomized trials (Table 1). All but one intervention (the Nigerian study) had a measurably positive impact on one or more outcomes. Reflecting calls for multidisciplinary teams for such endeavors,18 project teams consisted of clinicians, public health practitioners, economists, anthropologists, epidemiologists, and statisticians. In this article, we summarize the intervention design methods and describe challenges faced and lessons learned about the process of health care improvement intervention design from across our projects. We have described lessons learned around evaluation methods elsewhere.19 The projects had similar aims and overall approaches to intervention design but varied in the detail of objectives, policy-related intentions, team knowledge bases and expertise, and budgets allocated to the design process. These similarities and differences enabled us to draw out lessons for intervention design across projects.

TABLE 1.

Summary of ACT Projects and Interventions Discussed in This Article

| Study Title^a | Study Design | Setting and Dates of Implementation | Intervention | Publications on Intervention and Trial Design, Formative Research | Publications of Intervention Effect (Including Forthcoming) |
|---|---|---|---|---|---|
| The PRIME study | Two-arm cluster-randomized trial | Uganda; public health facilities; 2011–2013 | Enhanced health facility–based care for malaria and febrile illnesses in children | 23,70-72 | 35,73-75 |
| The REACT project, Cameroon (Research on the Economics of ACTs) | Three-arm cluster-randomized trial | Cameroon; public and mission health facilities; 2010–2011 | Basic and enhanced provider interventions to improve malaria diagnosis and appropriate use of ACTs in public and mission health facilities | 76-79 | 80,81 |
| The REACT project, Nigeria (Research on the Economics of ACTs) | Three-arm cluster-randomized trial | Nigeria; public primary health facilities and private medicine retailers; 2010–2011 | Provider and community interventions to improve malaria diagnosis using RDTs and appropriate use of ACTs in public health facilities and private-sector medicine retailers | 82-84 | 85 |
| The TACT trial (Targeting ACTs) | Three-arm cluster-randomized trial | Tanzania; public health facilities; 2011–2012 | Health worker and patient-oriented interventions to improve uptake of malaria RDTs and adherence to results in primary health facilities | 86 | 87,88 |
| ACT Pharmacovigilance project | Participatory research design | Uganda; health facilities and community drug distributors; 2010–2012 | Development of adverse event reporting forms for use by nonclinical workers to collect data on the effects of ACTs | 65 | 89 |
| RDTs for home management of malaria | Two-arm cluster-randomized trial | Uganda; community drug distributors; 2010–2012 | Introduction of RDTs for the home management of malaria at the community level | 90 | |
| RDTs for drug shop management of malaria | Two-arm cluster-randomized trial | Uganda; drug shop vendors; 2010–2012 | Introduction of RDTs to drug shops to encourage the rational drug use for case management of malaria | 91-94 | 95 |
| Effect of test-based versus presumptive diagnosis in the management of fever in under-five children | Two-arm cluster-randomized trial | Ghana; public primary health facilities; 2011–2012 | Test-based diagnosis of malaria with RDT with restricting ACT to children who test positive | 96 | 97,98 |

^a See the ACT Consortium website (www.actconsortium.org) for more information on each of these studies.

MATERIALS AND METHODS

Intervention design steps and lessons learned were developed through a multiple case study approach,20 with each of our eight intervention projects representing a case. This included all intervention studies undertaken by the ACT Consortium. Initially, an external researcher (LB) undertook reviews of project documents and phone or face-to-face interviews with investigators to learn their perspectives of the most important elements in the design of their interventions and lessons learned in the processes they had undertaken. All study investigators were invited to participate or to nominate relevant team members, and interviews followed a loose topic guide. Additional insights were brought together through a series of face-to-face and e-mail discussions with a team of core scientists who worked across the ACT Consortium projects to support intervention and evaluation designs. A first summary of steps and lessons learned that emerged in common across projects was produced for review by study teams. Each study team then provided further reflections and insights, forming reflexive accounts of their research processes that could enable learning for others.21 The experiences of our study teams were reviewed together with existing literature on intervention development to characterize a series of key steps and challenges experienced that resonated across studies and were poorly addressed in the literature. This formed an iterative analytical process that continued through the process of writing.22 Throughout, we provide empirical examples from our work in the text, boxes, and supplemental material.

RESULTS AND DISCUSSION

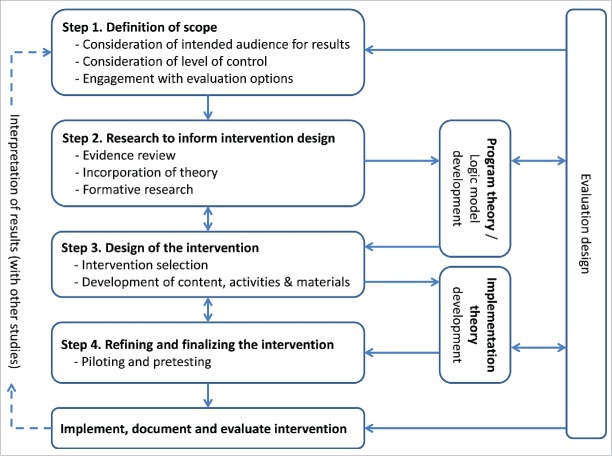

Each of our projects aimed to produce an evidence-based intervention package tailored for a particular context. Seven were to be delivered and evaluated through cluster-randomized trials and one through routine implementation (Table 1). The starting point for our interventions was technical—typically provision of commodities (ACTs and/or RDTs) and some form of training to support a change in practice. The intervention design processes were to yield details of these technical interventions and their delivery into local contexts. Four broad steps in this process were identified from across our projects (Figure 1):

1. Definition of scope
2. Research to inform intervention design
3. Design of the intervention
4. Refining and finalizing the intervention

FIGURE 1.

Phases in the Development of Complex Interventions

Alongside these activities, projects either explicitly or implicitly developed two sets of theory: program theory (or a logic model), which depicted the intended pathway for change from the intervention to study outcomes, and implementation theory, which depicted the intended vehicle for change, consisting of the “nuts and bolts” of the intervention itself. Each of these sets of theory attended specifically to the local social, political, and economic contexts where the interventions would be tested and potentially scaled up.

In all projects, the time and resources spent in designing interventions exceeded expectations. From the start of formative research to being ready for implementation, the overall process of intervention design took between six months and two years, requiring project durations to be extended by at least 20%. Project expenditure in this phase of work ranged between 10% and 25% of the overall budget. The variation in investment related to the starting point and goals of each project but also the relative investment compared with evaluations, which in some cases were large scale and costly. The knock-on effects of unexpected investment required in this phase of work included narrowing of scope of both the intervention and evaluation activities. The highest costs for projects were household surveys used in formative research and the professional development of intervention materials. It was not always possible to predict the length of time intervention design would take, particularly when responding to unexpected local priorities, which made interventions more relevant but less easy to budget time and resources for.

Step 1. Definition of Scope

As is often the case, the target problem to be tackled by the ACT Consortium projects had been established prior to the intervention design phase and particular components of the intervention, as well as the study design, had already been proposed as part of securing research funding. On initiation of funding, decisions were required to define the scale and potential scope of interventions. We found that three key areas needed consideration in defining the scope for interventions: the intended audience for results, the level of control required for the evaluation and intervention, and what is possible for evaluation designs.

Consideration of the Intended Audience for Results

ACT Consortium studies aimed to assist health policy makers at the global level and/or program managers at national and district levels to decide how to maximize health investments in relation to ACTs and RDTs. Keeping this aim and audience in mind was important when articulating the key criteria around which to design our interventions, which included feasibility, replicability, scalability, and cost-effectiveness. In each study, the intervention design process involved local and/or national stakeholders, who helped define intervention scope. We recognized that our interventions needed to be acceptable to different actors who had power to support the interventions in the future: from those in a position to fund and promote interventions, such as ministers of health, to those expected to take up the intervention in their daily practice, such as clinicians. The interventions therefore needed to fit with politically acceptable framings of the target problem and potential solutions.23 See Table 2 for an example of how this was negotiated with stakeholders in Cameroon.

TABLE 2.

Examples from Case Studies of Lessons Learned for Intervention Design. Note. CMD = community medicine distributor, RDT = rapid diagnostic test.

| Step and lesson | Example from case studies |
|---|---|
| Defining scope: consideration of intended audience for results | In the Cameroon REACT project, the initial focus of the intervention, defined in 2008, reflected concerns about appropriate use of first-line antimalarial drugs after recent policy changes to ACTs. In 2010, the project's focus was changed to appropriate diagnosis and treatment of malaria, incorporating the use of malaria RDTs. This responded to the upcoming roll-out of RDTs by the government and questions raised by them as stakeholders and the malaria community more broadly around how this could best be supported, given findings elsewhere that basic training was insufficient to support uptake of RDT results and adherence to test results. The trial therefore set out to answer specific concerns of Cameroonian policy makers by providing information about the cost-effectiveness of introducing RDTs alongside either basic training or an enhanced training intervention, compared with existing practice without RDTs. Furthermore, the initial inclusion of private sector providers was removed after feedback from the Ministry of Health that they preferred the tests first to be introduced at public and mission facilities. |
| Defining scope: consideration of level of control | For example, in the two Ugandan trials that introduced RDTs among community medicine distributors and drug shops, the objective was to learn the effect of the intervention if all providers allocated to the intervention received the full intervention. Training and follow-up supervision were delivered by members of the research team. The intention was not to produce an off-the-shelf intervention directly applicable for scale-up. By contrast, in the Nigerian trial, which introduced RDTs at public health facilities and private pharmacies and patent medicine dealers, the objective was to learn the effect of an intervention under routine conditions. Providers were invited to training sessions but were not followed up if they did not attend, and for a school-based intervention, school teachers and students were provided with intervention ideas and materials but were encouraged to undertake whatever activities they considered feasible. The intention was to produce interventions and results that would be directly applicable in practice. The latter study was closer to an effectiveness design than the former two. |
| Evidence review: scoping to identify potential intervention components in Uganda | The Ugandan PRIME project aimed to improve the quality of health care at health facilities in order to improve health outcomes and uptake of services. The target problem was identified as multifaceted, with several components of quality of care identified as targets for improvement in the project's formative research with health workers and community members. The targets were used as a focus for reviewing evidence of previous interventions. |
| Formative research: utility | Formative research prior to the Ugandan trial with CMDs involved 29 in-depth interviews with CMDs, health workers, and district health officials and 13 focus group discussions with mothers, fathers, and community leaders. The research aimed to understand existing CMDs' motivations, practices, and experiences and to explore the potential for introducing RDTs into the work and profile of these voluntary workers. The findings suggested that specific liaison personnel would be required to provide support to CMDs and that acknowledgment of their work through provision of commodities to support their roles would be required to sustain motivation. |
| Formative research: challenges with “barriers” approach | First, many of the barriers identified in our research were not amenable to change within the predefined scope of the intervention. For example, where wider policy dictated that certain providers were not allowed to sell or distribute certain drugs, such as antibiotics, we were unable to meet demand for training on treatment of nonmalarial febrile illnesses. Second, even when a barrier might be amenable to change, the research focus on barriers and problems provided little to inform positive action through intervention. For example, the finding in the Cameroon formative research that clinicians considered treatment with antimalarials to be a psychological treatment suggested a need for a change in expectations of consultation outcomes but did not in itself indicate what might be effective in achieving this. Third, the focus on barriers diverted attention from the motivation and agency of those enacting the problem behaviors; the practices desired by the intervention may not be in line with their priorities and motivations. For example, the Cameroonian clinicians' motivation for prescribing antimalarial drugs was to treat the whole patient, rather than the laboratory result or the malaria parasite. This represented a fundamental conflict between the focus of the malaria policy and of the study clinicians.79 |
| Formative research: value of appreciative enquiry | In one of our studies in Uganda, identifying the aspirations of health workers for strengthening the quality of health care they provided gave us a framework for designing the PRIME intervention, based on their desires to strengthen technical, interpersonal, and management capacities.70 |

Consideration of Level of Control

Unlike in drug trials, which have established phases with different levels of control over the intervention in each, it is often not clear whether health care improvement evaluations are attempting to establish efficacy, effectiveness, or both.1 Our involvement in both the intervention and evaluation designs of our projects meant active decision making around trade-offs between evaluating predefined interventions or interventions-in-action. Consciously defining the level of standardization for interventions and their constituents therefore emerged as important early on in the studies. Because of the different contexts of our studies and different gaps in evidence, our studies fell at different points along a spectrum from efficacy to effectiveness,24 which affected intervention design and scope (see Table 2 for an example from two community-based studies in Uganda). On the whole, we required that interventions would be standardized to some degree but allowed for varying levels of flexibility in the content of interventions as delivered by implementers. This enabled some adaptation of content to different local contexts, such as different health facilities, provider types, or schools, as has been described elsewhere.6,25 In most projects, rather than ensure that all members of the target population had the same experience of the intervention, we opted to encourage adaptation and to evaluate, through process evaluations, the fidelity, reach, dose delivered, and dose received of intervention components such as training.26,27
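The four process evaluation measures named above can be made concrete with a small calculation. The following is a minimal sketch only: the record fields, the ratio definitions, and the two example providers are illustrative assumptions, not the definitions or data used in the ACT Consortium projects. Here reach is taken as the share of allocated providers who attended at least one session, dose delivered as sessions run over sessions planned, dose received as sessions attended over sessions run, and fidelity as planned content items actually covered.

```python
from dataclasses import dataclass


@dataclass
class TrainingRecord:
    """One provider's exposure to a training component (hypothetical fields)."""
    provider_id: str
    sessions_planned: int    # sessions the protocol intended for this provider
    sessions_delivered: int  # sessions the implementers actually ran
    sessions_attended: int   # sessions the provider actually attended
    items_planned: int       # content items specified in the training manual
    items_covered: int       # content items observed being covered


def ratio(numerator: int, denominator: int) -> float:
    """Return numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def process_summary(records: list[TrainingRecord]) -> dict[str, float]:
    """Summarize reach, dose delivered, dose received, and fidelity (assumed definitions)."""
    reached = [r for r in records if r.sessions_attended > 0]
    planned = sum(r.sessions_planned for r in records)
    delivered = sum(r.sessions_delivered for r in records)
    attended = sum(r.sessions_attended for r in records)
    return {
        "reach": ratio(len(reached), len(records)),
        "dose_delivered": ratio(delivered, planned),
        "dose_received": ratio(attended, delivered),
        "fidelity": ratio(
            sum(r.items_covered for r in records),
            sum(r.items_planned for r in records),
        ),
    }


# Invented example data for two providers allocated to an intervention arm.
records = [
    TrainingRecord("provider-A", 4, 4, 3, 10, 8),
    TrainingRecord("provider-B", 4, 2, 0, 10, 5),
]
summary = process_summary(records)
```

Keeping the four measures separate, rather than collapsing them into one adherence score, mirrors the distinction drawn in the text between what implementers delivered and what the target population experienced.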

Engagement with Evaluation Options

Unlike situations where evaluations are undertaken by researchers external to the intervention, which are intended to be more objective but can suffer from post hoc interpretations of intervention intentions and procedures,28 our interventions were designed with evaluation options in mind. Our remit, driven by a desire for transferability and scalability, was to identify minimal essential interventions that could stimulate change and be scaled up in low resource settings. This essentialist agenda fitted well with the experimental paradigm in which randomized controlled trials (RCTs) are a gold standard. Seven of our studies had already planned RCTs to evaluate their interventions, with ancillary anthropological and economic studies. The use of RCTs affected the potential scope of their interventions. For example, although large-scale interventions have been hypothesized to be effective in changing behavior,29,30 such interventions would be difficult to evaluate with RCTs due to logistical and budgetary constraints.5 Similarly, multifaceted interventions have been recommended to promote behavior change,31,32 but because RCTs typically allow for a small number of comparison study arms, a trade-off emerged between potentially more effective multicomponent interventions and our ability to evaluate what worked with an RCT. Some trials compared existing practice with simple interventions such as RDTs plus instructions; others tested enhanced intervention packages, such as a series of peer group workshops. However, the more enhanced interventions that responded to the complex needs of local situations consisted of multiple and interacting components (material, human, theoretical, social, or procedural33), requiring more complex evaluations to attempt to unpick their relative effects. Some projects employed cluster designs34 due to predicted benefits of group-level intervention, and some employed additional evaluation activities to understand the process and mechanisms of change.27 For interventions with a long-intended mechanism of effect, these additional evaluation activities became especially important in understanding intervention impact.35

Step 2. Research to Inform Intervention Design

Once the overall goal and broad scope for each intervention were defined, teams were faced with numerous options for the detailed design of interventions. Three domains for research have been recommended for guiding the detail of intervention design,15 with different emphases from different disciplines: evidence review (most strongly recommended in medicine4), incorporation of theory (most strongly recommended in health psychology36), and formative research (most strongly recommended in anthropology37). All of our projects undertook research in each of these domains, often concurrently, although the lack of clear evidence or theory to guide our interventions, which required engagement with local social constructions of health care, meant that our greatest investment was in empirical formative research.

Evidence Review

Systematic reviews are advocated for use in complex intervention design, to bring together all evidence of the effectiveness of interventions for a particular outcome.4 We faced three major challenges in following this recommendation. First, there were few systematic reviews available related to interventions to improve antimalarial prescribing practice or use of malaria diagnostics. Second, undertaking such reviews ourselves would be methodologically challenging and time consuming given the number of potential interventions that could be considered to change health care practices, especially if attempting to account for heterogeneity between interventions lumped together and offering mixed results (such as supervision38 or training39) that could benefit from being split according to materials, people, theory, and procedures.40 Third, the quality of reporting of intervention components was found to be poor, as well as the quality of evaluations, as others have described.9,41 These challenges point to the importance of having readily available systematic reviews of complex interventions and their component parts in the evidence base. In lieu of this, some of our teams undertook scoping reviews “to map rapidly the key concepts underpinning a research area and the main sources and types of evidence available.”42 See Table 2 for an example from a health center improvement study in Uganda. These reviews were neither systematic nor comprehensive but were sufficient to achieve the following:

- Recognition of the breadth of interventions that have been implemented and evaluated to change a particular practice.
- A sense of the potential effectiveness of particular intervention types in given contexts.
- Specific intervention ideas and components that could be successful in our proposed intervention contexts.

Incorporation of Theory

It has been argued that interventions with strong theoretical underpinnings can lead to stronger effects, more refined theories for understanding behavior change, more replicable interventions, and more generalizable results.9,14,36 However, we could not identify clear guidance for incorporating theory into intervention design beyond the inclusion of individually oriented behavior change theoretical methods from health psychology.15,43 On initiating our attempts to incorporate theory, we entered what appeared to be a minefield of competing and conflicting ideas and definitions, whose presentation under the same term, “theory,” makes decision making challenging for would-be designers. Additional difficulty in navigating this field comes with the contradictions and debates between different political perspectives. For example, do cognitive theories and resulting interventions shift responsibility for healthy behavior to individuals, ignoring broader structural factors influencing behavior?44 Some researchers have questioned whether theories used in complex health interventions to date have offered much beyond common sense.45

Our endeavors to incorporate behavior change theories into our intervention designs involved attempting to uncover the theoretical basis of interventions identified as successful through empirical literature reviews (described above). This required some familiarity with different theoretical perspectives commonly used (a useful summary of the evolution of clinician behavior change approaches can be found in Mann46), because most often the theory, model, or hypothesis for a program was not clearly reported. It also involved building on theoretical understandings and implications of formative research (described below). For example, we found communities of practice theory47 to be a useful framing for several of our intervention designs, highlighting different ways that clinicians may learn and change their practice in groups, given our prescribing contexts where colleague relationships appeared important. We felt that our reviews of the theories behind interventions and incorporation of this into our intervention designs achieved the following:

- Recognition of the breadth and strength of different approaches that have been applied to behavior change regarding target problems or behaviors of interest.
- Identification of specific theories that may be successfully adopted to inform the approach to interventions within the proposed parameters.
- Familiarity with the use of frameworks to conceptualize the way an intervention is proposed to achieve an effect, to inform project logic models that explicitly outline intended mechanisms of change (as described below).

Formative Research

Formative research has been advocated to enable optimal intervention design by understanding the scale of and reasons for the target problem in the particular context where the intervention will take place.37,48 Most of our ACT Consortium projects undertook qualitative and quantitative research prior to intervention design, to understand the extent and nature of current practices, including perceptions and enactment of care and treatment seeking, as well as local histories of previous and existing interventions. We had anticipated formative research phases for our ACT Consortium projects to last between three and nine months, but this phase ended up requiring significantly more time and human resources for the fieldwork and analysis. This initial investment was considered valuable because the target behavior of antimalarial prescribing was known to be difficult to change.49,50 However, in future studies we would hope that this period could be condensed. One reason our formative research took a long time from conception to informing intervention design may have been our focus on current behavior as the problem. This approach emphasizes identification of barriers to desired practices, such as physical, economic, cognitive, social, or policy factors, with the assumption that release of such barriers through an intervention would lead to the emergence of desired behavior.51 Important challenges arose for our intervention designs based on this approach (see Table 2).

Our experiences suggest areas of our formative research that were more informative for intervention design and that might be most productive in future studies. First, the areas of formative research that focused on eliciting stories of past success were particularly useful. The qualitative research in some of our projects borrowed from the perspective of appreciative inquiry, which proposes that solutions already exist in organizations, and analysis of these can allow interventions to amplify what works in that context (illustrated in Table 2).52 Second, studying the landscape in which practices were embedded helped us to understand the motivations and priorities of the targets of interventions and to align intervention messages and modes of delivery with these.

Program Theory Development

The decisions that emerged from steps 1 and 2 above fed into explicit or implicit program theories of our interventions:53 the way the intervention was intended to achieve particular outcomes. This has also been described as the intended mechanism of change54 or change theory.55 These descriptions of the intended journey on which the target of an intervention is hoped to travel can usefully be distinguished from the vehicle in which the journey is intended to be taken. The latter has been variously referred to as implementation theory,53 an action model,55 and process theory56 and reflects the nuts and bolts of the intervention, discussed after step 3 (design of the intervention) below. Typically, these theories are developed post hoc, in relation to evaluation design.57 We found it useful to articulate our assumptions and rationales for interventions during intervention design.

A useful method to depict program theory was logic modeling. Logic models describe the presumed causal linkages from project start to goal attainment.58 Building on the work of others,59,60 several of our Consortium projects developed logic models containing some or all of the components listed in Table 3. Supplemental Material File 1 provides an example of a logic model from one project.

TABLE 3. Example Components of a Logic Model of an Intervention

| Component | Description |
| --- | --- |
| Inputs (resources) | Human, financial, and material resources needed for the intervention. |
| Inputs (activities of the intervention) | Specific activities in which the target audience(s) participate, such as training activities, workshops, events, and requisition of supplies. |
| Conditions | Factors among recipients and in their environment that are expected to affect the mechanism of effect of an intervention; for example, the presence of supporting resources or leaders. |
| Outputs | Measurable proximal outputs of intervention activities; for example, knowledge or motivation of a direct or indirect target audience. |
| Outcomes | Changes that occur in the target audience(s), which can be either proximal (for example, drug use behavior or patient satisfaction) or distal (for example, community health indicators). |

Our experiences of developing a logic model during intervention design suggest that key benefits are as follows:

- To assist with the choice of intervention by articulating presumed hypotheses linking intervention options to intended outcomes.

- To ensure that the intervention has internal consistency, that a mechanism of effect is predicted for each intervention component, that supporting components are accounted for in the model and therefore also in the evaluation activities, and that there are no important gaps or additional activities that are not justified within the model.

- To act as a visual aid to communication, enabling the team and wider stakeholders to reach a common and consistent understanding of the components of the intervention.

- To guide data collection for the intervention evaluation by showing where, when, and what information needs to be documented or collected.

Of note, our logic models rarely remained static. They became dynamic tools, being adapted and in turn adapting the design of both intervention and evaluation activities. Crucially, these logic models were developed, and assumptions articulated, with close attention to the specific contexts in which the interventions would take place, particularly the social, political, and economic contexts. Although we did not state the findings of formative research explicitly in our logic models, this was implicit in our processes of considering context and mechanisms of effect and could be included explicitly in future work.
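The internal-consistency check listed among the benefits above can be illustrated with a small sketch. The Python below is not drawn from any ACT Consortium project. It assumes, purely for illustration, that a logic model can be held as named components of the kinds listed in Table 3, joined by presumed causal links; the class, its methods, and the example component names are all hypothetical. It flags activities with no predicted path to any outcome and outcomes that no activity is presumed to reach.

```python
# Illustrative sketch only: component kinds loosely follow Table 3;
# the class, method names, and example components are hypothetical.
KINDS = {"resource", "activity", "condition", "output", "outcome"}


class LogicModel:
    """A logic model held as a directed graph of presumed causal links."""

    def __init__(self):
        self.kind = {}   # component name -> kind
        self.links = {}  # component name -> set of components it is presumed to lead to

    def add(self, name, kind):
        if kind not in KINDS:
            raise ValueError(f"unknown component kind: {kind}")
        self.kind[name] = kind
        self.links.setdefault(name, set())

    def link(self, source, target):
        if source not in self.kind or target not in self.kind:
            raise KeyError("both ends of a link must be added first")
        self.links[source].add(target)

    def _reachable(self, start):
        """All components that can be reached by following links from start."""
        seen, stack = set(), [start]
        while stack:
            node = stack.pop()
            for nxt in self.links[node]:
                if nxt not in seen:
                    seen.add(nxt)
                    stack.append(nxt)
        return seen

    def gaps(self):
        """Return (activities with no path to an outcome, outcomes no activity reaches)."""
        outcomes = {n for n, k in self.kind.items() if k == "outcome"}
        reached, unjustified = set(), []
        for name, kind in self.kind.items():
            if kind != "activity":
                continue
            hit = self._reachable(name) & outcomes
            if hit:
                reached |= hit
            else:
                unjustified.append(name)
        return sorted(unjustified), sorted(outcomes - reached)


# Hypothetical example: one activity and one outcome are left unconnected.
model = LogicModel()
model.add("trainer time", "resource")
model.add("prescriber workshop", "activity")
model.add("poster distribution", "activity")
model.add("prescriber knowledge", "output")
model.add("appropriate prescribing", "outcome")
model.add("patient satisfaction", "outcome")
model.link("trainer time", "prescriber workshop")
model.link("prescriber workshop", "prescriber knowledge")
model.link("prescriber knowledge", "appropriate prescribing")

unjustified, unreached = model.gaps()
print(unjustified)  # ['poster distribution']
print(unreached)    # ['patient satisfaction']
```

A check of this kind only reports whether links have been drawn; whether each presumed link is plausible in a given context remains a matter for the team's judgment and the formative research.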

Step 3. Design of the Intervention

Once research was completed to inform design options, we attempted to take an evidence-based approach to developing the detail of intervention components and materials. In most ACT Consortium projects, two activities were undertaken: (1) workshops to review the research undertaken so far and to select which specific interventions would be implemented and (2) detailed development of intervention content, activities, and materials.

Intervention Selection

We found small-scale workshops to be a useful format to bring together findings from steps 1 and 2 with input from across the research team to consider potential interventions and their feasibility and potential effectiveness. Inviting stakeholders to the workshops or to individual follow-up meetings helped to ensure that the interventions fitted with priorities and with other previous or current interventions and that policy makers and those who would be responsible for scaling up interventions felt that the interventions were relevant to their concerns. We adapted recommendations of other researchers61 to structure these workshops, which had a similar format across the projects. Broadly, the workshops included discussion and agreement of criteria for the intervention design (e.g., effective, feasible, replicable, sustainable), informed both by the parameters considered in step 1 and by the values and priorities of stakeholders attending the meeting. The workshops were an opportunity to present and discuss reviews and formative research undertaken by different members of the research team. Following this, a collaborative effort by the researchers, stakeholders, and field teams led to a long list of potential interventions that was refined to a short list that fitted the criteria set out at the start (see Supplemental Material File 2 for an example structure of our intervention design workshops).

A key challenge to note at this stage was the bringing together of disparate evidence from theory, literature, and empirical research. In some cases, we had to negotiate conflicts between these different sources or between members of study teams from different disciplinary backgrounds. For example, preferences for one or another model of behavior change could conflict and were not easy to resolve in a context where evidence was weak. In these cases, the resulting intervention component or mode of delivery represented a compromise across different disciplinary and individual preferences.

Development of Content, Activities, and Materials

ACT Consortium projects found that designing the detail of intervention materials took considerable time and resources. Activities and materials used in interventions included facilitated group learning, self-reflection tasks, participatory dramas, peer education, supervisory visits, tools for referral of patients or requisition of supplies, and distribution of posters and leaflets. Each required project teams to return to literature or to the field or to seek external expertise to identify evidence, best practice, and user perspectives on the implementation of activities. For several projects that used workshops to facilitate change, a six-step learning process was developed, based on literature of theory and best practice in adult learning (see Supplemental Material File 3).

Investment in the additional work in developing materials at this stage was considered valuable for the following reasons:

- To ensure quality of intervention activities and materials and optimize the likelihood of effect.

- To ensure consistency in intervention delivery in order that components are easily replicable.

- To enable evaluation of the intended intervention through clear documentation of the activities, materials, and procedures to be implemented.

Some ACT Consortium projects attempted a bottom-up approach to the design of some intervention materials, explicitly recognizing that target recipients are best placed to identify or refine content, messages, modes of delivery, and visual details that are likely to be effective and acceptable to end users.62-64 Participatory research was found to be valuable for these projects because it enabled them to draft material quickly for further testing and revision in intensive rounds of development (see, for example, Davies et al.65 for a description of the use of participatory research in the pharmacovigilance materials project in Uganda).

To assist with evaluation of interventions and our ability to draw conclusions from results, ACT Consortium projects recognized the need for consistency in the delivery of interventions, from the procedures followed and materials delivered to participants, to detailed manuals for workshop trainers. Such manuals required careful design of visuals and layout; for example, with the use of summary boxes and icons to assist the reader to follow activities during and after the workshop (see Supplemental Material File 4).

Implementation Theory

Step 3 gave rise to our theories and protocols for how the interventions should be delivered, which is sometimes known as “implementation theory.” This was most commonly articulated through process objectives that encompassed both content—for example, perceptions that specific workshop objectives were relevant and achieved—and procedures—for example, participant attendance at workshops or receipt of specific supplies at a particular time. These were depicted in manuals, protocols, and standard operating procedures for trainers, those delivering resources, supervisors, feedback messengers, and others engaged in the implementation process. This implementation theory was tested in process evaluations by assessing the fidelity, reach, dose delivered, dose received, effectiveness, and context of implementation.26

Step 4. Refining and Finalizing the Intervention

Once intervention activities, materials, and protocols were drafted, most of our projects undertook a period of piloting and pretesting these components in order to evaluate comprehension, acceptability, and relevance and to refine final versions. Our project teams noted the importance of investment in this stage, when a gap was revealed between the materials and procedures developed so far and the reality of delivery to and understanding of these by the target audience in practice. From across the ACT Consortium projects, investment in this stage is reported to have led to the following consequences:

- Optimization of materials and activities through pretesting to identify and adapt any components that failed to communicate intended messages, were misunderstood, or were not deemed relevant to the target audience66 (see Supplemental Material File 5).

- Ability to adapt procedures for ease and impact of delivery and receipt of the intervention during implementation; for example, decisions on grouping of participants to maximize peer interactions, timing, and transport for workshops to ensure timely participation with minimized disruption and feasibility of intervention intensity in practice.

- Opportunity to train delivery staff during pilots, with two-way review of the intervention and delivery practices, which could feed into updated protocols for implementation procedures.

- Opportunity to involve stakeholders in reviewing and revising the content and implementation of the intervention.

- Opportunity for evaluation teams to pilot tools to document the implementation of the intervention.

Piloting and pretesting involved presentation of the draft intervention component, such as a training module or leaflet, with various methods to elicit feedback from the target audience, implementers, and/or observers. Methods included structured questionnaires, focus groups, and informal discussions. Several rounds of revisions to draft materials were often made, with each new draft tested and the feedback used to improve the subsequent draft, until the quality, suitability, and comprehension of the final product were deemed sufficient to implement and evaluate formally.

CONCLUSIONS

Intervention design is a crucial, yet often neglected and black-boxed, process in the field of health care research. We believe that it is time that more attention is paid to how it is done. This article shares methodological experiences from eight ACT Consortium projects, which designed and evaluated a variety of complex health interventions to improve malaria care in five malaria-endemic countries in Africa. This adds empirical examples to existing guidance on health intervention research, which thus far has been limited in terms of detail4 and mostly focused on individual health behavior change.15,16 Our attention to the processes of intervention design shows that intervention characteristics are not merely technical but are produced in response to social, political, and economic priorities. As such, we alert others to the requirements and realities of such endeavors and encourage greater transparency in articulating these processes. The steps outlined here provide a framework with which to view processes of intervention design in research projects. Routine articulation of such processes would allow for improved assessments of transferability of interventions and inference of their potential effects to other scenarios.

The time taken to design interventions within an evidence-based paradigm was invariably longer than expected, required multiple rounds of protocols and ethics approvals, and, crucially, required a substantial proportion of overall project budgets. Funders and researchers both need to recognize that health care improvement interventions cannot be taken off the shelf; they require substantial investment to develop, and this should be planned for accordingly. Without this investment, funders and researchers risk further well-conducted evaluations that describe the lack of impact of poorly designed interventions. In situations with limited funding, those designing interventions would benefit greatly from learning about the rationales and processes of the design of other similar interventions, emphasizing a need for better reporting.

The dearth of methodological and empirical literature on the process of intervention design unnecessarily lengthened our efforts to design robust interventions. We argue strongly that the process of intervention development should be routinely reported, in the same way as trial protocols are now requested to be published. Criteria for reporting interventions have been proposed,67 largely from the health psychology field, and through a lens of interventions as behavior change techniques.68 Though such taxonomies are useful to understand what finally constituted an intervention, we propose that the process by which such interventions were arrived at is equally crucial for transferability of findings. Reporting of interventions should go beyond their final constituents, to describe the process of development, including reflection on the social, political, and economic contexts that led to that particular intervention package. Such reporting could follow the framework of steps and theory outlined in this article. Specific sections of journals where intervention designs can be published would support and promote both publication and debate over methods. Until this happens, the publication of evaluations of interventions whose process of development has not been clearly articulated will continue, with a consequent risk of replicating mistakes and reinventing wheels that could have been avoided with greater and better quality reporting of the process of intervention design.

This article highlights a paradox in evidence-based intervention research: on the one hand, basing interventions on an international and local evidence base should strengthen their effectiveness, whereas on the other hand intervention options are constrained by the requirements of evaluation design that are in place to strengthen the evidence base. This paradox represents a challenge that goes beyond the design of malaria interventions. Operating within these demands can result in the production of high-quality, well-understood interventions that have smaller impacts than less well-controlled, more flexible interventions that may be more difficult to assess for attribution of effect. This reflects a tension between desires for experimental evidence, which promises generalizability of interventions over time and space, and more contingent ways of knowing what works.69 The role that evaluation possibilities play in intervention design should be brought to the fore in debates over health care improvement. The development of best practice in designing interventions will need to go hand in hand with the development of best practice in evaluating complex health interventions in action.

DISCLOSURE OF POTENTIAL CONFLICTS OF INTEREST

The authors report no potential conflict of interest.

SUPPLEMENTAL MATERIAL

Supplemental data for this article can be accessed on the publisher's website.

REFERENCES

- [1]. Campbell M, Fitzpatrick R, Haines A, Kinmonth AL, Sandercock P, Spiegelhalter D, Tyrer P. Framework for design and evaluation of complex interventions to improve health. BMJ 2000; 321(7262): 694-696.

- [2]. Craig P, Dieppe P, Macintyre S, Michie S, Nazareth I, Petticrew M. Developing and evaluating complex interventions: the new Medical Research Council guidance. BMJ 2008; 337: 979-983.

- [3]. Medical Research Council. A framework for the development and evaluation of RCTs for complex interventions to improve health. 2000. Available at https://www.mrc.ac.uk/documents/pdf/rcts-for-complex-interventions-to-improve-health/ (accessed 6 February 2016)

- [4]. Medical Research Council. Developing and evaluating complex interventions: new guidance. 2008. Available at http://www.mrc.ac.uk/documents/pdf/complex-interventions-guidance/ (accessed 6 February 2016)

- [5]. English M, Schellenberg J, Todd J. Assessing health system interventions: key points when considering the value of randomization. Bull World Health Organ 2011; 89(12): 907-912.

- [6]. Hawe P, Shiell A, Riley T. Complex interventions: how “out of control” can a randomised controlled trial be? BMJ 2004; 328(7455): 1561-1563.

- [7]. Walach H, Falkenberg T, Fonnebo V, Lewith G, Jonas WB. Circular instead of hierarchical: methodological principles for the evaluation of complex interventions. BMC Med Res Methodol 2006; 24(6): 29.

- [8]. Paterson C, Baarts C, Launso L, Verhoef MJ. Evaluating complex health interventions: a critical analysis of the “outcomes” concept. BMC Complement Altern Med 2009; 9: 18.

- [9]. Michie S, Fixsen D, Grimshaw JM, Eccles MP. Specifying and reporting complex behaviour change interventions: the need for a scientific method. Implement Sci 2009; 4: 40.

- [10]. Kernick D. Wanted—new methodologies for health service research. Is complexity theory the answer? Fam Pract 2006; 23(3): 385-390.

- [11]. Whitty C, Chandler C, Ansah E, Leslie T, Staedke S. Deployment of ACT antimalarials for treatment of malaria: challenges and opportunities. Malar J 2008; 7(Suppl 1): S7.

- [12]. World Health Organisation. Guidelines for the treatment of malaria. 2006. Available at http://www.gov.bw/Global/MOH/TreatmentGuidelines2006.pdf (accessed 6 February 2016)

- [13]. Reyburn H, Mbakilwa H, Mwangi R, Mwerinde O, Olomi R, Drakeley C, Whitty CJ. Rapid diagnostic tests compared with malaria microscopy for guiding outpatient treatment of febrile illness in Tanzania: randomised trial. BMJ 2007; 334(7590): 403.

- [14]. Michie S, Abraham C. Interventions to change health behaviours: evidence-based or evidence-inspired? Psychol Health 2004; 19(1): 29-49.

- [15]. Kok G, Schaalma H, Ruiter RA, van Empelen P, Brug J. Intervention mapping: protocol for applying health psychology theory to prevention programmes. J Health Psychol 2004; 9(1): 85-98.

- [16]. National Institute for Health and Care Excellence. Behaviour change: individual approaches. 2014. Available at http://guidance.nice.org.uk/ph49 (accessed 6 February 2016)

- [17]. Lambert H. Accounting for EBM: notions of evidence in medicine. Soc Sci Med 2006; 62(11): 2633-2645.

- [18]. Dean K, Hunter D. New directions for health: towards a knowledge base for public health action. Soc Sci Med 1996; 42(5): 745-750.

- [19]. Reynolds J, DiLiberto D, Mangham-Jefferies L, Ansah E, Lal S, Mbakilwa H, Bruxvoort K, Webster J, Vestergaard L, Yeung S, et al. The practice of “doing” evaluation: lessons learned from nine complex intervention trials in action. Implement Sci 2014; 9(1): 75.

- [20]. Crowe S, Cresswell K, Robertson A, Huby G, Avery A, Sheikh A. The case study approach. BMC Med Res Methodol 2011; 11: 100.

- [21]. Wells M, Williams B, Treweek S, Coyle J, Taylor J. Intervention description is not enough: evidence from an in-depth multiple case study on the untold role and impact of context in randomised controlled trials of seven complex interventions. Trials 2012; 13: 95.

- [22]. Anzul M, Downing M, Ely M, Vinz R. On writing qualitative research: living by words. London and Washington, DC: The Falmer Press; 2003.

- [23]. DiLiberto DD, Staedke SG, Nankya F, Maiteki-Sebuguzi C, Taaka L, Nayiga S, Kamya MR, Haaland A, Chandler CI. Behind the scenes of the PRIME intervention: designing a complex intervention to improve malaria care at public health centres in Uganda. Glob Health Action 2015; 8: 29067.

- [24]. Ogilvie D, Cummins S, Petticrew M, White M, Jones A, Wheeler K. Assessing the evaluability of complex public health interventions: five questions for researchers, funders, and policymakers. Milbank Q 2011; 89(2): 206-225.

- [25]. Godwin M, Ruhland L, Casson I, MacDonald S, Delva D, Birtwhistle R, Lam M, Seguin R. Pragmatic controlled clinical trials in primary care: the struggle between external and internal validity. BMC Med Res Methodol 2003; 3: 28.

- [26]. Saunders RP, Evans ME, Joshi P. Developing a process-evaluation plan for assessing health promotion program implementation: a how-to guide. Health Promot Pract 2005; 6: 134-147.

- [27]. Oakley A, Strange V, Bonell C, Allen E, Stephenson J. Process evaluation in randomised controlled trials of complex interventions. BMJ 2006; 332(7538): 413-416.

- [28]. Peterson S. Assessing the scale-up of child survival interventions. Lancet 2010; 375(9714): 530-531.

- [29]. Granovetter MS. Threshold models of collective behavior. Am J Sociol 1978; 83: 1420-1443.

- [30]. Rogers EM. Diffusion of innovations. 5th ed. New York: The Free Press; 2003.

- [31]. Oxman AD, Thomson MA, Davis DA, Haynes RB. No magic bullets: a systematic review of 102 trials of interventions to improve professional practice. CMAJ 1995; 153(10): 1423-1431.

- [32]. Woodward C. Improving Provider Skills: strategies for assisting health workers to modify and improve skills: Developing quality health care—a process of change 2000. Evidence and Information for Policy. Department of Organization of Health Services Delivery, World Health Organisation; Available at http://whqlibdoc.who.int/hq/2000/WHO_EIP_OSD_00.1.pdf (accessed 6 February 2016)

- [33]. Clark AM. What are the components of complex interventions in healthcare? Theorizing approaches to parts, powers and the whole intervention. Soc Sci Med 2013; 93: 185-193.

- [34]. Cornfield J. Randomization by group: a formal analysis. Am J Epidemiol 1978; 108(2): 100-102.

- [35]. Okwaro FM, Chandler CIR, Hutchinson E, Nabirye C, Taaka L, Kayendeke M, Nayiga S, Staedke SG. Challenging logics of complex intervention trials: community perspectives of a health care improvement intervention in rural Uganda. Soc Sci Med 2015; 131(0): 10-17.

- [36]. Improved Clinical Effectiveness Through Behavioural Research Group. Designing theoretically-informed implementation interventions. Implement Sci 2006; 1: 4.

- [37]. Ulin PR, Robinson ET, Tolley EE. Qualitative methods in public health: a field guide for applied research. San Francisco: Family Health International; 2005.

- [38]. Bosch-Capblanch X, Liaqat S, Garner P. Managerial supervision to improve primary health care in low- and middle-income countries. Cochrane Database Syst Rev 2011; 9: CD006413.

- [39]. World Health Organisation Drug management program. Interventions and strategies to improve the use of antimicrobials in developing countries. 2001. Available at http://www.who.int/drugresistance/Interventions_and_strategies_to_improve_the_use_of_antim.pdf (accessed 6 February 2016)

- [40]. Squires JE, Valentine JC, Grimshaw JM. Systematic reviews of complex interventions: framing the review question. J Clin Epidemiol 2013; 66(11): 1215-1222.

- [41]. Petticrew M, Roberts H. Evidence, hierarchies, and typologies: horses for courses. J Epidemiol Community Health 2003; 57(7): 527-529.

- [42]. Mays N, Roberts E, Popay J. Synthesising research evidence. In: Studying the organization and delivery of health services: research methods, Fulop N, Allen P, Clarke A and Black N, eds. London: Routledge; 2001; 188-220.

- [43]. Mohr DC, Schueller SM, Montague E, Burns MN, Rashidi P. The behavioral intervention technology model: an integrated conceptual and technological framework for eHealth and mHealth interventions. J Med Internet Res 2014; 16(6): e146.

- [44]. Yoder PS. Negotiating relevance: belief, knowledge, and practice in international health projects. Med Anthropol Q 1997; 11(2): 131-146.

- [45]. Bhattacharyya O, Reeves S, Garfinkel S, Zwarenstein M. Designing theoretically-informed implementation interventions: fine in theory, but evidence of effectiveness in practice is needed. Implement Sci 2006; 1: 5.

- [46]. Mann KV. Theoretical perspectives in medical education: past experience and future possibilities. Med Educ 2011; 45(1): 60-68.

- [47]. Wenger E. Communities of practice: learning, meaning, and identity. New York: Cambridge University Press; 1998.

- [48]. Power R, Langhaug LF, Nyamurera T, Wilson D, Bassett MT, Cowan FM. Developing complex interventions for rigorous evaluation—a case study from rural Zimbabwe. Health Educ Res 2004; 19(5): 570-575.

- [49]. Smith LA, Jones C, Meek S, Webster J. Review: provider practice and user behavior interventions to improve prompt and effective treatment of malaria: do we know what works? Am J Trop Med Hyg 2009; 80(3): 326-335.

- [50]. Rao VB, Schellenberg D, Ghani AC. Overcoming health systems barriers to successful malaria treatment. Trends Parasitol 2013; 29(4): 164-180.

- [51]. Parker M, Harper I. The anthropology of public health. J Biosoc Sci 2006; 38(1): 1-5.

- [52]. Hammond SA. The thin book of appreciative enquiry. Plano, TX: Thin Book Publishing Co.; 1996.

- [53]. Weiss CH. Evaluation: methods for studying programs and policies. 2nd ed. Englewood Cliffs, NJ: Prentice Hall; 1998.

- [54]. Pawson R, Tilley N. Realistic evaluation. London: Sage Publications; 1997.

- [55]. Chen HT. Practical program evaluation: assessing and improving planning, implementation, and effectiveness. Thousand Oaks, CA: SAGE; 2005.

- [56]. Donaldson SI. Program theory-driven evaluation science. New York: Lawrence Erlbaum; 2007.

- [57]. Blamey AAM, MacMillan F, Fitzsimons CF, Shaw R, Mutrie N. Using programme theory to strengthen research protocol and intervention design within an RCT of a walking intervention. Evaluation 2013; 19(1): 5-23.

- [58]. Frechtling JA. Logic modeling methods in program evaluation. San Francisco: Jossey-Bass; 2007.

- [59]. Harris M. Evaluating public and community health programs. San Francisco: John Wiley & Sons; 2010.

- [60]. Huhman M, Heitzler C, Wong F. The VERB campaign logic model: a tool for planning and evaluation. Prev Chronic Dis 2004; 1(3): A11.

- [61].Ofori-Adjei D, Arhinful DK. Effect of training on the clinical management of malaria by medical assistants in Ghana. Soc Sci Med 1996; 42(8): 1169-1176. [DOI] [PubMed] [Google Scholar]

- [62].Ajayi IO, Falade CO, Bamgboye EA, Oduola AM, Kale OO. Assessment of a treatment guideline to improve home management of malaria in children in rural south-west Nigeria. Malar J 2008; 7: 24. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [63].Ajayi IO, Oladepo O, Falade CO, Bamgboye EA, Kale O. The development of a treatment guideline for childhood malaria in rural southwest Nigeria using participatory approach. Patient Educ Couns 2009; 75(2): 227-237. [DOI] [PubMed] [Google Scholar]

- [64].Haaland A. Reporting with pictures. a concept paper for researchers and health policy decision-makers Geneva: UNDP/World Bank/WHO Special Programme for Research and Training in Tropical Diseases; 2001. [Google Scholar]

- [65].Davies EC, Chandler CI, Innocent SH, Kalumuna C, Terlouw DJ, Lalloo DG, Staedke SG, Haaland A. Designing adverse event forms for real-world reporting: participatory research in Uganda. PloS One 2012; 7(3): e32704. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [66].Haaland A. UNICEF. Pretesting communication materials with special emphasis on child health and nutrition education: a manual for trainers and supervisors. Rangoon: UNICEF; 1984. [Google Scholar]

- [67].Hoffmann TC, Glasziou PP, Boutron I, Milne R, Perera R, Moher D, Altman DG, Barbour V, Macdonald H, Johnston M, et al.. Better reporting of interventions: template for intervention description and replication (TIDieR) checklist and guide. BMJ 2014; 348: g1687. [DOI] [PubMed] [Google Scholar]

- [68].Michie S, Richardson M, Johnston M, Abraham C, Francis J, Hardeman W, Eccles M, Cane J, Wood C. The behavior change technique taxonomy (v1) of 93 hierarchically clustered techniques: building an international consensus for the reporting of behavior change interventions. Ann Behav Med 2013; 46(1): 81-95. [DOI] [PubMed] [Google Scholar]

- [69].Adams V. Evidence-based global public health: subjects, profits, erasures In: When people come first critical studies in global health, Biehl J, Petryna A, eds. Princeton, NJ: Princeton University Press; 2013. [Google Scholar]

- [70].Chandler CIR, Kizito J, Taaka L, Nabirye C, Kayendeke M, Diliberto D, Staedke SG. Aspirations for quality health care in Uganda: how do we get there? Hum Resour Health 2013; 11(1): 13. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [71].Staedke SG, Chandler CIR, Diliberto D, Maiteki-Sebuguzi C, Nankya F, Webb E, Dorsey G, Kamya MR. The PRIME trial protocol: evaluating the impact of an intervention implemented in public health centres on management of malaria and health outcomes of children using a cluster-randomised design in Tororo, Uganda. Implement Sci 2013; 8(1): 114. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [72].Chandler CIR, Diliberto D, Nayiga S, Taaka L, Nabirye C, Kayendeke M, Hutchinson E, Kizito J, Maiteki-Sebuguzi C, Kamya MR, et al.. The PROCESS study: a protocol to evaluate the implementation, mechanisms of effect and context of an intervention to enhance public health centres in Tororo, Uganda. Implement Sci 2013; 8(1): 113. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [73].Nayiga S, DiLiberto D, Taaka L, Nabirye C, Haaland A, Staedke SG, Chandler CIR. Strengthening patient-centred communication in rural Ugandan health centres: a theory-driven evaluation within a cluster randomized trial. Evaluation 2014; 20(4): 471-491. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [74].Staedke SG, Maiteki-Sebuguzi C, Diliberto D, Webb E, Mugenyi L, Mbabazi E, Gonahasa S, Kigozi SP, Willey B, Dorsey G, et al. The impact of an intervention to improve malaria care in public health centers on health indicators of children in Tororo, Uganda (PRIME): a cluster-randomized trial. Am J Trop Med Hyg 2016; Epub ahead of print.

- [75].Grieve E, Diliberto D, Hansen KS, Maiteki-Sebuguzi C, Nankya F, Dorsey G, Kamya MR, Chandler CIR, Yeung S, Staedke SG. Cost-effectiveness of PRIME: an intervention implemented in public health centres on management of malaria of children under five using a cluster-randomised design in Tororo, Uganda. Forthcoming.

- [76].Wiseman V, Mangham LJ, Cundill B, Achonduh OA, Nji AM, Njei AN, Chandler C, Mbacham WF. A cost-effectiveness analysis of provider interventions to improve health worker practice in providing treatment for uncomplicated malaria in Cameroon: a study protocol for a randomized controlled trial. Trials 2012; 13: 4.

- [77].Achonduh O, Mbacham W, Mangham-Jefferies L, Cundill B, Chandler C, Pamen-Ngako J, Lele A, Ndong I, Ndive S, Ambebila J, et al. Designing and implementing interventions to change clinicians' practice in the management of uncomplicated malaria: lessons from Cameroon. Malar J 2014; 13(1): 204.

- [78].Mangham LJ, Cundill B, Achonduh OA, Ambebila JN, Lele AK, Metoh TN, Ndive SN, Ndong IC, Nguela RL, Nji AM, et al. Malaria prevalence and treatment of febrile patients at health facilities and medicine retailers in Cameroon. Trop Med Int Health 2012; 17(3): 330-342.

- [79].Chandler CIR, Mangham L, Njei AN, Achonduh O, Mbacham WF, Wiseman V. “As a clinician, you are not managing lab results, you are managing the patient”: how the enactment of malaria at health facilities in Cameroon compares with new WHO guidelines for the use of malaria tests. Soc Sci Med 2012; 74(10): 1528-1535.

- [80].Mbacham WF, Mangham-Jefferies L, Cundill B, Achonduh OA, Chandler CIR, Ambebila JN, Nkwescheu A, Forsah-Achu D, Ndiforchu V, Tchekountouo O, et al. Basic or enhanced clinician training to improve adherence to malaria treatment guidelines: a cluster-randomised trial in two areas of Cameroon. Lancet Glob Health 2014; 2(6): e346-e358.

- [81].Mangham-Jefferies L, Wiseman V, Achonduh OA, Drake TL, Cundill B, Onwujekwe O, Mbacham W. Economic evaluation of a cluster randomized trial of interventions to improve health workers' practice in diagnosing and treating uncomplicated malaria in Cameroon. Value Health 2014; 17(8): 783-791.

- [82].Mangham LJ, Cundill B, Ezeoke O, Nwala E, Uzochukwu BS, Wiseman V, Onwujekwe O. Treatment of uncomplicated malaria at public health facilities and medicine retailers in south-eastern Nigeria. Malar J 2011; 10: 155.

- [83].Wiseman V, Ogochukwu E, Emmanuel N, Lindsay JM, Bonnie C, Jane E, Eloka U, Benjamin U, Obinna O. A cost-effectiveness analysis of provider and community interventions to improve the treatment of uncomplicated malaria in Nigeria: study protocol for a randomized controlled trial. Trials 2012; 13: 81.

- [84].Ezeoke OP, Ezumah NN, Chandler CC, Mangham-Jefferies LJ, Onwujekwe OE, Wiseman V, Uzochukwu BS. Exploring health providers' and community perceptions and experiences with malaria tests in south-east Nigeria: a critical step towards appropriate treatment. Malar J 2012; 11: 368.

- [85].Onwujekwe O, Mangham-Jefferies L, Cundill B, Alexander N, Langham J, Ibe O, Uzochukwu B, Wiseman V. Effectiveness of provider and community interventions to improve treatment of uncomplicated malaria in Nigeria: a cluster randomized controlled trial. PloS One 2015; 10(8): e0133832.

- [86].Chandler CI, Meta J, Ponzo C, Nasuwa F, Kessy J, Mbakilwa H, Haaland A, Reyburn H. The development of effective behaviour change interventions to support the use of malaria rapid diagnostic tests by Tanzanian clinicians. Implement Sci 2014; 9: 83.

- [87].Cundill B, Mbakilwa H, Chandler CI, Mtove G, Mtei F, Willetts A, Foster E, Muro F, Mwinyishehe R, Mandike R, et al. Prescriber and patient-oriented behavioural interventions to improve use of malaria rapid diagnostic tests in Tanzania: facility-based cluster randomised trial. BMC Med 2015; 13(1): 118.

- [88].Hutchinson E, Reyburn H, Hamlyn E, Long K, Meta J, Mbakilwa H, Chandler C. Bringing the state into the clinic? Incorporating the rapid diagnostic test for malaria into routine practice in Tanzanian primary healthcare facilities. Glob Public Health 2015: 1-15.

- [89].Pace C, Lal S, Ewing V, Mbonye AK, Ndyomugyenyi R, Lalloo DG, Terlouw DJ. Facilitating harms data capture by non-clinicians utilizing a novel data collection tool developed by the ACT Consortium. Results of testing in the field. Forthcoming.

- [90].Ndyomugyenyi R, Magnussen P, Lal S, Hansen KS, Clarke S. Impact of malaria rapid diagnostic tests by community health workers on appropriate targeting of artemisinin-based combination therapy: randomized trials in two contrasting areas of high and low malaria transmission in south western Uganda. Forthcoming.

- [91].Hansen KS, Pedrazzoli D, Mbonye A, Clarke S, Cundill B, Magnussen P, Yeung S. Willingness-to-pay for a rapid malaria diagnostic test and artemisinin-based combination therapy from private drug shops in Mukono District, Uganda. Health Policy Plan 2013; 28(2): 185-196.

- [92].Chandler CIR, Hall-Clifford R, Asaph T, Pascal M, Clarke S, Mbonye AK. Introducing malaria rapid diagnostic tests at registered drug shops in Uganda: limitations of diagnostic testing in the reality of diagnosis. Soc Sci Med 2011; 72(6): 937-944.

- [93].Mbonye AK, Ndyomugyenyi R, Turinde A, Magnussen P, Clarke S, Chandler C. The feasibility of introducing rapid diagnostic tests for malaria in drug shops in Uganda. Malar J 2010; 9: 367.

- [94].Mbonye A, Magnussen P, Chandler C, Hansen K, Lal S, Cundill B, Lynch C, Clarke S. Introducing rapid diagnostic tests for malaria into drug shops in Uganda: design and implementation of a cluster randomized trial. Trials 2014; 15(1): 303.

- [95].Mbonye AK, Magnussen P, Lal S, Hansen KS, Cundill B, Chandler C, Clarke SE. A cluster randomised trial introducing rapid diagnostic tests into registered drug shops in Uganda: impact on appropriate treatment of malaria. PloS One 2015; 10(7): e0129545.

- [96].Baiden F, Owusu-Agyei S, Bawah J, Bruce J, Tivura M, Delmini R, Gyaase S, Amenga-Etego S, Chandramohan D, Webster J. An evaluation of the clinical assessments of under-five febrile children presenting to primary health facilities in rural Ghana. PloS One 2011; 6(12): e28944.

- [97].Baiden F, Owusu-Agyei S, Okyere E, Tivura M, Adjei G, Chandramohan D, Webster J. Acceptability of rapid diagnostic test-based management of malaria among caregivers of under-five children in rural Ghana. PloS One 2012; 7(9): e45556.

- [98].Webster J, Baiden F, Bawah J, Bruce J, Tivura M, Delmini R, Amenga-Etego S, Chandramohan D, Owusu-Agyei S. Management of febrile children under five years in hospitals and health centres of rural Ghana. Malar J 2014; 13(1): 261.

- [99].Abraham C, Michie S. Coding manual to identify behaviour change techniques in behaviour change intervention descriptions. 2007. Available at http://www.cs.uu.nl/docs/vakken/b3ii/Intelligente%20Interactie%20literatuur/College%203.%20Coaching%20Systemen%20%28Beun%29/Abraham%20&%20Michie%20-%20Coding%20Manual%20to%20Identify%20Behaviour%20Change%20Techniques.pdf (accessed 6 February 2016)

Associated Data
