Abstract

We propose a framework for the linear prediction of a multi-way array (i.e., a tensor) from another multi-way array of arbitrary dimension, using the contracted tensor product. This framework generalizes several existing approaches, including methods to predict a scalar outcome from a tensor, a matrix from a matrix, or a tensor from a scalar. We describe an approach that exploits the multiway structure of both the predictors and the outcomes by restricting the coefficients to have reduced CP-rank. We propose a general and efficient algorithm for penalized least-squares estimation, which allows for a ridge (L2) penalty on the coefficients. The objective is shown to give the mode of a Bayesian posterior, which motivates a Gibbs sampling algorithm for inference. We illustrate the approach with an application to facial image data. An R package is available at https://github.com/lockEF/MultiwayRegression.

Keywords: Multiway data, PARAFAC/CANDECOMP, ridge regression, reduced rank regression

1 Introduction

For many applications data are best represented in the form of a tensor, also called a multi-way or multi-dimensional array, which extends the familiar two-way data matrix (Samples × Variables) to higher dimensions. Tensors are increasingly encountered in fields that require the automated collection of high-throughput data with complex structure. For example, in molecular “omics” pro ling it is now common to collect high-dimensional data over multiple subjects, tissues, fluids or time points within a single study. For neuroimaging modalities such as fMRI and EEG, data are commonly represented as multi-way arrays with dimensions that can represent subjects, time points, brain regions, or frequencies. In this article we consider an application to a collection of facial images from the Faces in the Wild database (Learned-Miller et al., 2016), which when properly aligned to a 90 90 pixel grid can be represented as a 4-way array with dimension Faces × X × Y × Colors, where X and Y give the horizontal and vertical location of each pixel.

This article concerns the prediction of an array of arbitrary dimension Q1 × ⋯ QM from another array of arbitrary dimension P1 × ⋯ PL. For N training observations, this involves an outcome array and a predictor array . For example, we consider the simultaneous prediction of several describable attributes for faces from their images (Kumar et al., 2009), which requires predicting the array from . Another potential application is the prediction of EEG from fMRI data (see De Martino et al. (2011)), or fMRI from EEG data (see Jansen et al. (2012)). The spatial resolution of fMRI is richer than that for EEG, and the temporal resolution of EEG is richer than that for fMRI. Thus, an understanding of the predictive relationship between the two datasets can be used to infer missing temporal information for fMRI (e.g., the order in which certain region activations occur) and missing spatial information for EEG (e.g., the exact spatial location of certain electrical signals) (Huster et al., 2012). Yet another potential application is the prediction of gene expression across multiple tissues from other genomic variables (see Ramasamy et al. (2014)). The association between genetic polymorphisms with gene expression is an important first step to understanding the genetic etiology of a disease, and such associations are known to differ across tissue types (GTEx Consortium, 2015).

The task of tensor-on-tensor regression extends a growing literature on the predictive modeling of tensors under different scenarios. Such methods commonly rely on tensor factorization techniques (Kolda and Bader, 2009), which reconstruct a tensor using a small number of underlying patterns in each dimension. Tensor factorizations extend well known techniques for a matrix, such as the singular value decomposition and principal component analysis, to higher-order arrays. A classical and straightforward technique is the PARAFAC/CANDECOMP (CP) (Harshman, 1970) decomposition, in which the data are approximated as a linear combination of rank-1 tensors. An alternative is the Tucker decomposition (Tucker, 1966), in which a tensor is factorized into basis vectors for each dimension that are combined using a smaller core tensor. The CP factorization is a special case of the Tucker factorization wherein the core tensor is diagonal. Such factorization techniques are useful to account for and exploit multi-way dependence and reduce dimensionality.

Several methods have been developed for the prediction of a scalar outcome from a tensor of arbitrary dimension: and . Zhou et al. (2013) and Guo et al. (2012) propose tensor regression models for a single outcome in which the coefficient array is assumed to have a low-rank CP factorization. The proposed framework in Zhou et al. (2013) extends to generalized linear models and allows for the incorporation of sparsity-inducing regularization terms. An analogous approach in which the coefficients are assumed to have a Tucker structure is described by Li et al. (2013). Several methods have also been developed for the classification of multiway data (categorical Y : N × 1) (Tao et al., 2007; Wimalawarne et al., 2016; Lyu et al.), extending well-known linear classification techniques under the assumption that model coefficients have a factorized structure.

There is also a wide literature on the prediction of a matrix from another matrix, and . A classical approach is reduced rank regression, in which the P × Q coefficient matrix is restricted to have low rank (Izenman, 1975; Mukherjee and Zhu, 2011). Miranda et al. (2015) describe a Bayesian formulation for regression models with multiple outcome variables and multiway predictors ( and ), which is applied to a neuroimaging study. Conversely, tensor response regression models have been developed to predict a multiway outcome from vector predictors ( , ). Sun and Li (2016) propose a tensor response regression wherein a multiway outcome is assumed to have a CP factorization, and Li and Zhang (2016) propose a tensor response regression wherein a multiway outcome is assumed to have a Tucker factorization with weights determined by vector-valued predictors. For a similar context Lock and Li (2016) describe a supervised CP factorization, wherein the components of a CP factorization are informed by vector-valued covariates. Hoff (2015) extend a bilinear regression model for matrices to the prediction of an outcome tensor from a predictor tensor with the same number of modes (e.g., and ) via a Tucker product and describe a Gibbs sampling approach to inference.

The above methods address several important tasks, including scalar-on-tensor regression, vector-on-vector regression, vector-on-tensor regression and tensor-on-vector regression. However, there is a lack of methodology to addresses the important and increasingly relevant task of tensor-on-tensor regression, i.e., predicting an array of arbitrary dimension from another array of arbitrary dimension. This scenario is considered within a comprehensive theoretical study of convex tensor regularizers Raskutti and Yuan (2015), including the tensor nuclear norm. However, they do not discuss estimation algorithms for this context, and computing the tensor nuclear norm is NP-hard (Sun and Li, 2016; Friedland and Lim, 2014). In this article we propose a contracted tensor product for the linear prediction of a tensor from a tensor , where both and have arbitrary dimension, through a coefficient array of dimension P1 × ⋯ × PL × Q1 × ⋯ × QM. This framework is shown to accommodate all valid linear relations between the variates of and the variates of . In our implementation is assumed to have reduced CP-rank, a simple restriction which simultaneously exploits the multi-way structure of both and by borrowing information across the different modes and reducing dimensionality. We propose a general and efficient algorithm for penalized least-squares estimation, which allows for a ridge (L2) penalty on the coefficients. The objective is shown to give the mode of a Bayesian posterior, which motivates a Gibbs sampling algorithm for inference.

The primary novel contribution of this article is a framework and methodology that allows for tensor-on-tensor regression with arbitrary dimensions. Other novel contributions include optimization under a ridge penalty on the coefficients and Gibbs sampling for inference, and these contributions are also relevant to the more familiar special cases of tensor regression (scalar-on-tensor), reduced rank regression (vector-on-vector), and tensor response regression (tensor-on-vector).

2 Notation and Preliminaries

Throughout this article bold lowercase characters (a) denote vectors, bold uppercase characters (A) denote matrices, and uppercase blackboard bold characters ( ) denote multi-way arrays of arbitrary dimension.

Define a K-way array (i.e., a Kth-order tensor) by , where Ik is the dimension of the kth mode. The entries of the array are defined by indices enclosed in square brackets, , where ik ∈ {1, …, Ik} for k ∈ 1, …, K.

For vectors a1, …, aK of length I1, …, IK, respectively, define the outer product

as the K-way array of dimensions I1 × ⋯ × IK, with entries

The outer product of vectors is defined to have rank 1. For matrices A1, …, AK of the same column dimension R, we introduce the notation

| (1) |

where akr is the rth column of Ak. This gives a CP factorization, and an array that can be expressed in the form (1) is defined to have rank R.

The vectorization operator vec(·) transforms a multiway array to a vector containing the array entries. Specifically, is a vector of length where

It is often useful to represent an array in matrix form via unfolding it along a given mode. For this purpose we let the rows of A(k) : Ik × (∏j≠k Ij) give the vectorized versions of each subarray in the kth mode.

For two multiway arrays and we define the contracted tensor product

by

An analogous definition of the contracted tensor product, with slight differences in notation, is given in Bader and Kolda (2006). Note that for matrices A : I × P and B : P × Q,

and thus the contracted tensor product extends the usual matrix product to higher-order operands.

3 General framework

Consider predicting a multiway array from a multiway array with the model

| (2) |

where is a coefficient array and is an error array. The first L modes of contract the dimensions of that are not in , and the last M modes of expand along the modes in that are not in . The predicted outcome indexed by (q1, …, qM) is

| (3) |

for observations n = 1, …, N. In (2) we forgo the use of an intercept term for simplicity, and assume that and are each centered to have mean 0 over all their values.

Let P be the total number of predictors for each observation, , and Q be the total number of outcomes for each observation, . Equation (2) can be reformulated by rearranging the entries of , , and into matrix form

| (4) |

where Y(1) : N × Q, X(1) : N × P, and E(1) : N × Q are the arrays , and unfolded along the first mode. The columns of B : P × Q vectorize the first L modes of (collapsing ), and the rows of B vectorize the last M modes of (expanding to ):

From its matrix form (4) it is clear that the general framework (2) supports all valid linear relations between the P variates of and the Q variates of .

4 Estimation criteria

Consider choosing to minimize the sum of squared residuals

The unrestricted solution for is given by separate OLS regressions for each of the Q outcomes in , each with design matrix X(1); this is clear from (4), where the columns of B are given by separate OLS regressions of X(1) on each column of Y(1). Therefore, the unrestricted solution is not well-defined if Q > N or more generally if X(1) is not of full column rank. The unrestricted least squares solution may be undesirable even if it is well-defined, as it does not exploit the multi-way structure of or , and requires fitting

| (5) |

unknown parameters. Alternatively, the multi-way nature of and suggests a low-rank solution for . The rank R solution can be represented as

| (6) |

where Ul : Pl × R for l = 1, …, L and Vm : Qm × R for m = 1, …, M. The dimension of this model is

| (7) |

which can be a several order reduction from the unconstrained dimensionality (5). Moreover, the reduced rank solution allows for borrowing of information across the different dimensions of both and . However, the resulting least-squares solution

| (8) |

is still prone to over-fitting and instability if the model dimension (7) is high relative to the number of observed outcomes, or if the predictors have multicollinearity that is not addressed by the reduced rank assumption (e.g., multicollinearity within a mode). High-dimensionality and multicollinearity are both commonly encountered in application areas that involve multi-way data, such as imaging and genomics. To address these issues we incorporate an L2 penalty on the coefficient array ,

| (9) |

where λ controls the degree of penalization. This objective is equivalent to that of ridge regression when predicting a vector outcome from a matrix , where necessarily R = 1.

5 Identifiability

The general predictive model (2) is identifiable for , in that implies

for some . To show this, note that if is an array with 1 in position [p1, …, pL] and zeros elsewhere, then

However, the resulting components U1, …, UL, V1, …, VM in the factorized representation of (6) are not readily identified. Conditions for their identifiability are equivalent to conditions for the identifiability of the CP factorization, for which there is an extensive literature. To account for arbitrary scaling and ordering of the components, we impose the restrictions

1. ‖u1r‖ = ⋯ = ‖uLr‖ = ‖v1r‖ = ⋯ = ‖vMr‖ for r = 1 …, R, and

2. ‖u11‖ ≥ ‖u12‖ ≥ ⋯ ≥ ‖u1R‖.

The above restrictions are generally enough to ensure identifiability when L+M ≥ 3 under verifiable conditions (Sidiropoulos and Bro, 2000). If L + M = 2 (i.e., when predicting a matrix from a matrix, a 3-way array from a vector, or a vector from a 3-way array), then is a matrix and we require additional orthogonality restrictions:

3. for all r ≠ r*, or for all r ≠ r*.

In practice these restrictions can be imposed post-hoc, after the estimation procedure detailed in Section 7. For L + M ≥ 3, restrictions (a) and (b) can be imposed via a re-ordering and re-scaling of the components. For L + M = 2, components that satisfy restrictions (a), (b) and (c) can be identified via a singular value decomposition of .

6 Special cases

Here we describe other methods that fall within the family given by the reduced rank ridge objective (9). When predicting a vector from a matrix (Q = 0, P = 1), this framework is equivalent to standard ridge regression (Hoerl and Kennard, 1970), which is equivalent to OLS when λ = 0. Moreover, a connection between standard ridge regression and continuum regression (Sundberg, 1993) implies that the coefficients obtained through ridge regression are proportional to partial least squares regression for some λ = λ*, and the coefficients are proportional to the principal components of when λ → ∞.

When predicting a matrix from another matrix (Q = 1, P = 1), the objective given by (9) is equivalent to reduced rank regression (Izenman, 1975) when λ = 0. For arbitrary λ the objective is equivalent to a recently proposed reduced rank ridge regression (Mukherjee and Zhu, 2011).

When predicting a scalar from a tensor of arbitrary dimension (Q = 0, arbitrary P), (9) is equivalent to tensor ridge regression (Guo et al., 2012). Guo et al. (2012) use an alternating approach to estimation but claim that the subproblem for estimation of each Ul cannot be computed in closed form and resort to gradient style methods instead. On the contrary, our optimization approach detailed in Section 7 does give a closed form solution to this subproblem (11). Alternatively, Guo et al. (2012) suggest the separable form of the objective,

| (10) |

This separable objective is also used by Zhou et al. (2013), who consider a power family of penalty functions for predicting a vector from a tensor using a generalized linear model; their objective for a Gaussian response under L2 penalization is equivalent to (10). The solution of the separable L2 penalty depends on arbitrary scaling and orthogonality restrictions for identifiability of the Ul’s. For example, the separable penalty (10) is equivalent to the non-separable L2 penalty (9) if the columns of U2, …, UL are restricted to be orthonormal.

Without scale restrictions on the columns of Ul, the solution to the separable L2 penalty is equal to the solution for the non-separable penalty for L = 2, where defines the nuclear norm (i.e., the sum of the singular values of ). This interesting result is given explicitly in Proposition 1, and its proof is given in Appendix C.

Proposition 1

For , where the columns of U1 and U2 are orthogonal,

7 Optimization

We describe an iterative procedure to estimate that alternatingly solves the objective (9) for the component vectors in each mode, {U1, …, UL, V1, …, VM}, with the others fixed.

7.1 Least-squares

Here we consider the case without ridge regularization, λ = 0, wherein the component vectors in each mode are updated via separate OLS regressions.

To simplify notation we describe the procedure to update U1 with {U2, …, UL, V1, …, VM} fixed. The procedure to update each of U2, …, UL is analogous, because the loss function is invariant under permutation of the L modes of .

Define ℂr : N × P1 × Q1 × ⋯ × QM to be the contracted tensor product of and the r’th component of the CP factorization without U1:

Unfolding ℂr along the dimension corresponding to P1 gives the design matrix to predict vec(Y) for the r’th column of U1, Cr : NQ × P1. Thus, concatenating these matrices to define C : NQ × RP1 by C = [C1 … CR] gives the design matrix for all of the entries of U1, which are updated via OLS:

| (11) |

For the outcome modes we describe the procedure to update VM with {U1, …, UL, V1, …, VM−1} fixed. The procedure to update each of V1, …, VL−1 is analogous, because the loss function is invariant under permutation of the M modes of .

Let be unfolded along the mode corresponding to QM. Define so that the r’th column of D, dr, gives the entries of the contracted tensor product of and the r’th component of the CP factorization without VM:

The entries of VM are then updated via QM separate OLS regressions:

| (12) |

7.2 Ridge-regularization

For λ > 0, note that the objective (9) can be equivalently represented as an unregularized least squares problem with modified predictor and outcome arrays and :

Here is the concatenation of and a tensor wherein each P1 × ⋯ PL dimensional slice has for a single entry and zeros elsewhere; is the concatenation of and a P × Q1 × ⋯ QM tensor of zeros. Unfolding and along the first dimension yields the matrices

where I is the identity matrix and 0 is a matrix of zeros.

Thus, one can optimize the objective (9) via alternating least squares by replacing for and for in the least-squares algorithm of Section 7.1. However, and can be very large for high-dimensional . Thankfully, straightforward tensor algebra shows that this is equivalent to a direct application of algorithm in Section 7.1 to the original data and , with computationally efficient modifications to the OLS updating steps (11) and (12). The updating step for U1 (11) is

| (13) |

where · defines the dot product and ⊗ defines the Kronecker product. The updating step for VM (12) is

| (14) |

This iterative procedure is guaranteed to improve the regularized least squares objective (9) at each sub-step. The algorithm is “multi-convex”, i.e., the objective is convex and the parameter domains that are iteratively optimized are each convex. However, this is not enough to guarantee convergence to a global optimum, and the full space of low-rank tensors is not a convex space. The algorithm also may not converge to a local optimum, in that for a given distance metric it is possible that there exists alternative solutions within an infinitesimally small ε-ball of the converged solution with a better objective (see (Chen et al., 2012) for a discussion on iterative tensor optimization and stationary points). However, it is straightforward that the algorithm is guaranteed to converge to a coordinate-wise optimum, wherein the solution cannot be improved by changing the parameters in any single dimension. Higher levels of regularization (λ → ∞) tend to convexify the objective and facilitate convergence to a global optimum, a similar phenomenon is observed in Zhou et al. (2013). In practice we find that robustness to initial values and local optima is improved by a tempered regularization, starting with larger values of λ that gradually decrease to the desired level of regularization.

7.3 Tuning parameter selection

Selection of λ and R (if unknown) can be accomplished by assessing predictive accuracy with a training and test set, as illustrated in Section 10. More generally, these parameters can be selected via K-fold cross-validation. This approach has the advantage of being free of model assumptions, and is assessed via a simulation study in Appendix F. Alternatively, it is straightforward to compute the deviance information criterion (DIC) (Spiegelhalter et al., 2014) for posterior draws under the Bayesian inference framework of Section 8 and use this as a model-based heuristic to select both λ and R.

8 Inference

In the previous sections we have considered optimizing a given criteria for point estimation, without specifying a distributional form for the data or even a philosophical framework for inference. Indeed, the estimator given by the objective (9) is consistent under a wide variety of distributional assumptions, including those that allow for correlated responses or predictors. See Appendix A for more details on its consistency.

For inference and uncertainty quantification for this point estimate, we propose a Markov chain Monte Carlo (MCMC) simulation approach. This approach is theoretically motivated by the observation that (9) gives the mode of a Bayesian probability distribution. There are several other reasons to use MCMC simulation for inference in this context, rather than (e.g.,) asymptotic normality of the global optimizer under an assumed likelihood model (see Zhou et al. (2013) and Zhang et al. (2014) for related results). The algorithm in Section 7 may converge to a local minimum, which can still be used as a starting value for MCMC. Moreover, our approach gives a framework for full posterior inference on over its rank R support, the conditional mean for observed responses, and the predictive distribution for the response array given new realizations of the predictor array without requiring the identifiability of θ = {U1, …, UL, V1, …, VM}. Inference for θ is also possible under the conditions of Section 5.

If the errors have independent N(0, σ2) entries, the log-likelihood of implied by the general model (2) is

and thus the unregularized objective (8) gives the maximum likelihood estimate under the restriction . For λ > 0, consider a prior distribution for that is proportional to the spherical Gaussian distribution with variance σ2/λ over the support of rank R tensors:

| (15) |

The log posterior distribution for is

| (16) |

where , which is maximized by (9).

Under the factorized form (6) the full conditional distributions implied by (16) for each of U1, …, UL, V1, …, VM are multivariate normal. For example, the full conditional for U1 is

where μ1 is the right hand side of (13) and

where C is defined as in Section 7.1. The full conditional for VM is

where μL+M is given by the right hand side of (14) and

The derivations of the conditional means and variances are given in Appendix B. When λ = 0 the full conditionals correspond to a at prior on , for , and the posterior mode is given by the unregularized objective (8).

In practice we use a flexible Jeffrey’s prior for σ2, pr(σ2) ∝ 1=σ2, which leads to an inverse-gamma (IG) full conditional distribution,

| (17) |

We simulate dependent samples from the marginal posterior distribution of by Gibbs sampling from the full conditionals of U1, …, UL, V1, …, VM, and σ2:

-

Initialize by the posterior mode (9) using the procedure in Section 7.

For samples t = 1, …, T, repeat (b) and (c):

Draw σ2(t) from as in (17).

- Draw , as follows:

For the above algorithm U1, …, UL, V1, …, VM serve as a parameter augmentation to facilitate sampling for . Interpreting the marginal distribution of each of the or separately requires careful consideration of their identifiability (see Section 5). One approach is to perform a post-hoc transformation of the components at each sampling iteration

where satisfy given restrictions for identifiability.

For Ñ out-of-sample observations with predictor array , the point prediction for the responses is

| (18) |

where is given by (9). Uncertainty in this prediction can be assessed using samples from the posterior predictive distribution of :

| (19) |

where is generated with independent N(0, σ2(t)) entries.

9 Simulation study

9.1 Approach

We conduct a simulation study to predict a three-way array from another three-way array under various conditions. We implement a fully crossed factorial simulation design with the following manipulated conditions:

Rank R = 0, 1, 2, 3, 4 or 5 (6 levels)

Sample size N = 30 or 120 (2 levels)

Signal-to-noise ratio SNR = 1 or 5 (2 levels).

For each of the 24 scenarios, we simulate data as follows:

Generate : N × P1 × P2 with independent N(0, 1) entries.

Generate Ul : Pl × R for l = 1, …, L and Vm : Qm × R for m = 1, …, M, each with independent N(0, 1) entries.

Generate error : N × Q1 × Q2 with independent N(0, 1) entries.

- Set , where

and c is the scalar giving

We x the dimensions p1 = 15, p2 = 20, q1 = 5, q2 = 10, and generate 10 replicated datasets as above for each of the 24 scenarios, yielding 240 simulated datasets. For each simulated dataset, we estimate as in Section 7 under each combination of the following parameters:

Assumed rank , 2, 3, 4 or 5 (5 levels)

Regularization term λ = 0, 0:5, 1, 5 or 50 (5 levels).

For each of the 240 simulated datasets and 5 5 = 25 estimation procedures, we compute the relative out-of-sample prediction error of the resulting coefficient estimate . This is done empirically by generating a new dataset with Ñ = 500 observations:

where and have independent N(0, 1) entries. The relative prediction error (RPE) for these test observations is

| (20) |

Symmetric 95% credible intervals are created for each value of using T = 1000 outcome arrays simulated from the posterior (19).

9.2 Results

First we consider the results for those cases with no signal, R = 0, where the oracle RPE is 1. The marginal mean RPE across the levels of N, λ, and are shown in Table 1. Overall, simulations with a higher training sample size N resulted in lower RPE, estimation with higher regularization parameter λ resulted in lower RPE, and estimation with higher assumed rank resulted in higher RPE. These results are not surprising, as a lower sample size, higher assumed rank and less regularization all encourage over-fitting.

Table 1.

Marginal mean RPE for no signal, R = 0.

| Training samples |

N = 30 1.48 |

N = 120 1.08 |

|||

|---|---|---|---|---|---|

| Regularization | λ = 0 2.00 |

λ = 0.5 1.14 |

λ = 1 1.13 |

λ = 5 1.08 |

λ = 50 1.02 |

|

| |||||

| Assumed rank |

1.04 |

1.12 |

1.20 |

1.32 |

1.70 |

Table 2 shows the effect of the regularization parameter λ on the accuracy of the estimated model, in terms of RPE and coverage rates, for different scenarios. As expected, prediction error is generally improved in scenarios with a higher training sample size and higher signal-to-noise ratio. Higher values of λ generally improve predictive performance when the sample size and signal-to-noise ratio are small, as these scenarios are prone to over-fitting without regularization. However, large values of λ can lead to over-shrinkage of the estimated coefficients and introduce unnecessary bias, especially in scenarios that are less prone to over-fitting. Coverage rates of the 95% credible intervals are generally appropriate, especially with a higher training sample size. However, for the scenario with low sample size and high signal (N = 30, λ = 120) coverage rates for moderate values of λ are poor, as inference is biased toward smaller values of .

Table 2.

The top panel shows mean RPE by regularization for different scenarios using correct assumed ranks. The bottom panel shows the coverage rate for 95% credible intervals, and their mean length relative to the standard deviation of .

| RPE (std error) | λ = 0 | λ = 0.5 | λ = 1 | λ = 5 | λ = 50 |

|---|---|---|---|---|---|

| N = 120, SNR = 5 | 0.04 (0.01) | 0.04 (0.01) | 0.04 (0.01) | 0.05 (0.01) | 0.20 (0.01) |

| N = 120, SNR = 1 | 0.52 (0.01) | 0.52 (0.01) | 0.52 (0.01) | 0.52 (0.01) | 0.59 (0.01) |

| N = 30, SNR = 5 | 1.64 (0.12) | 0.74 (0.05) | 0.70 (0.04) | 0.63 (0.02) | 0.77 (0.01) |

| N = 30, SNR = 1 | 1.90 (0.15) | 1.07 (0.04) | 1.03 (0.04) | 0.92 (0.02) | 0.91 (0.01) |

|

| |||||

| Coverage (length) | λ = 0 | λ = 0.5 | λ = 1 | λ = 5 | λ = 50 |

|

| |||||

| N = 120, SNR = 5 | 0.95 (0.77) | 0.95 (0.77) | 0.95 (0.77) | 0.94 (0.80) | 0.91 (1.43) |

| N = 120, SNR = 1 | 0.95 (2.79) | 0.95 (2.79) | 0.95 (2.79) | 0.95 (2.79) | 0.94 (2.90) |

| N = 30, SNR = 5 | 0.91 (3.10) | 0.68 (1.11) | 0.65 (1.04) | 0.68 (1.10) | 0.84 (2.10) |

| N = 30, SNR = 1 | 0.98 (4.75) | 0.95 (3.56) | 0.94 (3.36) | 0.91 (3.05) | 0.91 (3.18) |

Table 3 illustrates the effects of rank misspecification on performance, under the scenario with N = 120, SNR= 1 and no regularization (λ = 0). For each possible value of the true rank R = 1, …, 5, the RPE is minimized when the assumed rank is equal to the true rank. Predictive performance is generally more robust to assuming a rank higher than the true rank than it is to assuming a rank lower than the true rank.

Table 3.

Mean RPE by assumed rank for different true ranks for N = 120, S2N= 1, and λ = 0

|

|

|

|

|

|

||||||

|---|---|---|---|---|---|---|---|---|---|---|

| R = 1 | 0.50 | 0.54 | 0.55 | 0.56 | 0.57 | |||||

| R = 2 | 0.64 | 0.50 | 0.53 | 0.53 | 0.54 | |||||

| R = 3 | 0.71 | 0.58 | 0.53 | 0.55 | 0.57 | |||||

| R = 4 | 0.78 | 0.67 | 0.57 | 0.51 | 0.53 | |||||

| R = 5 | 0.81 | 0.68 | 0.61 | 0.55 | 0.53 |

See Appendix D for additional simulation results when the predictors or response are correlated, and see Appendix E for a comparison with ad-hoc approaches that do not account for low rank dependence in or .

10 Application

We use the tensor-on-tensor regression model to predict attributes from facial images, using the Labeled Faces in the Wild database (Learned-Miller et al., 2016). The database includes over 13000 publicly available images taken from the internet, where each image includes the face of an individual. Each image is labeled only with the name of the individual depicted, often a celebrity, and there are multiple images for each individual. The images are unposed and exhibit wide variation in lighting, image quality, angle, etc. (hence “in the wild”).

Low-rank matrix factorization approaches are commonly used to analyze facial image data, particularly in the context of facial recognition (Sirovich and Kirby, 1987; Turk and Pentland, 1991; Vasilescu and Terzopoulos, 2002; Kim and Choi, 2007). Although facial images are not obviously multi-linear, the use of multi-way factorization techniques has been shown to convey advantages over simply vectorizing images (e.g., from a P1 P2 array of pixels to a vector of length P1 × P2) (Vasilescu and Terzopoulos, 2002). Kim and Choi (2007) show that treating color as another mode within a tensor factorization framework can improve facial recognition tasks with different lighting. Moreover, the CP factorization has been shown to be much more efficient as a dimension reduction tool for facial images than PCA, and marginally more efficient than the Tucker and other multiway factorization techniques (Lock et al., 2011).

Kumar et al. (2009) developed an attribute classifier, which gives describable attributes for a given facial image. These attributes include characteristics that describe the individual (e.g., gender, race, age), that describe their expression (e.g., smiling, frowning, eyes open), and that describe their accessories (e.g., glasses, make-up, jewelry). These attribute were determined on the Faces in the Wild dataset, as well as other facial image databases. In total 72 attributes are measured for each image. The attributes are measured on a continuous scale; for example, for the smiling attribute, higher values correspond to a more obvious smile and lower values correspond to no smile.

Our goal is to create an algorithm to predict the 72 describable and correlated attributes from a given image that contains a face. First, the images are frontalized as described in Hassner et al. (2015). In this process the unconstrained images are rotated, scaled, and cropped so that all faces appear forward-facing and the image shows only the face. After this step images are aligned over the coordinates, in that we expect the nose, mouth and other facial features to be in approximately the same location. Each frontalized image is 90 × 90 pixels, and each pixel gives the intensity for colors red, green and blue, resulting in a multiway array of dimensions 90 × 90 × 3. We center the array by subtracting the “mean face” from each image, i.e., we center each pixel triplet (x × y × color) to have mean 0 over the collection of frontalized images. We standardize the facial attribute data by converting the measurements to z-scores, wherein each attribute has mean zero and standard deviation 1 over the collection of faces.

To train the predictive model we use a use a random sample of 1000 images from unique individuals. Thus the predictor array of images is of dimension 1000 × 90 × 90 × 3, and the outcome array of attributes is of dimension 1000 × 72. Another set of 1000 images from unique individuals are used as a validation set, and .

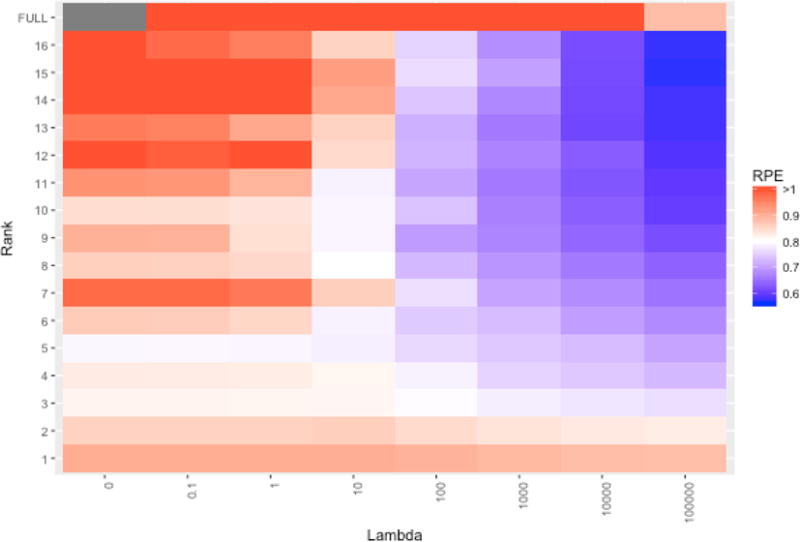

We run the optimization algorithm in Section 7 to estimate the coefficient array under various values for the rank R and regularization parameter λ. We consider all combinations of the values λ = {0, 0:1, 1, 10, 100, 1000, 104, 105} and R = {1, 2, …, 16}. We also consider the full rank model that ignores multi-way structure, where the coefficients are given by separate ridge regressions for each of the 72 outcomes on the 90 · 90 · 3 = 24300 predictors. For each estimate we compute the relative prediction error (RPE) for the test set (see (20)). The resulting RPE values over the different estimation schemes are shown in Figure 1. The minimum RPE achieved was 0:568, for R = 15 and λ = 105. The performance of models with no regularization (λ = 0), or without rank restriction (rank=FULL), were much worse in comparison. This illustrates the benefits of simultaneous rank restriction and ridge regularization for high-dimensional multi-way prediction problems.

Figure 1.

Relative prediction error for characteristics of out-of-sample images for different parameter choices. The top row (full rank) gives the results under separate ridge regression models for each outcome without rank restriction.

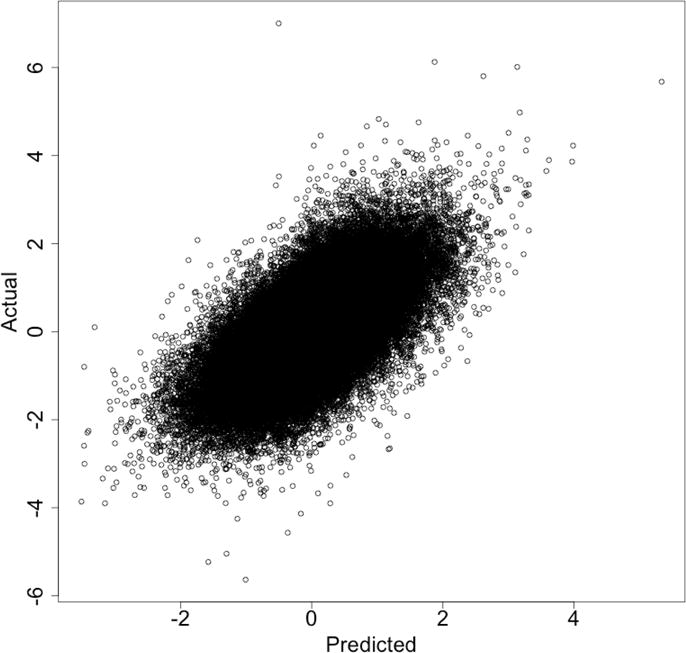

In what follows we use R = 15 and λ = 105. Figure 2 shows the predicted values vs. the given values, for the test data, over all 72 characteristics. The plot shows substantial residual variation but a clear trend, with correlation r = 0:662.

Figure 2.

Actual vs. predicted values for 1000 test images across 72 characteristics.

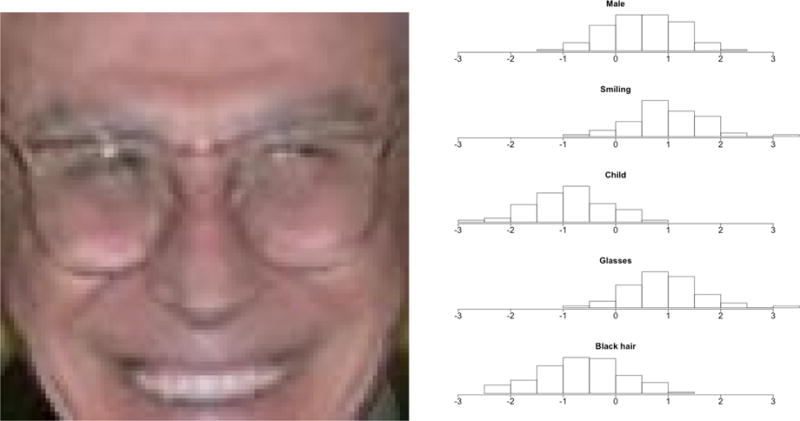

To assess predictive uncertainty we generate 5000 posterior samples as in Section 8, yielding samples from the posterior predictive distribution of the 72 characteristics for each of the 1000 test images. Symmetric credible intervals were computed for each characteristic of each image. The empirical coverage rates for the given values were 0:934 for 95% credible intervals and 0:887 for 90% credible intervals. The full posterior distributions for a small number of select characteristics, for a single test image, are shown in Figure 3 as an illustration of the results.

Figure 3.

Example test image (left), and its posterior samples for 5 select characteristics (right).

11 Discussion

In this article we have proposed a general framework for predicting one multiway array from another using the contracted tensor product. The simulation studies and facial image application illustrate the advantages of CP-rank regularization and ridge regularization in this framework. These two parameters define a broad class of models that are appropriate for a wide variety of scenarios. The CP assumption accounts for multi-way dependence in both the predictor and outcome array, and the ridge penalty accounts for auxiliary high-dimensionality and multi-collinearity of the predictors. However, several alternative regularization strategies are possible. The coefficient array can be restricted to have a more general Tucker structure (as in Li et al. (2013)), rather than a CP structure. A broad family of separable penalty functions, such as the separable L2 penalty in (10), are straightforward to impose within the general framework using an alternating estimation scheme similar to that described in Zhou et al. (2013). In particular, a separable L1 penalty has advantages when a solution that includes sparse subregions of each mode is desired. The alternating estimation scheme described herein for the non-separable L2 penalty is not easily extended to alternative non-separable penalty functions.

We have described a simple Gaussian likelihood and a prior distribution for that are motivated by the least-squares objective with non-separable L2 penalty. The resulting probability model involves many simplifying assumptions, which may be over-simplified for some situations. In particular, the assumption of independent and homoscadastic error in the outcome array can be inappropriate for applications with auxiliary structure in the outcome. The array normal distribution (Akdemir and Gupta, 2011; Hoff et al., 2011) allows for multiway dependence and can be used as a more flexible model for the error covariance. Alternatively, envelope methods (Cook and Zhang, 2015) rely on a general technique to account for and ignore immaterial structure in the response and/or predictors of a predictive model. A tensor envelope is defined in Li and Zhang (2016), and its use in the tensor-on-tensor regression framework is an interesting direction for future work.

The approach to inference used herein is “semi-Bayesian”, in that the prior is limited to facilitate inference under the given penalized least-squares objective and is not intended to be subjective. Fully Bayesian approaches, such as using a prior for both the rank of the coefficient array and the shrinkage parameter, are another interesting direction of future work.

Supplementary Material

A Consistency

Here we establish the consistency of the minimizer of the objective (9), for fixed dimension as N → ∞, under general conditions.

Theorem 1

Assume model (2) holds for, where

For each response index (q1, …, qM), the errors are independent and identically distributed (iid) for n = 1, …, N, with mean 0 and finite second moment.

For each predictor index (p1, …, pL), are iid for n = 1, …, N from a bounded distribution.

has a rank R0 factorization (6), where θ0 = {U1, …, UL, V1, …, VM} is in the interior of a compact space Θ and is identifiable under the restrictions of Section 5.

For R = R0 and fixed ridge penaly tλ ≥ 0, the minimizer of the objective (9), , converges to in probability as N → ∞. Moreover, under the restrictions of Section 5 the factorization parameters converge to θ0 in probability as N → ∞.

For Theorem 1 we require that the observations are iid, but within an observation the elements of the error array or predictor array may be correlated or from different distributions. Also, note that the correct rank is assumed for the estimator, but the result holds for any fixed penalty λ ≥0. Theorem 1 applies to the global minimizer of objective (9), which may not be attained by the iterative algorithm of Section 7. The requirements that the predictors are bounded and that Θ is compact are similar to those used to show the constancy of tensor regression under a normal likelihood model in Zhou et al. (2013). These requirements facilitate the use of Glivenko-Cantelli theory with a classical result on the asymptotic consistency of M-estimators (Van der Vaart, 2000); the proof is given below.

Proof

Let be the coefficient array resulting from θ = {U1, …, UL, V1, …, VM}:

Let M(θ) be the expected squared error for a single observation:

which exists for all θ ∈ Θ because the entries of are assumed to have finite second moment. Let be the penalized empirical squared error loss (9) divided by N:

From Theorem 5.7 of Van der Vaart (2000), the following three properties imply is a consistent estimator of θ0:

1. ε > 0,

2. , where OP (1) defines a stochastically bounded sequence of random variables, and

3.

Because , the coefficient array minimizes the expected squared error. Property 1 then follows from the identifiability of θ0. For any θ ∈ Θ, almost surely by the strong law of large numbers and the fact

| (21) |

Also, M(θ) is necessarily bounded over the compact space Θ. Thus, both and Mn(θ0) are stochastically bounded, and property 2 follows. For property 3 it suffices to show uniform convergence of the unpenalized squared error , by

and (21). The uniform convergence of can be verified by Glivenko-Cantelli theory.

Define

The class {m : θ ∈ Θ } is Glivenko-Cantelli, because Θ is compact and is continuous as a function of θ and bounded on Θ for any . Thus, property 3 holds and is a consistent estimator of θ.

By the continuous mapping theorem, is also consistent estimator of the true coefficient array □.

B Posterior derivations

Here we derive the full conditional distributions of the factorization components for , used in Section 8.

First, we consider the a priori conditional distributions that are implied by the spherical Gaussian prior for (15). Here we derive the prior conditional for U1, pr(U1 | U2, …, UL, V1, …, VM); the prior conditionals for U2, …, UL, V1, …, VM are analogous, because the prior for is permutation invariant over its L + M modes. Let give the vectorized form of the CP factorization without U1,

and define the matrix by . Then

and it follows that

The general model (2) implies

where C is defined as in (11). If has independent N(0, σ2) entries, a direct application of the Bayesian linear model (Lindley and Smith, 1972) gives

where

and

Basic tensor algebra shows

The posterior mean and variance for U2, …, UL are derived in an analogous way.

For the it suffices to consider VM, as the posterior derivations for V1, …, VM − 1 are analogous. The prior conditional for VM is

and the general model (2) implies

where D and YM are defined as in (12), and E has independent N(0, σ2) entries. Separate applications of the Bayesian linear model for each row of VM gives

where

and

Basic tensor algebra shows

C Proof of Proposition 1

Here we prove the equivalence of separable L2 penalization and nuclear norm penalization stated in Proposition 1. The result is shown for predicting a vector from a three-way array, in which . Analogous results exist for predicting a matrix from a matrix and predicting a three-way array from a vector .

In the solution to

| (22) |

the columns of U1 and U2, {u11, …, u1R} and {u21, …, u2R}, must satisfy

| (23) |

Here (23) follows from the general result that for c > 0,

where a = ‖u1r‖2, b = ‖u2r‖2, and . Thus,

| (24) |

Under orthogonality of the columns of U1 and U2, the non-zero singular values of are , and therefore (24) is equal to . It follows that (22) is equivalent to

D Correlated data simulation

Here we describe the results of a simulation study analogous to that in Section 9, but with correlation in the predictors or in the response . We simulate Gaussian data with an exponential spatial correlation structure using the R package fields (Douglas Nychka et al., 2015). The entries of are assumed to be on a Q1 × Q2 grid (Q1 = 5, Q2 = 10) with adjacent entries having distance 1. The entries of are assumed to be on a P1 × P2 grid (P1 = 15, P2 = 20) with adjacent entries having distance 1. The correlation between adjacent locations is ρ = 0.6 for each scenario, and the marginal variance of the entries is 1. Thus, data are simulated exactly as in Section 9.1, except for the correlation structure of (step 1.) or (step 3.).

The resulting RPE and credible interval coverage rates are shown in Table 4, which is analogous to Table 2 for the uncorrelated case. Interestingly, for penalized estimation and n = 30 the scenario with correlated gives significantly better performance in terms of RPE than the scenario without correlation. This was unexpected, but may be because correlation in discourages the algorithm from converging to a local minimum. For correlated the results are often similar to the uncorrelated scenario but tend toward lower accuracy. In particular, the credible intervals tend to undercover more than for the uncorrelated scenario, or for the scenario with correlated . This is probably because correlation in violates the assumed likelihood model for inference, while correlation in does not.

Table 4.

Mean RPE by regularization and coverage rate for correlated or correlated using correct assumed ranks. The coverage rates are for 95% credible intervals, and their mean length relative to the standard deviation of is shown.

| Correlated | |||||

|---|---|---|---|---|---|

|

| |||||

| RPE (std error) | λ = 0 | λ = 0.5 | λ = 1 | λ = 5 | λ = 50 |

| N = 120, SNR = 5 | 0.04 (0.01) | 0.04 (0.01) | 0.04 (0.01) | 0.05 (0.01) | 0.19 (0.01) |

| N = 120, SNR = 1 | 0.52 (0.01) | 0.52 (0.01) | 0.52 (0.01) | 0.52 (0.01) | 0.58 (0.01) |

| N = 30, SNR = 5 | 1.70 (0.17) | 0.43 (0.03) | 0.43 (0.03) | 0.45 (0.02) | 0.58 (0.01) |

| N = 30, SNR = 1 | 1.62 (0.11) | 0.87 (0.02) | 0.83 (0.02) | 0.79 (0.02) | 0.80 (0.01) |

|

| |||||

| Coverage (length) | λ = 0 | λ = 0.5 | λ = 1 | λ = 5 | λ = 50 |

|

| |||||

| N = 120, SNR = 5 | 0.95 (0.78) | 0.95 (0.78) | 0.95 (0.78) | 0.94 (0.83) | 0.92 (1.42) |

| N = 120, SNR = 1 | 0.95 (2.80) | 0.95 (2.80) | 0.95 (2.80) | 0.95 (2.80) | 0.94 (2.92) |

| N = 30, SNR = 5 | 0.93 (3.10) | 0.76 (1.11) | 0.74 (1.04) | 0.74 (1.10) | 0.84 (2.10) |

| N = 30, SNR = 1 | 0.98 (4.75) | 0.95 (3.56) | 0.94 (3.36) | 0.92 (3.05) | 0.92 (3.18) |

|

| |||||

| Correlated | |||||

|

| |||||

| RPE (std error) | λ = 0 | λ = 0.5 | λ = 1 | λ = 5 | λ = 50 |

|

| |||||

| N = 120, SNR = 5 | 0.04 (0.01) | 0.04 (0.01) | 0.04 (0.01) | 0.04 (0.01) | 0.19 (0.01) |

| N = 120, SNR = 1 | 0.59 (0.01) | 0.59 (0.01) | 0.59 (0.01) | 0.58 (0.01) | 0.60 (0.01) |

| N = 30, SNR = 5 | 2.03 (0.22) | 0.72 (0.03) | 0.68 (0.03) | 0.63 (0.02) | 0.77 (0.01) |

| N = 30, SNR = 1 | 1.86 (0.13) | 1.10 (0.02) | 1.04 (0.02) | 0.93 (0.01) | 0.91 (0.01) |

|

| |||||

| Coverage (length) | λ = 0 | λ = 0.5 | λ = 1 | λ = 5 | λ = 50 |

|

| |||||

| N = 120, SNR = 5 | 0.95 (0.77) | 0.95 (0.77) | 0.95 (0.77) | 0.94 (0.79) | 0.91 (1.41) |

| N = 120, SNR = 1 | 0.93 (2.75) | 0.93 (2.75) | 0.93 (2.75) | 0.93 (2.75) | 0.93 (2.88) |

| N = 30, SNR = 5 | 0.91 (2.98) | 0.68 (1.10) | 0.66 (1.01) | 0.67 (1.05) | 0.84 (2.09) |

| N = 30, SNR = 1 | 0.98 (4.89) | 0.94 (3.51) | 0.93 (3.28) | 0.89 (2.92) | 0.91 (3.12) |

E Full-rank comparison

Here we describe the results of a simulation study in which data are generated as in Section 9, but the model is estimated without low-rank constraints. For each simulation scenario, we consider two additional estimation approaches:

Unconstrained and unconstrained , in which the solution is given by independent ridge regression for each location of on the vectorized entries of .

Rank constraint for , but not for , in which the solution is given by separate tensor regressions with ridge regularization for each location of on . That is, each location in is considered independently as a univariate outcome, and criteria (9) is optimized separately for each of the Q1Q2 = 50 outcomes (Q1 = 5, Q2 = 10).

The resulting RPE under the different simulation scenarios and estimation approaches are shown in Table 5. Recall that each outcome has N = 30 or 120 observations; for approach 1 the total number of parameters for each outcome is P1P2 = 300 (P1 = 15 and P2 = 20), and for approach 2 the total number of parameters for each outcome is R(P1 + P2). Thus, without ridge regularization (λ = 0), the solution is always undefined for approach 1, and is also undefined under approach 2 for most values of N and R considered. With ridge regularization (λ > 0), the results under approach 1 are generally inferior to those under approach 2, and both approaches are inferior to the tensor-on-tensor approach presented in Section 9 with a low-rank constraint for both and . Moreover, while approach 1 is very fast because it is non-iterative, approach 2 was generally much slower than the tensor-on-tensor approach because it requires fitting a separate model for each location; an average, model estimation for approach 2 took 12 minutes per simulated dataset, and the tensor-on-tensor model took 3 minutes per dataset. This demonstrates the potential advantages of simultaneously allowing for low-rank dependence in both the predictors and the outcomes in the tensor-on-tensor context.

Table 5.

Mean RPE by regularization parameter (λ), under three estimation approaches: 1. with no rank constraints, 2. with rank constraint for but independent models for each entry of , and 3. with low-rank dependence for both and (reproduced from Table 2).

| 1. Unconstrained , unconstrained | |||||

|---|---|---|---|---|---|

|

| |||||

| RPE (std error) | λ = 0 | λ = 0.5 | λ = 1 | λ = 5 | λ = 50 |

| N = 120, SNR = 5 | NA | 0.64 (0.01) | 0.64 (0.01) | 0.63 (0.01) | 0.64 (0.01) |

| N = 120, SNR = 1 | NA | 1.14 (0.01) | 1.14 (0.01) | 1.11 (0.01) | 0.99 (0.01) |

| N = 30, SNR = 5 | NA | 0.91 (0.01) | 0.91 (0.01) | 0.91 (0.01) | 0.91 (0.01) |

| N = 30, SNR = 1 | NA | 1.00 (0.01) | 1.00 (0.01) | 1.00 (0.01) | 0.99 (0.01) |

|

| |||||

| 2. Constrained , unconstrained | |||||

|

| |||||

| RPE (std error) | λ = 0 | λ = 0.5 | λ = 1 | λ = 5 | λ = 50 |

|

| |||||

| N = 120, SNR = 5 | NA | 0.30 (0.01) | 0.29 (0.01) | 0.27 (0.01) | 0.38 (0.01) |

| N = 120, SNR = 1 | NA | 1.72 (0.01) | 1.62 (0.01) | 1.30 (0.01) | 0.88 (0.01) |

| N = 30, SNR = 5 | NA | 0.91 (0.01) | 0.90 (0.01) | 0.87 (0.01) | 0.86 (0.01) |

| N = 30, SNR = 1 | NA | 1.20 (0.01) | 1.18 (0.01) | 1.12 (0.01) | 1.00 (0.01) |

|

| |||||

| 3. Constrained , Constrained | |||||

|

| |||||

| RPE (std error) | λ = 0 | λ = 0.5 | λ = 1 | λ = 5 | λ = 50 |

|

| |||||

| N = 120, SNR = 5 | 0.04 (0.01) | 0.04 (0.01) | 0.04 (0.01) | 0.05 (0.01) | 0.20 (0.01) |

| N = 120, SNR = 1 | 0.52 (0.01) | 0.52 (0.01) | 0.52 (0.01) | 0.52 (0.01) | 0.59 (0.01) |

| N = 30, SNR = 5 | 1.64 (0.12) | 0.74 (0.05) | 0.70 (0.04) | 0.63 (0.02) | 0.77 (0.01) |

| N = 30, SNR = 1 | 1.90 (0.15) | 1.07 (0.04) | 1.03 (0.04) | 0.92 (0.02) | 0.91 (0.01) |

F Cross-validation simulation

Here we describe the results of a simulation study to assess the use of cross-validation to select the parameters R and λ. Data were generated as in Section 9. For each dataset generated, we perform five-fold cross-validation for each pair (R, λ) over the grid R = {1, 2, 3, 4, 5} and λ = {0, 0:5, 1, 5, 50}. The estimates are selected as the pair that gives the lowest mean squared prediction error across test folds. The resulting estimates are summarized in Table 6. The accuracy of the selected ranks varied widely depending on the simulation scenario. For the scenario with larger sample size and larger signal, N = 120, SNR = 5, the true underlying rank was selected for every replication. With lower sample size (N = 30) the true rank was selected for only approximately 1/3 of the replications, and for remaining replications the results were evenly split between overestimation and under-estimation of the rank. The selected value for generally increased with lower signal and lower sample size, which is consistent with the values of λ that gave optimal performance for the larger test set (see Table 2).

Table 6.

Rank selection accuracy and selected regularization parameter (λ) under five-fold cross-validation, averaged over replications for four different scenarios.

|

|

|

|

Mean | ||||

|---|---|---|---|---|---|---|---|

| N = 120, SNR = 5 | 100% | 0% | 0% | 0.03 | |||

| N = 120, SNR = 1 | 88% | 12% | 0% | 2.14 | |||

| N = 30, SNR = 5 | 34% | 32% | 34% | 16.1 | |||

| N = 30, SNR = 1 | 30% | 38% | 32% | 27.8 |

Footnotes

SUPPLEMENTARY MATERIAL

MultiwayRegression: R package MultiwayRegression, containing documented code for the methods described in this article. (GNU zipped tar le)

Code: A zipped folder with R scripts to reproduce the simulation and application results in this manuscript. (zipped folder)

References

- Akdemir D, Gupta AK. Array variate random variables with multiway Kronecker delta covariance matrix structure. J Algebr Stat. 2011;2(1):98–113. [Google Scholar]

- Bader BW, Kolda TG. Algorithm 862: Matlab tensor classes for fast algorithm prototyping. ACM Transactions on Mathematical Software (TOMS) 2006;32(4):635–653. [Google Scholar]

- Chen B, He S, Li Z, Zhang S. Maximum block improvement and polynomial optimization. SIAM Journal on Optimization. 2012;22(1):87–107. [Google Scholar]

- Cook RD, Zhang X. Foundations for envelope models and methods. Journal of the American Statistical Association. 2015;110(510):599–611. [Google Scholar]

- De Martino F, De Borst AW, Valente G, Goebel R, Formisano E. Predicting EEG single trial responses with simultaneous fMRI and relevance vector machine regression. Neuroimage. 2011;56(2):826–836. doi: 10.1016/j.neuroimage.2010.07.068. [DOI] [PubMed] [Google Scholar]

- Douglas Nychka, Reinhard Furrer, John Paige, Stephan Sain. fields: Tools for spatial data. 2015 R package version 9.0. [Google Scholar]

- Friedland S, Lim LH. Nuclear norm of higher-order tensors. arXiv preprint arXiv: 1410.6072 2014 [Google Scholar]

- GTEx Consortium. The genotype-tissue expression (GTEx) pilot analysis: Multi-tissue gene regulation in humans. Science. 2015;348(6235):648–660. doi: 10.1126/science.1262110. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Guo W, Kotsia I, Patras I. Tensor learning for regression. IEEE Transactions on Image Processing. 2012;21(2):816–827. doi: 10.1109/TIP.2011.2165291. [DOI] [PubMed] [Google Scholar]

- Harshman RA. Foundations of the PARAFAC procedure: Models and conditions for an “explanatory” multi-modal factor analysis. UCLA Working Papers in Phonetics. 1970;16:1–84. [Google Scholar]

- Hassner T, Harel S, Paz E, Enbar R. Effective face frontalization in unconstrained images. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2015:4295–4304. [Google Scholar]

- Hoerl AE, Kennard RW. Ridge regression: Biased estimation for nonorthogonal problems. Technometrics. 1970;12(1):55–67. [Google Scholar]

- Hoff PD. Multilinear tensor regression for longitudinal relational data. The Annals of Applied Statistics. 2015;9(3):1169–1193. doi: 10.1214/15-AOAS839. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hoff PD, et al. Separable covariance arrays via the Tucker product, with applications to multivariate relational data. Bayesian Analysis. 2011;6(2):179–196. [Google Scholar]

- Huster RJ, Debener S, Eichele T, Herrmann CS. Methods for simultaneous eeg-fmri: an introductory review. Journal of Neuroscience. 2012;32(18):6053–6060. doi: 10.1523/JNEUROSCI.0447-12.2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Izenman AJ. Reduced-rank regression for the multivariate linear model. Journal of multivariate analysis. 1975;5(2):248–264. [Google Scholar]

- Jansen M, White TP, Mullinger KJ, Liddle EB, Gowland PA, Francis ST, Bowtell R, Liddle PF. Motion-related artefacts in eeg predict neuronally plausible patterns of activation in fmri data. Neuroimage. 2012;59(1):261–270. doi: 10.1016/j.neuroimage.2011.06.094. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kim YD, Choi S. International Conference on Biometrics. Springer; 2007. Color face tensor factorization and slicing for illumination-robust recognition; pp. 19–28. [Google Scholar]

- Kolda TG, Bader BW. Tensor decompositions and applications. SIAM review. 2009;51(3):455–500. [Google Scholar]

- Kumar N, Berg AC, Belhumeur PN, Nayar SK. 2009 IEEE 12th International Conference on Computer Vision. IEEE; 2009. Attribute and simile classifiers for face verification; pp. 365–372. [Google Scholar]

- Learned-Miller E, Huang GB, RoyChowdhury A, Li H, Hua G. Advances in Face Detection and Facial Image Analysis. Springer; 2016. Labeled faces in the wild: A survey; pp. 189–248. [Google Scholar]

- Li L, Zhang X. Parsimonious tensor response regression. Journal of the American Statistical Association. 2016 doi: 10.1080/01621459.2021.1938082. (just-accepted) [DOI] [PMC free article] [PubMed] [Google Scholar]

- Li X, Zhou H, Li L. Tucker tensor regression and neuroimaging analysis. arXiv preprint arXiv: 1304.5637. 2013 doi: 10.1007/s12561-018-9215-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lindley DV, Smith AF. Bayes estimates for the linear model. Journal of the Royal Statistical Society. Series B (Methodological) 1972;34(1):1–41. [Google Scholar]

- Lock EF, Li G. Supervised multiway factorization. arXiv preprint arXiv: 1609.03228. 2016 doi: 10.1214/18-EJS1421. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lock EF, Nobel AB, Marron JS. Comment on Population Value Decomposition, a framework for the analysis of image populations. Journal of the American Statistical Association. 2011;106(495):798–802. doi: 10.1198/jasa.2011.ap10089. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lyu T, Lock EF, Eberly LE. Discriminating sample groups with multi-way data. Biostatistics. 18:434–450. doi: 10.1093/biostatistics/kxw057. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Miranda M, Zhu H, Ibrahim JG. TPRM: Tensor partition regression models with applications in imaging biomarker detection. arXiv preprint arXiv: 1505.05482. 2015 doi: 10.1214/17-AOAS1116. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mukherjee A, Zhu J. Reduced rank ridge regression and its kernel extensions. Statistical analysis and data mining. 2011;4(6):612–622. doi: 10.1002/sam.10138. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ramasamy A, Trabzuni D, Guelfi S, Varghese V, Smith C, Walker R, De T, Coin L, de Silva R, Cookson MR, et al. Genetic variability in the regulation of gene expression in ten regions of the human brain. Nature neuroscience. 2014;17(10):1418–1428. doi: 10.1038/nn.3801. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Raskutti G, Yuan M. Convex regularization for high-dimensional tensor regression. arXiv preprint arXiv: 1512.01215 2015 [Google Scholar]

- Sidiropoulos ND, Bro R. On the uniqueness of multilinear decomposition of N-way arrays. Journal of Chemometrics. 2000;14(3):229–239. [Google Scholar]

- Sirovich L, Kirby M. Low-dimensional procedure for the characterization of human faces. Journal of the Optical Society of America A. 1987;4(3):519–524. doi: 10.1364/josaa.4.000519. [DOI] [PubMed] [Google Scholar]

- Spiegelhalter DJ, Best NG, Carlin BP, Linde A. The deviance information criterion: 12 years on. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 2014;76(3):485–493. [Google Scholar]

- Sun WW, Li L. Sparse low-rank tensor response regression. arXiv preprint arXiv: 1609.04523 2016 [Google Scholar]

- Sundberg R. Continuum regression and ridge regression. Journal of the Royal Statistical Society. Series B (Methodological) 1993:653–659. [Google Scholar]

- Tao D, Li X, Wu X, Hu W, Maybank SJ. Supervised tensor learning. Knowledge and information systems. 2007;13(1):1–42. [Google Scholar]

- Tucker LR. Some mathematical notes on three-mode factor analysis. Psychometrika. 1966;31(3):279–311. doi: 10.1007/BF02289464. [DOI] [PubMed] [Google Scholar]

- Turk M, Pentland A. Eigenfaces for recognition. Journal of cognitive neuroscience. 1991;3(1):71–86. doi: 10.1162/jocn.1991.3.1.71. [DOI] [PubMed] [Google Scholar]

- Van der Vaart AW. Asymptotic statistics. Vol. 3. Cambridge university press; 2000. [Google Scholar]

- Vasilescu MAO, Terzopoulos D. European Conference on Computer Vision. Springer; 2002. Multilinear analysis of image ensembles: Tensorfaces; pp. 447–460. [Google Scholar]

- Wimalawarne K, Tomioka R, Sugiyama M. Theoretical and experimental analyses of tensor-based regression and classification. Neural computation. 2016;28(4):686–715. doi: 10.1162/NECO_a_00815. [DOI] [PubMed] [Google Scholar]

- Zhang X, Li L, Zhou H, Shen D, et al. Tensor generalized estimating equations for longitudinal imaging analysis. arXiv preprint arXiv: 1412.6592. 2014 doi: 10.5705/ss.202017.0153. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zhou H, Li L, Zhu H. Tensor regression with applications in neuroimaging data analysis. Journal of the American Statistical Association. 2013;108(502):540–552. doi: 10.1080/01621459.2013.776499. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.