Abstract

Research indicates that human-robot interaction can help children with Autism Spectrum Disorder (ASD). While most early robot-mediated interaction studies were based on free interactions, recent studies have shown that robot-mediated interventions focusing on the core impairments of ASD, such as joint attention deficits, tend to produce better outcomes. Joint attention impairment is one of the core deficits in ASD and has an important impact on the neuropsychological development of these children. In this work, we propose a novel joint attention intervention system for children with ASD that overcomes several existing limitations in this domain, such as the need for body-worn sensors, non-autonomous robot operation requiring human involvement, and the lack of a formal model for robot-mediated joint attention interaction. To address these limitations, we present a fully autonomous robotic system, called NORRIS, that infers attention through a distributed non-contact gaze inference mechanism and embeds a Least-to-Most (LTM) robot-mediated interaction model. The system was tested in a multi-session user study with 14 young children with ASD. The results showed that participants’ joint attention skills improved significantly, their interest in the robot remained consistent throughout the sessions, and the LTM interaction model was effective in promoting the children’s performance.

Index Terms: Robot-assisted intervention, joint attention, children with Autism Spectrum Disorder

I. Introduction

In recent years, human-robot interaction (HRI) has been investigated as a potential intervention tool for children with Autism Spectrum Disorder (ASD) [1]. ASD is a common neurodevelopmental disorder that affects 1 in 68 children in the U.S. [2]. Social communication deficits are among the core impairments of ASD [3]. Robot-mediated interventions are promising for teaching social communication skills to children with ASD because many of these children are fascinated by technology and may pay more attention to a robot than to a human therapist [4]. A few experimental studies have shown that robots could elicit social communication behaviors, such as speech [5] and arm gesture imitation [6], better than human partners did in those studies. In addition, robots are highly controllable and precise, and could potentially provide effective interventions at low cost [7, 8].

Mainly animal-like robots [9, 10] and small humanoid robots [11, 12] have been used in studies with children with ASD. Kozima et al. [13] designed a small creature-like robot called “Keepon”, which successfully elicited positive social interaction behaviors in children with ASD. A humanoid robot called “KASPAR” [14, 15] was successfully used to facilitate collaborative play and tactile interaction with children with ASD. Feil-Seifer and Mataric [16] found that contingent activation of a robot during interactions yielded immediate short-term improvement in social interactions. While these earlier studies were important in demonstrating the potential of HRI in ASD intervention, most of them chose free play as the mode of interaction instead of focusing on the core deficits of ASD. However, ASD intervention research has shown that interventions are most effective when they focus on the core deficits of ASD [17]. In addition, most of these previous HRI systems were open-loop and thus could not respond to the participants’ dynamic interaction cues in order to adapt and individualize the intervention. The primary goal of the current work is to design a fully autonomous closed-loop robotic system that can target core deficits of ASD. Targeting core deficits through robot-mediated intervention is more complex, since the autonomous system needs to elicit responses related to the core deficit through a well-designed interaction protocol, assess the participant’s response in real time, and adapt its own (i.e., the robot’s) interaction to shape the participant’s response.

We introduce a new closed-loop, fully autonomous robotic system named NORRIS (Non-contact Responsive Robot-mediated Intervention System) to help children with ASD learn joint attention skills. Joint attention is the process of sharing attention and socially coordinating attention with others to effectively learn from the environment [18]. Joint attention skills underlie the neurodevelopmental cascade of ASD, and successful intervention targeting joint attention is essential for improving numerous other developmental skills in children with ASD [19–21].

The current work improves on our previous work [22, 23] in a number of important ways. While [22] presented a novel HRI architecture for ASD intervention, ARIA, and developed an effective Least-to-Most (LTM) protocol for joint attention intervention with promising results, it required participants to wear an instrumented hat for gaze inference. Since many young children with ASD are sensitive to unfamiliar touch [24], close to 40% of the children did not want to wear the hat and thus could not take part in the intervention. To solve this problem, Zheng et al. [23] developed another robotic system that inherited the LTM protocol from ARIA but used a Wizard of Oz [25] strategy for gaze detection to eliminate the need for the instrumented hat. While this enabled 100% participation, the system became semi-autonomous and required human involvement for gaze inference. Additionally, both [22] and [23] used the LTM protocol for joint attention but did not provide a generalizable mathematical model for LTM interaction. In LTM, the teacher gives the learner an opportunity to respond independently at each training stage and delivers the least intrusive prompt first. If necessary, more intrusive prompts, usually built upon the previous prompts, are then delivered to help the learner complete each training procedure [26]. Essentially, LTM provides support to the learner only when needed. LTM has been widely applied in diagnostic and screening tools for children with ASD [27, 28]. However, to our knowledge, no mathematical model of LTM that would allow LTM-based interaction to be generalized to multiple skills has been presented in the literature. Anzalone et al. [29] developed a joint attention intervention system using a robot administrator and external cameras to sense the attention of the participants, which is similar to the set-up used in NORRIS.
However, NORRIS introduces a novel system architecture, a new gaze tracking method, a mathematical model of LTM interaction, and a multi-session user study with young children with ASD, none of which have been reported in the literature.

The contributions of the current study are two-fold: 1) the development of a new fully autonomous closed-loop robot-mediated intervention system that can infer gaze non-invasively and administer an LTM protocol based on a general mathematical model; and 2) results from a multi-session feasibility user study of joint attention intervention that tested the newly developed system. This study validated the effectiveness of LTM in robot-mediated intervention: adding prompt levels increased the probability of the expected response, and the system elicited the expected response with high probability at the highest prompt level.

The remainder of this paper is organized as follows: Section II describes the architecture and components of NORRIS. Section III introduces the mathematical model of LTM that serves as the interaction logic, and Section IV describes the design of the multi-session feasibility user study used to validate NORRIS. Sections V and VI present the results of this user study and a summary of the contributions and limitations of the paper, respectively.

II. NORRIS System Architecture and Components

A. System Architecture

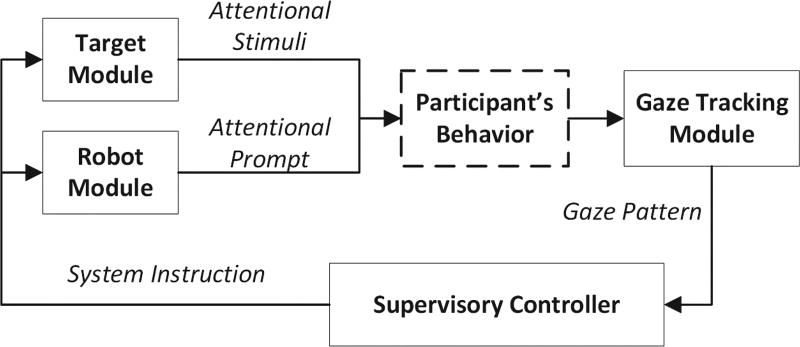

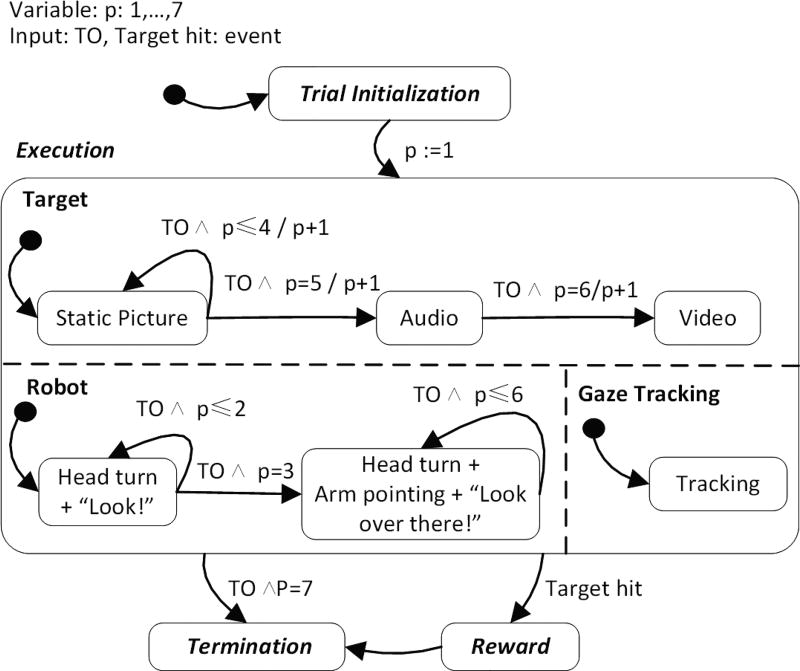

HRI using NORRIS is designed to work as follows. A child with ASD is seated in a room in front of a humanoid robot. The room is equipped with a set of spatially distributed computer monitors or TVs on which audio-visual stimuli are presented. The robot administers an LTM-based joint attention prompting protocol to the child, and the child’s response, in terms of gaze direction, is inferred by a set of distributed cameras. Based on whether or not the child shares attention, the robot provides appropriate feedback and moves on to the next prompt. As shown in Fig. 1, NORRIS has 4 main components: 1) the robot module, which controls robot actions; 2) the target module, which controls environmental factors; 3) the gaze tracking module, which senses interaction cues; and 4) the supervisory controller, which controls the interaction logic. The supervisory controller is the “brain” of NORRIS: it sends commands to the robot and the target module to present directional prompts to the participant. For example, the robot can turn its head to a monitor displaying a picture and ask the participant to look at that monitor. The participant may or may not look at the monitor, and this looking behavior is sensed by the gaze tracking module. The tracking module then computes whether the participant’s gaze direction falls on the monitor and sends this result back to the supervisory controller. The supervisory controller then tells the robot and the target module what to present next, based on an interaction protocol. In this way, NORRIS provides a fully autonomous closed-loop interaction between the system and the participant.

Fig. 1.

NORRIS system architecture

B. System Components

1) Robot module

The humanoid robot NAO by Aldebaran Robotics [30] was embedded in NORRIS. NAO has been widely used with children with ASD [11, 22] due to its attractive childlike appearance and high controllability. We designed a new controller for NAO that communicates with the supervisory controller. The robot controller included a built-in library storing all the motions (e.g., turning its head to a monitor) and utterances (e.g., asking the participant to look towards a target) needed for the interaction. The robot’s actions are detailed in Section III.B along with the interaction protocol.

2) Target module

Two flat-screen TVs (width: 70 cm; height: 43 cm), one to the left and one to the right of the participant, were used as attentional targets; they are also referred to as monitors below. The robot would point to one of the monitors at a time and ask the participant to look at what was being shown on it. The two TVs were controlled individually by two target controllers that received commands from the supervisory controller. A library of pictures, audio clips, and videos was embedded in each target controller. Based on the commands sent from the supervisory controller, static pictures, audio clips, or videos of interest to children were displayed. The set of target actions is detailed in the interaction protocol (Section III.B).

3) Gaze tracking module

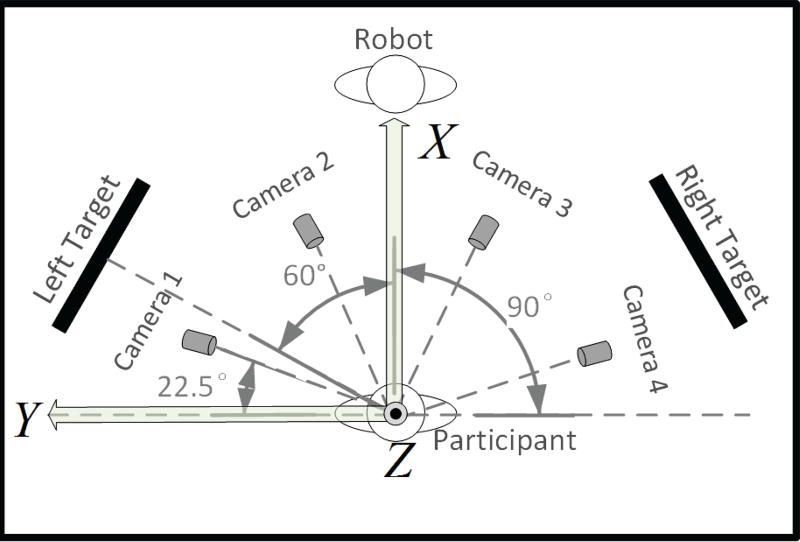

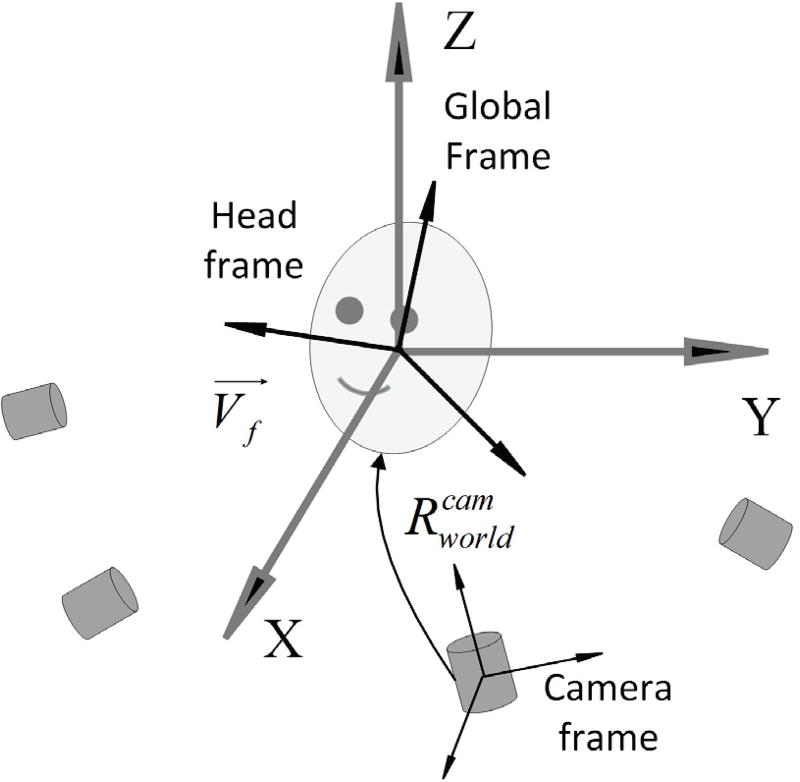

The gaze tracking module detected the participants’ looking behavior. The direction of a participant’s gaze was computed from the orientation of his/her head as detected by a set of cameras, as shown in Fig. 2 and Fig. 3. Fig. 2 illustrates the top view of NORRIS in the global reference frame. The center of the participant’s head was the origin of the global frame. The X-axis and the Y-axis pointed forward and to the left of the participant, respectively, and the Z-axis pointed upwards out of the plane. Fig. 3 shows both the body-attached head frame of the participant and the global reference frame, which share the same origin. If the participant did not perform yaw (around the Z-axis), pitch (around the Y-axis), or roll (around the X-axis) rotations, the head frame was aligned with the global frame. The unit vector along the positive x-axis of the head frame represents the frontal head orientation and was used to derive the gaze direction. Four cameras, each with its own coordinate system, were employed for gaze detection. The gaze tracking method has 3 steps, as discussed below. Note that while Steps 1 and 2 were inherited from our previous work [31], Step 3 was newly developed in the current study.

Fig. 2.

Top view of NORRIS and the global frame

Fig. 3.

Coordinate systems of gaze tracking

Step 1: Detect head orientation from a camera

The SDM [32] method was applied to each camera to achieve fast and robust head orientation estimation with respect to the camera’s frame. This estimation requires an image of the participant’s frontal face, so a given camera can only detect head orientation when the frontal face is visible to it. The detected head orientation is represented as a unit vector in the camera’s frame. However, for realistic joint attention tasks, we needed to detect a wider range of head yaw angles (about 180°) than a single camera can cover (about 80°). Therefore, we developed a distributed head orientation estimation algorithm for an array of 4 cameras (as shown in Fig. 2) placed around the participant with partially overlapping views to extend the detection range. This design guaranteed that, no matter which part of the interaction environment the participant was looking at, at least one camera could capture his/her frontal face to estimate head orientation.

Step 2: Transform the head orientation estimate from a camera’s frame to the global frame

Each camera was calibrated to obtain the transformation matrix between the camera’s frame and the global frame. As shown in Fig. 3, this matrix transforms the head orientation from the camera’s frame to the global frame.

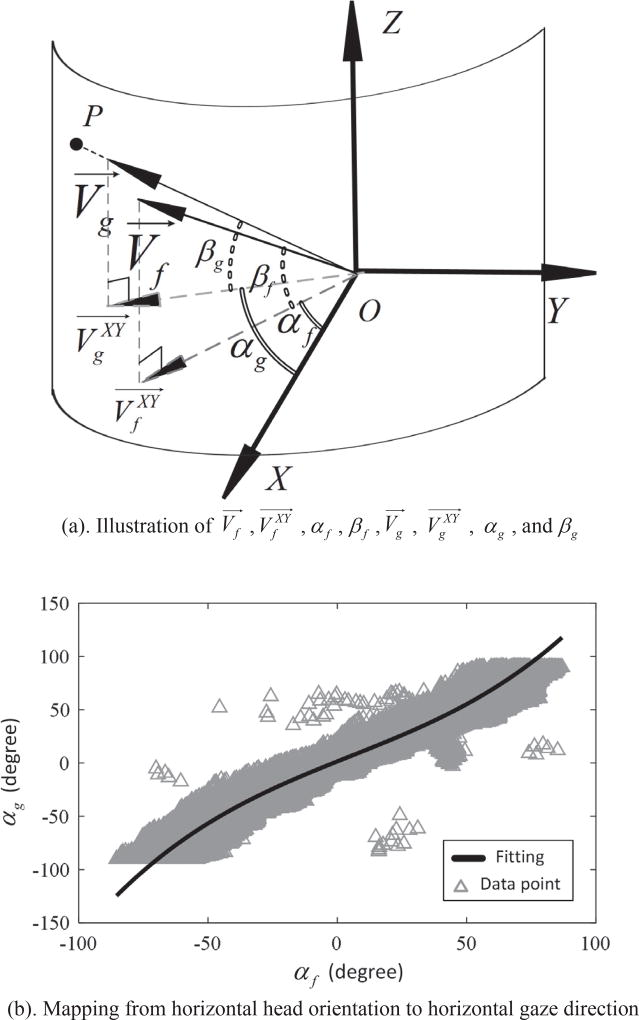
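A minimal sketch of Step 2, assuming the calibration yields a 3×3 rotation matrix that maps camera-frame coordinates to global-frame coordinates. Because the head orientation is a direction rather than a point, only the rotational part of the camera-to-global transform affects it. The function names `camera_to_global` and `rotation_about_z` and the 90° camera mounting in the example are hypothetical and are not taken from NORRIS.

```python
import math
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def camera_to_global(rotation: Matrix, v_cam: Vector) -> Vector:
    """Express a head-orientation direction given in a camera frame in the global frame.

    rotation: 3x3 matrix (list of rows) mapping camera coordinates to global
    coordinates, assumed to come from camera calibration.
    """
    v_glob = [sum(rotation[r][c] * v_cam[c] for c in range(3)) for r in range(3)]
    # Re-normalize so small calibration errors do not change the vector length.
    norm = math.sqrt(sum(x * x for x in v_glob))
    return [x / norm for x in v_glob]


def rotation_about_z(deg: float) -> Matrix:
    """Rotation about the global Z-axis (used only for a hypothetical camera mounting)."""
    t = math.radians(deg)
    return [[math.cos(t), -math.sin(t), 0.0],
            [math.sin(t), math.cos(t), 0.0],
            [0.0, 0.0, 1.0]]


# Hypothetical camera rotated 90 degrees about Z: its x-axis lies along the global Y-axis.
v = camera_to_global(rotation_about_z(90.0), [1.0, 0.0, 0.0])
print([round(x, 6) for x in v])  # prints [0.0, 1.0, 0.0]
```

With four cameras, each estimate would be passed through its own calibrated matrix; how NORRIS combines estimates from overlapping cameras is not shown here.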

Step 3: Compute gaze direction from the head orientation in the global frame

As shown in Fig. 4(a), the gaze direction is represented as a vector in the global frame. We used the vertical and horizontal components of this vector to judge whether the participant’s gaze direction fell within a given range (e.g., the range of a target monitor). In Fig. 4, the angle between the gaze vector and its projection on the XY plane, βg, denotes the vertical gaze direction, and the angle between the X-axis and that projection, αg, denotes the horizontal gaze direction. Likewise, for the frontal head orientation vector, the angle between the vector and its projection on the XY plane, βf, is used for computing βg, and the angle between the X-axis and that projection, αf, is used for computing αg.

Fig. 4.

Gaze direction computation in the global frame
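The two angles defined above can be computed from any direction vector in the global frame, so the same function serves for the head vector (αf, βf) and the gaze vector (αg, βg). The sign convention is an assumption: the global Y-axis points to the participant’s left and Z points upward, while the monitor ranges given later assign the left monitor negative αg and list βg from top (negative) to bottom (positive), so this sketch takes α positive toward the right and β positive downward. The function name is hypothetical.

```python
import math
from typing import Sequence, Tuple


def horizontal_vertical_angles(v: Sequence[float]) -> Tuple[float, float]:
    """Return (alpha, beta) in degrees for a direction v = (x, y, z) in the global frame.

    alpha: angle between the X-axis and the projection of v on the XY plane,
           positive toward the participant's right (-Y), an assumed convention.
    beta:  angle between v and that projection, positive downward (-Z), also assumed.
    """
    x, y, z = v
    alpha = math.degrees(math.atan2(-y, x))
    beta = math.degrees(math.atan2(-z, math.hypot(x, y)))
    return alpha, beta


# Head turned toward the participant's left and tilted slightly upward.
print(horizontal_vertical_angles((0.5, 0.8, 0.1)))
```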

To relate head orientation to gaze direction, we conducted a small study with 10 adults in which the volunteers were asked to look at a marker in front of them while it was moved from left to right 5 times and then from right to left another 5 times.

In the horizontal direction, we trained a mapping function to derive αg from αf. αg was taken as the angle of the moving marker (between −90° (left) and 90° (right)). Simultaneously, the volunteers’ horizontal head orientation, αf, was estimated by the camera array. A total of 22,236 data pairs were collected. We fitted a polynomial to model the relation between αg and αf. In Fig. 4(b), the blue points indicate the pairs (αf, αg), and the sigmoid-shaped red curve is the fitted curve that maps αf to αg. The equation of the red curve is

| (1) |

The mean distance from the data points to the red curve is 9.12°. In general, a larger |αf| leads to a larger |αg − αf|. Intuitively, the more the participant’s head turned to the side, the larger the horizontal deviation between the gaze direction and the frontal head orientation.
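The text specifies only “polynomial fitting”, not the degree or the procedure, so the following is a sketch under stated assumptions: an ordinary least-squares fit via the normal equations, a cubic (odd-degree curves can take the sigmoid-like shape described), angles rescaled by 90° to keep the normal equations well conditioned, and the reported “mean distance” interpreted as the mean absolute vertical residual. All function names are hypothetical.

```python
from typing import List, Sequence


def polyfit(xs: Sequence[float], ys: Sequence[float], degree: int) -> List[float]:
    """Least-squares polynomial fit; returns [c0, c1, ..., c_degree] for y ~ sum(c_k * x**k)."""
    n = degree + 1
    # Normal equations A c = b with A[i][j] = sum(x^(i+j)) and b[i] = sum(y * x^i).
    a = [[sum(x ** (i + j) for x in xs) for j in range(n)] for i in range(n)]
    b = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
            b[r] -= factor * b[col]
    # Back substitution.
    coeffs = [0.0] * n
    for i in range(n - 1, -1, -1):
        coeffs[i] = (b[i] - sum(a[i][j] * coeffs[j] for j in range(i + 1, n))) / a[i][i]
    return coeffs


def polyval(coeffs: Sequence[float], x: float) -> float:
    return sum(c * x ** k for k, c in enumerate(coeffs))


def mean_abs_residual(coeffs: Sequence[float], xs: Sequence[float], ys: Sequence[float]) -> float:
    """Mean |y - p(x)|, an assumed reading of 'mean distance to the curve'."""
    return sum(abs(y - polyval(coeffs, x)) for x, y in zip(xs, ys)) / len(xs)


# Synthetic check: recover a known cubic from noise-free samples.
xs = [i / 10 for i in range(-10, 11)]                 # alpha_f / 90, in [-1, 1]
ys = [0.5 + 1.2 * x - 0.7 * x ** 3 for x in xs]       # alpha_g / 90 (made-up relation)
coeffs = polyfit(xs, ys, 3)
print([round(c, 6) for c in coeffs])
```

With real (αf, αg) pairs, the fitted coefficients apply to the rescaled angles, so a prediction would be computed as 90 · p(αf / 90).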

The vertical gaze direction was approximated by βg = βf − βbaseline, where βbaseline is an offset angle calibrated for each participant. During calibration, the participant was prompted to look along the X-axis, and βf at that moment was recorded as βbaseline. In the user study presented in Section IV, βbaseline ranged from −11.57° to 8.14°.

The range of each monitor can be expressed in terms of αg and βg. The αg ranges of the left and the right monitors were [−70°, −50°] and [50°, 70°], respectively. However, to accommodate mapping error and to encourage young children with ASD to continue with the intervention, we relaxed these ranges by 15° on each side. Similarly, the range of βg was [−26.3°, 12.5°] from top to bottom, which covered an additional 43 cm (the height of the monitor) beyond the monitor’s top and bottom edges. Therefore, if αg ∈ [−85°, −35°] and βg ∈ [−26.3°, 12.5°], the system inferred that the participant responded to the left monitor. Similar ranges were applied for the right monitor. On average, the whole gaze tracking module refreshed at about 15 fps. Note that although this gaze tracking method was developed for NORRIS, it is an independent component that can be readily applied to other scenarios where wide-range gaze tracking is needed.
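The target decision described above reduces to two interval checks. The sketch below uses the relaxed ranges quoted in the text for the left monitor; for the right monitor it assumes the mirror-image range [35°, 85°] with the same βg range, since the text only says that similar ranges were applied. Closed interval boundaries and the helper names are assumptions.

```python
from typing import Optional

LEFT_ALPHA = (-85.0, -35.0)   # [-70, -50] relaxed by 15 degrees on each side
RIGHT_ALPHA = (35.0, 85.0)    # assumed mirror image of the left range
BETA_RANGE = (-26.3, 12.5)    # from top to bottom of the extended monitor region


def vertical_gaze(beta_f: float, beta_baseline: float) -> float:
    """beta_g = beta_f - beta_baseline, with beta_baseline calibrated per participant."""
    return beta_f - beta_baseline


def classify_gaze(alpha_g: float, beta_g: float) -> Optional[str]:
    """Return 'left', 'right', or None if the gaze falls on neither monitor region."""
    if not BETA_RANGE[0] <= beta_g <= BETA_RANGE[1]:
        return None
    if LEFT_ALPHA[0] <= alpha_g <= LEFT_ALPHA[1]:
        return "left"
    if RIGHT_ALPHA[0] <= alpha_g <= RIGHT_ALPHA[1]:
        return "right"
    return None


# A participant with beta_baseline = 8.14 deg whose head pitch reads 5 deg, gazing 60 deg right.
print(classify_gaze(60.0, vertical_gaze(5.0, 8.14)))  # prints right
```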

4) Supervisory controller

The supervisory controller communicated with the other system components and controlled the global logic of the interaction. Communication was implemented with TCP/IP sockets. The average time from sending a message to its receipt between the supervisory controller and a system component was about 25 ms, which was sufficient for real-time closed-loop interaction. The global interaction logic, which we call the interaction protocol, is discussed in detail in Section III.
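The text states only that TCP/IP sockets were used and that a message took about 25 ms on average. As a purely illustrative sketch, the exchange below assumes newline-terminated text commands answered by a one-line acknowledgement; the command string `TURN_HEAD LEFT`, the `ACK` reply format, and the helper names are hypothetical and do not describe the NORRIS message protocol. Both ends run on the loopback interface so that the example is self-contained.

```python
import socket
import threading
import time
from typing import Tuple


def component_server(server_sock: socket.socket) -> None:
    """Hypothetical system component: read one newline-terminated command and acknowledge it."""
    conn, _ = server_sock.accept()
    with conn:
        data = b""
        while not data.endswith(b"\n"):
            chunk = conn.recv(1024)
            if not chunk:
                return
            data += chunk
        conn.sendall(f"ACK {data.decode().strip()}\n".encode())


def send_command(host: str, port: int, command: str) -> Tuple[str, float]:
    """Send one command; return (reply, round-trip time in ms including connection setup)."""
    start = time.perf_counter()
    with socket.create_connection((host, port)) as sock:
        sock.sendall((command + "\n").encode())
        reply = b""
        while not reply.endswith(b"\n"):
            chunk = sock.recv(1024)
            if not chunk:
                break
            reply += chunk
    return reply.decode().strip(), (time.perf_counter() - start) * 1000.0


server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))  # let the OS choose a free port
server.listen(1)
port = server.getsockname()[1]
worker = threading.Thread(target=component_server, args=(server,))
worker.start()
reply, rtt_ms = send_command("127.0.0.1", port, "TURN_HEAD LEFT")
worker.join()
server.close()
print(reply, f"{rtt_ms:.2f} ms")
```

A real controller would more likely keep one long-lived connection per component rather than reconnecting for every message, so the timing here is only indicative.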

III. LTM Interaction Protocol

The Least-to-Most (LTM) hierarchy was applied in NORRIS to form the interaction protocol. LTM has been widely applied in diagnostic and screening tools for children with ASD [27, 28]. In LTM, the teacher allows the learner an opportunity to respond independently on each training stage and delivers the least intrusive prompt first. If necessary, more intrusive prompts, usually upgraded based on the previous prompts, are then delivered to the learner to complete each training procedure [26]. Essentially, LTM provides support to the learner only when needed.

LTM has been applied in a few important robot-mediated intervention systems for children with ASD. Feil-Seifer et al. [33] and Greczek et al. [11] introduced a graded cueing feedback mechanism to teach imitation skills to children with ASD. In this mechanism, higher-level prompts were built on the initial prompt by adding verbal and gestural hints to help children copy gestures. Huskens et al. [34] used a robot to prompt question-asking behaviors in children with ASD. The robot initially used an open-question prompt. If the participants did not respond correctly, the robot added more hints (e.g., part of the correct response) in the following prompts. Zheng et al. [35] designed a robot-mediated imitation learning system using a prompting protocol to help correct inaccurate imitation. The robot first showed a gesture to the participant and asked him/her to copy it. If the child could not do it correctly, the robot pointed out what to improve in the following prompts. Bekele et al. [22] developed the ARIA system to teach joint attention skills to children with ASD. If the participants did not respond to simple directional prompts given by a robot, higher levels of prompts with additional visual and verbal directional hints were provided. Kim et al. [36] designed a robot-assisted pivotal response training platform, in which higher-level prompts were built by adding target-response hints to lower-level prompts.

These examples show that LTM is not limited to a specific skill but can serve as a general guidance mechanism for robot-mediated intervention. However, to our knowledge, no mathematical model has been proposed to create a general LTM framework. In this work, we attempt for the first time to develop a general LTM-based Robot-mediated Intervention (LTM-RI) model. Such a model can be used to teach different skills to children with ASD as well as to adapt the prompts for a specific skill. We then instantiate the model for joint attention intervention, which is used in the user study.

A. LTM-RI model

An intervention system uses prompts to teach a skill to children with ASD. These prompts may consist of robot actions (e.g., motions and speech) and may also include environmental factors coordinated with the robot’s actions (e.g., the attentional target that the robot refers to). Given libraries of robot actions {RA} and environmental factors {EF}, we can combine different RAi and EFj to form different prompts. These combinations may differ in their strength in eliciting the expected response, ExpResp (e.g., the child looking at the target monitor), from a child. For example, in the current joint attention study, the robot turning its head (RA) toward a static picture displayed on the TV (EF) might have a weaker impact on the children than the robot pointing (RA) to a cartoon video displayed on the monitor (EF). We order the elements of {RA} and {EF} as follows: RAa is stronger (includes more instructive information) than RAb if a > b, and EFc is stronger than EFd if c > d. These orders can be determined from common sense and clinical experience.

LTM-RI starts by presenting the weakest combination of RA and EF as the least intrusive prompt. If this does not elicit ExpResp, stronger RAs and EFs are provided iteratively to form more instructive prompts until the end of an intervention trial. We formally define this iterative procedure as follows.

LTM-RI Model

Step 1: Initial prompt (prompt level = 1).
    Behavior(1) = BF(RobAction(1), EnviFactor(1))
    Resp(1) = ICD(Behavior(1))
    If Resp(1) = ExpResp:
        Reward
        Go to Step 3
Step 2: Iterative prompting loop.
    For prompt level n = 2 to IN:
        [RobAction(n), EnviFactor(n)] = PF(Resp(n−1))
        Behavior(n) = BF(RobAction(n), EnviFactor(n))
        Resp(n) = ICD(Behavior(n))
        If Resp(n) = ExpResp:
            Reward
            Break
        n = n + 1
Step 3: Termination.
    Robot naturally stops the interaction

Here BF is an implicit function that describes the participant’s behavior (e.g., the participant’s gaze direction) given RA (RobAction) and EF (EnviFactor). This behavior is sensed by the interactive cue detection function (ICD) to determine whether it is ExpResp. In the current study, ICD is the gaze tracking module. In the simplest scenario, responses fall into two categories: Resp(n) = ExpResp and Resp(n) ≠ ExpResp. PF is the prompting function, which decides which RA and EF to present if the previous response was not ExpResp; in effect, PF is a sorted list of prompt levels following the LTM hierarchy. We want to identify the lowest level of support the participant needs to perform ExpResp. If Resp(n−1) ≠ ExpResp (the detected response was not the expected response), given RobAction(n−1) = RAi (the robot action at prompt level n−1) and EnviFactor(n−1) = EFj (the environmental factor at prompt level n−1), we choose the robot action for the next level, RobAction(n) = RAl (l ≥ i), from {RA}, and the environmental factor for the next level, EnviFactor(n) = EFk (k ≥ j), from {EF}, so that the next prompt either repeats the last prompt or is more instructive. The LTM-RI steps work as follows:

In Step 1, the participant’s baseline behavior, Behavior(1), is elicited by prompt level 1, which consists of the weakest RA (RobAction(1)) and EF (EnviFactor(1)). If the participant’s response, Resp(1), is ExpResp, higher prompts are not needed: the system gives a reward and then executes Step 3 to terminate the trial. Otherwise, Step 2 is executed.

In Step 2, prompt level 2 is given first. If the participant does not perform ExpResp, higher prompts are presented one by one up to level IN. During this iteration, the next prompt level (RobAction(n) and EnviFactor(n)) is formed from the participant’s previous response, Resp(n−1), according to PF. If Resp(n) = ExpResp, the system gives a reward to the participant and goes to Step 3.
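The three LTM-RI steps can be sketched as a single loop. This is a hedged illustration, not the NORRIS implementation: `prompt_function` stands in for PF, and `detect_expected_response` stands in for presenting the prompt, the participant’s behavior BF, and the cue detector ICD combined. Both are hypothetical callables supplied by the caller, as is the choice to leave the reward to the caller.

```python
from typing import Callable, List, Optional, Tuple

Prompt = Tuple[str, str]  # (robot action RA, environmental factor EF)


def ltm_ri_trial(
    prompt_function: Callable[[int, Optional[bool]], Prompt],
    detect_expected_response: Callable[[Prompt], bool],
    max_level: int,
) -> Tuple[Optional[int], List[Prompt]]:
    """Run one LTM-RI trial and return (level that elicited ExpResp or None, prompts shown).

    prompt_function(n, prev_resp) plays the role of PF: prev_resp is None at
    level 1 and otherwise records whether level n-1 elicited ExpResp.
    """
    shown: List[Prompt] = []
    prev_resp: Optional[bool] = None
    for n in range(1, max_level + 1):  # n = 1 is Step 1; n = 2..IN is Step 2
        prompt = prompt_function(n, prev_resp)
        shown.append(prompt)
        prev_resp = detect_expected_response(prompt)
        if prev_resp:
            return n, shown  # the caller delivers the reward, then Step 3
    return None, shown  # Step 3 after level IN without ExpResp


# Hypothetical three-level hierarchy and a simulated child who responds at level 3.
levels = [("RA1", "EF1"), ("RA1", "EF1"), ("RA2", "EF1")]
responses = iter([False, False, True])
level, shown = ltm_ri_trial(lambda n, prev: levels[n - 1], lambda p: next(responses), 3)
print(level, shown)
```

Passing the previous response into `prompt_function` keeps the door open for richer PFs that branch on more than two response categories, while the binary case used here simply steps down the list.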

If ExpResp occurs at prompt level n, levels 1 to n−1 must have been executed without eliciting ExpResp. Let ERn denote the event Resp(n) = ExpResp, and let PTx denote the event that prompt level x was executed but was not successful. Then P(ERn | PT1, …, PTn−1) is the probability that ExpResp occurs at prompt level n. To measure the impact of LTM-RI, we define an intensity function In as:

| (2) |

In represents the probability of ExpResp at or before prompt level n. The LTM-RI procedure has two goals:

Goal 1: Im > In, given m > n. This means adding prompt levels increases the probability of ExpResp.

Goal 2: In = 1 − ε for n = IN (the highest prompt level), where ε = 0 or ε is a small positive number. This means that, at the highest prompt level, the system can eventually elicit ExpResp with high probability.
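The definitions above also give a way to estimate In from trial data. Writing p_k = P(ERk | PT1, …, PTk−1), with p_1 = P(ER1), failing every level up to n has probability (1 − p_1)(1 − p_2)⋯(1 − p_n), so In = 1 − ∏_{k=1..n} (1 − p_k). This identity follows from the stated definitions and is offered as an illustration alongside Equation (2). The sketch below, with hypothetical names and made-up data, estimates In both directly (share of trials whose ExpResp came at level ≤ n) and through the per-level conditional probabilities; the two agree.

```python
from typing import List, Optional, Sequence


def intensity(success_levels: Sequence[Optional[int]], max_level: int) -> List[float]:
    """Empirical I_n for n = 1..max_level: share of trials whose ExpResp came at level <= n.

    success_levels[t] is the prompt level at which trial t elicited ExpResp,
    or None if no level did.
    """
    total = len(success_levels)
    return [sum(1 for s in success_levels if s is not None and s <= n) / total
            for n in range(1, max_level + 1)]


def intensity_from_conditionals(success_levels: Sequence[Optional[int]],
                                max_level: int) -> List[float]:
    """The same quantity via I_n = 1 - prod_k (1 - p_k), with p_k estimated per level."""
    result: List[float] = []
    still_failing = 1.0
    for k in range(1, max_level + 1):
        reached = [s for s in success_levels if s is None or s >= k]  # trials that got to level k
        p_k = sum(1 for s in reached if s == k) / len(reached) if reached else 0.0
        still_failing *= 1.0 - p_k
        result.append(1.0 - still_failing)
    return result


# Hypothetical session of 8 trials with IN = 6 (None = no ExpResp at any level).
trials = [1, 1, 2, 3, None, 6, 1, 2]
print(intensity(trials, 6))  # prints [0.375, 0.625, 0.75, 0.75, 0.75, 0.875]
```

In this made-up session I3 = I4 = I5, so levels 4 and 5 added nothing, which is exactly what Goal 1 (strictly increasing In) would flag.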

LTM-RI is thus a general model that is not limited to one particular skill. Which participant behaviors the model tracks depends on the design of ICD. The number of prompt levels and the content of the prompts can easily be adjusted within this framework by changing PF. In the current work, LTM-RI is instantiated for joint attention intervention as described below. While the specific content of the prompts in the six-level hierarchy was inherited from our previous studies [22, 23], we interpret it within the LTM-RI framework to demonstrate how to implement the model.

B. LTM-RI trial in the current study

We designed the NORRIS intervention trial based on LTM-RI. The RAs and EFs used are shown in Table I, which serves as the PF in LTM-RI. A larger subscript indicates stronger directional information.

Table I.

Prompt Levels

| Prompt level | Prompting elements |
|---|---|
| 1 and 2 | RA1 + EF1 |
| 3 and 4 | RA2 + EF1 |
| 5 | RA2 + EF2 |
| 6 | RA2 + EF3 |

Prompt elements (TM means target monitor):

| Element | Description |
|---|---|
| RA1 | Robot turned its head to the TM, saying “Look!” |
| RA2 | Robot turned its head and pointed its arm to the TM, saying “Look over there!” |
| EF1 | TM displayed a static picture. |
| EF2 | TM displayed an audio clip. |
| EF3 | TM displayed a video clip. |
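Because PF in this study is fixed by Table I, it can be encoded as a lookup table. The sketch below (with hypothetical names) also checks the LTM constraint stated for PF, namely that neither the RA index nor the EF index decreases from one prompt level to the next.

```python
from typing import Dict, Tuple

# Table I: prompt level -> (robot action, environmental factor).
PROMPT_TABLE: Dict[int, Tuple[str, str]] = {
    1: ("RA1", "EF1"), 2: ("RA1", "EF1"),
    3: ("RA2", "EF1"), 4: ("RA2", "EF1"),
    5: ("RA2", "EF2"),
    6: ("RA2", "EF3"),
}


def element_index(name: str) -> int:
    """'RA2' -> 2, 'EF3' -> 3 (a larger index means stronger directional information)."""
    return int(name[2:])


def is_least_to_most(table: Dict[int, Tuple[str, str]]) -> bool:
    """True if neither the RA index nor the EF index ever decreases as the level rises."""
    levels = sorted(table)
    for lower, higher in zip(levels, levels[1:]):
        (ra_lo, ef_lo), (ra_hi, ef_hi) = table[lower], table[higher]
        if element_index(ra_hi) < element_index(ra_lo) or element_index(ef_hi) < element_index(ef_lo):
            return False
    return True


print(PROMPT_TABLE[5], is_least_to_most(PROMPT_TABLE))  # prints ('RA2', 'EF2') True
```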

In Step 1 of LTM-RI (prompt level 1), the robot turned its head to the target monitor, saying “Look!” (RA1). At the same time, the monitor displayed a static picture (EF1).

In Step 2 of LTM-RI, IN = 6. Prompt level 2 was the same as prompt level 1. In prompt levels 3 and 4, the robot not only turned its head but also pointed its arm at the target monitor, saying “Look over there!” (RA2), while the monitor still displayed a static picture (EF1). In prompt levels 5 and 6, the robot action was kept as RA2, but the monitor displayed an audio clip (EF2) and a video clip (EF3), respectively. At any time during a trial, if the participant looked at the target (i.e., ExpResp occurred), the robot said “Good job!” and the target monitor displayed a cartoon video for 10 seconds as a reward. Otherwise, the prompt levels were presented one by one until prompt level 6 was completed.

Finally, Step 3 of LTM-RI was executed: the robot returned to its standing position, thanked the participant, and said goodbye.

This protocol closely aligns with standardized diagnostic assessment procedures for demarcating joint attention symptoms in young children with ASD (i.e., the Autism Diagnostic Observation Schedule–Second Edition [27]), while also taking the robot’s capabilities into account.

To implement LTM-RI within NORRIS, we modeled the LTM-RI trial with the standard Harel Statechart model [37], an extended state machine capable of modeling hierarchical and concurrent system states. As shown in Fig. 5, rectangles denote states. When an event occurs, a state transition takes place, which is indicated by a directed arrow. Solid rectangles mark exclusive-or (XOR) states, and dotted lines mark AND states. Encapsulation represents the hierarchy of the states. Within the same hierarchy (encapsulated by the same rectangle), the system must be in exactly one of its XOR states but in all of its AND states at once. Therefore, the AND states represent parallel processes in the system.

Fig. 5.

The Harel Statechart model of the NORRIS LTM-RI trial

The first hierarchy includes 4 XOR states:

At the beginning of a trial, the system is in the Initialization state, where the robot stands straight facing the participant. The system then transitions to the Execution state and initializes the variable p = 1.

The Execution state is the second hierarchy, which includes 3 AND states, showing target, robot, and gaze tracking modules running in parallel.

| (3) |

The third hierarchy controls the prompts:

| (4) |

| (5) |

The Target state includes 3 EFs, and the Robot state includes 2 RAs. p is used to select RAs and EFs to form different prompts. The Gaze tracking state controls the Tracking function only, which represents the gaze tracking module.

Two pure signals, “Time out (TO)” and “Target hit”, are used to change the prompts and terminate the LTM-RI trial. A pure signal is either absent (no event) or present (an event occurs) at any time t ∈ ℝ [38]. If the gaze tracking module detects a gaze direction towards the target monitor within 7 seconds from the beginning of a prompt, a “Target hit” event is generated, which triggers the state transition to Reward. If no target hit is detected, a TO event is generated. TO is combined with p to guide the transitions in the AND substates of Execution. Once a state transition is done, p is increased by 1 to mark the next prompt level. If prompt level 6 is completed without a “Target hit”, the system transitions to Termination.
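The top-level transitions described above can be simulated in a few lines. In this hedged sketch, the input is a hypothetical list giving, for each prompt level, the time in seconds after the prompt starts at which the gaze tracker first reports the target (None if never). The 7-second window and the 6 prompt levels come from the text; the `reward done` event that moves Reward to Termination and the function name are assumptions.

```python
from typing import List, Optional, Sequence, Tuple

Transition = Tuple[str, str, int]  # (event, next state, prompt level p)


def simulate_trial(first_hit_times: Sequence[Optional[float]],
                   window_s: float = 7.0, max_level: int = 6) -> List[Transition]:
    """Return the sequence of top-level transitions for one LTM-RI trial."""
    transitions: List[Transition] = [("start", "Execution", 1)]  # Initialization -> Execution, p = 1
    p = 1
    while True:
        hit = first_hit_times[p - 1] if p <= len(first_hit_times) else None
        if hit is not None and hit <= window_s:
            transitions.append(("Target hit", "Reward", p))
            transitions.append(("reward done", "Termination", p))
            return transitions
        if p == max_level:  # TO at the last prompt level ends the trial
            transitions.append(("Time out", "Termination", p))
            return transitions
        p += 1  # TO: move to the next prompt level
        transitions.append(("Time out", "Execution", p))


# No response at level 1, a late look (9 s) at level 2, a hit after 2.5 s at level 3.
print(simulate_trial([None, 9.0, 2.5]))
```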

IV. Experimental User Study

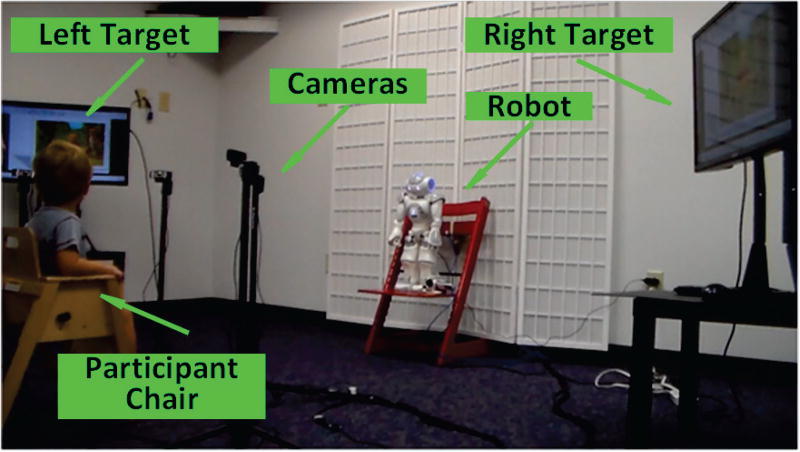

Fig. 6 shows the experiment room. The participant was seated in a wooden chair. The robot was placed in front of the participant, standing on a platform 32 cm above the floor. When the participant was seated, his/her eyes were approximately at the height of the robot’s face. The two monitors and the robot were all 2 meters away from the participant.

Fig. 6.

Experiment room configuration

A. Participants

NORRIS was tested with 14 children with ASD (12 males and 2 females; 12 Caucasian, 1 African American, and 1 Asian). They were recruited from a research registry of the Vanderbilt Kennedy Center, and the study was approved by the Vanderbilt University Institutional Review Board. The participants had been diagnosed with ASD by an expert clinician based on DSM-5 [3] criteria, on average 9.6 (SD: 5.9) months prior to enrollment in this study. Table II lists the statistical characteristics of the participants; the characteristics of individual participants are listed in the Appendix. Gold-standard diagnostic instruments (i.e., the Autism Diagnostic Observation Schedule–Second Edition (ADOS-2) [27] and the Mullen Scales of Early Learning [39]) were administered at that time, and the participants met the spectrum cut-off on the ADOS-2. We use the Early Learning Composite derived from the Mullen Scales of Early Learning as an index of current Intelligence Quotient (marked as IQ in Table II). Parents of these children also completed the Social Responsiveness Scale–Second Edition (SRS-2) [40] and the Social Communication Questionnaire Lifetime Total Score (SCQ) [41] to index current ASD symptoms.

Table II.

Statistical Characteristics of Participants

| | ADOS-2 Raw Score | IQ | SCQ | SRS-2 T score | Age at enrollment (years) |
|---|---|---|---|---|---|
| Avg | 21.29 | 54.71 | 14.86 | 63.36 | 2.78 |
| SD | 4.61 | 8.17 | 5.56 | 8.63 | 0.65 |

B. Experimental procedure and measurements

Four sessions were held on different days for each participant. On average, completing all four sessions took 27 days. Each session involved 8 repeated LTM-RI trials as introduced in Section III.B. The left or the right monitor was randomly assigned as the target for each trial.

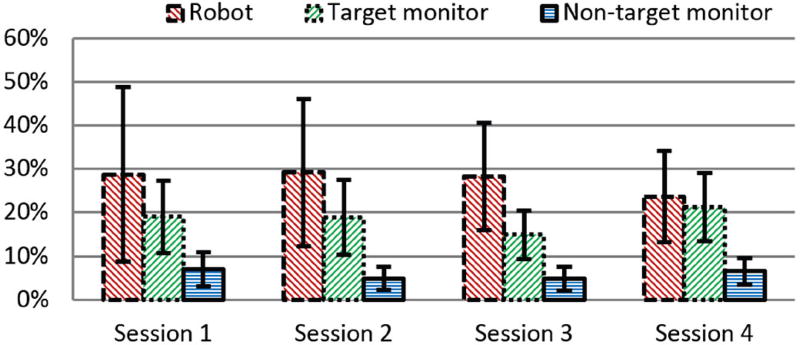

1) Preferential attention

First, we evaluated the participants’ attention to the robot and the monitors as a measure of their engagement. A region of interest (ROI) was defined for each object, covering the object plus a 20 cm margin around it, and we analyzed how the participants’ attention was distributed among the robot and the two monitors. We anticipated that participants would: 1) pay significant attention to the robot, because the robot was the main interactive agent; and 2) pay more attention to the target monitor than to the non-target monitor, because the robot referred to the target monitor and the target monitor displayed visual stimuli. We also tracked the change in the participants’ attention to the robot over the 4 sessions: if their interest in the robot was sustained, we expected the time they spent looking at the robot not to change significantly.
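The ROI analysis can be illustrated with a minimal sketch. It assumes, for illustration only, that each gaze estimate has already been projected to a point on a common 2D plane in centimeters, that each object is approximated by an axis-aligned box on that plane, that ROIs do not overlap, and that gaze is sampled at a constant rate (so the share of samples equals the share of session time). `Box`, `classify`, and `attention_percentages` are hypothetical names, and the layout values are made up.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional, Tuple

MARGIN_CM = 20.0  # ROI margin around each object, as in the text


@dataclass
class Box:
    """Axis-aligned extent of an object on a shared 2D plane, in cm."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float

    def contains(self, x: float, y: float, margin: float = MARGIN_CM) -> bool:
        # The ROI is the object's box grown by `margin` on every side.
        return (self.x_min - margin <= x <= self.x_max + margin
                and self.y_min - margin <= y <= self.y_max + margin)


def classify(point: Tuple[float, float], rois: Dict[str, Box]) -> Optional[str]:
    """Return the first ROI containing the gaze point (ROIs assumed disjoint)."""
    for name, box in rois.items():
        if box.contains(*point):
            return name
    return None


def attention_percentages(gaze: Iterable[Tuple[float, float]],
                          rois: Dict[str, Box]) -> Dict[str, float]:
    """Percentage of gaze samples that fall inside each ROI."""
    counts = {name: 0 for name in rois}
    total = 0
    for point in gaze:
        total += 1
        hit = classify(point, rois)
        if hit is not None:
            counts[hit] += 1
    return {name: 100.0 * c / total if total else 0.0 for name, c in counts.items()}


# Hypothetical layout (cm); not the measured room geometry.
rois = {
    "robot": Box(-30, 30, 0, 60),
    "left_monitor": Box(-200, -140, 0, 40),
    "right_monitor": Box(140, 200, 0, 40),
}
print(attention_percentages([(0, 30), (-150, 10), (10, 75), (300, 0)], rois))
```

Samples outside every ROI (such as the last toy point) still count toward the session total, which is why the three object percentages in the text sum to less than 100%.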

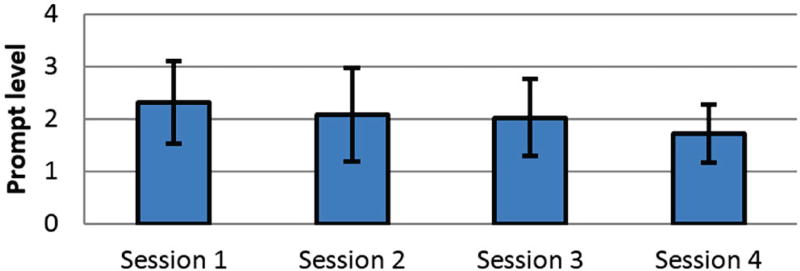

2) Joint attention performance

Second, we evaluated the participants’ joint attention performance, which reflected the effectiveness of the system. For each session, we computed: i) the number of trials in which the participants successfully hit the target; and ii) the average prompt level the participants needed to hit the target. We anticipated that the participants’ performance would improve significantly if the robotic intervention was effective. In addition, we computed the intensity of each prompt level (defined in equation (2)) within each session. If the participants’ joint attention skills improved, we would expect to see higher intensity values at low prompt levels than at high prompt levels.
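Both per-session measures can be computed from a simple per-trial log. In the sketch below, each trial is recorded (hypothetically) as the prompt level at which the target was hit, or `None` if no level succeeded. Averaging only over trials that ended in a target hit is an assumption of this sketch, since the text does not say how unsuccessful trials entered the average; `session_performance` is an illustrative name.

```python
from typing import Dict, List, Optional


def session_performance(hit_levels: List[Optional[int]]) -> Dict[str, float]:
    """Summarize one session of LTM-RI trials.

    hit_levels[i] is the prompt level (1 = least supportive) at which
    trial i ended in a target hit, or None if no prompt level succeeded.
    """
    hits = [level for level in hit_levels if level is not None]
    return {
        "target_hits": len(hits),
        # Assumption: average only over trials that ended in a target hit.
        "avg_hit_prompt_level": sum(hits) / len(hits) if hits else float("nan"),
    }


# Toy session of 8 trials (illustrative values, not study data).
print(session_performance([1, 3, 2, None, 1, 2, 4, 1]))
# {'target_hits': 7, 'avg_hit_prompt_level': 2.0}
```

A lower average prompt level means the child needed less support, which is why a decrease across sessions is read as improvement.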

C. User Study Results

All 14 participants completed all 4 sessions, a completion rate of 100%. This compares favorably with other technology-assisted studies [22, 42]. We used the Wilcoxon signed-rank test for the statistical analyses.
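Because every child completed every session, comparisons between two sessions are paired, with one value per participant. The text does not state whether exact or normal-approximation p-values were used, so the sketch below simply illustrates the exact two-sided version: zero differences are dropped, tied absolute differences receive their average rank, and the null distribution of W+ is obtained by enumerating all 2^n sign patterns (16,384 patterns for n = 14). SciPy’s `scipy.stats.wilcoxon` provides this test in practice; the hand-written version only makes the computation visible, and its function names are illustrative.

```python
from itertools import product
from typing import List, Sequence, Tuple


def signed_ranks(diffs: Sequence[float]) -> List[float]:
    """Rank |d| ascending (1-based), giving tied values their average rank."""
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of equal absolute differences.
        while j + 1 < len(order) and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return ranks


def wilcoxon_signed_rank(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Exact two-sided Wilcoxon signed-rank test for paired samples.

    Returns (W+, p). Zero differences are discarded before ranking.
    """
    if len(x) != len(y):
        raise ValueError("paired samples must have equal length")
    diffs = [a - b for a, b in zip(x, y) if a != b]
    if not diffs:
        raise ValueError("all differences are zero")
    ranks = signed_ranks(diffs)
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    center = sum(ranks) / 2  # mean of W+ under the null hypothesis
    observed = abs(w_plus - center)
    # Under H0 each rank is equally likely to carry a + or - sign,
    # so enumerate every sign pattern and count those at least as extreme.
    extreme = 0
    for signs in product((False, True), repeat=len(ranks)):
        w = sum(r for r, positive in zip(ranks, signs) if positive)
        if abs(w - center) >= observed - 1e-9:
            extreme += 1
    return w_plus, extreme / 2 ** len(ranks)


# Toy example (not study data): five participants all improve.
print(wilcoxon_signed_rank([2, 3, 4, 5, 6], [1, 1, 1, 1, 1]))  # (15.0, 0.0625)
```

With n paired values that all move in the same direction, the smallest attainable exact two-sided p-value is 2/2^n, so small samples cap how low p can go.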

1) Preferential attention

On average, in sessions 1 through 4, the participants spent 54.84%, 52.93%, 47.97%, and 51.58% of the session duration, respectively, looking at the main objects (i.e., the robot, the target monitor, and the non-target monitor). Fig. 7 shows the percentage of session duration that the participants spent looking at each of the main objects across the sessions. In sessions 1 to 4, the participants spent on average 28.76%, 29.17%, 28.23%, and 23.69% of the session duration looking at the robot, and 19.04%, 18.85%, 14.91%, and 21.31% looking at the target monitor, respectively. Thus, the participants looked at the robot more than at either monitor in every session. As expected, they also looked at the target monitor much more than at the non-target monitor, on which they spent only small portions of the session duration (7.05%, 4.92%, 4.83%, and 6.58% in sessions 1 to 4, respectively). We believe the main reasons were that: 1) the robot referred to the target monitor, and the participants responded to the referred direction; and 2) the target monitor displayed visual stimuli during prompts and rewards, which caught and held the participants’ attention.

Fig. 7.

Percentage of the session time participants spent looking at the robot, target monitor, and the non-target monitor

We compared the time that the participants spent looking at the robot between sessions and found no statistically significant change (p ranged from .1937 to .9515). This result suggests that the participants’ interest in the robot held over the course of the sessions. Changes in attention time on the target monitor were also not statistically significant, except between sessions 2 and 3 (p = .0203) and between sessions 3 and 4 (p = .0009). Each session presented different sets of static pictures, audio clips, and video clips in the prompts, and we noticed that the participants preferred certain stimuli (e.g., one participant liked “Scooby Doo” more than “Dora”). The fluctuation in attention time on the target monitor might therefore be attributable to the change of stimuli. In addition, attention time on the target monitor was statistically significantly higher than on the non-target monitor in all 4 sessions (p ranged from .0001 to .0006).

In summary, these results indicated that: 1) among the three main objects, the participants paid most of their attention to the robot; 2) the participants’ initial interest in the robot was maintained across the sessions; and 3) they paid significantly more attention to the target monitor than to the non-target monitor. Because children with ASD have heterogeneous neurodevelopmental trajectories and behavioral patterns, the participants showed quite different attention patterns and joint attention capabilities, which is reflected in the large standard deviations in Fig. 7. The standard deviation of the looking time on the robot decreased from session 1 to session 4, suggesting that the participants’ looking toward the robot became more stable after a few sessions of intervention. This pattern was not observed for the two monitors.

2) Joint attention performance

Fig. 8 shows the average prompt level participants needed to hit the target; the lower the prompt level needed, the better the performance. From session 1 to session 4, the average target-hit prompt level decreased monotonically from 2.31 to 1.71. A Wilcoxon signed-rank test showed that the decrease from session 1 to session 4 was statistically significant (p = .0115), indicating that the participants’ performance improved significantly.

Fig. 8.

Average target hit prompt levels in all sessions

We further evaluated how the escalating LTM prompt levels elicited target-hit behavior, i.e., whether the two goals discussed in Section III.A were achieved. The computation is as follows:

| (6) |

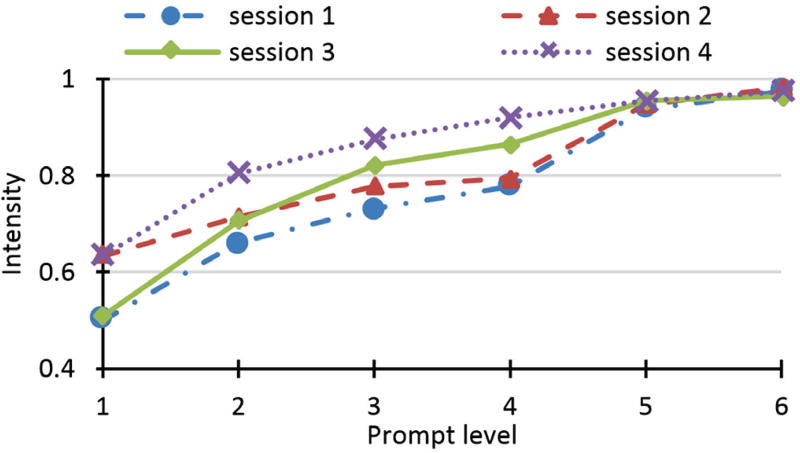

The intensity of prompt level $n$ can then be computed according to equation (2). We denote the intensity of prompt level $n$ in session $x$ as $I_n^x$. Fig. 9 shows the values of $I_n^x$ in sessions 1 to 4.

Fig. 9.

Intensity of prompt levels across 4 sessions

We observe that, in all 4 sessions, a higher prompt level had a higher intensity than a lower one. This indicates that adding more instructive prompt levels on top of the lower levels elicited more target hits; therefore, Goal 1 was achieved. Fig. 9 also shows that, for the same prompt level, the intensity in a later session was higher than or close to that in an earlier session in most cases. The only exception was one prompt level whose intensity in an earlier session was apparently higher than in a later session. In general, then, as the participants received more intervention in later sessions, their chance of hitting the target at the same prompt level increased, with occasional fluctuations.

The intensities of the highest prompt level (level 6) in sessions 1 to 4 were 0.97, 0.98, 0.96, and 0.97, respectively. We therefore conclude that the LTM-RI trial could eventually help participants hit the target in almost all trials, and that the prompt content and the number of prompt levels (six) were properly designed. Therefore, Goal 2 was also achieved.

We also recorded subjective observations of the children’s responses and feedback during the interaction with the robot. Given the large heterogeneity of the autism spectrum, the participants showed a wide range of behaviors. In most sessions, participants actively responded to the robot, sometimes even trying to talk to the robot and imitate its gestures and speech. In other sessions, participants responded to the robot quietly, and in some sessions participants seemed tired and not very interested in the interaction.

V. Conclusion and Discussion

In this paper, we introduced NORRIS, a new non-invasive, autonomous robot-mediated joint attention intervention system in which a humanoid robot serves as the intervention administrator. The participants’ looking behavior in response to robot prompts was detected using a new non-contact gaze tracking method that can track gaze direction in real time over a wide range. The prompts were designed and implemented based on the proposed Least-to-Most Robot-mediated Intervention (LTM-RI) prompting hierarchy, a general model of robot-mediated intervention for children with ASD.

NORRIS was validated through a 4-session study. Fourteen children with ASD were recruited, and all of them completed all the sessions. We measured their preferential attention toward the robot, the target monitor, and the non-target monitor. The participants looked at the robot longer than at the other objects, and this interest did not change significantly over the sessions. As expected, they paid significantly more attention to the target monitor than to the non-target monitor in all sessions. We also evaluated their joint attention performance, which improved significantly over the 4 intervention sessions. The results also supported the effectiveness of the LTM-RI model: the higher the prompt level, the higher the probability that the participants hit the target, and the participants eventually hit the target in almost all trials.

In summary, this study makes two major contributions: 1) the design and development of a new joint attention intervention system built on the LTM-RI model; and 2) a user study with repeated observations that validated the effectiveness of NORRIS and LTM-RI.

However, the current study also has limitations that need to be addressed in future work. First, NORRIS was validated with only a small group of children with ASD; to thoroughly evaluate its efficacy, it needs to be tested in formal clinical studies. Second, although we observed promising improvements in joint attention skills within the system, we did not systematically compare these improvements with those of other methods. Third, the current study did not investigate whether such training generalizes to other interactions, especially human-human interactions. To address this issue, pre- and post-tests should be conducted to observe how the joint attention behaviors of children with ASD change before and after using robot-mediated intervention systems. One possible approach is to video-record children with ASD as they interact with other people and to code their joint attention behaviors afterward. Fourth, the current study repeated a straightforward LTM-RI procedure over a limited number of sessions. Using the same interaction content repeatedly is likely to eventually cause a ceiling effect (e.g., participants hitting the target at the first prompt in most trials) and/or loss of interest (e.g., participants tiring of doing the same intervention again and again) after many sessions. Once these issues are detected, the system will need to switch to new interaction content under the same protocol, or to an entirely new protocol, to update and reinforce the training. Future robot-mediated work would benefit from exploring different combinations of approaches within protocols, such as splitting the cueing hierarchy into a stage with only nonverbal components followed by a stage with both nonverbal and verbal components. Finally, LTM-RI was proposed as a general interaction model for implementing robot-mediated interventions for children with ASD. Although the current study successfully validated LTM-RI for joint attention, we did not test it thoroughly in other types of training, so the eventual value of LTM-RI will need to be verified through other interventions.

Despite these limitations, to our knowledge this work is the first to design and empirically evaluate the usability and feasibility of a non-invasive, fully autonomous robot-mediated joint attention intervention system over repeated observations. The preliminary results are promising. The NORRIS system architecture, its components, and the LTM-RI protocol can be adapted to address other core deficits in ASD (e.g., social orienting and response to name), so this work also provides a framework for designing and implementing effective robot-mediated intervention systems in general. We do not propose this technology as a replacement for existing comprehensive behavioral intervention and care for young children with ASD. Instead, this platform represents a meaningful step toward realistic deployment of technology capable of accelerating and priming a child’s learning in key areas of deficit. While much work remains, we hope that such systems will be deployed in schools and clinics for further validation and eventually be used as an effective and convenient tool for educators and psychologists.

Acknowledgments

This work was supported in part by a grant from the Vanderbilt Kennedy Center (Hobbs Grant), the National Science Foundation under Grant 1264462, and the National Institutes of Health under Grants 1R01MH091102-01A1 and 1R21MH103518-01. This work also received core support from NICHD (P30HD15052) and NCATS (UL1TR000445-06).

Biographies

Zhi Zheng (S’09-M’16) received the Ph.D. degree in Electrical Engineering from Vanderbilt University, Nashville, TN, USA, in 2016. She was a Research Assistant Professor of Electrical Engineering at Michigan Technological University, Houghton, MI, USA, from Sep. 2016 to Aug. 2017. She then joined the University of Wisconsin-Milwaukee, Milwaukee, WI, USA, as an Assistant Professor of Biomedical Engineering.

Her research interests include human-machine interaction and human-centered computing.

Huan Zhao received the B.S. degree in Automation from Xi’an Jiaotong University, Xi’an, China, in 2012, and the M.S. degree in Electrical Engineering from Vanderbilt University, Nashville, TN, USA, in 2016. From 2012 to 2014, she conducted research at the Institute of Artificial Intelligence and Robotics, Xi’an Jiaotong University. She is currently pursuing the Ph.D. degree in Electrical Engineering at Vanderbilt University.

Her research focuses on the design and development of systems for people with special needs using virtual reality, human-machine interaction, and robotics.

Amy R. Swanson received the M.A. degree in social science from University of Chicago, Chicago, IL, USA, in 2006.

Currently she is a Research Analyst at Vanderbilt Kennedy Center’s Treatment and Research Institute for Autism Spectrum Disorders, Nashville, TN, USA.

Amy S. Weitlauf received the Ph.D. degree in Psychology from Vanderbilt University, Nashville, TN, USA, in 2011.

She completed her pre-doctoral internship at the University of North Carolina, Chapel Hill. She then returned to Vanderbilt, first as a postdoctoral fellow and now as an Assistant Professor of Pediatrics at Vanderbilt University Medical Center. She is also a clinical psychologist.

Zachary E. Warren received the Ph.D. degree in Clinical Psychology from the University of Miami, Miami, FL, USA, in 2005.

Currently he is an Associate Professor of Pediatrics and Psychiatry at Vanderbilt University. He is the Director of the Treatment and Research Institute for Autism Spectrum Disorders at the Vanderbilt Kennedy Center.

Nilanjan Sarkar (S’92–M’93–SM’04) received the Ph.D. degree in Mechanical Engineering and Applied Mechanics from the University of Pennsylvania, Philadelphia, PA, USA, in 1993.

In 2000, Dr. Sarkar joined Vanderbilt University, Nashville, TN, where he is currently a Professor of Mechanical Engineering and Electrical Engineering and Computer Science. His current research interests include human–robot interaction, affective computing, dynamics, and control. Dr. Sarkar is a Fellow of the ASME and a Senior Member of the IEEE.

Appendix

The characteristics of individual participants are shown in Table III as follows.

Table III.

Individual Characteristics of Participants

| Participant | ADOS-2 Raw Score | IQ | SCQ | SRS-2 T score | Age at enrollment (years) | Gender | Race |
|---|---|---|---|---|---|---|---|
| 1 | 26 | 49 | 18 | 67 | 2.66 | M | White |
| 2 | 22 | 67 | 19 | 75 | 2.82 | M | African American |
| 3 | 22 | 49 | 14 | 60 | 2.45 | M | White |
| 4 | 26 | 49 | 23 | 72 | 2.95 | M | White |
| 5 | 24 | 49 | 15 | 62 | 2.58 | M | White |
| 6 | 24 | 50 | 14 | 61 | 2.64 | M | White |
| 7 | 14 | 59 | 13 | 57 | 2.59 | M | White |
| 8 | 18 | 54 | 15 | 68 | 2.79 | M | White |
| 9 | 21 | 52 | 12 | 61 | 3.06 | M | White |
| 10 | 26 | 49 | 16 | 68 | 4.53 | F | Asian |
| 11 | 27 | 55 | 25 | 75 | 3.53 | M | White |
| 12 | 15 | 59 | 3 | 43 | 2.26 | F | White |
| 13 | 14 | 49 | 8 | 54 | 2.26 | M | White |
| 14 | 19 | 76 | 13 | 64 | 1.78 | M | White |

Contributor Information

Zhi Zheng, Biomedical Engineering Department, University of Wisconsin-Milwaukee, Milwaukee, WI 53212 USA.

Huan Zhao, Electrical Engineering and Computer Science Department, Vanderbilt University, Nashville, TN 37212 USA.

Amy R. Swanson, Treatment and Research in Autism Disorder, Vanderbilt University Kennedy Center, Nashville, TN 37203 USA

Amy S. Weitlauf, Treatment and Research in Autism Disorder, Vanderbilt University Kennedy Center, Nashville, TN 37203 USA

Zachary E. Warren, Treatment and Research in Autism Disorder, Vanderbilt University Kennedy Center, Nashville, TN 37203 USA

Nilanjan Sarkar, Mechanical Engineering Department, Vanderbilt University, Nashville, TN 37212 USA.

References

- 1. Cabibihan J-J, Javed H, Ang M, Jr, Aljunied SM. Why robots? A survey on the roles and benefits of social robots in the therapy of children with autism. International journal of social robotics. 2013;5:593–618.

- 2. Christensen DL, Baio J, Braun KVN, Bilder D, Charles J, Constantino JN, et al. Prevalence and Characteristics of Autism Spectrum Disorder Among Children Aged 8 Years--Autism and Developmental Disabilities Monitoring Network, 11 Sites, United States, 2012. MMWR Surveill Summ. 2016 Apr;65(SS-3):1–23. doi: 10.15585/mmwr.ss6503a1.

- 3. American Psychiatric Association. The Diagnostic and Statistical Manual of Mental Disorders: DSM 5. 2013. doi: 10.1590/s2317-17822013000200017. bookpointUS.

- 4. Robins B, Dautenhahn K, Dubowski J. Does appearance matter in the interaction of children with autism with a humanoid robot? Interaction Studies. 2006;7:509–542.

- 5. Kim ES-W. Computer Science Ph.D. Dissertation. Connecticut, United States: Yale University; 2013. Robots for social skills therapy in autism: Evidence and designs toward clinical utility.

- 6. Zheng Z, Das S, Young EM, Swanson A, Warren Z, Sarkar N. Autonomous Robot-mediated Imitation Learning for Children with Autism; Robotics and Automation (ICRA), 2014 IEEE International Conference on; 2014. pp. 2707–2712.

- 7. Warren ZE, Zheng Z, Swanson AR, Bekele E, Zhang L, Crittendon JA, et al. Can Robotic Interaction Improve Joint Attention Skills? Journal of Autism and Developmental Disorders. 2015;45:3726–3734. doi: 10.1007/s10803-013-1918-4.

- 8. Pennisi P, Tonacci A, Tartarisco G, Billeci L, Ruta L, Gangemi S, et al. Autism and social robotics: A systematic review. Autism Research. 2015. doi: 10.1002/aur.1527.

- 9. Kozima H, Michalowski MP, Nakagawa C. Keepon. International Journal of Social Robotics. 2009;1:3–18.

- 10. Kim ES, Berkovits LD, Bernier EP, Leyzberg D, Shic F, Paul R, et al. Social robots as embedded reinforcers of social behavior in children with autism. Journal of autism and developmental disorders. 2013;43:1038–1049. doi: 10.1007/s10803-012-1645-2.

- 11. Greczek J, Kaszubski E, Atrash A, Matarić MJ. Graded Cueing Feedback in Robot-Mediated Imitation Practice for Children with Autism Spectrum Disorders. Proceedings, 23rd IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN 2014); Edinburgh, Scotland, UK. 2014 Aug.

- 12. Dautenhahn K, Nehaniv CL, Walters ML, Robins B, Kose-Bagci H, Mirza NA, et al. KASPAR–a minimally expressive humanoid robot for human–robot interaction research. Applied Bionics and Biomechanics. 2009;6:369–397.

- 13. Kozima H, Nakagawa C, Yasuda Y. Children–robot interaction: a pilot study in autism therapy. Progress in Brain Research. 2007;164:385–400. doi: 10.1016/S0079-6123(07)64021-7.

- 14. Wainer J, Robins B, Amirabdollahian F, Dautenhahn K. Using the humanoid robot KASPAR to autonomously play triadic games and facilitate collaborative play among children with autism. Autonomous Mental Development, IEEE Transactions on. 2014;6:183–199.

- 15. Robins B, Dautenhahn K. Social Robotics. Springer; 2010. Developing play scenarios for tactile interaction with a humanoid robot: a case study exploration with children with autism; pp. 243–252.

- 16. Feil-Seifer D, Matarić MJ. Toward socially assistive robotics for augmenting interventions for children with autism spectrum disorders. Experimental robotics. 2009:201–210.

- 17. Warren ZE, Stone WL. Best practices: Early diagnosis and psychological assessment. In: Amaral David, Geschwind Daniel, Dawson G., editors. Autism Spectrum Disorders. New York: Oxford University Press; 2011. pp. 1271–1282.

- 18. Mundy P, Block J, Delgado C, Pomares Y, Van Hecke AV, Parlade MV. Individual differences and the development of joint attention in infancy. Child development. 2007;78:938–954. doi: 10.1111/j.1467-8624.2007.01042.x.

- 19. Mundy P, Sigman M, Kasari C. A longitudinal study of joint attention and language development in autistic children. Journal of autism and developmental disorders. 1990;20:115–128. doi: 10.1007/BF02206861.

- 20. Adamson LB, Bakeman R, Deckner DF, Romski M. Joint engagement and the emergence of language in children with autism and Down syndrome. Journal of autism and developmental disorders. 2009;39:84. doi: 10.1007/s10803-008-0601-7.

- 21. Sigman M, Ruskin E, Arbelle S, Corona R, Dissanayake C, Espinosa M, et al. Continuity and change in the social competence of children with autism, Down syndrome, and developmental delays. Monographs of the society for research in child development. 1999:i-139. doi: 10.1111/1540-5834.00002.

- 22. Bekele ET, Lahiri U, Swanson AR, Crittendon JA, Warren ZE, Sarkar N. A step towards developing adaptive robot-mediated intervention architecture (ARIA) for children with autism. Neural Systems and Rehabilitation Engineering, IEEE Transactions on. 2013;21:289–299. doi: 10.1109/TNSRE.2012.2230188.

- 23. Zheng Z, Zhang L, Bekele E, Swanson A, Crittendon J, Warren Z, et al. Impact of Robot-mediated Interaction System on Joint Attention Skills for Children with Autism; Rehabilitation Robotics (ICORR), 2013 IEEE International Conference on; Seattle, Washington. 2013. pp. 1–8.

- 24. Leekam SR, Nieto C, Libby SJ, Wing L, Gould J. Describing the sensory abnormalities of children and adults with autism. Journal of autism and developmental disorders. 2007;37:894–910. doi: 10.1007/s10803-006-0218-7.

- 25. Steinfeld A, Jenkins OC, Scassellati B. The oz of wizard: simulating the human for interaction research; Human-Robot Interaction (HRI), 2009 4th ACM/IEEE International Conference on; 2009. pp. 101–107.

- 26. Libby ME, Weiss JS, Bancroft S, Ahearn WH. A Comparison of Most-to-Least and Least-to-Most Prompting on the Acquisition of Solitary Play Skills. Behavior Analysis in Practice. 2008;1:37. doi: 10.1007/BF03391719.

- 27. Lord C, Rutter M, DiLavore P, Risi S, Gotham K, Bishop S. Autism Diagnostic Observation Schedule–2nd edition (ADOS-2). Western Psychological Services; Torrance, CA: 2012.

- 28. Mundy P, Hogan A, Doehring P. A preliminary manual for the abridged Early Social Communication Scales (ESCS). Coral Gables, FL: University of Miami; 1996.

- 29. Anzalone SM, Tilmont E, Boucenna S, Xavier J, Jouen A-L, Bodeau N, et al. How children with autism spectrum disorder behave and explore the 4-dimensional (spatial 3D+ time) environment during a joint attention induction task with a robot. Research in Autism Spectrum Disorders. 2014;8:814–826.

- 30. Aldebaran Robotics. Available: http://www.aldebaran-robotics.com/en/

- 31. Zheng Z, Fu Q, Zhao H, Swanson A, Weitlauf A, Warren Z, et al. Universal Access in Human-Computer Interaction. Access to Learning, Health and Well-Being. Springer; 2015. Design of a Computer-Assisted System for Teaching Attentional Skills to Toddlers with ASD; pp. 721–730.

- 32. Xiong X, De la Torre F. Supervised Descent Method for Solving Nonlinear Least Squares Problems in Computer Vision. arXiv preprint arXiv:1405.0601. 2014.

- 33. Feil-Seifer DJ, Matarić MJ. A Simon-Says Robot Providing Autonomous Imitation Feedback Using Graded Cueing. International Meeting for Autism Research (IMFAR); 2012.

- 34. Huskens B, Verschuur R, Gillesen J, Didden R, Barakova E. Promoting question-asking in school-aged children with autism spectrum disorders: Effectiveness of a robot intervention compared to a human-trainer intervention. Developmental neurorehabilitation. 2013;16:345–356. doi: 10.3109/17518423.2012.739212.

- 35. Zheng Z, Young E, Swanson A, Weitlauf A, Warren Z, Sarkar N. Robot-mediated Imitation Skill Training for Children with Autism. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2015;24:682–691. doi: 10.1109/TNSRE.2015.2475724.

- 36. Kim M-G, Oosterling I, Lourens T, Staal W, Buitelaar J, Glennon J, et al. Designing robot-assisted Pivotal Response Training in game activity for children with autism; Systems, Man and Cybernetics (SMC), 2014 IEEE International Conference on; 2014. pp. 1101–1106.

- 37. Harel D. Statecharts: A visual formalism for complex systems. Science of computer programming. 1987;8:231–274.

- 38. Lee EA, Seshia SA. Introduction to embedded systems: A cyber-physical systems approach. Lee & Seshia; 2011.

- 39. Mullen EM. Mullen scales of early learning: AGS edition. Circle Pines, MN: American Guidance Service; 1995.

- 40. Constantino JN, Gruber CP. The social responsiveness scale. Los Angeles: Western Psychological Services; 2002.

- 41. Rutter M, Bailey A, Lord C. The Social Communication Questionnaire. Los Angeles, CA: Western Psychological Services; 2010.

- 42. Wainer J, Dautenhahn K, Robins B, Amirabdollahian F. A pilot study with a novel setup for collaborative play of the humanoid robot KASPAR with children with autism. International Journal of Social Robotics. 2014;6:45–65.