Abstract

Purpose

The purpose of this study was to examine fatigue associated with sustained and effortful speech-processing in children with mild to moderately severe hearing loss.

Method

We used auditory P300 responses, subjective reports, and behavioral indices (response time, lapses of attention) to measure fatigue resulting from sustained speech-processing demands in 34 children with mild to moderately severe hearing loss (M = 10.03 years, SD = 1.93).

Results

Compared to baseline values, children with hearing loss showed increased lapses in attention, longer reaction times, reduced P300 amplitudes, and greater reports of fatigue following the completion of the demanding speech-processing tasks.

Conclusions

Similar to children with normal hearing, children with hearing loss demonstrate reductions in attentional processing of speech in noise following sustained speech-processing tasks—a finding consistent with the development of fatigue.

Fatigue is commonly defined as a subjective experience, a mood state associated with feelings of weariness, tiredness, a lack of vigor or energy, and/or a decreased motivation to continue on with a task that is not due to a mental or physical limitation (Chaudhuri & Behan, 2000; Hockey, 2013; Hornsby, Naylor, & Bess, 2016). Feelings of fatigue are often noted as a significant concern for working adults with hearing loss (Hétu, Riverin, Lalande, Getty, & St-Cyr, 1988; Kramer, Kapteyn, & Houtgast, 2006; Nachtegaal et al., 2009). The additional attention, concentration, and effort needed to overcome auditory deficits associated with hearing loss can result in increased reports of stress and fatigue for listeners with hearing loss, compared to those with normal hearing. When considering the demands of processing speech in a noisy classroom environment, it is reasonable to imagine that children with hearing loss experience some level of fatigue similar to or greater than adults with hearing loss. Children with hearing loss have been found to exhibit greater signs of stress (Bess et al., 2016), expend more listening effort (Hicks & Tharpe, 2002; McGarrigle, Gustafson, Hornsby, & Bess, in press), and subjectively report more fatigue (Hornsby et al., 2017; Hornsby, Werfel, Camarata, & Bess, 2014; Werfel & Hendricks, 2016) than children with no hearing loss. While intuitive, there has been limited work directly examining whether the increased effortful listening experienced by children with hearing loss results in fatigue.

The fatigued state can be associated with decreased activity of the central nervous system, reflected in slowed information processing, decreased attention, and reduced arousal (Lim et al., 2010; Moore, Key, Thelen, & Hornsby, 2017; Murata, Uetake, & Takasawa, 2005). Thus, fatigued children may show reduced focus on the teacher's instructions and suffer from more frequent distractions caused by irrelevant events. Prior studies using cortical auditory-evoked potentials (AEP) have identified the centroparietal P300 as a consistently observed response that indexes the amount of available processing resources (e.g., Donchin, Miller, & Farwell, 1986) and is sensitive to the effects of mental fatigue (Murata et al., 2005). The P300 is most commonly elicited in an oddball paradigm where the listener is asked to detect a rare target stimulus present in a stream of frequent distractors (standard stimuli; Polich, 2007). The P300 response is characterized by a positive peak, larger in response to the targets compared to the standard stimuli, and typically occurs in young adults within 300–600 ms after the stimulus onset. The magnitude of the P300 response (difference between target and standard response) reflects the amount of processing capacity available for attention allocation to ongoing tasks (Polich, 2007). This processing capacity (and thus P300 amplitude) is modulated by arousal level (Kahneman, 1973; Polich, 2007), which is decreased in a fatigued state (Moore et al., 2017). Thus, a potential consequence of fatigue is diminished amplitudes of the P300 response (Key, Gustafson, Rentmeester, Hornsby, & Bess, 2017; Murata et al., 2005).

We previously demonstrated that auditory P300 measures in a pre- versus post-design can be used as a measure of fatigue related to speech processing in school-age children (Key et al., 2017). In that study, 27 children with normal hearing completed a series of sustained and effortful speech-processing tasks over a 3-hr visit. Fatigue was measured using behavioral (visual response times, lapses in attention), subjective (rating scale), and electrophysiological (auditory-evoked P300 responses) methods prior to and directly following the speech-processing tasks. As predicted, children demonstrated greater lapses in attention, longer reaction times, reduced P300 amplitudes, and increased fatigue ratings after the completion of the demanding speech-processing tasks.

The purpose of this study was to examine if children with hearing loss exhibit a similar pattern of fatigue related to speech processing using the same behavioral, subjective, and electrophysiological methods. We hypothesized that children with hearing loss would exhibit greater lapses of attention and prolonged reaction times, report increased fatigue, and generate auditory P300 responses with reduced amplitude following sustained speech-processing tasks.

Method

Participants

Fifty-six participants with hearing loss between 6 and 12 years of age were recruited from Vanderbilt's pediatric audiology clinics and from school systems throughout the Middle Tennessee area. All participants in this study were enrolled in a broader research program designed to examine the effects of listening effort and fatigue on school-age children with hearing loss (see Bess, Gustafson, & Hornsby, 2014, for an overview). Parents reported no diagnosis of learning disability or cognitive impairment and confirmed that all participants were monolingual English speakers. Participants exhibited mild to moderately severe sensorineural hearing loss in at least the better hearing ear, as confirmed by audiologic assessment upon entry into the larger study. Mild hearing loss was defined as pure-tone average (PTA; thresholds at 500, 1000, and 2000 Hz) between 20 and 40 dB HL or thresholds greater than 25 dB HL at two or more frequencies above 2000 Hz. Moderately severe hearing loss was defined as a PTA of 45–70 dB HL. To determine a reliable, norm-referenced measure of language performance by age, language ability was measured using the core language index of the Clinical Evaluation of Language Fundamentals–Fourth Edition (Semel, Wiig, & Secord, 2003). In addition, participants received the Test of Nonverbal Intelligence–Fourth Edition (Brown, Sherbenou, & Johnsen, 2010) to confirm intellectual capacity within or above normal limits.

Children were invited to complete the experimental visit with and without the use of personal hearing aids. To be eligible for unaided testing, children were required to repeat ≥ 20% of words correctly at +8 dB signal-to-noise ratio (SNR) without using hearing aids. This criterion helped ensure that performance remained above floor levels and that children remained engaged during the completion of the speech-processing tasks. Children who were not eligible for unaided testing were eligible to complete aided testing. Aided testing was completed using each child's personal hearing aids programmed with their everyday, “walk-in” settings. We chose not to optimize hearing aid programming so that we could study the effects of hearing loss on listening effort and fatigue in an ecologically valid sample of children.

To examine if audibility had an effect on fatigue related to speech processing, we quantified available audibility (unaided or aided). Real-ear aided response was measured by a trained research assistant for each hearing aid using probe microphone methods prior to the start of the appointment. This provided a measure of aided audibility for average-level speech (65 dB SPL) using the speech intelligibility index (SII) values calculated with the Audioscan Verifit (Audioscan; Dorchester, Ontario, Canada). Better-ear aided SIIs for average-level speech ranged from .42 to .95. Of the children who were tested with hearing aids (see below), 83% had better ear SIIs greater than .65, which has been proposed as the criterion for adequate aided audibility (Stiles, Bentler, & McGregor, 2012). This rate of adequate audibility is slightly above the rate of 74% reported by McCreery, Bentler, and Roush (2013), who assessed hearing aid fittings in a sample of 195 younger children with hearing loss. The Audioscan Verifit was also used to measure unaided audibility for children who were tested without hearing aids by using the unaided SII values calculated using hearing thresholds. Better ear unaided SIIs for average-level speech ranged from .13 to .92. Of the children who were tested without hearing aids, 59% had better ear SIIs greater than .65.

Complete, usable data were obtained from 51 children with hearing loss; 26 children completed both unaided and aided test sessions. Seventeen children completed only an aided test session, and eight children completed only an unaided test session. Thus, potentially useful data were available from 77 unique test sessions. However, because the purpose of this study was to evaluate the effect of sustained, effortful listening on P300 response amplitude, only participants demonstrating a P300 response at the expected parietal location prior to the speech-processing tasks were included in the analyses. Fifteen of the 34 children (44%) completing unaided visits and 19 of 43 children (44%) completing aided visits did not show P300 responses in the expected parietal location at pretest and thus were not included in analyses. Children with and without parietal P300 responses at pretest were not significantly different in age, language skills, gender, better ear PTA, or better ear audibility (ps > .05).

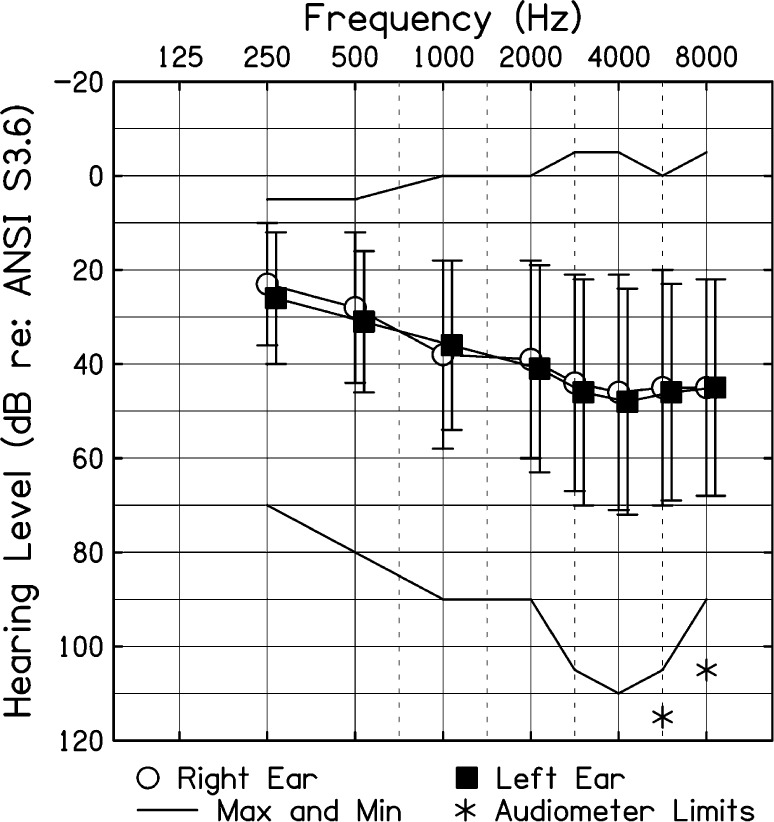

Our original intent was to examine the effect of hearing aid use on fatigue related to speech processing within children. Unfortunately, only nine children who were tested in the unaided and aided conditions showed P300 responses in both conditions at pretest. This sample size was too small for the planned repeated-measures analysis. Therefore, to address the main research question of the effects of speech-processing tasks on fatigue in children with mild to moderately severe hearing loss, data from children showing P300 responses at pretest, regardless of whether the study visit was unaided or aided, were collapsed. Only the unaided data were included for the nine children who showed measurable P300 responses for both conditions. There were no significant differences between children tested in the aided versus unaided condition in age, language skills, gender, or better ear audibility, although children tested in the aided condition had significantly more hearing loss than children tested in the unaided condition (see Table 1). The two groups were also not significantly different in performance on speech-processing tasks or on any measure of fatigue (ps > .05). Thus, the final sample included 34 children with hearing loss (19 tested in the unaided condition, 15 tested in the aided condition). Figure 1 shows average hearing thresholds for children included in the final sample.
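The participant flow described above involves several overlapping counts. The short Python sketch below simply re-derives the reported totals from the numbers given in the text; the variable names are illustrative and not part of the study materials.

```python
# Session counts reported in the text
both, aided_only, unaided_only = 26, 17, 8

children = both + aided_only + unaided_only        # children with usable data
sessions = 2 * both + aided_only + unaided_only    # unique test sessions
unaided_visits = both + unaided_only
aided_visits = both + aided_only

# Visits with a parietal P300 at pretest (15 unaided and 19 aided visits lacked one)
unaided_with_p300 = unaided_visits - 15
aided_with_p300 = aided_visits - 19

# Nine children showed a P300 in both conditions; only their unaided data were kept
p300_in_both = 9
final_aided = aided_with_p300 - p300_in_both
final_sample = unaided_with_p300 + final_aided

print(children, sessions, unaided_visits, aided_visits, final_sample)
```

Under these counts, the final sample is the 19 unaided visits plus the 15 aided visits that remain after the nine overlapping children are assigned to the unaided group.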

Table 1.

Participant characteristics for unaided and aided groups.

| Measure | Unaidedᵃ M | Unaidedᵃ SD | Aidedᵇ M | Aidedᵇ SD |
|---|---|---|---|---|
| Age (years) | 10.45 | 1.94 | 9.46 | 1.83 |
| Language | 96.53 | 24.71 | 92.80 | 17.76 |
| Nonverbal IQ | 103.89 | 15.30 | 105.87 | 7.56 |
| Better ear PTA (dB)* | 30.44 | 13.45 | 42.56 | 14.70 |
| Better ear audibility (SII) | 0.66 | 0.23 | 0.70 | 0.13 |
| Laterality quotient* | 0.53 | 0.73 | 0.95 | 0.11 |

Note. Language reported as the standard score on the core language index of the Clinical Evaluation of Language Fundamentals–Fourth Edition. Nonverbal IQ reported as the standard score on the Test of Nonverbal Intelligence–Fourth Edition. Better ear PTA calculated as average of 500, 1000, and 2000 Hz. Laterality quotient tested by the Edinburgh Handedness Inventory (Oldfield, 1971). PTA = pure-tone average; SII = speech intelligibility index.

ᵃ n = 19; 10 boys, 9 girls.

ᵇ n = 15; 4 boys, 11 girls.

*p < .05.

Figure 1.

Average (1 SD) hearing thresholds for children in the final sample. ANSI = American National Standards Institute.

The study was reviewed and approved by the institutional review board of Vanderbilt University. All children provided their assent, and parents/caregivers provided written informed consent prior to the initiation of any research procedures. Families were compensated for their time.

Procedure

Study visits occurred on nonschool days (e.g., weekends, school holidays) and lasted approximately 3 hr (M = 2.84 hr, SD = 0.40 hr). Assignment of first test condition (unaided vs. aided) was counterbalanced across children who completed both visits. Visit activities included a baseline assessment of fatigue, three demanding speech-processing tasks that required sustained, effortful listening (speech recognition, dual-task paradigm, and speech vigilance), and a repetition of the fatigue assessment. Fatigue was evaluated using objective (AEP and behavioral performance) and subjective (self-report) measures. Details of these measures are provided in a previous article (Key et al., 2017); however, a brief overview and changes implemented for children with hearing loss (if any) are described below.

Measures of Fatigue

Behavioral Measures

Participants completed an abbreviated version of the psychomotor vigilance task (PVT; Dinges & Powell, 1985) that included 50 trials over a 5-min period. The PVT is a visual–motor reaction time task that requires sustained visual attention to achieve optimum performance. This test was completed in a quiet, sound-treated booth and used to detect decrements in vigilant attention due to fatigue. Fatigue effects were quantified as changes in median response times and changes in lapses in attention (instances where reaction times were greater than 500 ms; Lim & Dinges, 2008) between the PVT administrations before and after the speech-processing tasks.
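As a minimal sketch of how the two PVT outcome measures could be computed from raw reaction times, the Python snippet below applies the 500-ms lapse criterion stated above. The function names and the example reaction times are hypothetical; the study's actual scoring software is not described here.

```python
from statistics import median

LAPSE_THRESHOLD_MS = 500  # lapse criterion from the text (Lim & Dinges, 2008)

def pvt_summary(reaction_times_ms):
    """Return (median reaction time, number of lapses) for one PVT administration."""
    lapses = sum(1 for rt in reaction_times_ms if rt > LAPSE_THRESHOLD_MS)
    return median(reaction_times_ms), lapses

def fatigue_change(pre_rts_ms, post_rts_ms):
    """Return post-minus-pre change in median reaction time and in lapse count."""
    pre_median, pre_lapses = pvt_summary(pre_rts_ms)
    post_median, post_lapses = pvt_summary(post_rts_ms)
    return post_median - pre_median, post_lapses - pre_lapses

# Hypothetical reaction times (ms), for illustration only
pre = [300, 400, 350]
post = [450, 600, 380]
print(fatigue_change(pre, post))
```

Positive values on both measures would indicate slower responding and more lapses after the speech-processing tasks.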

Self-Report

A fatigue scale (FS) questionnaire (Bess et al., 2014) was used to assess the children's current level of fatigue immediately prior to each of the AEP sessions. The scale consists of five fatigue-related statements evaluated using a 5-point Likert response set, ranging from 0 = not at all to 4 = a lot. Responses across five FS items were averaged to derive a mean fatigue score, with “lower” total scores indicating greater perceived fatigue.

AEP Paradigm

Stimuli. Stimuli included syllables /gi/ and /gu/ presented at a +10 dB SNR against a 20-talker babble. These two syllables were 610 ms in duration. Within a trial, the syllables were centered within the 1400-ms babble segments. The assignment of syllables to the standard and target condition was counterbalanced across participants.

Electrodes. AEPs were recorded using a 128-channel Geodesic sensor net (v. 2.1; Electrical Geodesics, Inc.). The electrode impedances were kept at or below 40 kΩ. The AEP signals were sampled at 250 Hz with filters set at 0.1–100 Hz. During data collection, all electrodes were referenced to the vertex (Cz). Average reference was used for data analyses.

AEP procedure. AEPs were recorded twice per visit, once before and once after completing the speech-processing tasks described below. Participants were tested individually in a sound-dampened room, where speech sound stimuli were delivered using an oddball paradigm with targets comprising 30% of the trials. Stimuli were delivered using an automated presentation program (E-Prime, PST, Inc.) at an average intensity of 65 dB(A) from a single speaker positioned above the participant's midline. Intervals between stimuli varied randomly between 1,400 and 2,400 ms to prevent habituation to stimulus onset.

Participants were asked to sit quietly, listen to the stimuli, and make a mental note when a target stimulus was presented. Stimulus presentation was suspended during periods of motor activity until the child's behavior quieted. The examiners then redirected the child to the task. For each session, electroencephalography was recorded continuously during the presentation of 120 trials (84 standard and 36 target trials). The task duration was approximately 6–8 min per session.
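The trial structure described above (120 trials, 36 of them targets, with interstimulus intervals between 1,400 and 2,400 ms) can be sketched in Python as follows. This is an illustration only: the study used E-Prime, and the uniform interval distribution, the unconstrained shuffle, and the function name are assumptions.

```python
import random

def make_oddball_sequence(n_trials=120, n_targets=36,
                          isi_range_ms=(1400, 2400), seed=0):
    """Build a shuffled list of (stimulus label, interstimulus interval in ms)."""
    rng = random.Random(seed)
    labels = ["target"] * n_targets + ["standard"] * (n_trials - n_targets)
    rng.shuffle(labels)  # assumption: no constraint on consecutive targets
    # Assumption: intervals are drawn uniformly within the stated range
    return [(label, rng.uniform(*isi_range_ms)) for label in labels]

sequence = make_oddball_sequence()
n_targets = sum(1 for label, _ in sequence if label == "target")
print(len(sequence), n_targets, n_targets / len(sequence))
```

With these defaults, targets make up 30% of trials, matching the proportion reported for the paradigm.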

Speech-Processing Tasks

Three listening tasks requiring the processing of speech in background noise were presented in a fixed order. For further details, see Key et al. (2017).

Speech Recognition

Word recognition was assessed using 32 randomly selected stimuli modified from the Coordinate Response Measure (CRM; Bolia, Nelson, Ericson, & Simpson, 2000) presented in a background noise of cafeteria babble. The speech and noise were presented from a single loudspeaker located at ear level and positioned 1 m directly in front of the child. The speech was presented at 60 dB(A) to all participants. The background noise level varied across participants, resulting in a test condition of 0, +4, or +8 dB SNR. Methods used to determine SNR assignment are described below. The task was approximately 3 min long.

Dual-Task Paradigm

The dual-task paradigm used in this study required children to listen to and repeat monosyllabic words presented in multitalker babble noise (primary task) while monitoring a computer screen for the presence of a brief (125 ms) visual target, which required a button-press response (secondary task). Testing setup and procedures for children with hearing loss were identical to those reported by Key et al. (2017), with the exception of the presentation level of the speech. Because children with hearing loss require more favorable SNRs than children with normal hearing for speech perception (Crandell & Smaldino, 2000; Leibold, Hillock-Dunn, Duncan, Roush, & Buss, 2013), SNRs used for speech-processing tasks with children who have hearing loss were allowed to vary systematically between the original and two more favorable combinations (described below). Children in Key et al. were all tested using SNRs ranging from −4 to +4 dB. In this study, the level of the speech was adjusted by the examiner to create SNRs ranging from −4 to +12 dB. Testing was conducted at three SNRs separated in 4-dB increments (i.e., −4, 0, +4; 0, +4, +8; or +4, +8, +12). Methods used to determine SNR assignment are described below. The effect of SNR on the dual-task paradigm performance is discussed elsewhere (McGarrigle et al., in press); here, we report performance averaged across the three SNR conditions. The total test time of the primary, secondary, and dual-task procedures was approximately 40 min.

Speech Vigilance Task

This task required children to listen amid cafeteria babble attentively for an auditory target (a specific CRM number) while ignoring irrelevant stimuli (all other numbers). Upon hearing this target number, children were instructed to recall the sentence details (call sign and color) directly preceding the target number using a computer interface. This mentally demanding task was meant to require sustained attention for a total of 13–15 min. Testing was completed in the same sound-treated room used for the CRM recognition task and at the same speech and noise level (0, +4, or +8 dB SNR).

Determining SNRs

Upon entry into the study, children participated in a screening test to determine testing conditions that would ensure performance above floor levels for each of the speech-processing tasks. This screening process is described in detail elsewhere (McGarrigle et al., in press) but is briefly explained here. Participants completed an abbreviated version of the primary task (word recognition in noise) at −4, 0, and +8 dB SNR. The lowest (hardest) SNR at which the participant was able to score ≥ 20% correct (cutoff SNR) was used to determine which SNRs would be used for behavioral testing. Table 2 shows which combination of SNRs was used in each study task based on the participant's screening test performance. As an example, if a child scored 24% correct at a +8 dB SNR but only 12% correct at a 0 dB SNR, their SNRs for the dual-task paradigm would be +4, +8, +12 dB SNR, and the test SNR for the simple speech recognition and vigilance tasks would be +8 dB SNR.

Table 2.

Cutoff SNRs and the corresponding SNR combinations used in each study task.

| Cutoff SNR (dB) | Dual-task paradigm | Speech recognition & vigilance tasks (dB SNR) |
|---|---|---|
| −4 | −4, 0, +4 dB SNR | 0 |
| 0 | 0, +4, +8 dB SNR | +4 |
| +8 | +4, +8, +12 dB SNR | +8 |

Note. SNR = signal-to-noise ratio.
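The screening rule and Table 2 can be expressed as a small lookup, sketched below in Python. The function and dictionary names are hypothetical, and the score at −4 dB SNR in the usage example is invented to complete the worked example from the text (24% at +8 dB, 12% at 0 dB).

```python
SCREENING_SNRS_DB = (-4, 0, 8)   # screening SNRs, hardest first
CRITERION_PERCENT = 20.0         # minimum percent correct to pass

# Table 2: cutoff SNR -> (dual-task SNRs, recognition/vigilance SNR)
SNR_PLAN = {
    -4: ((-4, 0, 4), 0),
    0: ((0, 4, 8), 4),
    8: ((4, 8, 12), 8),
}

def assign_snrs(percent_correct_by_snr):
    """Return the test SNRs for a child, or None if no screening SNR was passed."""
    for snr in SCREENING_SNRS_DB:
        if percent_correct_by_snr.get(snr, 0.0) >= CRITERION_PERCENT:
            dual_task, single_task = SNR_PLAN[snr]
            return {"cutoff": snr,
                    "dual_task": dual_task,
                    "recognition_vigilance": single_task}
    return None

# Worked example from the text; the -4 dB score is a hypothetical value
print(assign_snrs({-4: 5.0, 0: 12.0, 8: 24.0}))
```

Iterating from the hardest SNR upward means the first SNR that meets the 20% criterion is the cutoff SNR.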

Data Analysis

Behavioral and Subjective Measures of Fatigue

To test the prediction that children would show increased fatigue following completion of the speech-processing tasks, paired sample t tests were conducted to examine differences in median response times and lapses in attention during the PVT task, as well as in the FS scores obtained before and after the fatigue-inducing speech-processing tasks.
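For readers unfamiliar with the paired-sample t statistic, a minimal Python sketch is given below. It assumes differences are taken as pre minus post, so that an increase from pre to post yields a negative t; the data are hypothetical and the function name is illustrative.

```python
from math import sqrt
from statistics import mean, stdev

def paired_t(pre, post):
    """Return (t statistic, degrees of freedom) for paired observations."""
    diffs = [a - b for a, b in zip(pre, post)]  # assumption: pre minus post
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1

# Hypothetical scores for four participants
t_value, df = paired_t([1, 2, 3, 4], [2, 4, 5, 4])
print(round(t_value, 3), df)
```

The p value would then be obtained from the t distribution with n − 1 degrees of freedom, for example with a statistics package.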

Because of the ordinal nature of our subjective data, a nonparametric correlational approach (Spearman's rho) was used to examine the association between the subjective ratings of fatigue (FS) and performance on objective behavioral assessments of fatigue (PVT) at pre– and post–speech-processing tasks time points. To examine if the relation between objective and subjective measures persisted when considering changes in fatigue caused by the speech-processing tasks, we calculated a difference score using response times and lapses in attention between PVT1 and PVT2 and total scores from FS1 and FS2. A Bonferroni-adjusted alpha level of .025 was used to evaluate the significance of these correlations.

AEP Data

Following the procedures outlined in Key et al. (2017), the electroencephalography data were filtered offline using a 30-Hz low-pass filter and segmented on stimulus onset to include a 496-ms presyllable interval (containing the 100-ms prebabble baseline) and an 800-ms postsyllable period. Only standard trials that preceded a deviant stimulus were selected for the analyses. All trials contaminated by ocular and movement artifacts were excluded from further analysis using an automated screening algorithm in NetStation (v. 4.5 and 5.3; Electrical Geodesics, Inc.) followed by a manual review. Data for electrodes with poor signal quality within a trial were reconstructed using spherical spline interpolation procedures. Trials where more than 20% of the electrodes were deemed bad were discarded. Individual condition averages had to be based on at least 10 trials in order for a data set to be included in the statistical analyses. The number of trials retained per condition was comparable across test sessions (standard stimuli: M pretest = 19.6, SD = 7.39, M posttest = 19.3, SD = 6.70, p = .773; target stimuli: M pretest = 16.6, SD = 5.29, M posttest = 17.3, SD = 6.62, p = .502).

Following artifact screening, individual AEPs were averaged, rereferenced to an average reference, and baseline-corrected by subtracting the average microvolt value across the 100-ms prebabble interval from the poststimulus segment. Only data from selected electrode clusters corresponding to frontal (Fz), central (Cz), and parietal (Pz) midline locations were used in the remaining statistical analyses (Key et al., 2017). In our previous study, children with normal hearing showed P300 responses in the 300–500 ms analysis window, with no differences between standard and target response amplitudes in the 500–800 ms window at pretest (Key et al., 2017). Because adult listeners with hearing loss show delayed P300 responses compared to adults with normal hearing (Oates, Kurtzberg, & Stapells, 2002), particularly for speech stimuli (Wall & Martin, 1991), we anticipated that children with hearing loss may also have delayed latencies of P300 responses and thus show P300 responses in the later analysis window. Therefore, mean response amplitudes relative to the prebabble noise baseline were calculated for the P300 in the standard and target conditions across the 300–500 ms and 500–800 ms windows.

As discussed above, only participants demonstrating a P300 response at the Pz location prior to the speech-processing tasks were included in the analyses. Although there is no widely accepted minimal amplitude to consider an auditory P300 response as “present,” 0.5 μV has been used previously as the minimum detectable amplitude criterion in event-related potential data (Tacikowski & Nowicka, 2010). Therefore, we functionally deemed a P300 response to be present at pretest if the mean amplitude of the target response was ≥ 0.5 μV greater than the mean amplitude of the standard response in either the 300–500 ms or the 500–800 ms window at the Pz electrode cluster. For children demonstrating a P300 response at pretest, the amplitude difference between target and standard response within these windows exceeded the amplitude difference between responses during the prestimulus baseline by more than 6 SDs of the mean.
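The functional presence criterion can be written as a short check, sketched below in Python. The dictionary keys, function name, and amplitude values are hypothetical; only the 0.5-μV threshold and the two analysis windows come from the text.

```python
P300_CRITERION_UV = 0.5
WINDOWS = ("300-500", "500-800")  # analysis windows in ms

def p300_present(target_mean_uv, standard_mean_uv):
    """True if the target-minus-standard mean amplitude at the Pz cluster
    meets the criterion in at least one analysis window."""
    return any(
        target_mean_uv[w] - standard_mean_uv[w] >= P300_CRITERION_UV
        for w in WINDOWS
    )

# Hypothetical mean amplitudes (μV) at the Pz electrode cluster
child_a = p300_present({"300-500": 1.2, "500-800": 0.3},
                       {"300-500": 0.4, "500-800": 0.2})
child_b = p300_present({"300-500": 0.6, "500-800": 0.3},
                       {"300-500": 0.4, "500-800": 0.2})
print(child_a, child_b)
```

In this illustration, the first child meets the criterion in the earlier window only, which is sufficient for inclusion, whereas the second child meets it in neither window.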

The mean amplitude values were averaged across the electrodes within the preselected electrode clusters (Fz, Cz, Pz; Key et al., 2017) and entered into separate repeated-measures analyses of variance (one for each time window) with Time (2: pre–/post–speech-processing tasks), Stimulus (2: standard, target), and Electrode Cluster (3: Fz, Cz, Pz) as within-subject factors using Huynh–Feldt correction. Significant interactions were further explored using planned comparisons and post hoc pairwise t tests with Bonferroni correction. We focused these analyses only on contrasts relevant to the hypotheses, such as differences between standard and target responses within a session and changes in responses across the two test sessions. To determine if significant stimulus- or test time–related effects were related to fatigue, exploratory correlation analyses were performed on relevant AEP variables and scores on the objective behavioral assessments (PVT) and self-report of fatigue (FS).

Results

The purpose of this study was to examine the effects of sustained, speech-processing demands on fatigue in children with mild to moderately severe hearing loss. We report performance on the fatigue-eliciting tasks to demonstrate that children were actively engaged in speech processing. Behavioral and self-reported measures of fatigue following sustained speech processing are also described, with results of the AEP analyses presented as an additional objective measure of fatigue effects. Correlations between brain and behavioral measures are also reported.

Performance on Speech-Processing Tasks

Table 3 shows performance summaries for the speech-processing tasks and the measures of fatigue. Mean performance levels in the speech recognition task reflect that (a) children were able to successfully complete the tasks and (b) the listening conditions were challenging enough to limit ceiling performance. Accuracy data from the speech vigilance task suggest that children were able to maintain vigilant attention sufficient for high performance levels.

Table 3.

Performance on speech-processing tasks and measures of fatigue.

| Speech-processing tasks | M | SD | | | |
|---|---|---|---|---|---|
| CRM recognition performance (% correct) | 73.81 | 26.94 | | | |
| Primary task performance (% correct) | 33.74 | 14.32 | | | |
| Secondary task median response time (ms) | 784.10 | 120.86 | | | |
| Dual-task primary task performance (% correct) | 33.02 | 15.34 | | | |
| Dual-task secondary task median response time (ms) | 940.70 | 154.25 | | | |
| Vigilance performance (% correct) | 88.46 | 14.11 | | | |
| Measures of fatigue | Pre M | Pre SD | Post M | Post SD | Significance |
| Fatigue scale total score | 80.88 | 17.52 | 76.18 | 20.08 | p = .073 |
| PVT median response time (ms) | 349.09 | 69.89 | 377.82 | 81.46 | p = .002 |
| PVT lapses in attention (count)ᶜ | 6.35 | 8.01 | 10.50 | 8.81 | p = .001 |

Note. CRM = coordinate response measure; PVT = psychomotor vigilance task.

Paired-sample t tests revealed no changes in speech recognition performance between the primary and dual tasks, t(28) = 0.551, p = .586. However, a significant change in response time was revealed when comparing performance on the secondary task alone (response time to a visual marker) and secondary task performance during the dual task, t(28) = −10.113, p < .001. Specifically, response times were longer when responding to visual targets in the dual-task condition when compared to those in the secondary task completed in isolation. These results suggest that the dual-task paradigm required more effort than the single task (primary task alone) to maintain recognition performance, leaving fewer processing resources available for allocation toward the secondary visual task.

Behavioral and Subjective Measures of Fatigue

Effects of Demographic Characteristics

Because fatigue measures in children with normal hearing sensitivity have shown significant relationships with age (Key et al., 2017), we included age as a potential covariate in our analyses. Age was significantly correlated with pre- and post-PVT median response time (pre: r(33) = −.537, p = .001; post: r(33) = −.548, p = .001) and lapses in attention (pre: r(33) = −.399, p = .019; post: r(33) = −.573, p < .001). Age was not significantly correlated with FS total scores at either time point (pre: rₛ(33) = −.136, p = .443; post: rₛ(33) = −.141, p = .427). These associations indicate that younger children were slower to respond to the visual stimulus and experienced more lapses in attention than older children; however, they did not report more fatigue than older children. There were also no significant correlations between age and changes in PVT or FS scores from pre– to post–speech-processing tasks administration (ps = .105–.917), indicating that younger children did not report or demonstrate larger increases or decreases in fatigue following sustained, demanding listening when compared to older children.

Fatigue Due to Speech-Processing Demands: Changes in PVT and FS Scores

When comparing data collected prior to starting the speech-processing tasks (PVT1) to those obtained following completion of the speech-processing tasks (PVT2), participants showed a significant increase in median PVT response times, t(33) = −3.284, p = .002. The number of lapses in attention during PVT2 was also significantly greater than in PVT1, t(33) = −3.676, p = .001, suggesting a reduced ability to maintain vigilant attention after completion of the speech-processing tasks. In addition, subjective ratings of fatigue suggested a slight increase (lower FS scores) following speech-processing tasks (see Table 3). However, results of a Wilcoxon signed-rank test showed the difference was not statistically significant, Z(33) = −1.795, p = .073.

Relation Between Behavioral and Subjective Measures of Fatigue

Partial correlation analyses controlling for the significant effects of age were conducted separately for subjective (FS ratings) and behavioral (PVT) measures of fatigue at pre– and post–speech-processing time points. Results showed significant associations at posttest between FS total scores and median PVT response times (pre: r(31) = −.277, p = .119; post: r(31) = −.357, p = .041) and lapses in attention (pre: r(31) = −.331, p = .060; post: r(31) = −.436, p = .011). These moderate, negative associations suggest that children who reported more fatigue also showed longer response times and more lapses in attention after completing the speech-processing tasks. Changes in behavioral fatigue (PVT median response time and lapses in attention) from pre– to post–speech-processing tasks performance were also related to increases in self-reported fatigue, but these correlations did not reach statistical significance (PVT median response time: r(34) = −.310, p = .075; PVT lapse in attention: r(34) = −.309, p = .076).

AEP Results

The amplitude of the parietal P300 response was not significantly correlated with age, language skills, laterality quotient, better ear PTA, or better ear audibility in either of the two time windows included in the statistical analyses of either test session (ps = .095–.982). Although all 34 children had observable P300 responses (≥ 0.5 μV) at the Pz electrode cluster in at least one of the measurement windows at pretest, only 16 children (47%) had parietal P300 responses at posttest. Of these 16 children, seven (44%) also showed a frontal P300 response (≥ 0.5 μV). In comparison, 15 of the 18 children (83%) with no parietal P300 response at posttest showed a frontal P300 response, suggesting a change in auditory attention processes. There were no differences between the children with parietal P300 responses at posttest and those without a parietal P300 response at posttest on measures of age, gender, language skills, intelligence, better ear PTA, or better ear audibility (ps = .277–.852).
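The presence criterion described above can be sketched in a few lines of Python. The sketch assumes that a response counts as present when the target-minus-standard amplitude difference reaches 0.5 μV in at least one analysis window; the function names and the amplitude values are hypothetical, and only the counts in the final line are taken from the text.

```python
CRITERION_UV = 0.5  # minimum target-minus-standard difference, in microvolts


def has_p300(target_uv, standard_uv, criterion_uv=CRITERION_UV):
    """True if the target exceeds the standard by the criterion in any window."""
    return any(t - s >= criterion_uv for t, s in zip(target_uv, standard_uv))


def percent(count, total):
    """Whole-number percentage of count out of total."""
    return round(100 * count / total)


# Hypothetical mean amplitudes (uV) in the 300-500 and 500-800 ms windows.
print(has_p300([2.0, 1.0], [1.0, 0.75]))   # first window differs by 1.0 uV
print(has_p300([1.0, 1.0], [0.75, 1.0]))   # largest difference is 0.25 uV

# Counts reported in the text: 16 of 34, 7 of 16, and 15 of 18 children.
print(percent(16, 34), percent(7, 16), percent(15, 18))
```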

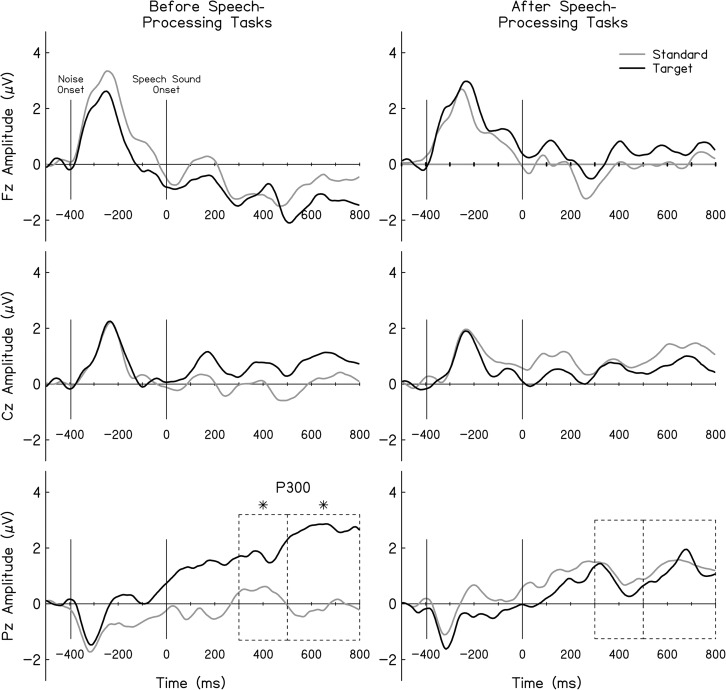

300–500 ms Window

Although there was no main effect of time, F(1, 33) = 3.96, p = .054, ηp² = .108, there was a main effect of electrode cluster, F(2, 66) = 13.7, p < .001, ηp² = .294, and stimulus, F(1, 33) = 5.167, p = .030, ηp² = .135. No significant interactions were found. Pairwise comparisons (critical p = .017) showed that responses had the expected centroparietal scalp distribution with the largest amplitudes observed at Pz and Cz compared to Fz electrode clusters (Pz vs. Fz, p < .001; Cz vs. Fz, p < .001). No significant amplitude differences were observed between Pz and Cz (p = .053). Planned comparisons focused on the stimulus-specific responses indicated that, at pretest, targets elicited larger P300 responses than standards at the Pz electrode cluster, t(33) = −2.600, p = .014, Cohen's d = .422 (see Figure 2). During the posttest session, there were no significant stimulus differences at Pz, t(33) = 0.513, p = .612. No significant stimulus differences were found during pre- or posttest at the Cz or Fz electrode clusters (ps = .071–.891).
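The effect sizes and the critical p value reported above can be related to the test statistics with a short Python sketch. It uses the standard identity that recovers partial eta squared from an F ratio and its degrees of freedom, and it assumes that the critical p of .017 reflects a Bonferroni adjustment of .05 across the three pairwise cluster comparisons; that adjustment is an inference from the reported value. Function names are hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    scaled_f = f_value * df_effect
    return scaled_f / (scaled_f + df_error)


def bonferroni_alpha(alpha, n_comparisons):
    """Per-comparison alpha under a Bonferroni adjustment."""
    return alpha / n_comparisons


# Main effect of stimulus in the 300-500 ms window: F(1, 33) = 5.167.
print(round(partial_eta_squared(5.167, 1, 33), 3))

# Three pairwise comparisons among the Fz, Cz, and Pz clusters (assumed).
print(round(bonferroni_alpha(0.05, 3), 3))
```

Because the published F values are rounded, effect sizes recomputed this way can differ from the reported values in the third decimal place.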

Figure 2.

Averaged auditory-evoked potential (AEP) responses at Fz, Cz, and Pz electrode clusters recorded prior to and following completion of the speech-processing tasks. Dark and light tracings represent AEP responses to the target and standard stimuli, respectively. Dashed boxes highlight time windows used in the analyses. Asterisks indicate time windows where significant changes were observed between test stimuli.

500–800 ms Window

Results show significant main effects of time, F(1, 33) = 9.524, p = .004, ηp² = .224, electrode cluster, F(2, 66) = 8.477, p = .003, ηp² = .204, and stimulus, F(1, 33) = 10.84, p = .002, ηp² = .247. Significant interactions were found between Time × Stimulus, F(1, 33) = 8.025, p = .008, ηp² = .196, Electrode Cluster × Stimulus, F(2, 66) = 7.327, p = .002, ηp² = .182, and Time × Stimulus × Electrode Cluster, F(2, 66) = 12.610, p < .001, ηp² = .276. There was no significant Time × Electrode Cluster interaction. Pairwise comparisons (critical p = .017) showed that responses had the expected centroparietal scalp distribution with the largest amplitudes observed at Pz and Cz compared to Fz electrode clusters (Pz vs. Fz, p = .004; Cz vs. Fz, p < .001). No significant amplitude differences were observed between Pz and Cz clusters (p = .127).

Planned comparisons focused on the stimulus-specific responses indicated that, at pretest, targets elicited larger P300 responses than standards at the Pz electrode cluster, t(33) = −8.068, p < .001, Cohen's d = .819 (see Figure 2). During the post–speech-processing session, there were no significant stimulus differences at the Pz cluster, t(33) = 0.317, p = .753. Central electrodes also showed larger responses to target stimuli than to standard stimuli at pretest, t(33) = −2.397, p = .022, Cohen's d = .298, and no difference in stimulus-specific responses at posttest, t(33) = 1.527, p = .136. No significant stimulus differences were found during pre- or posttest at the Fz electrode cluster (ps = .106 and .340).

Brain–Behavior Correlations

Brain–behavior correlations (critical p = .013) were examined for 300–500 ms and 500–800 ms windows. Parietal P300 responses did not correlate with the performance on PVT or the FS total score at pre- or posttest. Changes in parietal P300 amplitude following the speech-processing tasks sessions were also not significantly correlated with age, language, better ear PTA, better ear audibility, PVT performance at either time point, or either of the total scores on the FS. Similarly, there were no significant relationships between changes in P300 amplitude at the Pz electrode cluster and changes in behavioral performance (PVT measures) or changes in FS scores.

Recall that 44% of children (n = 15) showed P300 responses at the frontal rather than the parietal electrode cluster at posttest. To determine if this shift in attentional processing was related to speech-processing fatigue, we compared performance on fatigue measures between children who maintained parietal P300 responses at posttest and those who, instead, showed frontal P300 responses at posttest. No differences were found at either time point on PVT performance (response times or lapses in attention) or FS scores measured between children with parietal P300 and frontal P300 responses at posttest (ps = .278–.896). Changes in fatigue measures from pre- to posttest were also no different between parietal P300 and frontal P300 groups (ps = .308–.676).

Discussion

The purpose of this study was to evaluate fatigue related to speech processing in children with mild to moderately severe hearing loss using subjective (FS), behavioral (PVT), and electrophysiological (AEP) methods. Results are consistent with our hypotheses. Namely, children with hearing loss exhibited greater lapses of attention and prolonged reaction times, reported increased fatigue, and showed reduced amplitudes in auditory P300 responses after sustained speech-processing tasks. Because this study used similar methodology to our previous work in children with normal hearing (Key et al., 2017), below we discuss similarities and differences in the pattern of responses between groups (hearing loss and normal hearing).

Behavioral and Subjective Measures of Fatigue

Children with hearing loss showed behavioral signs of fatigue after the speech-processing tasks. They also reported increased subjective fatigue following these tasks; however, this change in subjective fatigue did not reach statistical significance. Although children with normal hearing reported significant increases in subjective fatigue following sustained speech-processing tasks, independent samples t tests indicated that the two groups showed no differences in reported fatigue at either time point (ps > .05). The lack of significant differences between these two groups of children could be due to increased variability in responses from children with normal hearing as compared to those from children with hearing loss (discussed in further detail below). Furthermore, the limited range of fatigue reports from children with hearing loss might be attributed to their ever-present experience of fatigue related to listening and/or a different experience of being nonfatigued compared to children without hearing loss.

It is also possible that children with hearing loss employed different listening strategies compared to children with normal hearing. When faced with increasingly fatiguing speech-processing tasks, it is feasible that children with hearing loss were unable to maintain optimal performance and, thus, stopped trying so hard. Alternatively, children with hearing loss could have chosen to “pace themselves” throughout the anticipated demanding speech-processing tasks. This deliberate aim to reduce expenditure of resources could have resulted in less perceived (subjective) fatigue. Exploring these potential differences in strategy is outside the scope of this study; however, they could be examined in future research by recording subjective fatigue at multiple time points throughout a lengthy period of speech-processing tasks.

In this study, increased subjective reports of fatigue (changes in FS scores) did not correlate significantly with decrements in behavioral measures of fatigue (changes in PVT response time and lapses in attention). This finding is in contrast to our previous study in children with normal hearing, where larger increases in reported fatigue were significantly related to more lapses in attention (Key et al., 2017). It is noteworthy that, although not significant, the direction and magnitude of correlations between subjective and behavioral measures of fatigue found in children with hearing loss are consistent with those reported in children with normal hearing (Key et al., 2017). To test this observation, a repeated-measures analysis of variance was conducted to examine whether changes in behavioral and subjective measures of fatigue were different between the 34 children with hearing loss reported here and the 27 children with normal hearing reported by Key and colleagues (2017). Hearing status did not have a significant effect on changes in PVT response time, lapses in attention, or fatigue ratings (ps = .444–.742), suggesting that hearing status did not affect how sustained speech processing influenced behavioral and subjective ratings of fatigue.

Finally, the significant correlation found for children with normal hearing (Key et al., 2017) but not for children with hearing loss may be due to the greater variability shown by the children with normal hearing on behavioral and subjective measures of fatigue. Specifically, children with normal hearing showed broader interquartile ranges (IQRs) for FS ratings at pretest (65–95) and posttest (50–90) when compared to IQRs of FS ratings from children with hearing loss at pretest (75–95) and posttest (60–95). Furthermore, despite similar IQRs for lapses in attention at pretest between children with normal hearing (2–10) and children with hearing loss (1–9.25), children with normal hearing showed larger IQRs at posttest (3–18) than children with hearing loss (4–15.25). Nevertheless, our findings are consistent with research in adults with hearing loss showing no significant correlation between reports of subjective fatigue and fatigue-related changes in behavioral performance (Hornsby, 2013).

Electrophysiological Measures of Fatigue

In this study, fatigue related to speech processing in children with hearing loss was characterized by changes in attentional processing capacity, as indexed by the smaller amplitude of the parietal P300 responses after a period of sustained speech processing. These findings suggest that, like in children with normal hearing, sustained speech-processing demands can have fatigue-related behavioral and physiological consequences in children with mild to moderately severe hearing loss.

Consistent with findings for children with normal hearing reported by Key et al. (2017), changes in subjective (changes in FS scores) and behavioral measures of fatigue (changes in PVT response time and lapses in attention) were not significantly correlated with changes in electrophysiological measures in children with hearing loss. The lack of correlation between electrophysiological measures and subjective ratings is inconsistent with previous research in adults using the auditory P300 to index fatigue (Murata et al., 2005). Murata and colleagues used a mental arithmetic task, rather than sustained speech processing, to induce fatigue in their participants and found that the reductions in P300 amplitude were related to increased reports of mental and physical fatigue. Despite differences in participant age and methodology, reductions in P300 amplitude have now been found in studies with adults and children following fatiguing tasks (Key et al., 2017; Murata et al., 2005). Inconsistencies between our correlation findings and those of Murata et al. may be attributed to a variety of factors. Most notably, developmental differences might exist in the relationship between electrophysiological measures and subjective indices of fatigue. Although fatigue-related change in cognitive processing occurs in both adults and children, it is possible that children are not as reliable as adults in reporting their subjective fatigue.

Though Key et al. (2017) found no significant correlations between changes in electrophysiological measures of fatigue and measures of behavioral or subjective fatigue, children with normal hearing who demonstrated more lapses in attention and longer response times after sustained speech processing also showed the lowest amplitude of P300 at posttest. In contrast, correlations between electrophysiological and behavioral measures of fatigue at posttest were not significant in children with hearing loss. As discussed above with respect to relationships between subjective and behavioral measures, this also could be attributed to differences in variability of lapses in attention at posttest between children with normal hearing and children with hearing loss. In addition, the IQR of P300 amplitude collapsed across 300–500 and 500–800 ms windows at posttest for children with normal hearing (−2.25 to 2.24 μV) was broader than the IQR observed in children with hearing loss (−0.585 to 2.69 μV). This additional variability in children with normal hearing was localized to the posttest, as IQR differences between groups were minimal at pretest (normal hearing from −0.175 to 4.09 μV; hearing loss from 0.013 to 4.25 μV).

The overall reduction of P300 amplitudes at posttest in children with and without hearing loss may suggest that insufficient attentional resources were available to discriminate speech in noise following a period of sustained speech processing. However, although the reduction of parietal P300 responses from pre- to posttest is consistent with a previous study of adults demonstrating fatigue (Murata et al., 2005) and with behavioral and subjective measures of fatigue used in this study, it is also possible that reduction of the P300 amplitude post–speech processing reported here could be attributable to reductions in compliance with the speech discrimination task at posttest. We were unable to confirm if children were engaged in the task because we elected not to require an overt behavioral response (i.e., mental tracking of target stimulus rather than a button press) to keep the test simple enough for our youngest participants. Although adult studies have found larger P300 amplitudes with mental count compared to button-press procedures (Salisbury, Rutherford, Shenton, & McCarley, 2001), it is unknown whether this pattern of results would persist in children. Notably, a hallmark of fatigue is a reduced ability and/or motivation to maintain focused attention on a task (Hornsby et al., 2016). One consequence of such reduced ability/motivation is that a fatigued individual is more likely to shift their goal from completion of a target task (attending to the oddball stimulus in this case) to some other task (Hockey, 2013). Functionally, this would have been observed as reduced compliance with task instructions and could have resulted in a reduced or absent P300 response. Whether the reduction of P300 responses at posttest should be attributed to reduced attentional resources accompanying fatigue or to the effect of fatigue on behavioral compliance with task instructions, either should be expected to result in detrimental consequences in an educational setting. Future research is needed to understand the mechanisms underlying this fatigue related to speech processing in children.

Electrophysiological Measures of Speech Embedded in Background Noise Recorded From Children With and Without Hearing Loss

To our knowledge, this study is the first to report P300 responses in children with hearing loss using speech stimuli embedded in background noise. Notably, based on the criterion of ≥ 0.50 μV difference in amplitude between the target and standard stimuli, parietal P300 responses were absent at pretest in 30% of children with hearing loss, some of whom showed absent responses in both aided and unaided testing. These absent responses in children with hearing loss do not appear to be due to age, language skills, hearing aid use, degree of hearing loss, or audibility. In fact, 33% of children with normal hearing reported in Key et al. (2017) also did not show parietal P300 responses at pretest. Therefore, the presence of P300 responses at pretest does not appear to be related to hearing status in children. This is consistent with research in adults that has found no effect of hearing loss on P300 amplitude (Bertoli, Smurzynski, & Probst, 2005; Micco et al., 1995).

Research in adults with hearing loss shows delayed P300 responses compared to adults with normal hearing (Oates et al., 2002), particularly for speech stimuli (Wall & Martin, 1991). Although children with normal hearing showed P300 responses only in the 300–500 ms analysis window (Key et al., 2017), children with hearing loss show P300 responses in the 300–500 and 500–800 ms analysis windows. Notably, the most robust response appears to be concentrated in the later, 500–800 ms time window (see Figure 2), suggesting that some children with hearing loss may have had P300 responses with peak latencies of > 500 ms. Although this study did not directly assess the effect of hearing loss on the latency of the P300 response, these findings are consistent with previous adult research showing delays in speech processing for listeners with hearing loss as compared to those with normal hearing (Oates et al., 2002).

Methodological Limitations

It is possible that the absence of parietal P300 responses at pretest (defined as target amplitude ≥ 0.5 μV above standard amplitude) for one third of the study sample was due to study procedures used to collect AEP data. First, work in adult listeners has shown that the presence of babble noise causes significant reductions in P300 amplitude when compared to AEPs elicited in quiet (Bennett, Billings, Molis, & Leek, 2012; Koerner, Zhang, Nelson, Wang, & Zou, 2017). The use of speech embedded in multitalker babble noise could have negatively influenced the presence of the P300 response. However, it is unknown if background noise has a similar detrimental effect on the P300 response in children compared to adults. Second, the absence of P300 responses in our sample might have been due to the relatively high target stimulus probability (30%), as the amplitude of the P300 varies inversely with target probability (Polich, Ladish, & Burns, 1990) and the length of the target-to-target interval (Polich, 2007). Our target probability was motivated by the need to obtain enough target trials for a reliable event-related potential signal while minimizing the overall test session duration. This also allowed us to limit the confounding effects of additional fatigue, decreasing attention, and increasing motor artifacts. The selected target probability of 30% is not unusual in auditory oddball studies, as the original P300 study by Sutton, Braren, Zubin, and John (1965) presented infrequent stimuli on 33% of the trials and the 70/30 design has been used in other studies of auditory processing in children (e.g., Barnes, Gozal, & Molfese, 2012; Henkin, Kileny, Hildesheimer, & Kishon-Rabin, 2008; Key et al., 2017; Senderecka, Grabowska, Gerc, Szewczyk, & Chmylak, 2012).

The smaller amplitude of the P300 responses seen in this study could have been exacerbated by the relatively small number of artifact-free trials available for analysis (17 trials on average). Although a greater number of artifact-free trials would have yielded data less influenced by measurement variability, other studies have used similarly small trial counts (Kröner et al., 1999; Määttä et al., 2005) and reported that 20 trials is “more than enough to stabilize P300 and the other components' amplitude” (Ochoa & Polich, 2000, p. 94). Finally, as discussed above, the mental tracking task without an overt behavioral response could have affected our ability to elicit P300 responses in all children due to varied compliance with the instructions. In the subgroup for whom a parietal P300 was absent at pretest (hearing loss, n = 34; normal hearing, n = 9), a frontal positivity to target stimuli was observed in 22 (65%) children with hearing loss and five (56%) children with normal hearing. These observations suggest that the target stimuli evoked an involuntary orienting response (i.e., P3a; Squires, Squires, & Hillyard, 1975). The remaining pretest sessions showed no increased positivity for targets versus standard sounds at any electrode cluster. This lack of positivity for 12 children with hearing loss and four normal hearing participants suggests that either their auditory systems could not discriminate reliably between two speech sounds in background noise or that methodological limitations discussed above prohibited us from detecting reliable P300 responses. Future work, including more target trials at a lower probability rate and requiring an overt behavioral response, is needed to determine if P300 responses can be more consistently elicited in children when using speech embedded in background babble noise.

Conclusions

As is the case for children with normal hearing, sustained speech-processing demands can be fatiguing for children with mild to moderately severe hearing loss. This fatigue is evidenced most readily as a decrement in vigilant attention (PVT responses) and in electrophysiological changes in brain activity (P300 responses). Future research is needed to evaluate whether a different subjective tool would be more sensitive to the effects of fatigue related to speech processing in children. Until then, behavioral and electrophysiological measures appear to show the most promise for assessing interventions aimed at reducing the negative effects of task-induced fatigue related to speech processing in children.

Acknowledgments

This work was supported in part by IES Grant R324A110266 to Vanderbilt University (Fred Bess, principal investigator) and NICHD Grants P30 HD15052 and U54HD083211 to Vanderbilt Kennedy Center. This research was also supported by the Dan and Margaret Maddox Charitable Fund. The content expressed is that of the authors and does not necessarily represent official views of the Institute of Education Sciences, the U.S. Department of Education, or the National Institutes of Health. Data management was supported in part by the Vanderbilt Institute for Clinical and Translational Research (UL1 TR000445 from NCATS/NIH to Vanderbilt University Medical Center). We would like to thank Dorita Jones for assistance with AEP data processing as well as students and study staff who assisted in participant recruitment and data collection.

References

- Barnes M. E., Gozal D., & Molfese D. L. (2012). Attention in children with obstructive sleep apnoea: An event-related potentials study. Sleep Medicine, 13(4), 368–377.

- Bennett K. O., Billings C. J., Molis M. R., & Leek M. R. (2012). Neural encoding and perception of speech signals in informational masking. Ear and Hearing, 32(2), 231.

- Bertoli S., Smurzynski J., & Probst R. (2005). Effects of age, age-related hearing loss, and contralateral cafeteria noise on the discrimination of small frequency changes: Psychoacoustic and electrophysiological measures. Journal of the Association for Research in Otolaryngology, 6(3), 207–222.

- Bess F. H., Gustafson S. J., Corbett B. A., Lambert E. W., Camarata S. M., & Hornsby B. W. Y. (2016). Salivary cortisol profiles of children with hearing loss. Ear and Hearing, 37(3), 334–344.

- Bess F. H., Gustafson S. J., & Hornsby B. W. Y. (2014). How hard can it be to listen? Fatigue in school-age children with hearing loss. Journal of Educational Audiology, 20, 1–14.

- Bolia R. S., Nelson W. T., Ericson M. A., & Simpson B. D. (2000). A speech corpus for multitalker communications research. The Journal of the Acoustical Society of America, 107(2), 1065–1066.

- Brown L., Sherbenou R. J., & Johnsen S. K. (2010). Test of Nonverbal Intelligence–Fourth Edition (TONI-4). Austin, TX: Pro-Ed.

- Chaudhuri A., & Behan P. O. (2000). Fatigue and basal ganglia. Journal of the Neurological Sciences, 179(1), 34–42.

- Crandell C. C., & Smaldino J. J. (2000). Classroom acoustics for children with normal hearing and with hearing impairment. Language, Speech, and Hearing Services in Schools, 31(4), 362–370.

- Dinges D. F., & Powell J. W. (1985). Microcomputer analyses of performance on a portable, simple visual RT task during sustained operations. Behavior Research Methods, Instruments, & Computers, 17(6), 652–655.

- Donchin E., Miller G. A., & Farwell L. A. (1986). The endogenous components of the event-related potential—A diagnostic tool? Progress in Brain Research, 70, 87–102.

- Henkin Y., Kileny P. R., Hildesheimer M., & Kishon-Rabin L. (2008). Phonetic processing in children with cochlear implants: An auditory event-related potentials study. Ear and Hearing, 29(2), 239–249.

- Hétu R., Riverin L., Lalande N., Getty L., & St-Cyr C. (1988). Qualitative analysis of the handicap associated with occupational hearing loss. British Journal of Audiology, 22(4), 251–264.

- Hicks C. B., & Tharpe A. M. (2002). Listening effort and fatigue in school-age children with and without hearing loss. Journal of Speech, Language, and Hearing Research, 45(3), 573–584.

- Hockey R. (2013). A motivational control theory of cognitive fatigue. New York, NY: Cambridge University Press.

- Hornsby B. W. Y. (2013). The effects of hearing aid use on listening effort and mental fatigue associated with sustained speech processing demands. Ear and Hearing, 34(5), 523–534.

- Hornsby B. W. Y., Gustafson S. J., Lancaster H., Cho S. J., Camarata S., & Bess F. H. (2017). Subjective fatigue in children with hearing loss assessed using self- and parent-proxy report. American Journal of Audiology, 26(3S), 393–407.

- Hornsby B. W. Y., Naylor G., & Bess F. H. (2016). A taxonomy of fatigue concepts and their relation to hearing loss. Ear and Hearing, 37, 136S–144S.

- Hornsby B. W. Y., Werfel K., Camarata S., & Bess F. H. (2014). Subjective fatigue in children with hearing loss: Some preliminary findings. American Journal of Audiology, 23(1), 129–134.

- Kahneman D. (1973). Attention and effort (Vol. 1063). Englewood Cliffs, NJ: Prentice-Hall.

- Key A. P., Gustafson S. J., Rentmeester L., Hornsby B. W. Y., & Bess F. H. (2017). Speech-processing fatigue in children: Auditory event-related potential and behavioral measures. Journal of Speech, Language, and Hearing Research, 60(7), 2090–2104.

- Koerner T. K., Zhang Y., Nelson P. B., Wang B., & Zou H. (2017). Neural indices of phonemic discrimination and sentence-level speech intelligibility in quiet and noise: A P3 study. Hearing Research, 350, 58–67.

- Kramer S. E., Kapteyn T. S., & Houtgast T. (2006). Occupational performance: Comparing normally-hearing and hearing-impaired employees using the Amsterdam Checklist for Hearing and Work. International Journal of Audiology, 45(9), 503–512.

- Kröner S., Schall U., Ward P. B., Sticht G., Banger M., Haffner H.-T., & Catts S. V. (1999). Effects of prepulses and d-amphetamine on performance and event-related potential measures on an auditory discrimination task. Psychopharmacology, 145(2), 123–132.

- Leibold L. J., Hillock-Dunn A., Duncan N., Roush P. A., & Buss E. (2013). Influence of hearing loss on children's identification of spondee words in a speech-shaped noise or a two-talker masker. Ear and Hearing, 34(5), 575–584.

- Lim J., & Dinges D. F. (2008). Sleep deprivation and vigilant attention. Annals of the New York Academy of Sciences, 1129(1), 305–322.

- Lim J., Wu W., Wang J., Detre J. A., Dinges D. F., & Rao H. (2010). Imaging brain fatigue from sustained mental workload: An ASL perfusion study of the time-on-task effect. NeuroImage, 49(4), 3426–3435.

- Määttä S., Herrgård E., Saavalainen P., Pääkkönen A., Könönen M., Luoma L., … Partanen J. (2005). P3 amplitude and time-on-task effects in distractible adolescents. Clinical Neurophysiology, 116(9), 2175–2183.

- McCreery R. W., Bentler R. A., & Roush P. A. (2013). Characteristics of hearing aid fittings in infants and young children. Ear and Hearing, 34(6), 701–710.

- McGarrigle R., Gustafson S. J., Hornsby B. W. Y., & Bess F. H. (in press). Behavioral measures of listening effort in school-age children: Examining the effects of signal-to-noise ratio, hearing loss, and amplification. Ear and Hearing.

- Micco A. G., Kraus N., Koch D. B., McGee T. J., Carrell T. D., Sharma A., … Wiet R. J. (1995). Speech-evoked cognitive P300 potentials in cochlear implant recipients. Otology & Neurotology, 16(4), 514–520.

- Moore T. M., Key A. P., Thelen A., & Hornsby B. W. Y. (2017). Neural mechanisms of mental fatigue elicited by sustained auditory processing. Neuropsychologia, 106(Supplement C), 371–382.

- Murata A., Uetake A., & Takasawa Y. (2005). Evaluation of mental fatigue using feature parameter extracted from event-related potential. International Journal of Industrial Ergonomics, 35(8), 761–770.

- Nachtegaal J., Kuik D. J., Anema J. R., Goverts S. T., Festen J. M., & Kramer S. E. (2009). Hearing status, need for recovery after work, and psychosocial work characteristics: Results from an Internet-based national survey on hearing. International Journal of Audiology, 48(10), 684–691. [DOI] [PubMed] [Google Scholar]

- Oates P. A., Kurtzberg D., & Stapells D. R. (2002). Effects of sensorineural hearing loss on cortical event-related potential and behavioral measures of speech-sound processing. Ear and Hearing, 23(5), 399–415. [DOI] [PubMed] [Google Scholar]

- Ochoa C. J., & Polich J. (2000). P300 and blink instructions. Clinical Neurophysiology, 111(1), 93–98. [DOI] [PubMed] [Google Scholar]

- Oldfield R. C. (1971). The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia, 9(1), 97–113.

- Polich J. (2007). Updating P300: An integrative theory of P3a and P3b. Clinical Neurophysiology, 118(10), 2128–2148.

- Polich J., Ladish C., & Burns T. (1990). Normal variation of P300 in children: Age, memory span, and head size. International Journal of Psychophysiology, 9(3), 237–248.

- Salisbury D. F., Rutherford B., Shenton M. E., & McCarley R. W. (2001). Button-pressing affects P300 amplitude and scalp topography. Clinical Neurophysiology, 112(9), 1676–1684.

- Semel E., Wiig E., & Secord W. (2003). Clinical Evaluation of Language Fundamentals–Fourth Edition. San Antonio, TX: Psychological Corporation.

- Senderecka M., Grabowska A., Gerc K., Szewczyk J., & Chmylak R. (2012). Event-related potentials in children with attention deficit hyperactivity disorder: An investigation using an auditory oddball task. International Journal of Psychophysiology, 85(1), 106–115.

- Squires N. K., Squires K. C., & Hillyard S. A. (1975). Two varieties of long-latency positive waves evoked by unpredictable auditory stimuli in man. Electroencephalography and Clinical Neurophysiology, 38(4), 387–401.

- Stiles D. J., Bentler R. A., & McGregor K. K. (2012). The speech intelligibility index and the pure-tone average as predictors of lexical ability in children fit with hearing aids. Journal of Speech, Language, and Hearing Research, 55(3), 764–778.

- Sutton S., Braren M., Zubin J., & John E. R. (1965). Evoked-potential correlates of stimulus uncertainty. Science, 150(3700), 1187–1188.

- Tacikowski P., & Nowicka A. (2010). Allocation of attention to self-name and self-face: An ERP study. Biological Psychology, 84(2), 318–324.

- Wall L. G., & Martin J. W. (1991). The effect of hearing loss on the latency of the P300 evoked potential: A pilot study. National Student Speech-Language-Hearing Association Journal, 18, 121–125.

- Werfel K. L., & Hendricks A. E. (2016). The relation between child versus parent report of chronic fatigue and language/literacy skills in school-age children with cochlear implants. Ear and Hearing, 37(2), 216–224.