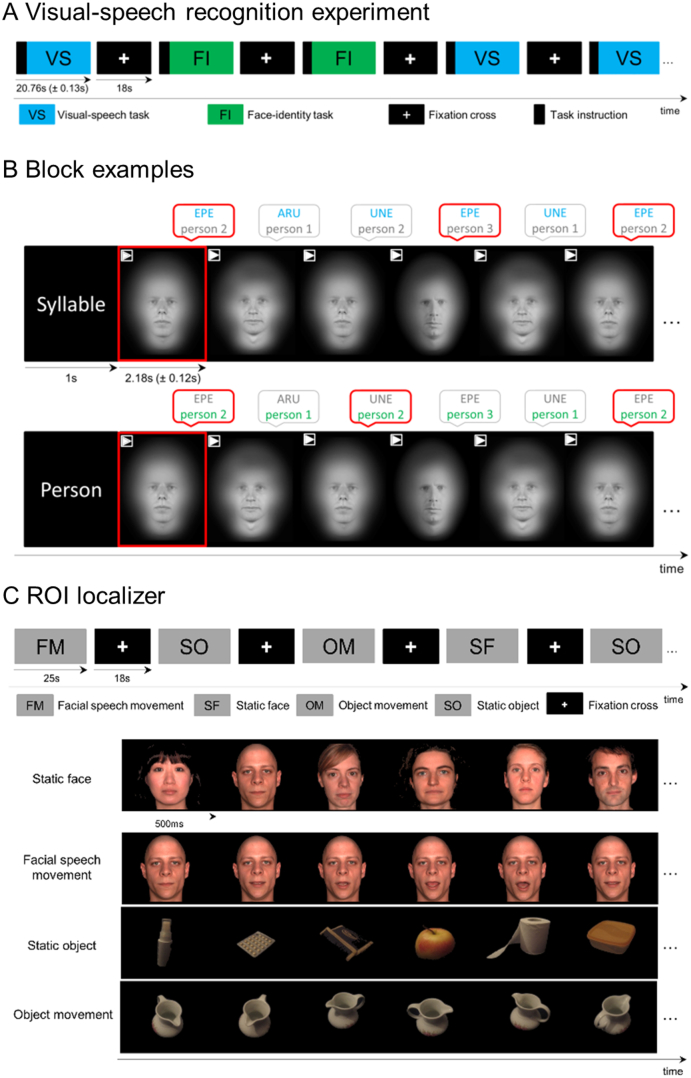

Fig. 1.

Experimental designs used during the fMRI session. (A) Visual-speech recognition experiment: Participants viewed blocks of videos without an audio stream showing three speakers articulating syllables. The same stimuli were used for two tasks: a visual-speech task and a face-identity task. (B) At the beginning of each block, a written word instructed participants to perform one of the tasks (“syllable” for the visual-speech task or “person” for the face-identity task). In the visual-speech task, participants matched the articulated syllable to a target syllable (here ‘EPE’). In the face-identity task, participants matched the identity of the speaker to a target person (here person 2). The respective target was presented in the first video of the block and marked by a red frame around the video. (C) ROI localizer: Blocks of images of faces and objects were presented, and participants were asked to view them attentively.