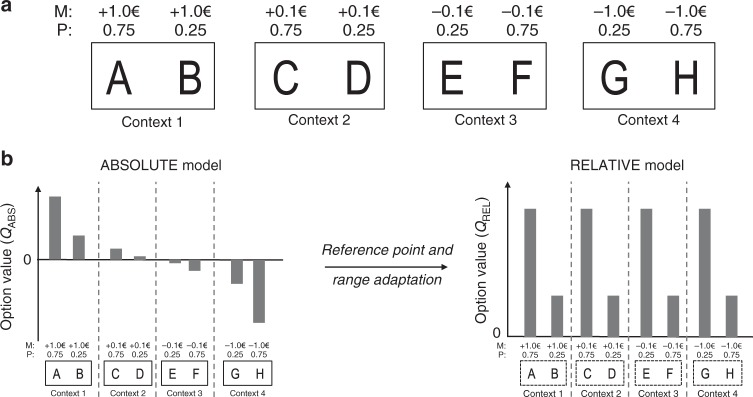

Fig. 1.

Experimental design and normalization process. a Learning task with four different contexts: reward/big, reward/small, loss/small, and loss/big. Each symbol is associated with a probability (P) of gaining or losing an amount of money, the magnitude (M). M varies as a function of the choice context (reward seeking: +1.0€ or +0.1€; loss avoidance: −1.0€ or −0.1€; small magnitude: +0.1€ or −0.1€; big magnitude: +1.0€ or −1.0€). b The graph schematizes the transition from absolute value encoding (where values are negative in the loss avoidance contexts and smaller in the small magnitude contexts) to relative value encoding (complete adaptation as in the RELATIVE model), where favorable and unfavorable options have similar values in all contexts, thanks to both reference-point and range adaptation.
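The transition in panel b can be illustrated with a minimal numerical sketch. It is not the RELATIVE model itself. It assumes, purely for illustration, that the favorable option yields the gain with probability 0.75 (or the loss with probability 0.25), that the unfavorable option has the reverse probabilities, and that the alternative outcome is 0€; none of these values are stated in the caption. The reference point is taken as the mean option value within a context and the range as |M|; the names `Context`, `absolute_values`, and `relative_values` are hypothetical.

```python
from dataclasses import dataclass

# Illustrative assumptions, not taken from the caption:
P_HIGH = 0.75  # assumed probability of the more likely outcome
P_LOW = 0.25   # assumed probability of the less likely outcome


@dataclass(frozen=True)
class Context:
    name: str
    magnitude: float  # signed M in euros (+ for reward, - for loss)


CONTEXTS = [
    Context("reward/big", +1.0),
    Context("reward/small", +0.1),
    Context("loss/small", -0.1),
    Context("loss/big", -1.0),
]


def absolute_values(ctx: Context) -> tuple[float, float]:
    """Expected value (favorable, unfavorable) on the absolute scale."""
    if ctx.magnitude > 0:
        # Reward seeking: the favorable option gains M more often.
        p_fav, p_unf = P_HIGH, P_LOW
    else:
        # Loss avoidance: the favorable option loses M less often.
        p_fav, p_unf = P_LOW, P_HIGH
    return p_fav * ctx.magnitude, p_unf * ctx.magnitude


def relative_values(ctx: Context) -> tuple[float, float]:
    """Values after reference-point and range adaptation (sketch)."""
    fav, unf = absolute_values(ctx)
    reference = (fav + unf) / 2      # reference point: context mean
    value_range = abs(ctx.magnitude)  # range: size of the stake
    return (fav - reference) / value_range, (unf - reference) / value_range


if __name__ == "__main__":
    for ctx in CONTEXTS:
        print(ctx.name, absolute_values(ctx), relative_values(ctx))
```

Under these assumptions, the absolute values differ in sign and scale across the four contexts, whereas the relative values of the favorable and unfavorable options are approximately the same in every context, which is the pattern panel b depicts.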