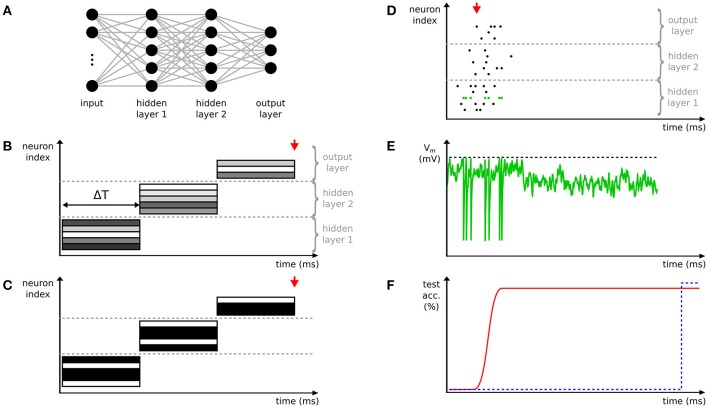

Figure 1.

Comparison of deep spiking neural networks (SNNs) to conventional deep neural networks (DNNs). (A) Example of a deep network with two hidden layers. Here, exemplarily a fully-connected network is shown. Neurons are depicted with circles, connections with lines. (B) Time-stepped layer-by-layer computation of activations in a conventional DNN with step duration ΔT. The activation values of neurons (rectangles) are exemplarily visualized with different gray values. The output of the network, e.g. categories in the case of a classification task, is only available after all layers are completely processed. (C) Like (B), but with binarized activations. (D) The activity of a deep SNN showing a fast and asynchronous propagation of spikes through the layers of the network. (E) The membrane potential of the neuron highlighted in green in (D). When the membrane potential (green) crosses the threshold (black dashed line) a spike is emitted and the membrane potential is reset. (F) The first spike in the output layer (red arrow in D) rapidly estimates the category (assuming a classification task) of the input. The accuracy of this estimation increases over time with the occurrence of more spikes (red line and Diehl et al., 2015). In contrast, the time-stepped synchronous operation mode of DNNs results in later, but potentially more accurate classifications compared to SNNs (blue dashed line and red arrows in B,C).