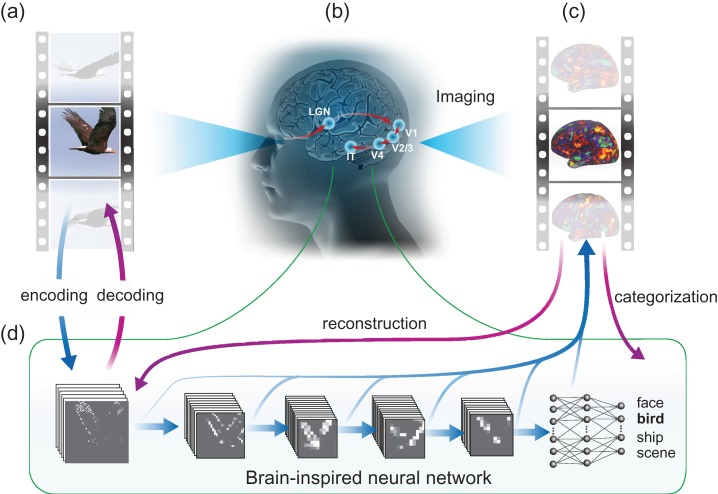

Figure 1.

Neural encoding and decoding through a deep-learning model. When a person watches a movie (a), the visual information is processed through a cascade of cortical areas (b), generating fMRI activity patterns (c). A deep CNN is used here to model cortical visual processing (d). This model transforms every movie frame into multiple layers of features, ranging from orientations and colors in the visual space (the first layer) to object categories in the semantic space (the eighth layer). For encoding, this network models the nonlinear relationship between the movie stimuli and the response at each cortical location. For decoding, cortical responses are combined across locations to estimate the feature outputs of the first and seventh layers. The former are deconvolved to reconstruct every movie frame, and the latter are classified into semantic categories.
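The encoding and decoding directions described in the caption can be illustrated with a minimal sketch. It assumes that the nonlinearity is carried entirely by the CNN features, so that each voxel's response is a linear function of those features (encoding) and each feature is a linear function of the voxel responses (decoding). The caption does not state the regression method; closed-form ridge regression, the helper names `ridge_fit` and `mean_corr`, and the synthetic data are all hypothetical stand-ins, not the authors' pipeline.

```python
import numpy as np

def ridge_fit(X, Y, alpha=1.0):
    """Closed-form ridge regression (an assumed choice): W = (X^T X + alpha*I)^-1 X^T Y."""
    n_features = X.shape[1]
    A = X.T @ X + alpha * np.eye(n_features)
    return np.linalg.solve(A, X.T @ Y)

def mean_corr(a, b):
    """Average Pearson correlation between matching columns of a and b."""
    a = (a - a.mean(0)) / a.std(0)
    b = (b - b.mean(0)) / b.std(0)
    return float((a * b).mean(0).mean())

rng = np.random.default_rng(0)
n_frames, n_feat, n_vox = 500, 20, 50

# Synthetic stand-ins: CNN layer features per movie frame (already a
# nonlinear function of the stimulus) and simulated fMRI responses per voxel.
features = rng.standard_normal((n_frames, n_feat))
true_W = rng.standard_normal((n_feat, n_vox))
responses = features @ true_W + 0.1 * rng.standard_normal((n_frames, n_vox))

train, test = slice(0, 400), slice(400, 500)

# Encoding: predict every voxel's response from the CNN features.
W_enc = ridge_fit(features[train], responses[train], alpha=1.0)
pred_responses = features[test] @ W_enc

# Decoding: combine responses across voxels to estimate the CNN features.
W_dec = ridge_fit(responses[train], features[train], alpha=1.0)
pred_features = responses[test] @ W_dec

enc_r = mean_corr(pred_responses, responses[test])
dec_r = mean_corr(pred_features, features[test])
print(f"encoding r = {enc_r:.2f}, decoding r = {dec_r:.2f}")
```

In this toy setting, decoding is well posed because there are more voxels than features. With real fMRI data, the relationship would be much noisier and the regularization strength would normally be chosen by cross-validation.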