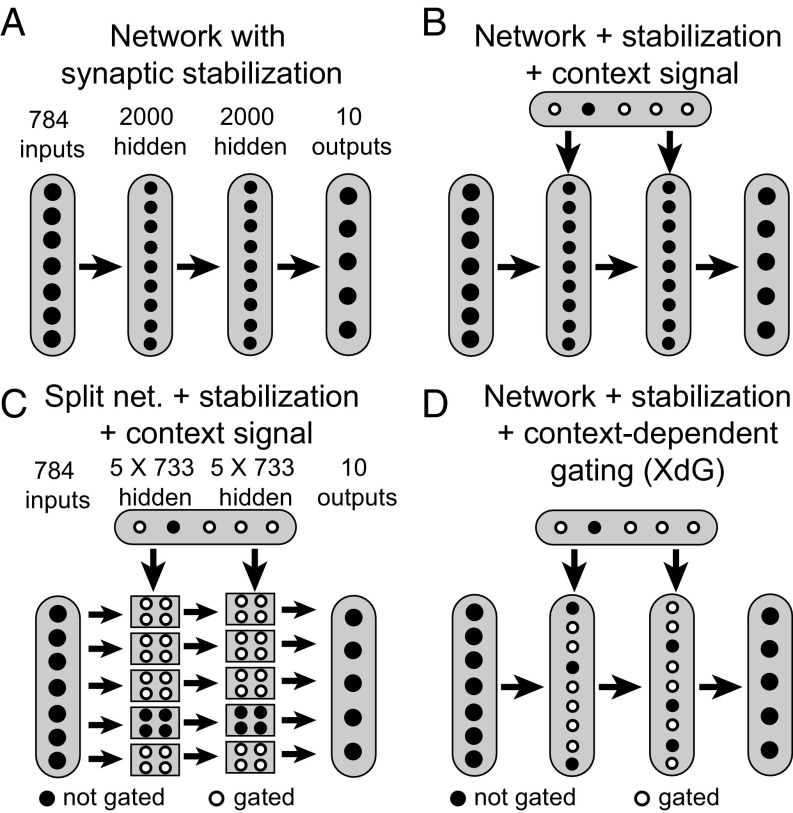

Fig. 1.

Network architectures for the permuted MNIST task. The ReLU activation function was applied to all hidden units. (A) The baseline network was a multilayer perceptron with two hidden layers of 2,000 units each. (B) For some networks, a context signal indicating task identity projected onto both hidden layers. The weights between the context signal and the hidden layers were trainable. (C) Split networks (net.) consisted of five independent subnetworks, with no connections between them. Each subnetwork had two hidden layers of 733 units each, so that the five subnetworks together contained approximately the same number of parameters as the full network described in A. Each subnetwork was trained and tested on 20% of the tasks, so for every task, four of the five subnetworks were set to zero (gated). A context signal, as described in B, projected onto both hidden layers. (D) XdG (context-dependent gating) consisted of multiplying the activity of a fixed percentage of hidden units by 0 (gated), while the remaining units were left unchanged (not gated). The results in Fig. 2D were obtained by gating 80% of hidden units.
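As a rough check on the parameter budget in panel C, the sketch below counts the weights of the full network and of the five split subnetworks. It assumes 784 inputs (28 × 28 MNIST pixels) and 10 output units, and it ignores biases and the context-signal weights; `mlp_weight_count` is a hypothetical helper, not code from the original work.

```python
def mlp_weight_count(n_in: int, hidden: list[int], n_out: int) -> int:
    """Count the weights (biases excluded) of a fully connected MLP."""
    sizes = [n_in] + hidden + [n_out]
    # One weight matrix between each pair of consecutive layers.
    return sum(a * b for a, b in zip(sizes[:-1], sizes[1:]))


# Assumptions: 784 input pixels, 10 output classes, no biases,
# context-signal weights omitted.
N_IN, N_OUT = 784, 10

full = mlp_weight_count(N_IN, [2000, 2000], N_OUT)       # panel A
split = 5 * mlp_weight_count(N_IN, [733, 733], N_OUT)    # panel C, five subnetworks

print(f"full network : {full:,} weights")
print(f"5 subnetworks: {split:,} weights")
print(f"relative difference: {abs(split - full) / full:.2%}")
```

Under these assumptions the two budgets differ by about 0.15%. Because the hidden-to-hidden weights grow quadratically with layer width, simply giving each subnetwork 2,000 / 5 = 400 units would cut the total to roughly 2.4 million weights, which is presumably why wider 733-unit layers were chosen.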
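The gating in panel D can be sketched in plain Python as follows. This is an illustrative sketch, not the authors' implementation: it assumes each task receives its own randomly drawn mask that stays fixed for that task, that each hidden layer has an independent mask, and that there are 10 tasks. The names `make_xdg_masks` and `gate` are hypothetical.

```python
import random


def make_xdg_masks(n_tasks: int, n_hidden: int, gate_frac: float = 0.8,
                   seed: int = 0) -> list[list[float]]:
    """Draw one fixed binary mask per task for a single hidden layer.

    1.0 means the unit is left unchanged (not gated);
    0.0 means its activity is multiplied by 0 (gated).
    """
    rng = random.Random(seed)
    n_gated = round(gate_frac * n_hidden)
    masks = []
    for _ in range(n_tasks):
        gated = set(rng.sample(range(n_hidden), n_gated))
        masks.append([0.0 if i in gated else 1.0 for i in range(n_hidden)])
    return masks


def relu(xs: list[float]) -> list[float]:
    return [max(0.0, x) for x in xs]


def gate(activity: list[float], mask: list[float]) -> list[float]:
    """Elementwise product of hidden-layer activity and the task's mask."""
    return [a * m for a, m in zip(activity, mask)]


# Hypothetical settings: 10 tasks, 2,000 units per hidden layer, and an
# independent mask for each hidden layer (layer 2 is gated the same way).
N_TASKS, N_HIDDEN = 10, 2000
masks_h1 = make_xdg_masks(N_TASKS, N_HIDDEN, seed=1)
masks_h2 = make_xdg_masks(N_TASKS, N_HIDDEN, seed=2)

task = 3
pre_activation = [1.0] * N_HIDDEN  # stand-in for the layer's W @ x + b
h1 = gate(relu(pre_activation), masks_h1[task])
print(f"active units in layer 1 for task {task}: {int(sum(h1))} / {N_HIDDEN}")
```

With 80% gating, 400 of the 2,000 units in each layer stay active for a given task, and because the masks are drawn independently, different tasks are routed through largely different subsets of units.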