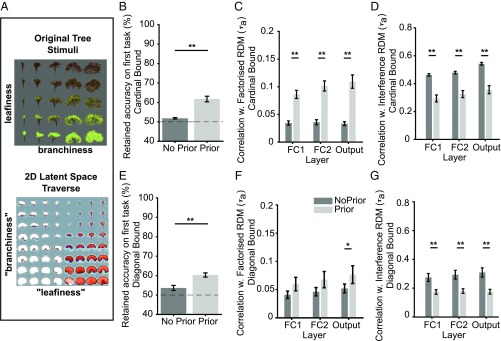

Fig. 7.

Results of experiment 4. (A) Experiment 4a. (Top) Examples of tree images used to train the autoencoder. (Bottom) Traversal of the 2D latent space of the trained autoencoder (see Methods). For each (x, y) coordinate pair, we plot a tree image sampled from the generative model, revealing that the autoencoder learned a disentangled, low-dimensional representation of branchiness and leafiness. (B) Experiment 4b (cardinal), blocked training: performance on the first task after training on the second task, compared between the model from experiment 3 (“vanilla” CNN, without priors) and the model from experiment 4 (“pretrained” CNN, with priors from the VAE encoder). Unsupervised pretraining partially mitigated catastrophic interference. (C) Experiment 4b (cardinal), blocked training: layer-wise correlations between the CNN's RDMs and the factorized model, compared between networks without and with unsupervised pretraining. Pretraining yielded stronger correlations with the factorized model in each layer. (D) Experiment 4b (cardinal), blocked training: layer-wise RDM correlations with the interference model. Likewise, pretraining significantly reduced correlations with the catastrophic interference model in each layer. (E) Experiment 4b (diagonal), blocked training: mean accuracy on the first task after training on the second task, for the vanilla and pretrained CNNs. Again, pretraining mitigated catastrophic interference. (F) Experiment 4b (diagonal), blocked training: RDM correlations with the factorized model increased only in the output layer. (G) Experiment 4b (diagonal), blocked training: RDM correlations with the interference model increased significantly in each layer. All error bars indicate SEM across independent runs. *P < 0.05; **P < 0.01.
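Panels C, D, F, and G report layer-wise correlations between each network layer's representational dissimilarity matrix (RDM) and a model RDM. The following is a minimal NumPy sketch of that kind of comparison, not the authors' analysis code. The Euclidean distance metric, the Spearman rank correlation, the helper names (`euclidean_rdm`, `upper_triangle`, `average_ranks`, `rdm_correlation`), and the toy 5 x 5 stimulus grid with a hypothetical leafiness-only model RDM are all assumptions made for illustration.

```python
import numpy as np


def euclidean_rdm(activations: np.ndarray) -> np.ndarray:
    """RDM from an (n_stimuli, n_units) activation matrix.

    Entry [i, j] is the Euclidean distance between the responses to stimuli i and j.
    Assumption: the caption does not state which distance metric was used.
    """
    diff = activations[:, None, :] - activations[None, :, :]
    return np.linalg.norm(diff, axis=-1)


def upper_triangle(m: np.ndarray) -> np.ndarray:
    """Off-diagonal upper-triangle entries (an RDM is symmetric with a zero diagonal)."""
    i, j = np.triu_indices(m.shape[0], k=1)
    return m[i, j]


def average_ranks(x: np.ndarray) -> np.ndarray:
    """0-based ranks in which tied values share their average rank (as Spearman requires)."""
    _, inverse, counts = np.unique(x, return_inverse=True, return_counts=True)
    first = np.cumsum(counts) - counts  # rank of the first element in each tie group
    return (first + (counts - 1) / 2.0)[inverse.ravel()]


def rdm_correlation(layer_rdm: np.ndarray, model_rdm: np.ndarray) -> float:
    """Spearman correlation between the upper triangles of two RDMs."""
    a = average_ranks(upper_triangle(layer_rdm))
    b = average_ranks(upper_triangle(model_rdm))
    return float(np.corrcoef(a, b)[0, 1])


# Toy 5 x 5 stimulus grid (hypothetical stand-in for branchiness x leafiness levels).
b_grid, l_grid = np.meshgrid(np.arange(5.0), np.arange(5.0), indexing="ij")
branchiness, leafiness = b_grid.ravel(), l_grid.ravel()

# Hypothetical model RDM: stimuli are dissimilar only insofar as their leafiness differs.
leaf_model = np.abs(leafiness[:, None] - leafiness[None, :])

# Toy "layers": one codes leafiness (scaled), the other codes only branchiness.
layer_leaf = euclidean_rdm(2.0 * leafiness[:, None])
layer_branch = euclidean_rdm(branchiness[:, None])

print(rdm_correlation(layer_leaf, leaf_model))    # ≈ 1.0: same rank order as the model
print(rdm_correlation(layer_branch, leaf_model))  # small: orthogonal feature
```

A layer whose pairwise distances preserve the rank order of the model RDM scores near 1, while a layer that codes only the orthogonal feature scores near 0. A rank correlation is used in this sketch because it does not assume a linear relation between network distances and model distances.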