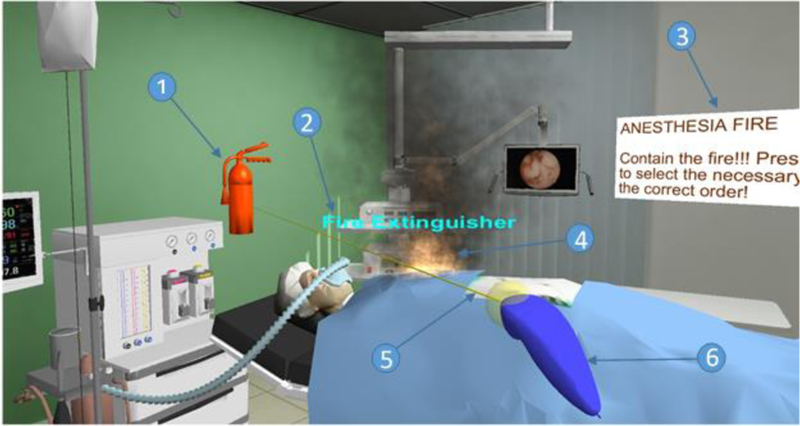

Figure 2: Simulated OR fire, caused by a gas enrichment area under the surgical drape

Figure 2 depicts a typical state of the virtual environment as seen by the user: (1) the currently selected object, (2) heads-up status text, (3) static instructions, (4) simulated flame and smoke, (5) the selection ray used for reaching distant objects, and (6) the virtual avatar of the physical selection device. The heads-up status text always remains in front of the user, regardless of where he/she is looking, and is used for critical real-time updates on the training scenario (e.g., the name of the currently selected object, or an indication of whether an action was correct). Objects in the virtual environment are selected using a two-tiered collision detection between the model of the interaction device and the virtual OR objects: axis-aligned bounding boxes are first used to identify potential collision candidates, and a mesh-to-mesh collision check is then performed on those candidates only.
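The two-tiered selection test can be illustrated with a minimal sketch, assuming triangle meshes stored as NumPy vertex and face arrays. The text does not say which mesh-to-mesh algorithm the simulator uses, so the narrow phase here is swapped for a plain per-triangle separating-axis test; `Mesh`, `select_object`, `tetra`, and the object names `"drape"` and `"cautery_pen"` are hypothetical.

```python
from dataclasses import dataclass
import numpy as np

@dataclass
class Mesh:
    """Hypothetical triangle mesh: vertices (N, 3) and face indices (M, 3)."""
    vertices: np.ndarray
    faces: np.ndarray

    def aabb(self):
        # Axis-aligned bounding box as (min corner, max corner).
        return self.vertices.min(axis=0), self.vertices.max(axis=0)

    def triangles(self):
        # Shape (M, 3, 3): three corner points per triangle.
        return self.vertices[self.faces]

def aabb_overlap(a, b):
    """Tier 1: boxes overlap iff their extents overlap on every axis."""
    (amin, amax), (bmin, bmax) = a, b
    return bool(np.all(amin <= bmax) and np.all(bmin <= amax))

def triangles_intersect(t1, t2, eps=1e-12):
    """Separating-axis test for one triangle pair (stand-in narrow phase)."""
    e1 = [t1[(i + 1) % 3] - t1[i] for i in range(3)]
    e2 = [t2[(i + 1) % 3] - t2[i] for i in range(3)]
    n1, n2 = np.cross(e1[0], e1[1]), np.cross(e2[0], e2[1])
    axes = [n1, n2] + [np.cross(a, b) for a in e1 for b in e2]
    # In-plane edge normals cover the coplanar case.
    axes += [np.cross(n1, e) for e in e1] + [np.cross(n2, e) for e in e2]
    for axis in axes:
        if np.dot(axis, axis) <= eps:
            continue  # degenerate axis (parallel edges)
        p1, p2 = t1 @ axis, t2 @ axis
        if p1.max() < p2.min() or p2.max() < p1.min():
            return False  # disjoint projections: triangles are separated
    return True

def _triangles_in_box(mesh, lo, hi):
    # Keep only triangles whose own AABB touches the region [lo, hi].
    tris = mesh.triangles()
    keep = np.all(tris.min(axis=1) <= hi, axis=1) & np.all(tris.max(axis=1) >= lo, axis=1)
    return tris[keep]

def meshes_collide(a, b):
    """AABB rejection first, then triangle pairs inside the box overlap."""
    box_a, box_b = a.aabb(), b.aabb()
    if not aabb_overlap(box_a, box_b):
        return False
    lo, hi = np.maximum(box_a[0], box_b[0]), np.minimum(box_a[1], box_b[1])
    tris_a, tris_b = _triangles_in_box(a, lo, hi), _triangles_in_box(b, lo, hi)
    return any(triangles_intersect(t1, t2) for t1 in tris_a for t2 in tris_b)

def select_object(device, scene):
    """Return the name of the first scene object the device touches, or None."""
    box = device.aabb()
    # Tier 1: bounding boxes yield the collision candidates.
    candidates = [name for name, mesh in scene.items() if aabb_overlap(box, mesh.aabb())]
    # Tier 2: exact mesh-to-mesh check on the candidates only.
    for name in candidates:
        if meshes_collide(device, scene[name]):
            return name
    return None

def tetra(offset):
    # Hypothetical test shape: unit tetrahedron translated by `offset`.
    v = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1]], float) + offset
    f = np.array([[0, 1, 2], [0, 1, 3], [0, 2, 3], [1, 2, 3]])
    return Mesh(v, f)

device = tetra([0.2, 0.2, 0.2])
scene = {"drape": tetra([0, 0, 0]), "cautery_pen": tetra([5, 5, 5])}
print(select_object(device, scene))  # prints drape
```

The bounding-box tier discards most objects with six comparisons each, so the costly triangle-pair loop runs only for nearby candidates. Because this sketch tests surfaces only, it would miss a device mesh fully enclosed by another mesh; production engines typically also replace the brute-force pair loop with a bounding-volume hierarchy.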