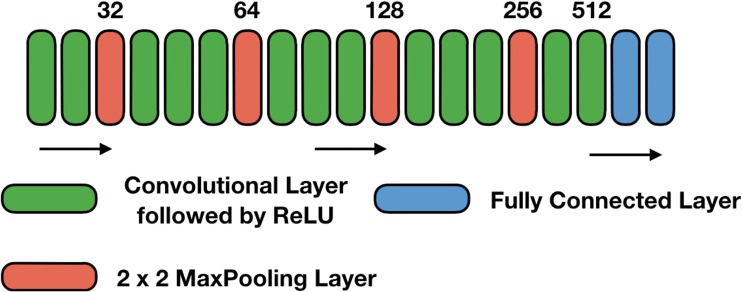

Fig. 2.

Overview of our neural network architecture. Starting from 32 convolution filters, the number of filters doubles after every max pooling layer (indicated by the numbers above the boxes). The first FC layer has 256 nodes and uses a ReLU activation function. The second FC layer, which is the output layer, has two nodes and uses a softmax activation function.
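
As a rough illustration of the layer sizes described in this caption, the following sketch computes the filter count for each convolution block and applies a softmax to a two-node output. The helper names `filter_schedule` and `softmax` and the depth of four blocks are assumptions made for illustration only; the caption does not state the number of pooling stages, and this is not the authors' implementation.

```python
import math


def filter_schedule(num_blocks, base_filters=32):
    """Filters per convolution block, doubling after every max pooling layer."""
    return [base_filters * 2 ** i for i in range(num_blocks)]


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical depth of four blocks; the real depth is shown only in the figure.
print(filter_schedule(4))

# Two output nodes, as in the final FC layer; the logit values are made up.
print(softmax([1.0, 2.0]))
```

The doubling schedule depends only on the starting filter count and the number of pooling stages, so a different depth changes the length of the list but not the rule.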