Abstract

Background and Objectives:

The use of robotics in surgery was hypothesized as early as 1967, but it took nearly 30 years, and the efforts of the nation's largest agency, the Department of Defense, working with innovative startups and established research agencies, to complete the first fully functional multipurpose surgical robot. Currently, the most widely available multipurpose robotic surgery system with US Food and Drug Administration approval is Intuitive Surgical Inc.'s da Vinci Surgical System, which is found in operating rooms across the globe. Although such systems are now ubiquitous in minimally invasive surgery, early surgical robot prototypes were specialty focused. Originally, multipurpose robotic systems were intended for long-distance trauma surgery in battlefield settings. Although there were impressive feats of telesurgery, the commercial focus has since veered from this goal. Initially developed through SRI International and the Defense Advanced Research Projects Agency, surgical robotics reached private industry through two major competitors, which later merged.

Methods:

A thorough search of PubMed, Clinical Key, EBSCO, Ovid, ProQuest, and industry manufacturers' websites yielded 62 relevant articles, of which 51 were evaluated in this review.

Conclusion:

We analyzed the literature and consulted primary sources, conducting interviews with present and historical leaders in the field, to produce a detailed chronology of surgical robotics development. As minimally invasive robotic procedures become the standard of care, it is crucial to document comprehensively their historical context and importance as an emerging and evolving discipline.

Keywords: History, Robotic, Surgery

INTRODUCTION

The idea of using robotics for surgery arose more than 50 years ago, but actual use began in the late 1980s with Robodoc (Integrated Surgical Systems, Sacramento, CA), the orthopedic image-guided system developed by Hap Paul, DVM, and William Bargar, MD, for use in prosthetic hip replacement.1–3 While Drs. Paul and Bargar were developing Robodoc, Brian Davies and John Wickham were developing a urologic robot for prostate surgery.4 In addition, a number of computer-assisted systems were in use in neurosurgery (stereotactic systems) and otolaryngology. These procedure-specific, computer-assisted, image-guided systems proved both the potential and the value of robotic surgery. They also heralded the multipurpose teleoperated robotic systems initially developed by SRI International and the Defense Advanced Research Projects Agency (DARPA), which led to the surgeon-controlled (multifunctional) robotic telepresence surgery systems that have become a standard of care. The impetus to develop these systems stemmed from the Department of Defense's need to decrease battlefield casualties, and DARPA was precisely the agency to conduct such high-risk research and development.

BACKGROUND

During combat, the first medical support for a battlefield injury is the combat medic sent from the battalion aid station. Support units grow progressively larger with distance from the combat zone, in an expanding pattern that ends at aeromedical staging.5 As such, the area with the most limited resources is the one closest to the site of injury. The medic's goal, then, must be mainly stabilization for evacuation to rear units.6

The primary causes of death in combat casualties are hemorrhagic shock and polytrauma, compounded by the inability to transfer the patient, hidden sources of trauma, and secondary injuries.5 These factors often combine and compound to create a highly unstable patient in need of immediate and advanced medical attention. In 1993, evidence accumulated to support the concept of damage control surgery (that is, surgery limited to controlling hemorrhage and minimizing contamination), and the military trained forward surgical teams (FSTs) to provide only critical life-saving surgery in the forward battlefield.7

For the military, there was then an obvious need to provide expert surgical care immediately after major trauma. Under the traditional “Golden Hour,” the focus is primarily hemorrhage control and prompt evacuation for definitive surgical care to prevent death from massive trauma. The military intended to shift this paradigm to the “Golden Minute” by bringing the operating room to the casualty and using damage control surgery as a temporizing measure, rather than evacuating the casualty back to the closest mobile army surgical hospital (MASH). When the prototype of the first surgical robot, the Green Telepresence System, was shown to Surgeon General of the Army Alcide LaNoue as a potential life-saving device capable of remote damage control surgery, a public–private partnership was struck that would eventually catapult robotic technology into operating rooms across the world.8

ORIGINS OF VIRTUAL REALITY

Although the phrase “virtual reality” (VR) would not be coined for another two decades, by the 1960s scientists were working with the concept of transporting one's awareness (presence) to another environment. At the National Aeronautics and Space Administration's (NASA) Ames Research Center, Michael McGreevy, PhD, and Stephen Ellis, PhD, were doing just that by developing Ivan Sutherland's original concept. Their system used a heavy helmet mount or ceiling-suspended monitors to display a 3-dimensional (3-D) image, and their initial goal was reviewing data from the recent Voyager mission.8,9 They were soon joined by Scott Fisher, PhD, who added 3-D audio to the device and termed the integrated system “telepresence.”8

Jaron Lanier, who coined the term “virtual reality,” created a wearable head-mounted display (HMD) and invented an intuitive human interface technology, VPL, Inc.'s DataGlove, a hand gesture interface tool that allowed users to interact with and control images in the VR environment.10 The DataGlove could measure hand position and orientation and provide haptic feedback to the wearer through a series of optical goniometer flex sensors, piezoceramic benders, and low-frequency magnetic fields.10 By 1987, the state of the art of VR, although rather crude, was wearing an HMD and interacting with and controlling images in a computer-generated (virtual) environment. Combining VR and robotics thus led to the realization of telepresence as originally conceived by Scott Fisher (Figure 1).

Figure 1.

Head mounted display (HMD) with DataGlove interface, a theoretical controller for a telerobotics system, demonstrated by Dr. Scott Fisher.

THE GREEN TELEPRESENCE SURGERY SYSTEM

The robotics components of early telepresence surgery came from Phil Green, PhD, of Stanford Research Institute (SRI, later SRI International), a civilian institute with a federally funded research and development center (FFRDC).9 Dr. Green's background in ultrasonic research gave him a foundation in bioengineering for what was to come. Joined by plastic surgery resident Joseph Rosen, MD, who saw applications in nerve and vascular anastomosis, Green and his team began developing a dexterous manipulator for microsurgery.9

The earliest concepts, in 1986, had a surgeon wearing an HMD with 3-D visual and audio display, as well as DataGloves, to control remote operative instruments.8 The gloves, however, lacked sufficient fidelity for dexterous control in surgical environments, and the graphics generated by the helmet were inadequate for safe operative visualization.8 In 1987, Joseph Rosen left Stanford for a faculty position at Dartmouth Medical Center, and US Army Colonel (COL) Richard Satava, MD, joined the team as SRI began construction of the first prototype of a robotic surgery system, which Dr. Green called the “telepresence surgery system.” The team created a workstation that used handles from actual surgical instruments in place of gloves, and a stereoscopic monitor in place of the HMD.11

The first constructed prototype (Figure 2) consisted of 2 discrete units: the telepresence surgeon's workstation (TSW) and a remote surgical unit (RSU). At the TSW, a surgeon sat at a stereoscopic video monitor with a pair of instrument manipulators that transmitted his or her hand motions to the RSU.12 The monitor provided a 120-degree field of view through a liquid-crystal shutter, which required the surgeon to wear passive polarized glasses to perceive a 3-D image.13 To conserve space, the monitor was mounted at the level of the surgeon's head but pointed down toward a mirror, in which the surgeon could view the image in an ergonomically comfortable position (5–15 degrees below horizontal). The manipulators were located below the mirror, creating the illusion that the instrument handles in the surgeon's hands were connected to the instrument tips the surgeon saw on the video monitor. The workstation was also ergonomically designed for comfort while the surgeon was seated. Additionally, the TSW featured a speaker placed below the mirror, reinforcing the illusion that the audio originated from the surgical site.

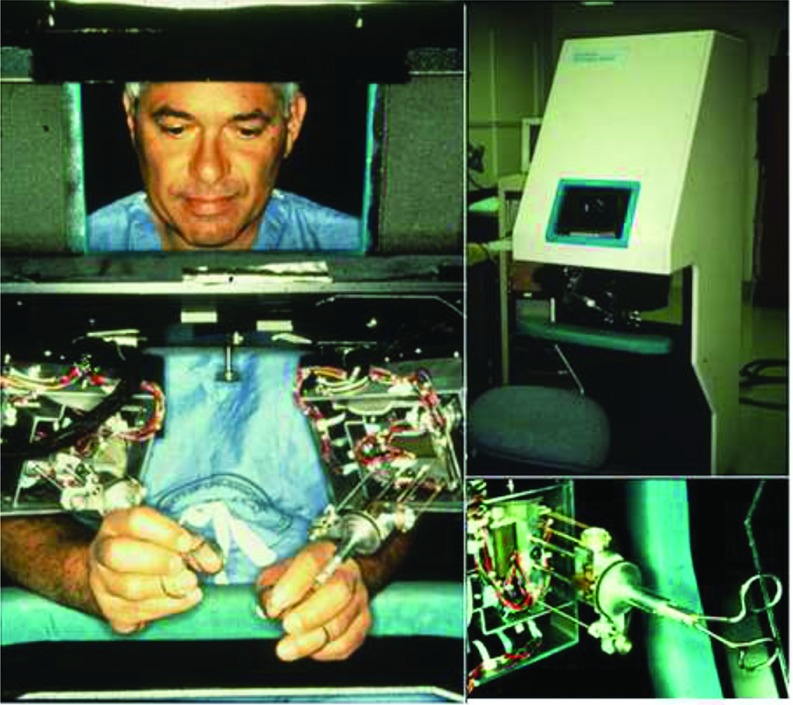

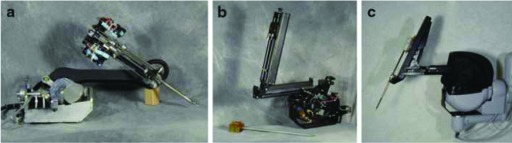

Figure 2.

(A) COL Anthony LaPorta operating at the TSW. (B) Early version of the TSW (note the ergonomic design, adjustable stool, and armrest to stabilize and rest the arms). (C) TSW master controls, reengineered from a standard surgical instrument.

At the RSU, the manipulator end effectors (Figure 3) could receive exchangeable instrument tips via a twist-lock mechanism, including forceps, needle drivers, bowel graspers, scalpels, and cautery tips.13,14 A pair of stereoscopic video cameras was positioned to follow the surgeon's normal line of sight.13

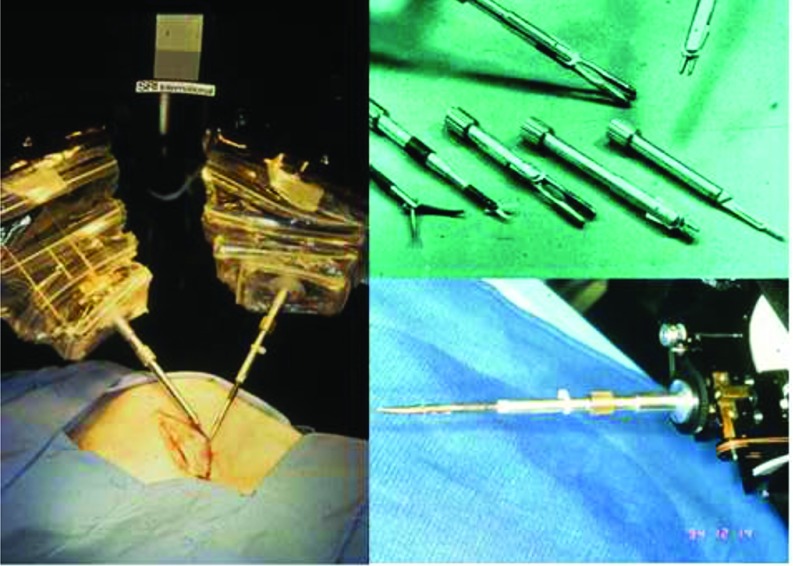

Figure 3.

(A) Remote surgical unit being used to create an incision. (B) Exchangeable end effectors for SRI's RSU. (C) RSU end effector with instrument attached.

The first manipulators used by SRI were patented in 1995, and the patent was continued by Dr. Green in 1998.14 One of the most notable differences between SRI's system and those available today was SRI's inclusion of haptic feedback. The Green Telepresence System's manipulators had force-sensing elements on the distal portion of the mechanism that could sense lateral forces and transmit the sensations to the surgeon's controllers.14,15 When resistance was met in the operative field, the surgeon's controller was prevented from further motion. Early versions of the manipulators were limited to 4 degrees of freedom (DOFs), allowing translational movement in 3 dimensions plus axial rotation, compared with the at least 7 DOFs with which the human arm manipulates instruments (the wrist provides the 3 additional DOFs).

The system was originally conceived for open surgery, but when COL Satava saw Dr. Jacques Perissat's videotaped laparoscopic cholecystectomy presented at the Society of American Gastrointestinal and Endoscopic Surgeons (SAGES) conference in 1989, he urged the SRI team to redirect the telepresence system toward laparoscopic surgery.9 COL Satava argued that the system's robotic instrumentation solved the problem of the fulcrum effect inherent in traditional laparoscopic tools.16 In addition, it provided full high-definition stereoscopic vision, enhanced dexterity, tremor reduction, and motion scaling that could improve a surgeon's performance, even beyond human physical limitations.

Video of telepresence surgery shown by COL Satava to COL Russ Zajtchuk, MD, and Donald Jenkins, PhD, at Walter Reed Army Medical Center, and subsequently to LT GEN LaNoue, led to COL Satava's assignment in 1992 to the Defense Advanced Research Projects Agency (DARPA; known as the Advanced Research Projects Agency [ARPA] from 1993 to 1996) to develop the telepresence system for potential military applications.9

DARPA CONTRIBUTIONS

At DARPA, the telepresence prototype fell under the Advanced Biomedical Technologies (ABMT) program, comanaged by COL Satava and Donald Jenkins and later joined by Commander Shaun Jones, MD, in 1992. ABMT had far-reaching goals for both civilian and military applications. By 2000, more than 50 individual projects had been documented, some of which have become commonplace in hospitals today (e.g., medical simulation, direct digital radiology, SonoSite portable ultrasound, and telepathology).17 ABMT focused on improving military medicine, with an emphasis on providing emergency medical care to combat casualties.8,9,17 The rate-limiting factor in the treatment of battlefield injuries is the absence of a surgeon at the casualty site who is available to provide immediate care. This is further compounded by the distance of the surgeon from the site of injury, restricted accessibility, and limited support personnel.5

Telepresence surgery offered a possible solution by removing distance as a barrier to immediate, intensive treatment. At the time of the DARPA grant, the TSW and RSU still communicated over a cable, but the system had strong potential to allow a surgeon to operate remotely on an injured soldier immediately after the trauma was incurred while minimizing the risk of harm to the operational team.

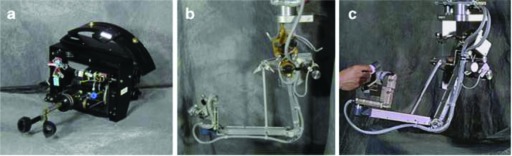

The proposed concept placed the TSW at a MASH unit, while the RSU was transported to the patient's side in an M577A armored vehicle, a standard armored infantry personnel carrier, for medical forward advanced surgical treatment (MEDFAST) (Figure 4). The surgeon could then operate with bedside assistance from a medic while the patient was being transported back to the nearest MASH. From the outset, DARPA planned for SRI's prototype to be integrated with other medical systems. Many of the projects DARPA was funding simultaneously were intended for eventual integration with the telepresence system, such as digital radiographs and Life Support for Trauma and Transport (LSTAT), a platform for casualty evacuation.17 However, the telepresence system was the centerpiece of MEDFAST because, functionally, the robot was not a machine but rather an information system that could integrate all the capabilities (telecommunications, remote imaging, computer-enhanced manipulation, image acquisition, and guidance) needed to support remote surgery.

Figure 4.

DARPA's original concept for the MEDFAST surgical unit, connected by a mobile 2-way microwave communication link.

A secondary effort was to develop and integrate a robotic surgical scrub nurse, built from modified commercial off-the-shelf (COTS) technology, that could change tools on the end effectors or dispense pharmaceuticals. Such an integration of components, called a robotic cell, had been present in manufacturing for decades but was not demonstrated in the operating room until 2004.

Integration with the M577A coincided with several key enhancements. Visualization was upgraded to 2× to 3× magnification in full-color high definition, allowing surgeons to see objects as small as 1 mm clearly. The camera was also made capable of motion compensation within a moving vehicle: although all tests of MEDFAST were stationary, the camera could move very slightly to keep the visualized surgical field stable from the surgeon's point of view (“virtual stillness”). The master controllers were also programmed for tremor reduction at the TSW.

The MEDFAST, either as a modular system or integrated with the M577A, was now a fully functional operating room equipped for both basic life support and surgical intervention. Its systems included technology for infusion, electrosurgery, anesthesia, respiratory support, suction, imaging, video monitoring, and full-spectrum telecommunications from the MEDFAST to the central receiving MASH.17

Under contract with DARPA, SRI was then tasked with multiple goals, including developing a functional telepresence surgery system and installing and maintaining it at the Uniformed Services University of the Health Sciences (USUHS) in Bethesda, MD.12 The final assignment was to create a VR-based simulator for the TSW to integrate training and preoperative planning with the system, also to be installed at USUHS.17

The first demonstration of the telepresence surgery system occurred in June 1993 during field exercises at Fort Gordon in Augusta, GA. The patient was simulated by a mannequin with excised pig intestines placed in the body cavity. The mannequin and RSU were located in a mobile operating room 30 m from a tent housing the surgeon and console.13 This first trial was well received, but modifications were deemed necessary: the model featured at Fort Gordon was capable of only one-handed surgery. Adaptations were quickly made to add a second hand, as well as a panoramic LCD display for operating room visualization.13

The entire system was shipped to the Association of the U.S. Army Annual Convention in October 1994. With the M577A in the parking lot and the RSU linked by 160 m of cable in the hotel's exhibition hall, attendees were invited to the console. A similar simulation using lacerated pig intestine was enhanced with manually operated “bleeders.”8,13 Participants in the demonstration included Secretary of Defense William Perry, who, with no prior surgical experience, was able to place a suture and tie a knot in the tissue, illustrating the system's intuitive nature and concluding the initial development phases.8 The final target, which was never completed because of political considerations, was to be the development of a next-generation miniaturized computed tomography (CT) scanner that would be incorporated into the MEDFAST, along with integration of all the patient-monitoring systems, LSTAT, and the robotic cell.

ANIMAL LABS

Following SRI's success with anatomical models, the team moved on to both ex vivo and in vivo validation. With seed funding provided by DARPA in early 1995, they demonstrated the feasibility of performing anastomoses on bovine and porcine vessels. When COL Satava left SRI in 1992 to begin working with DARPA, he was replaced as medical scientist by Jon Bowersox, MD, PhD, a vascular surgeon at Stanford Medical Center.8

Initial trials used a 4-DOF model in which the surgeon's workstation and the remote surgical unit remained hard-wired together.18 Using segments of excised bovine aorta, Dr. Bowersox and his team used SRI's system to close incisions with a running suture. The operating surgeon successfully closed arteriotomies with bedside assistance for suture cutting and initial suture placement; however, the procedures took significantly longer.18 Dr. Bowersox also successfully performed a patch angioplasty as well as an aortic graft anastomosis with thin-walled PTFE (Figure 5).18

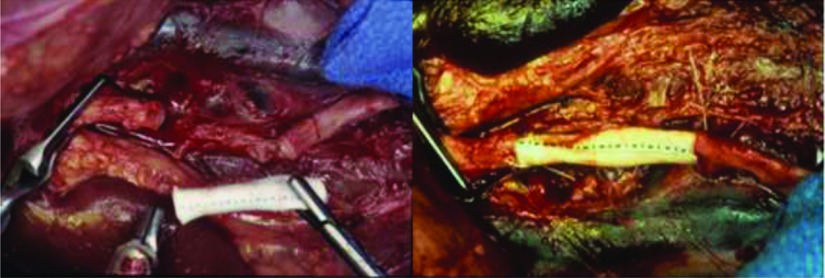

Figure 5.

Clamped (A) and femoral artery (B) repairs performed with SRI's Telepresence System.

In vivo porcine studies followed, using exposed and clamped femoral arteries. As in the bovine studies, arteriotomy repairs were made with a continuous running suture.18 Each repair was assessed immediately and after 1 hour with Doppler ultrasound to confirm pulsatile blood flow. Again, all surgeries were successful, but they took significantly longer than even the comparable ex vivo experiments.18

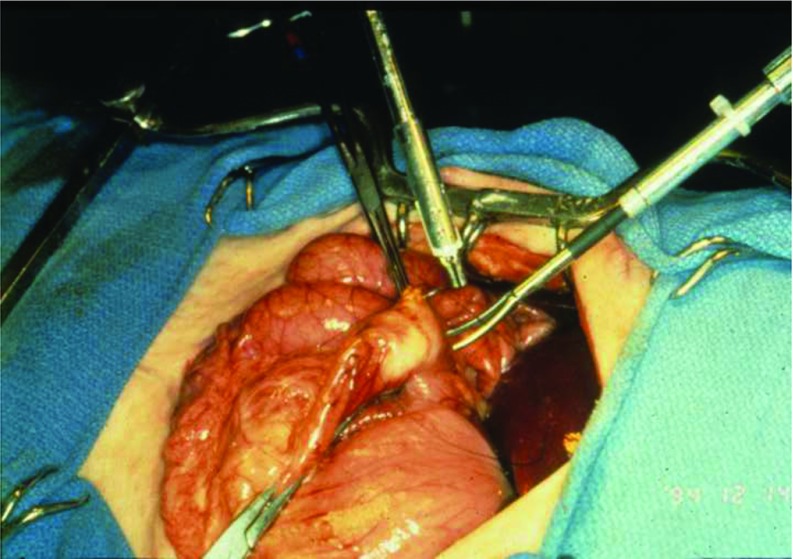

The potential vascular uses provided a baseline for future endeavors. The SRI team then progressed to trauma surgery, which held particular significance for potential military applications. For testing, a variety of penetrating injuries were created on the visceral surfaces of anesthetized swine and then repaired by both traditional and telesurgical means. Procedures included 2-layer closure of anterior surface gastrotomies (Figure 6), cholecystectomies, repair of liver lacerations after anticoagulant administration, and closure of full-thickness enterotomies.19

Figure 6.

Two-layer gastrotomy closure performed with SRI's Telepresence System.

Next, SRI's team attempted urologic procedures with the telepresence system, including nephrectomy, cystostomy closure, and ureteroureterostomy.20 In all procedures, a surgical assistant was needed to exchange instrument end effectors and to adjust the camera magnification. A simulated fiberoptic endoscopy was also performed using segments of excised porcine intestine: with the robotic manipulators, the endoscope was fed through the simulated lumen to the ureter until a planted stone was visualized.20

The porcine labs revealed several trends in operative time. Procedures took significantly longer with the telesurgical unit than with open methods; however, when used for microsurgery or laparoscopic cases, robotic assistance decreased operating time.21 One factor contributing to the prolonged operative time with the robotic system was the lack of wristed instrumentation, so improvements were made to increase the number of DOFs.12 SRI had designed a prototype with 7 DOFs by 1995, but because of contracting delays, the most advanced system at project closeout in 1999 had only 6 DOFs.18 Functionality was further limited by the inability to clutch the master controllers, a fixed camera position, and fixed instrumentation.

An interesting addition to the completed telesurgical system was a robotic module that could fulfill the roles of both circulating and scrub nurses in the operating room. In this project, codeveloped by the Department of Energy's Oak Ridge National Laboratory and SRI International, a standard automated pharmacy drug dispenser was modified to dispense surgical supplies, and a modified tool-changing device from the manufacturing industry was used to change surgical instruments. The final module was integrated with the telepresence surgery system, and in 1999 it was used to demonstrate the efficiency and effectiveness of an integrated system, referred to as a “robotic cell.” The project closed out before integration into the MEDFAST, and, to date, there has been little interest in a fully integrated robotic cell for the OR, even though such cells have become standard in most high-precision industries.

THE FINAL SRI SYSTEM

By 1996, SRI had conducted experiments with microwave telecommunication between an RSU mounted in the M577A and a stationary TSW up to 5 km apart.9,22 During one demonstration, Sam Wells, MD (former president of the American College of Surgeons), used an updated 6-DOF system to perform suturing and dexterity tasks.

USUHS received its completed MEDFAST unit in July 1997, and the telepresence simulation platform was installed 2 years later.12 Both were intended for the university's simulation and readiness center, which was still in development.

The final version of the surgeon's workstation delivered to USUHS had push-rod and cable-driven manipulator arms with 6 DOFs (including grasp), each with a 30-cm range of motion. Manipulator handles could be exchanged between hemostat and forceps handles to mimic the tool being used intraoperatively. Images captured at the RSU were displayed at the TSW on a stereo display with 640 × 480-pixel resolution.12

At the RSU, similar 6-DOF instruments could be exchanged by the telesurgeon or surgical assistant. A hemostat, needle driver, scissors, or forceps was available, each with an individualized but fixed closing force for atraumatic tissue grasping. The operative field was visualized with 3 vertical line cameras with a resolution of 700 × 494 pixels.12 The RSU and TSW communicated by a 2- to 8-GHz DS3 and DS1 digital microwave radio signal over a maximum distance of 5 km.12 The MEDFAST's mobile antenna was described as “quite small,” on a 16-foot mast, transmitting to a base antenna on a 24-foot mast. Servo latency was only 1 ms, while video latency was more significant at 50 ms.12

Unlike any current robotic surgery system, SRI's prototype had force (pressure) feedback, although this proved problematic. Pressure is only one of the many sensations mapped to the human hand. Without sensations such as vibration, static friction, stress, tension, and tangential force, surgeons struggled with repeated suture breakage. When the force feedback was turned off, however, high-definition images combined with monocular cues compensated for the lack of tactile information.

The SRI system was never intended for human use or commercialization, but as the research prototype developed, the concept proved valid. Although funded by external sources, FFRDCs like SRI have numerous exit strategies once a project is ready for commercialization, such as starting a spin-off company or licensing patents. SRI chose the latter, and between 1993 and 1994 efforts were made to pitch the system to venture capitalists.23

PRIVATE INDUSTRY: COMPUTER MOTION

In 1990, Dr. Yulun Wang founded Computer Motion with the goal of creating an endoscope holder. With initial funding from a NASA Jet Propulsion Laboratory Small Business Innovation Research (JPL SBIR) grant, supplemented with DARPA funding in 1992, Computer Motion developed AESOP, the first surgical robot to receive FDA clearance. While searching for marketable gaps in laparoscopic surgery, Dr. Wang identified 2 distinct needs: first, articulating instrumentation, and second, a stable laparoscope holder that the surgeon could control. Dr. Wang met COL Satava at the first Medicine Meets Virtual Reality Conference in 1991 and subsequently received seed funding from DARPA. AESOP (Automated Endoscopic System for Optimal Positioning) used voice commands to provide hands-free intraoperative maneuvering. Not only was the technology itself significant, as the first voice-controlled equipment approved for use in the OR and the first FDA-cleared surgical robot, but Computer Motion also used the FDA's 510(k) process instead of class III approval, allowing AESOP to reach the market several years sooner and setting a precedent for future competitors. AESOP's success is illustrated by its adoption in more than 1000 hospitals, and it represents the beginning of robotic surgery's global impact.

In 1996, in collaboration with Stryker Endoscopy, Computer Motion announced HERMES, which extended voice control and feedback to other components of the operating room. The company quickly advanced to the first complete robotic surgery system, ZEUS, also in 1996 (Figure 7). ZEUS used the same software and platform as AESOP's robotic arm but incorporated laparoscopic instrumentation. ZEUS was aimed both at improving laparoscopic surgery and at the original intended use of surgical robotics as a remote surgery system. Instead of a stereoscopic viewer, the surgeon sat at a monitor wearing polarizing glasses, much like the SRI system. Early versions had only 6 DOFs, but a seventh was added later. Motion scaling and tremor elimination were also key features.

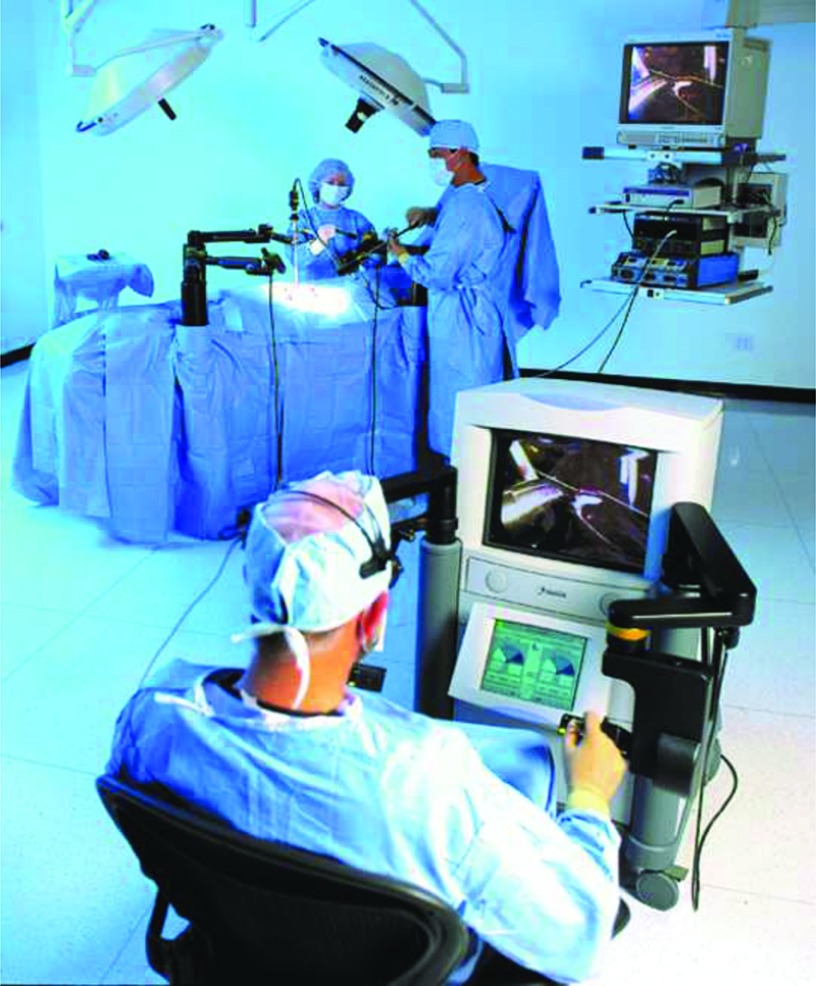

Figure 7.

Computer Motion's ZEUS in an operating room.

Computer Motion made cardiothoracic surgery the original target for ZEUS, with particular emphasis on developing a minimally invasive approach to coronary artery bypass graft surgery (CABG). However, robotic CABG faced several difficulties, chiefly that there was still not enough space to position instruments accurately, even articulated ones. In the same time frame, catheter-based procedures were showing efficacy. ZEUS, much like the later da Vinci, found a market in urologic surgery, although it was short lived, and was also used in groundbreaking telesurgical applications.

PRIVATE INDUSTRY: INTUITIVE SURGICAL

Fred Moll, MD, then an employee of Guidant, saw an opportunity for growth in laparoscopic surgery. He was assisted by John Freund, MD, who had recently left Acuson Corporation and who negotiated for SRI's intellectual property. In 1995, Drs. Moll and Freund were joined by Robert Younge, an electrical engineer who had also left Acuson, and together they founded Intuitive Surgical.

Intuitive's first prototype was Lenny (Figure 8a, 9a), short for Leonardo, which picked up where SRI's prototype left off by adding a wrist to the insertion axis of the patient-side instrument manipulator, creating a sixth and seventh DOF with grasp. The patient-side manipulators were manually attached to the surgical table and had fixed instrumentation.24 Whereas SRI's prototype had a passively polarized visualization system, Lenny used active shuttering glasses synchronized with alternating left- and right-eye video monitor frames, transmitted from a Welch Allyn endoscope to a Crystal Eyes display. The patient-side component of Lenny consisted of 3 separate robotic arms that clamped to the operating table: two were instrument arms, and the third held the endoscope.24 Although Lenny was used in animal trials in 1996, it was mechanically unreliable and did not offer the surgeon sufficiently high-quality visualization for operating.24

Figure 8.

Lenny, Mona, and da Vinci patient-side manipulators.

Figure 9.

Lenny, Mona, and da Vinci master controllers.

Following Lenny, Intuitive developed Mona (Figure 8b, 9b), named for Leonardo da Vinci's Mona Lisa, which in 1997 became Intuitive's first robotic surgical system to enter human trials. Part of what enabled this step was the addition of instruments that could be exchanged while maintaining a sterile field. Although the instrument interface, with 4 rotary inputs, was an improvement over Lenny's, instrument engagement still failed about 20% of the time.24

Moll found a surgeon for Mona in Jacques Himpens, MD, a bariatric surgeon at Sint-Blasius General Hospital in Dendermonde, Belgium. The first procedure attempted was a cholecystectomy performed on a 72-year-old woman on March 3, 1997.25 Guido Leman, MD, provided patient-side assistance and, because Mona lacked a camera-holding arm, held the endoscope for the duration of the procedure.25 Dr. Himpens operated the robotic instruments through two 10-mm ports, with the endoscope inserted through a third; additional ports were used for clip placement and liver retraction. Later the same day, Drs. Himpens and Leman performed a second cholecystectomy as well as an adhesion lysis. The following day, Marc Bosiers, MD, performed 2 arteriovenous fistula procedures for dialysis access using Mona.26,27

Those interested in publications about the first human trials will be disappointed. Although Dr. Himpens documented the event in a letter to the editor published in Surgical Endoscopy, submissions were refused by The New England Journal of Medicine and The Lancet because of the seemingly incredible nature of robots assisting with surgery.25,27 Mona again reached audiences in Obesity Surgery after the successful completion of a gastric banding procedure.28 The case, performed at the University Hospital Saint-Pierre in Brussels, Belgium, on September 16, 1998, was as successful as its predecessors. Dr. Cadiere highlighted 3 points in the piece: that telesurgery is safe and feasible, that it is ergonomically advantageous, and that these advantages are particularly evident in bariatric surgery because of fixed trocars and the difficulty of positioning the surgeon relative to the patient.28 As a side note on diction, this piece used both the term “telesurgery,” which has since been reserved for long-distance surgery, and “robotic-assisted,” which is common today.

Although all the surgeries were successful, the investigators noted that the robot was most beneficial in confined spaces or when microsuturing was involved. Several flaws were still evident, including a fragile instrument exchange coupling, inadequate visualization, and an unwieldy setup process.24 These issues were addressed in Intuitive's next development: the da Vinci.

The da Vinci's most obvious departure from its predecessors was a stand-alone cart housing the patient-side operative components, which eliminated the need to mount the slave manipulators to the operating table and increased positioning flexibility through overhead arms.24 The patient-side cart also removed limitations on patient positioning and the counterbalancing problems that arose when the table was tilted. The da Vinci also had a completely revamped stereoscopic viewer. In place of a single shuttered video display used with glasses, the viewer had a discrete video output for each eye, which reduced fatigue and nausea. The master manipulators progressed from the telescoping design used by SRI to a backhoe design, enabling freer motion.24 The previous model's master controllers had mechanical components positioned above the surgeon's hands, which could at times restrict range of motion. On the patient side, the Welch Allyn endoscope used previously was replaced with a custom-built dual-lens endoscope from Precision Optics Corporation. This change resolved some of the video quality issues and contributed to greater depth perception.24 Problems with instrument exchange were bypassed with an Oldham coupling design, in which 3 disks are locked together by tongue-and-groove joints.24 This design tolerated a degree of misalignment between the instrument input axes arising from variations in manufacturing.

Intuitive brought the first iteration of the da Vinci (Figure 7c, 8c) into human trials in 1998 in Mexico, Germany, and France. Procedures included cholecystectomy, Nissen fundoplication, thoracoscopic internal mammary artery harvesting, and mitral valve repair.24 Using a minithoracotomy technique with incisions ranging from 0.8 to 8.0 cm, the da Vinci performed a cardiac valve repair that was significantly less invasive than the standard sternotomy.29 A month after the first robotic-assisted valve repair, the da Vinci was used for CABG.29 The first commercial sale of the da Vinci was to the Leipzig Heart Center in Germany in late 1998, and within a year sales had expanded to 10 units throughout Europe.24 By February 2001, the Brussels team, alongside groups in Mexico City and Paris, had logged an impressive variety of cases in 146 patients, including antireflux procedures, tubal reanastomosis, gastroplasties, inguinal hernias, intrarectal procedures, hysterectomies, cardiac procedures, prostatectomies, and others, using both Mona and the upgraded da Vinci.30 In the United States, the da Vinci obtained FDA clearance in 1997, but only for visualization and tissue retraction.24 Not until 2000 could the device use its full instrumentation for general surgery indications, including cholecystectomy and Nissen fundoplication.31 Clearance was earned through the same 510(k) process used by Computer Motion: because Intuitive could claim equivalence to the prior technology, it was able to proceed rapidly into the commercial market. Intuitive had high hopes of marketing the da Vinci for cardiovascular surgery but, much like ZEUS, found its flush of success a bit more caudal than anticipated.

The Vattikuti Institute in Detroit, Michigan, was the first to document a process for what it called the Vattikuti Institute prostatectomy, which over time became commonly known as the robotic-assisted prostatectomy. The Vattikuti team reported favorable outcomes compared with the standard radical retropubic approach.32 The robotic-assisted option was also noted to be advantageous over laparoscopic prostatectomy, much to the relief of urologists.33 Although laparoscopic prostatectomy avoids a larger incision, it requires the anastomosis of the urethral stump to the bladder neck to be performed with inverted movements around a motion-amplifying fulcrum. This made the procedure difficult to learn and kept the open approach more attractive.34 With the da Vinci, patients undergoing prostatectomy had significantly less blood loss, less need for blood transfusion, lower mean pain scores, shorter hospital stays, and higher discharge hemoglobin levels than patients who underwent the comparable open procedure.32 Follow-up revealed that patients who underwent robotic-assisted prostatectomy were more likely to have an undetectable prostate-specific antigen level and urinary continence, and that they regained erections and resumed intercourse earlier than their open counterparts.32

In comparison, one of the main advantages of ZEUS was its experimental capacity for remote surgery, which the initial da Vinci lacked because it was connected directly by cable to the surgeon's console. The 2 devices also differed in structure and software. ZEUS mounted a pair of robotic arms to the OR table with the 2 most distal joints passive, allowing them to pivot easily for trocar alignment. In May 1999, Intuitive requested that the U.S. Patent Office declare interferences between SRI patents licensed to Intuitive and patents filed by Computer Motion. Although SRI had filed its patents on the disputed inventions 6 months before Computer Motion, further conflict soon arose. One year later, Computer Motion sued Intuitive Surgical for infringement of 9 separate patents. Intuitive responded by filing a Notice of Opposition against Computer Motion with the European Patent Office. The legal battle dragged on for 3 years until the 2 competitors merged in 2003.31 Shortly after, ZEUS was phased out of production, although many of its elements were integrated into later iterations of the da Vinci, such as the dual-console feature seen in the da Vinci Si. Computer Motion had begun working on a dual console under a National Institute of Standards and Technology Advanced Technology Program (NIST ATP) grant at the time of the merger, although Intuitive was the first to launch a product with an auxiliary console.

FURTHER DEVELOPMENTS

Around the same time, researchers Akhil Madhani, Gunter Niemeyer, and Ken Salisbury at the Massachusetts Institute of Technology (MIT) were developing a robotic manipulator specifically for minimally invasive surgery under contract with DARPA. Their first prototype, the Silver Falcon, was driven by the PHANToM haptic interface device created by Thomas Massie and Salisbury, also at MIT. The Silver Falcon had 6 total DOFs: 3 within the base positioner and another 3 from the wrist, plus the distal grasper. Problems included poor structural rigidity, inadequate grip strength for manipulating large needles, and reliance on counterbalancing for gravity compensation. Their next prototype, the Black Falcon, rectified these concerns while adding another DOF to the wrist and making the end effector detachable (although the authors note that it was not easily detachable, and tool exchange was notably tedious).35 Intuitive acquired rights to all but one of the patents MIT held on the project when MIT and the Department of Defense waived them.

Through the PHANToM, the Black Falcon provided force feedback from the operative manipulator to the surgeon. However, integrating haptics into a device for clinical applications raised several concerns. The MIT team found that for some tasks, such as suturing, force reflection was more of a hindrance than an assistance. The haptic technology of the time was not sensitive enough for operators to discern when the manipulators contacted soft tissue, and scaling up the force to make it perceptible only made the controllers fatiguing to manipulate. Encouragingly, the team found that soft-tissue deflection became visible before force became a factor. Similar concerns arose with force feedback in SRI's system, although the illusion of presence created by its system of mirrors and stereoscopic vision was more than sufficient to compensate for forgoing force feedback.35,36 Other intellectual property rights licensed to Intuitive include those from IBM and Heartport Inc.9,31

TELESURGERY APPLICATIONS

Although the early goals of surgical robotics were aimed at long-distance telesurgery, only a few such cases have actually occurred. ZEUS was the first commercially available surgical robot to complete a transatlantic surgery (“The Lindbergh” surgery), performed by Jacques Marescaux, MD, on September 7, 2001.37,38 After preparatory cases in porcine models, Dr. Marescaux performed a robotic minimally invasive cholecystectomy from New York City on his patient, a 68-year-old woman in Strasbourg, France.38 This was accomplished with an average latency of 155 ms over a direct point-to-point transatlantic cable connection, making it likely one of the most expensive surgical procedures ever performed, with telecommunications costs estimated at more than US$1 million. These events were historic, but media coverage was overshadowed by the terrorist attacks on September 11, 2001.

The year 2001 also saw the initiation of the world's first national telesurgery program in Canada, with the goal of transmitting from large tertiary hospitals to remote and rural medical centers.39–41 The Centre for Minimal Access Surgery group led by Mehran Anvari, MBBS, PhD, located at McMaster University and St. Joseph's Hospital in Ontario, Canada, both educated surgeons through telementoring and completed numerous successful surgeries over an Internet Protocol/Virtual Private Network (IP/VPN).40–43 Procedures completed by this collaborative effort include Nissen fundoplication, hemicolectomies, sigmoid colon resections, and others.42,44 The program demonstrated feasibility and effectiveness as a teaching tool, aided by a small average lag time of 150 to 200 ms. Dr. Anvari notes that while surgery may be possible with up to 200 ms of lag, at any greater lag the mismatch between visual cues and proprioception results in extreme difficulty and even nausea.45 In 2001, Computer Motion's SOCRATES, a system that could connect an OR for telementoring, received FDA approval.

These successes raised the creative question of whether surgical robotics could be applied in extreme environments. The National Oceanic and Atmospheric Administration (NOAA) provided such an environment through Aquarius, an undersea habitat off the coast of Florida. The National Aeronautics and Space Administration (NASA) Extreme Environment Mission Operations (NEEMO) program has conducted 3 missions focusing on the applications of surgical robotics. NEEMO 7 established that telementoring was achievable in extreme environments. During NEEMO 9, an impressive 18-day mission, SRI's M7 robot was deployed to the habitat and performed basic tasks under the control of Dr. Anvari in Ontario, Canada. The Aquarius team led by Timothy Broderick, MD, assembled the M7 and assisted in completing the objectives. One of the main conclusions from NEEMO 9 was that lag time would be truly detrimental, especially given the distances between Earth and potential space applications such as a manned mission to Mars.46,47 NEEMO 12 followed, using the M7 alongside RAVEN (developed by the University of Washington) to investigate the feasibility of surgeon-supervised robotic automation. The M7 demonstrated this in a live-stream feed to the American Telemedicine Association's 2007 annual meeting, using a robotic arm under ultrasound visualization to insert a needle into mimetic tissue.46 RAVEN has also been used to illustrate the feasibility of relaying a telesurgical signal through an unmanned airborne vehicle.48

The da Vinci followed with experiments in 2008, when 4 nephrectomies were completed in anesthetized swine. The surgeon was located in either Cincinnati, Ohio, or Denver, Colorado, while the porcine model was in a laboratory in Sunnyvale, California. The Denver to Sunnyvale surgeries were successful, with a round-trip delay of 450 ms. The lag doubled to 900 ms in the Cincinnati to Sunnyvale surgery, which covered nearly twice the distance at 2,400 miles and had multiple instances of visual packet loss.49 By comparison, Dr. Marescaux's earlier transatlantic robotic cholecystectomy with ZEUS spanned a round-trip distance of nearly 8,700 miles with a lag time of only 155 ms, but issues of patient safety and exorbitant cost remained.49 The telecommunications costs alone for that procedure exceeded $1 million and were “donated” by the French telecommunication company ACTEL.

During this period, the military renewed its interest in telesurgical applications of robotics. Trauma Pod envisioned using robotic arms from the da Vinci in conjunction with the Life Support for Trauma and Transport (LSTAT) litter for autonomous damage control medical care. The project, led by Dr. Broderick, who had replaced COL Satava at DARPA, was successfully demonstrated in 2007.50,51 Despite this initial success, Trauma Pod never moved into final animal trials, which would have included control of hemorrhage, airways, and intravenous line insertion, because of a large-scale command transition and funding complications from revised federal budgeting processes. The elimination of earmarks from the federal budget in 2007 prevented the Telemedicine and Advanced Technology Research Center (TATRC) from obtaining the funding proposed by Senator Ted Stevens of Alaska.

CONCLUSION

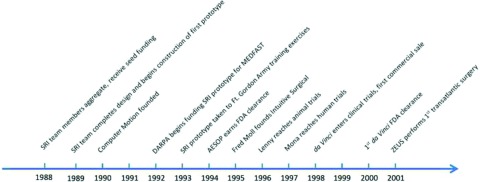

Progress to FDA approval for such a complex system takes an average of 20 years, yet it was achieved in little more than a decade (Figure 10). The advancement seen to date has been truly remarkable. While the history of robotics has been well documented, contributions from living primary sources have added crucial information toward a definitive record. However, the evolution of robotic surgery is far from over, with multiple potential competitors on the horizon. Not only will there be new tools, but surgeons are continually adapting robotics to new procedures and specialties. The developments in patient outcomes, surgical ingenuity, and creative technology will be well worth watching.

Figure 10.

Timeline of surgical robotics development.

Acknowledgments

We would like to thank Mehran Anvari, MD, Timothy Broderick, MD, Simon DiMaio, PhD, and Yulun Wang, PhD, for sharing their contributions to the field.

Contributor Information

Evalyn I. George, Madigan Army Medical Center, Department of Surgery, Tacoma, WA, USA.

COL Timothy C. Brand, Madigan Army Medical Center, Department of Surgery, Tacoma, WA, USA.

COL (Ret) Anthony LaPorta, Rocky Vista University, School of Medicine, Parker, CO, USA.

Jacques Marescaux, Research Institute against Digestive Cancer, Image Guided Surgery, Strasbourg, France.

COL (Ret) Richard M. Satava, University of Washington, School of Medicine, Seattle, WA, USA.

References

- 1. Corliss WR, Johnson EG. Teleoperators and Human Augmentation. An AEC-NASA Technology Survey. 1967. Washington, DC: Office of Technology Utilization, National Aeronautics and Space Administration.

- 2. Paul HA, Bargar WL, Mittlestadt B, et al. Development of a surgical robot for cementless total hip arthroplasty. Clin Orthop Relat Res. 1992;285:57–66.

- 3. Davies BL, Ng W, Hibberd RD. Prostatic resection: an example of safe robotic surgery. Robotica. 1993;11:561–566.

- 4. Harris S, Arambula-Cosio F, Mei Q, et al. The Probot—an active robot for prostate resection. Proceedings of the Institution of Mechanical Engineers, Part H. 1997;211:317–325.

- 5. Zajtchuk R, Grande C. Part IV. Surgical Combat Casualty Care: Anesthesia and Perioperative Care of the Combat Casualty, Vol 1. Textbook of Military Medicine. Washington, DC: Office of The Surgeon General at TMM Publications; 1995.

- 6. Bellamy RF, Zajtchuk R, Buescher TM, et al. Part I. Warfare, Weaponry, and the Casualty: Conventional Warfare: Ballistic, Blast, and Burn Injuries, Vol 5. Textbook of Military Medicine. Washington, DC: Office of the Surgeon General at TMM Publications; 1991.

- 7. Rotondo MF, Schwab CW, McGonigal MD, et al. “Damage control”: an approach for improved survival in exsanguinating penetrating abdominal injury. J Trauma. 1993;35:375–382, discussion 382–383.

- 8. Satava RM. Robotic surgery: from past to future: a personal journey. Surg Clin North Am. 2003;83:1491–1500, xii.

- 9. Satava RM. Surgical robotics: the early chronicles: a personal historical perspective. Surg Laparosc Endosc Percutan Tech. 2002;12:6–16.

- 10. Zimmerman TG, Lanier J, Blanchard C, et al. A hand gesture interface device. ACM SIGCHI Bull. 1987;18(4):189–192.

- 11. Jourdan IC, Dutson E, Garcia A, et al. Stereoscopic vision provides a significant advantage for precision robotic laparoscopy. Br J Surg. 2004;91:879–885.

- 12. Jensen J. Development of a Telepresence Surgery System. US Army Medical Research and Materiel Command Fort Detrick, MD: SRI International Menlo Park, CA; 1999.

- 13. Green PS, Hill JW, Jensen JF, Shah A. Telepresence surgery. IEEE Eng Med Biol. 1995;May-Jun.

- 14. Jensen JF, Hill JW. Surgical manipulator for a telerobotic system. Google Patents, CA2222150A1, 1998.

- 15. Green PS. Surgical system. Google Patents, US6788999B2, 2005.

- 16. Green PS, Satava R, Hill J, Simon I. Telepresence: advanced teleoperator technology for minimally invasive surgery. Surg Endosc. 1992;6:91.

- 17. Satava R, Jenkins D, Jones S. Advanced Biomedical Technology Program. Arlington, VA: Defense Advanced Research Projects Agency; 2000.

- 18. Bowersox JC, Shah A, Jensen J, et al. Vascular applications of telepresence surgery: initial feasibility studies in swine. J Vasc Surg. 1996;23:281–287.

- 19. Bowersox JC, Cordts PR, LaPorta AJ. Use of an intuitive telemanipulator system for remote trauma surgery: an experimental study. J Am Coll Surg. 1998;186:615–621.

- 20. Bowersox JC, Cornum RL. Remote operative urology using a surgical telemanipulator system: preliminary observations. Urology. 1998;52:17–22.

- 21. Hill JW, Jensen JF. Telepresence technology in medicine: principles and applications. Proc IEEE. 1998;86:569–580.

- 22. Satava RM, Jones SB. Military applications of telemedicine and advanced medical technologies. Army Med Dept J. 1997;16–21.

- 23. Brand T. A Chronicle of Robotic Assisted Surgery and the Department of Defense: From DARPA to the MTF. 2017. Presentation given at Western Urologic Forum in Vancouver BC, March 2018.

- 24. DiMaio S, Hanuschik M, Kreaden U. The da Vinci Surgical System. In: Surgical Robotics. New York, NY: Springer; 2011:199–217.

- 25. Himpens J, Leman G, Cadiere G. Telesurgical laparoscopic cholecystectomy. Surg Endosc. 1998;12.

- 26. Himpens J. Surgery in space: the future of robotic telesurgery (Haidegger T, Szandor J, Benyo Z. Surg Endosc. 2011;25(3):681–690). Surg Endosc. 2012;26:286.

- 27. Himpens J. My experience performing the first telesurgical procedure in the world. Bariatric Times. 2016. Retrieved from http://bariatrictimes.com/my-experience-performing-the-first-telesurgical-procedure-in-the-world/.

- 28. Cadiere G, Himpens J, Vertruyen M, et al. The world's first obesity surgery performed by a surgeon at a distance. Obes Surg. 1999;9:206–209.

- 29. Salisbury JK Jr. The heart of microsurgery. Mech Eng. 1998;120:46.

- 30. Cadiere GB, Himpens J, Germay O, et al. Feasibility of robotic laparoscopic surgery: 146 cases. World J Surg. 2001;25:1467–1477.

- 31. Intuitive Surgical Inc. United States Securities and Exchange Commission Form 10-K. 2001. Sunnyvale, California.

- 32. Tewari A, Srivasatava A, Menon M. A prospective comparison of radical retropubic and robot-assisted prostatectomy: experience in one institution. BJU Int. 2003;92:205–210.

- 33. Menon M, Shrivastava A, Tewari A, et al. Laparoscopic and robot assisted radical prostatectomy: establishment of a structured program and preliminary analysis of outcomes. J Urol. 2002;168:945–949.

- 34. Schuessler WW, Schulam PG, Clayman RV, et al. Laparoscopic radical prostatectomy: initial short-term experience. Urology. 1997;50:854–857.

- 35. Madhani AJ, Niemeyer G, Salisbury JK. The black falcon: a teleoperated surgical instrument for minimally invasive surgery. In: Proceedings of the 1998 IEEE/RSJ International Conference on Intelligent Robots and Systems. Victoria, BC; 1998:936–944.

- 36. Salisbury JK Jr; Massachusetts Institute of Technology, Cambridge, Artificial Intelligence Lab. Look and Feel: Haptic Interaction for Biomedicine. DTIC Document ADA286842. Fort Detrick, MD: U.S. Army Medical Research and Materiel Command; 1997.

- 37. Marescaux J, Leroy J, Gagner M, et al. Transatlantic robot-assisted telesurgery. Nature. 2001;413:379–380.

- 38. Marescaux J, Leroy J, Rubino F, et al. Transcontinental robot-assisted remote telesurgery: feasibility and potential applications. Ann Surg. 2002;235:487–492.

- 39. Anvari M. Remote telepresence surgery: the Canadian experience. Surg Endosc. 2007;21:537–541.

- 40. Anvari M, McKinley C, Stein H. Establishment of the world's first telerobotic remote surgical service: for provision of advanced laparoscopic surgery in a rural community. Ann Surg. 2005;241:460–464.

- 41. Anvari M. Reaching the rural world through robotic surgical programs. Eur Surg. 2005;37:284–292.

- 42. Anvari M. Telesurgery: remote knowledge translation in clinical surgery. World J Surg. 2007;31:1545–1550.

- 43. Anvari M. Robot-assisted remote telepresence surgery. Semin Laparosc Surg. 2004;11:123–128.

- 44. Anvari M. Remote telepresence surgery: the Canadian experience. Surg Endosc. 2007;21:537–541.

- 45. Anvari M, Broderick T, Stein H, et al. The impact of latency on surgical precision and task completion during robotic-assisted remote telepresence surgery. Comput Aided Surg. 2005;10:93–99.

- 46. Doarn CR, Anvari M, Low T, et al. Evaluation of teleoperated surgical robots in an enclosed undersea environment. Telemed e-Health. 2009;15:325–335.

- 47. Xu S, Perez M, Yang K, et al. Determination of the latency effects on surgical performance and the acceptable latency levels in telesurgery using the dV-Trainer® simulator. Surg Endosc. 2014;28:2569–2576.

- 48. Harnett BM, Doarn CR, Rosen J, et al. Evaluation of unmanned airborne vehicles and mobile robotic telesurgery in an extreme environment. Telemed e-Health. 2008;14:539–544.

- 49. Sterbis JR, Hanly EJ, Herman BC, et al. Transcontinental telesurgical nephrectomy using the da Vinci robot in a porcine model. Urology. 2008;71:971–973.

- 50. Gilbert GR, Beebe MK. United States Department of Defense Research in Robotic Unmanned Systems for Combat Casualty Care. Army Medical Research and Materiel Command Fort Detrick, MD: Telemedicine and Advanced Tech Research Center; 2010.

- 51. Broderick TJ, Carignan CR; University of Cincinnati, OH. Distributed Automated Medical Robotics to Improve Medical Field Operations. Cincinnati, OH: University of Cincinnati; 2010. ADA581925. Fort Belvoir, VA: Defense Technical Information Center.