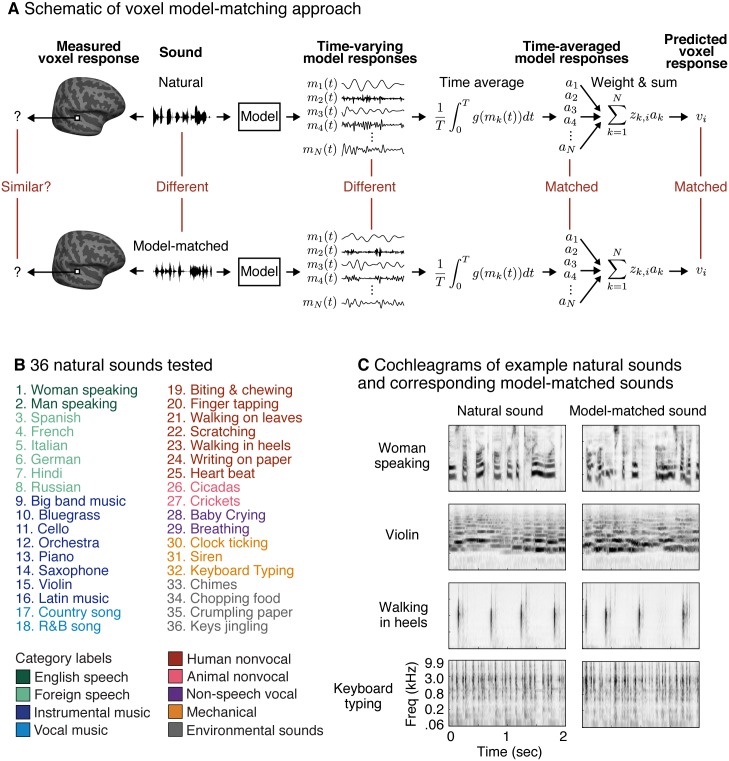

Fig 2. Model-matching methodology and experimental stimuli.

(A) The logic of the model-matching procedure, as applied to fMRI. The models we consider are defined by the time-varying response of a set of model features ($m_k(t)$) to a sound (as in the auditory model shown in Fig 1A). Because fMRI is thought to pool activity across neurons and time, we modeled fMRI voxel responses as weighted sums of time-averaged model responses (Eqs 1 and 2, with $a_k$ corresponding to the time-averaged model responses and $z_{k,i}$ to the weight of model feature $k$ in voxel $i$). Model-matched sounds were designed to produce the same time-averaged response for all of the features in the model (all $a_k$ matched) and thus to yield the same voxel response (for voxels containing neurons that can be approximated by the model features), regardless of how these time-averaged activities are weighted. The temporal response pattern of the model features was otherwise unconstrained. As a consequence, the model-matched sounds were distinct from the natural sounds to which they were matched. (B) Stimuli were derived from a set of 36 natural sounds. The sounds were selected to produce high response variance in auditory cortical voxels, based on the results of a prior study [45]. Font color denotes membership in one of nine semantic categories (as determined by human listeners [45]). (C) Cochleagrams are shown for four natural and model-matched sounds constrained by the spectrotemporal modulation model shown in Fig 1A.
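The logic of panel A can be illustrated with a small numerical sketch. It assumes the voxel response takes the form of a weighted sum of time-averaged feature responses, as the caption describes. The feature time courses, the weights, and the function names `time_average` and `voxel_response` are hypothetical and chosen only for illustration. The "matched" stimulus here is produced by shuffling each feature's time course, which is a toy stand-in that preserves the time averages and is not the synthesis procedure used in the study.

```python
import math
import random

def time_average(features):
    """Return the time-averaged response a_k for each feature time course m_k(t)."""
    return [sum(m_k) / len(m_k) for m_k in features]

def voxel_response(weights, averages):
    """Weighted sum over features for one voxel: sum_k z_{k,i} * a_k."""
    return sum(z * a for z, a in zip(weights, averages))

rng = random.Random(0)
n_features, n_timepoints, n_voxels = 5, 200, 3

# Hypothetical feature responses m_k(t) to a "natural" sound.
natural = [[rng.random() for _ in range(n_timepoints)] for _ in range(n_features)]

# Toy "matched" stimulus (assumption): shuffle each feature's time course
# independently. The temporal pattern changes, the time average does not.
matched = [rng.sample(m_k, len(m_k)) for m_k in natural]

# Arbitrary weights z_{k,i}, one list of feature weights per voxel.
weights = [[rng.gauss(0, 1) for _ in range(n_features)] for _ in range(n_voxels)]

a_natural = time_average(natural)
a_matched = time_average(matched)

v_natural = [voxel_response(w, a_natural) for w in weights]
v_matched = [voxel_response(w, a_matched) for w in weights]

# The two stimuli differ in time but yield the same modeled voxel responses
# (up to floating-point rounding), whatever the weights are.
same_response = all(
    math.isclose(x, y, abs_tol=1e-9) for x, y in zip(v_natural, v_matched)
)
print("time courses differ:", natural != matched)
print("voxel responses match:", same_response)
```

Because the equality holds for any choice of weights, the prediction can be tested in a voxel without first fitting that voxel's weights.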