Abstract

Argo floats measure seawater temperature and salinity in the upper 2000 m of the global ocean. Statistical analysis of the resulting spatio-temporal dataset is challenging owing to its non-stationary structure and large size. We propose mapping these data using locally stationary Gaussian process regression where covariance parameter estimation and spatio-temporal prediction are carried out in a moving-window fashion. This yields computationally tractable non-stationary anomaly fields without the need to explicitly model the non-stationary covariance structure. We also investigate Student t-distributed fine-scale variation as a means to account for non-Gaussian heavy tails in ocean temperature data. Cross-validation studies comparing the proposed approach with the existing state of the art demonstrate clear improvements in point predictions and show that accounting for the non-stationarity and non-Gaussianity is crucial for obtaining well-calibrated uncertainties. This approach also provides data-driven local estimates of the spatial and temporal dependence scales for the global ocean, which are of scientific interest in their own right.

Keywords: moving-window Gaussian process regression, local kriging, non-stationarity, non-Gaussianity, climatology, physical oceanography

1. Introduction

The subsurface open ocean has historically been one of the least studied places on Earth owing to a lack of observational data at fine enough spatial and temporal resolutions. That changed dramatically soon after the turn of the century with the introduction of the Argo array of profiling floats. Argo is a collection of nearly 4000 autonomous floats that measure temperature and salinity in the upper 2000 m of the ocean. The array's nearly uniform 3° × 3° × 10 days sampling of the global ocean has enabled oceanographers to study the subsurface ocean with unprecedented accuracy and on an unprecedented scale. Argo data have been used, for example, to quantify global changes in ocean heat content [1], to study ocean circulation [2], mesoscale eddies [3], internal waves [4] and tropical cyclones [5], and to improve climate model predictions [6]. Argo has now become the primary source of subsurface temperature and salinity data for these and hundreds of other studies of ocean climate and dynamics.

A significant portion of the scientific results from Argo relies on spatially and temporally interpolated temperature and salinity maps, such as those in [7–9]. These data products transform the irregularly located Argo observations onto a fine regular grid, which facilitates further scientific analysis. In a sense, the goal is to ‘fill in the gaps’ between the in situ Argo observations, such as those shown in figure 1, and to turn them into a continuously interpolated field in both space and time. To achieve this, a number of statistical modelling assumptions need to be made, and the resulting interpolated maps, along with their uncertainties, may be sensitive to these choices. Given the unique nature of Argo data and scientists' reliance on the gridded maps, it is of utmost importance to produce the interpolations using the most appropriate statistical techniques and to rigorously understand their performance and limitations.

Figure 1.

Argo temperature data at 300 dbar (≈ 300 m) for February 2012. Argo provides in situ temperature and salinity observations for the upper 2000 m of the global ocean. The statistical question studied in this work is how to interpolate these irregularly sampled data onto a dense regular grid.

From a statistical perspective, Argo observations constitute a fascinatingly rich geostatistical dataset. There is a huge volume of data (nearly 2 million profiles, each having between 50 and 1000 vertical observations, have been collected to date), the data are non-stationary in both their mean and covariance structure and they exhibit heavy tails and other non-Gaussian features. Argo is also a rare example of a truly four-dimensional (3 × space + time) in situ observational dataset. Standard approaches to parameter estimation and interpolation for spatio-temporal random fields do not easily scale up to datasets of this size since the number of required computations grows cubically with the number of observations. Furthermore, in order to capture the full complexity of these data, there is a need to develop models that go beyond the usual assumptions of stationarity and Gaussianity.

In this paper, we propose a statistical framework for interpolating Argo data that is aimed at addressing both the computational issues caused by the size of the dataset and the modelling challenges caused by the non-stationarity of these data. The approach is based on the simple idea that if a prediction is desired at the spatio-temporal location (x*, t*), where x* = [x*lat, x*lon]ᵀ is the spatial location in degrees of latitude and longitude and t* is time, then the observations that are close to (x*, t*) in (x, t) space should be the most informative for making the prediction. (Following standard statistical terminology, we use the term prediction here and throughout to refer to interpolation of the unknown values of the random field.) Furthermore, while a simple exploratory inspection of Argo data makes clear that a global random field model for the ocean would need to be non-stationary, it is reasonable to assume that the data can be modelled locally as a stationary random field. (Assuming that the mean has been successfully removed, a spatio-temporal random field f(x, t) is said to be stationary if the covariance k(x1, t1, x2, t2) = Cov(f(x1, t1), f(x2, t2)) is a function of (x1 − x2, t1 − t2) only, that is, k(x1, t1, x2, t2) = k(x1 − x2, t1 − t2). In other words, the covariance depends only on the difference between the two spatio-temporal locations and not on where in the ocean these locations are. When this is not the case, the field is said to be non-stationary.) We combine these two ideas by considering only data within a small spatio-temporal neighbourhood of (x*, t*) and by assuming that this subset of data can be modelled as a stationary random field. We use the data within the neighbourhood first to estimate the unknown parameters of the random field model by maximum likelihood and then to perform the interpolation at (x*, t*). As proposed by Haas [10,11], we use the neighbourhoods in a moving-window fashion: when moving to the next grid point, the window is re-centred and the parameters are re-estimated. This leads to data-driven, spatially varying estimates of the spatio-temporal dependence structure and provides gridded interpolations that reflect the non-stationarity of the underlying random field. The approach is computationally efficient because it considers only a subset of the full dataset at a time and because the computations across the grid points can be fully parallelized. In a line of work described in [12–15], a closely related moving-window method has been developed and successfully applied to mapping remote sensing data.

The Roemmich–Gilson (RG) climatology (along with the associated anomalies) [7] is one of the more popular gridded Argo data products. Roemmich & Gilson [7] first estimate the mean field using a weighted local regression fit to several years of Argo data and then perform kriging [16,17] (also known as optimal interpolation [18] or objective analysis/mapping [19] and closely related to Gaussian process (GP) regression [20]) on the mean-subtracted monthly residuals to obtain the interpolated anomaly fields. In this work, we use the RG mean field but improve the modelling of the anomalies in three important ways: first, we include time in the interpolation; second, we use data-driven local estimates of the non-stationary covariance structure as described above; and third, we consider Student t-distributed fine-scale variation (the so-called nugget effect) in order to account for non-Gaussian heavy tails in the data (that is, large-magnitude observations occurring more often than a Gaussian distribution would predict). We investigate the point prediction and uncertainty quantification performance of the proposed approach using cross-validation studies. We demonstrate that adding the temporal component to the mapping leads to major performance improvements, while the locally estimated covariance parameters and the Student t-distributed nugget effect are crucial for obtaining reasonable uncertainties. The uncertainty quantification part is particularly important since the RG data product does not currently provide any uncertainty information, presumably because of challenges in modelling the non-stationary and non-Gaussian features of the data.

It is worth highlighting that one of the key differences between this work and most previous Argo maps (see §§2a,b for a literature review) is our use of data-driven estimates of the covariance function parameters obtained from Argo data themselves. Using the same data both to estimate the covariance parameters and to perform the mapping is standard in the relevant statistics literature [16,17,20,21], but so far the oceanographic community has largely not adopted these ideas (a notable exception is the work in [2,22], where ocean velocity fields are mapped based on data-driven variogram fits to Argo data). Indeed, in many Argo data products, the procedure for choosing the covariance parameters is best described as an informed guess. The covariance structure is typically motivated by a qualitative understanding of ocean dynamics; for example, the spatial length scales are typically increased near the Equator. But the quantitative details, such as which specific scales are used and how much they are varied, are usually decided in an ad hoc manner, with limited justification. In some cases, covariance length scales are set equal to the 3° Argo sampling resolution [23,24]. This seems statistically unjustified, since the length scales should reflect the dependence structure of the underlying physical field and not the sampling resolution of the observing system. In this work, we avoid this kind of arbitrariness by fitting the covariance parameters by maximum likelihood to several years of Argo data, using local moving windows as described above. The estimated covariance parameters are shown to exhibit physically reasonable spatial patterns and can be of scientific interest in their own right, as they contain information about ocean dynamics in the different regions sampled by Argo. The data-driven covariance estimates are shown to improve both the point predictions and the uncertainties in comparison with the RG covariance, with the improvement in uncertainty quantification being particularly substantial.

In the present work, we focus primarily on interpolating Argo temperature anomalies, but similar techniques can also be developed for the salinity fields. The rest of this paper is structured as follows: §2 provides an overview of the existing Argo data products with an emphasis on the RG climatology. Section 3 describes the proposed locally stationary spatio-temporal interpolation method. Section 4 applies the new approach to Argo data and studies its performance in terms of point predictions, uncertainty quantification and the estimated model parameters. Section 5 concludes and discusses directions for future work. The electronic supplementary material provides further information and results. Readers unfamiliar with Argo may wish to consult §1 of the electronic supplementary material for an overview of Argo floats and data.

2. Overview of previous Argo data products

(a). Roemmich–Gilson climatology

The RG climatology and its anomalies [7,25] are constructed by first estimating a seasonally varying mean field and then performing kriging on the mean-subtracted monthly residuals. The vertical dimension is handled by binning the profiles into 58 pressure bins, whose sizes increase with depth. Each binned value is calculated so that it represents an average over the pressure bin (J. Gilson 2017, personal communication). The mean field is estimated using three adjacent pressure levels, but kriging for the anomalies is carried out using data from only one pressure level at a time.

The RG mean field is a weighted local least-squares spatio-temporal regression fit that is carried out separately for each latitude–longitude grid point and each pressure level. The local regression function is (J. Gilson 2016, personal communication)

| 2.1 |

where xlat is latitude, xlon is longitude, z is pressure and t is time in yeardays. This function is fitted to 3 × 12 × 100 nearest neighbours, where the factors refer to the three pressure levels and 12 calendar months. The nearest neighbours are found across the entire Argo dataset and are given weights according to their horizontal distance from the grid point. The horizontal distance metric is

| 2.2 |

where xi = [xlat,i, xlon,i]ᵀ, Δxlat is the meridional distance in kilometres, Δxlon is the zonal distance in kilometres (converted from degrees to kilometres using the midpoint latitude) and Pen(x1, x2) is a penalty term for crossing ocean depth contours (see [7] for details). Once fitted, the regression function is evaluated at the grid point at the midpoint of each month to produce the monthly mean-field estimates for that latitude, longitude and pressure.
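The degree-to-kilometre conversion described above might be implemented as follows. This is a sketch under stated assumptions: a spherical Earth of radius 6371 km, longitude differences wrapped at the dateline, and no depth-contour penalty Pen(x1, x2). The exact constants used by RG are not given here, and the function name is illustrative.

```python
import math

# Assumption: spherical Earth with mean radius 6371 km (not specified in the text).
EARTH_RADIUS_KM = 6371.0
KM_PER_DEG = math.pi * EARTH_RADIUS_KM / 180.0  # about 111.19 km per degree

def horizontal_offsets_km(x1, x2):
    """Meridional and zonal offsets (km) between x1 = (lat, lon) and x2 = (lat, lon) in degrees.

    The zonal offset uses the cosine of the midpoint latitude. Longitude
    differences are wrapped into [-180, 180) so that points on either side
    of the dateline are treated as neighbours. Pen(x1, x2) is not included.
    """
    lat1, lon1 = x1
    lat2, lon2 = x2
    dlon_deg = (lon1 - lon2 + 180.0) % 360.0 - 180.0
    mid_lat = math.radians(0.5 * (lat1 + lat2))
    dlat_km = (lat1 - lat2) * KM_PER_DEG
    dlon_km = dlon_deg * KM_PER_DEG * math.cos(mid_lat)
    return dlat_km, dlon_km

# One degree of longitude shrinks from about 111.2 km at the Equator to about 55.6 km at 60 degrees.
equator = horizontal_offsets_km((0.0, 1.0), (0.0, 0.0))
sixty = horizontal_offsets_km((60.0, 1.0), (60.0, 0.0))
```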

The anomalies are computed one month at a time. Here the crucial modelling choice concerns the covariance structure of those fields. The RG covariance function is

| 2.3 |

where dRG,a(x1, x2) is the same distance metric as in equation (2.2) except that Δxlon is replaced by a((xlat,1 + xlat,2)/2) · Δxlon, where [7,25]

| 2.4 |

Using dRG,a(x1, x2) instead of dRG(x1, x2) has the effect of elongating the zonal dependence in the tropics. The result is a non-stationary covariance function whose zonal range depends on latitude. However, the meridional range is kept constant, and the same covariance function is used at all longitudes, all pressure levels, all seasons and for both the temperature and the salinity anomalies. The noise-to-signal variance ratio (the ratio σ²/ϕ of the nugget variance to the GP variance, in the terminology and notation of §3) is set to 0.15 throughout the global ocean.

The functional form and the parameter values in equation (2.3) are motivated by the observed empirical correlation in the steric height anomaly (essentially a vertical integral of the density anomaly; see §7.6.2 in [26]) in Argo and satellite altimetry data (see fig. 2.2 in [7]). The parameter values are chosen by a graphical comparison of the correlation functions instead of a formal estimation procedure, such as maximum likelihood or weighted least squares. Owing to atmospheric interaction, the length scales are expected to be longer in the mixed layer than in the deeper ocean. Since the RG covariance is chosen based on a vertically integrated quantity, it is likely that the length scales chosen this way are too short near the surface and too long at larger depths.

An important limitation of the RG climatology is that it does not provide uncertainty estimates. In principle, the formal kriging variance could be used to provide Gaussian prediction intervals, but this would require specifying the proportionality constant in equation (2.3), which cancels out of the kriging point predictions. Even if this proportionality constant were provided, the uncertainties would be unlikely to be reliable, given that the RG covariance does not vary with pressure and uses a fixed noise-to-signal variance ratio. Another limitation is that the RG covariance is a function of the spatial locations only and does not take the temporal dimension into account.
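The claim that the proportionality constant cancels out of the point predictions, but not out of the kriging variance, can be checked numerically. If both the signal variance and the nugget variance are proportional to the same constant ϕ (as with a fixed noise-to-signal ratio), the kriging weights do not depend on ϕ, whereas the kriging variance scales linearly with it. The sketch below uses an arbitrary exponential correlation shape on a one-dimensional coordinate as a hypothetical stand-in for (2.3).

```python
import numpy as np

def exp_corr(a, b, scale=2.0):
    """Stand-in correlation shape (hypothetical; not the RG function (2.3))."""
    return np.exp(-np.abs(np.subtract.outer(a, b)) / scale)

def krige(phi, ratio, x, y, x_star):
    """Kriging mean and variance of y* when the signal covariance is phi * corr
    and the nugget variance is ratio * phi (a fixed noise-to-signal ratio)."""
    C = phi * (exp_corr(x, x) + ratio * np.eye(len(x)))
    k_star = phi * exp_corr(x, np.array([x_star]))[:, 0]
    w = np.linalg.solve(C, k_star)              # kriging weights, independent of phi
    mean = w @ y
    var = phi * (1.0 + ratio) - w @ k_star      # includes the nugget of y*
    return mean, var

rng = np.random.default_rng(0)
x = np.sort(rng.uniform(0.0, 10.0, size=8))
y = rng.normal(size=8)
m1, v1 = krige(1.0, 0.15, x, y, 4.2)
m2, v2 = krige(7.5, 0.15, x, y, 4.2)
# m1 equals m2 up to rounding, whereas v2 equals 7.5 * v1.
```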

Gasparin et al. [27] improve the RG model by including time in the covariance and by adding non-stationarity in the meridional range parameter. They also briefly investigate the prediction uncertainties. However, their analysis is limited to the Equatorial Pacific, their covariance remains the same for all pressure levels and longitudes, and their covariance parameters are not based on formal statistical estimates. In this work, we go further and develop data-driven covariances for Argo temperature data that can vary as a function of latitude, longitude, pressure and season. Covariance estimates, anomaly maps and uncertainties are investigated in the global ocean at three exemplary pressure levels. The covariances include time, and non-stationarity is allowed in all covariance parameters, including the zonal, meridional and temporal range parameters.

(b). Other data products and analysis techniques

Besides the RG climatology, several other Argo-based data products have been produced. We give here a brief overview of some of these products and the underlying statistical methods. Our treatment is by no means exhaustive—a full list of gridded Argo data products can be found at http://www.argo.ucsd.edu/Gridded_fields.html.

One of the defining features of the MIMOC product [8] is that it is mapped on isopycnals (surfaces of constant density) instead of pressure surfaces. This approach may have distinct advantages in handling the vertical movement of water masses and in avoiding density inversions. Statistically, temperature and salinity maps on isopycnals are likely to be less non-stationary than on pressure surfaces. However, in order to enable conversion from density coordinates to pressure coordinates, one needs to provide maps of pressure on the isopycnals and those fields remain highly non-stationary. MIMOC also incorporates techniques for improving the mapping in areas of sharp fronts and varying bathymetry. The EN4 data product [9] provides uncertainty estimates and includes the vertical dimension in the covariance model, but its uncertainty quantification procedure seems statistically ad hoc, is only validated in the root-mean-square sense and shows signs of miscalibration below roughly 400 m. ISAS [23] and MOAA GPV [28] are further examples of kriging-based Argo data products. For most products, there is some effort to use physical data to justify the chosen covariance parameters but no formal statistical estimators of these parameters are used. An exception is the work of Gray & Riser [2,22], which uses a weighted least-squares variogram fit and an iteratively estimated mean field to map ocean velocity fields based on Argo data.

The above-mentioned products are all variants of kriging-based spatial or spatio-temporal interpolation. However, various other techniques are also available for analysing oceanographic data. LOESS regression has proved useful for estimating the mean field and the seasonal cycle from Argo [29]. This is the basis for the RG mean field as well as for the CARS2009 data product [30]. However, kriging is still needed for obtaining the monthly anomalies. Model-driven data assimilation is commonly used for assimilating Argo data into ocean reanalysis products; examples include ORAS5 [31], ECCO [32] and GODAS [33]. Reanalysis products may, however, have non-negligible biases owing to the assumed dynamical model and the intricacies of the data assimilation algorithm. Empirical orthogonal functions (EOFs; also known as principal component analysis to statisticians) are also often used in analysing oceanographic data and especially satellite observations. Since computing the EOFs requires repeated observations at the same spatial locations, ungridded Argo data cannot directly be used for deriving them. An alternative would be to define the leading EOFs using a simulation model followed by a fit to Argo data, but this would make the analysis dependent on the quality of the simulation and would likely oversmooth small-scale features.

3. Locally stationary interpolation of Argo data

This section describes the statistical methodology we propose for interpolating Argo temperature data. The approach is based on a locally stationary spatio-temporal Gaussian process regression model. Here a Gaussian process refers to a random function, whose values at any finite set of locations follow a multivariate Gaussian distribution (e.g. ch. 2 in [20]). We start by linearly interpolating the Argo temperature profiles to a given pressure level. We then subtract the seasonally varying RG mean field (see §§2a and 4a) and work with the residuals, which are assumed to have zero mean. Our goal is to use these residuals to produce gridded maps of temperature anomalies. Similar to the RG anomalies, our analysis is carried out separately for each pressure level.
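The first preprocessing step, interpolating a profile to a fixed pressure level and subtracting the mean field, might look as follows. The function name, the NaN convention for levels outside the sampled pressure range, and the assumption that the mean-field value is supplied already evaluated at the profile's position and time are our own illustrative choices, not details taken from the paper.

```python
import numpy as np

def residual_at_level(pressure_dbar, temperature, level_dbar, mean_at_level):
    """Interpolate one profile linearly to level_dbar and subtract the mean field value.

    mean_at_level is assumed to be the seasonally varying mean field already
    evaluated at this profile's location and time. Returns NaN if the level
    lies outside the sampled pressure range (no extrapolation).
    """
    p = np.asarray(pressure_dbar, dtype=float)
    temp = np.asarray(temperature, dtype=float)
    order = np.argsort(p)                 # np.interp requires increasing abscissae
    p, temp = p[order], temp[order]
    if not (p[0] <= level_dbar <= p[-1]):
        return np.nan
    return float(np.interp(level_dbar, p, temp)) - mean_at_level

# Toy profile: 12.0 degC at 200 dbar and 8.0 degC at 400 dbar give 10.0 degC at 300 dbar.
r = residual_at_level([10, 200, 400], [20.0, 12.0, 8.0], 300.0, mean_at_level=9.5)
```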

Let (x*, t*) with x* = [x*lat, x*lon]ᵀ be a space–time grid point at which a prediction is desired. We assume that within a small spatio-temporal neighbourhood around (x*, t*) the following model holds:

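$$
y_{i,j} = f_i(x_{i,j}, t_{i,j}) + \varepsilon_{i,j}, \qquad f_i \overset{\text{iid}}{\sim} \mathrm{GP}\big(0, k(x_1, t_1, x_2, t_2; \theta)\big), \tag{3.1}
$$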

where i = 1, …, n indexes years and j = 1, …, mi indexes the observations within the neighbourhood at the desired pressure level in the ith year, yi,j is the (i, j)th mean-subtracted temperature residual, xi,j = [xlat,i,j, xlon,i,j]ᵀ and ti,j are the location (in degrees of latitude and longitude) and time (in yeardays) of yi,j, and GP(0, k(x1, t1, x2, t2; θ)) denotes a zero-mean Gaussian process with a stationary space–time covariance function k(x1, t1, x2, t2; θ) = k(x1 − x2, t1 − t2; θ) depending on parameters θ. We use the anisotropic exponential space–time covariance function k(x1, t1, x2, t2; θ) = ϕ exp(−d(x1, t1, x2, t2)), where the GP variance ϕ > 0,

$$
d(x_1, t_1, x_2, t_2) = \sqrt{\frac{(x_{\mathrm{lat},1} - x_{\mathrm{lat},2})^2}{\theta_{\mathrm{lat}}^2} + \frac{(x_{\mathrm{lon},1} - x_{\mathrm{lon},2})^2}{\theta_{\mathrm{lon}}^2} + \frac{(t_1 - t_2)^2}{\theta_t^2}} \tag{3.2}
$$

and θlat, θlon and θt are positive range parameters. The term εi,j in (3.1) is called the nugget effect and is included in the model to capture fine-scale variation. It is independent of fi and, independently across observations, follows either εi,j ∼ N(0, σ²), where N(0, σ²) is the zero-mean Gaussian distribution with variance σ², or εi,j ∼ tν(σ²), where tν(σ²) is the scaled Student t-distribution with ν > 1 degrees of freedom and scale parameter σ > 0 (that is, Z ∼ tν(σ²) if and only if Z/σ ∼ tν, where tν is the Student t-distribution with ν degrees of freedom). The Student nugget provides a way to model non-Gaussian heavy tails in the observed data. Notice that, under this model, we obtain n independent realizations of the random field, one for each year, which facilitates estimating the model parameters (θ, σ²) or (θ, σ², ν).
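The covariance function (3.2) can be evaluated for whole sets of observations with a few broadcast operations. This is a minimal NumPy sketch under our own conventions: the argument names, the [lat, lon] column order and the wrapping of longitude differences at the dateline (which the text does not discuss) are assumptions. Passing theta_t = np.inf gives the purely spatial variant considered later in this section, since every scaled time difference then becomes zero.

```python
import numpy as np

def exp_spacetime_cov(X1, t1, X2, t2, phi, theta_lat, theta_lon, theta_t):
    """Anisotropic exponential space-time covariance k = phi * exp(-d), with d from (3.2).

    X1, X2: arrays of shape (n1, 2), (n2, 2) with columns [lat, lon] in degrees.
    t1, t2: arrays of shape (n1,), (n2,) with times in yeardays.
    theta_t = np.inf drops the temporal term, giving a purely spatial covariance.
    """
    X1, X2 = np.atleast_2d(X1).astype(float), np.atleast_2d(X2).astype(float)
    dlat = np.subtract.outer(X1[:, 0], X2[:, 0]) / theta_lat
    raw_dlon = np.subtract.outer(X1[:, 1], X2[:, 1])
    dlon = ((raw_dlon + 180.0) % 360.0 - 180.0) / theta_lon   # wrap at the dateline
    dt = np.subtract.outer(np.asarray(t1, float), np.asarray(t2, float)) / theta_t
    return phi * np.exp(-np.sqrt(dlat**2 + dlon**2 + dt**2))

rng = np.random.default_rng(1)
X = np.column_stack([rng.uniform(-10, 10, 30), rng.uniform(150, 170, 30)])
t = rng.uniform(0, 90, 30)
K = exp_spacetime_cov(X, t, X, t, phi=2.0, theta_lat=3.0, theta_lon=6.0, theta_t=10.0)
K_spatial = exp_spacetime_cov(X, t, X, t, 2.0, 3.0, 6.0, np.inf)
```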

We employ model (3.1) in a moving-window fashion: for each grid point (x*, t*), we use data within the local neighbourhood to estimate the model parameters and to predict y*i = fi(x*, t*) + ε*i. When we move to the next grid point, we re-centre the window around the new location and re-estimate the model parameters. The overlap of the nearby windows results in smoothly varying local estimates of the model parameters. Such a moving-window approach to GP regression was proposed by Haas [10,11]. Figure 2 illustrates the method.
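The moving-window loop can be sketched as follows. This is a simplified illustration rather than the authors' implementation: the window is a ±10° box in latitude and longitude (matching the 20° × 20° windows used in §4a), time is ignored, and the hypothetical hook fit_predict stands in for the local maximum-likelihood fit followed by kriging. The demo plugs in a local standard deviation so that the output visibly tracks a non-stationary synthetic field.

```python
import numpy as np

def moving_window_map(grid_lat, grid_lon, obs_lat, obs_lon, obs_val,
                      fit_predict, half_width=10.0, min_obs=10):
    """Evaluate a local fit-and-predict routine at every grid point.

    For each grid point, observations within +/- half_width degrees in latitude
    and longitude are selected and passed to fit_predict(lat, lon, val, lat0, lon0),
    which is expected to re-estimate the local model from those data and return
    the prediction at (lat0, lon0). Points with fewer than min_obs observations
    in the window are left as NaN.
    """
    obs_lat, obs_lon, obs_val = (np.asarray(a, dtype=float) for a in (obs_lat, obs_lon, obs_val))
    out = np.full((len(grid_lat), len(grid_lon)), np.nan)
    for a, lat0 in enumerate(grid_lat):
        for b, lon0 in enumerate(grid_lon):
            dlon = (obs_lon - lon0 + 180.0) % 360.0 - 180.0   # wrap at the dateline
            inside = (np.abs(obs_lat - lat0) <= half_width) & (np.abs(dlon) <= half_width)
            if inside.sum() >= min_obs:
                out[a, b] = fit_predict(obs_lat[inside], obs_lon[inside],
                                        obs_val[inside], lat0, lon0)
    return out

def local_std(lat, lon, val, lat0, lon0):
    """Hypothetical stand-in for the local ML fit: returns the local standard deviation."""
    return float(np.std(val))

# Synthetic field whose variability is three times larger north of the Equator.
rng = np.random.default_rng(2)
lat = rng.uniform(-60, 60, 20_000)
lon = rng.uniform(-180, 180, 20_000)
val = rng.normal(scale=np.where(lat > 0, 3.0, 1.0))
std_map = moving_window_map([-30.0, 30.0], [0.0, 179.0], lat, lon, val, local_std)
```

Because each grid point is processed independently, the outer loops could be distributed across workers without changing the results.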

Figure 2.

Illustration of moving-window GP regression. To make a prediction at the grid point (x*1, t*), data in its local neighbourhood are used first to estimate the covariance parameters and then to make the prediction. When moving to the next grid point (x*2, t*), the window moves along, and both the parameter estimates and the prediction are computed from data within the new local neighbourhood. For ease of graphical presentation, the time axis is suppressed here, but the same concept applies in the full spatio-temporal space.

This approach addresses both the modelling and the computational challenges in Argo data analysis. Instead of having to specify a global non-stationary covariance model, the moving-window approach enables us to handle the non-stationarity in Argo data using a collection of locally fitted stationary GP models. Computationally, the approach replaces the inversion of one large covariance matrix by many inversions of smaller covariance matrices. This results in significant computational gains, especially since the computations across the grid points are embarrassingly parallel. More concretely, let n be the number of years and m the typical number of global Argo observations per year. Then, to leading order, the computing time of a global GP regression fit would be n · C · m³, where C is a constant. When each moving window contains a fraction f of the data, there are N grid points and the computations are parallelized over p threads, the computing time of the moving-window approach is 1/p · N · n · C · (fm)³ = 1/p · N · f³ · n · C · m³. Rough values for the analysis carried out in this paper are f = 0.01, N = 30 000 and p = 30, leading to a speed-up factor of (1/p · N · f³)⁻¹ = 1000.
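The arithmetic behind the speed-up factor can be checked directly using the leading-order cost model stated above; the function names are illustrative. The second computation confirms that n, C and m cancel for arbitrary values.

```python
def global_cost(n, C, m):
    """Leading-order cost of one global GP fit: n * C * m**3."""
    return n * C * m**3

def moving_window_cost(n, C, m, f, N, p):
    """Leading-order cost of N local fits on p threads: (1/p) * N * n * C * (f * m)**3."""
    return N * n * C * (f * m)**3 / p

def speedup(f, N, p):
    """Ratio of the two costs; n, C and m cancel, leaving 1 / ((1/p) * N * f**3)."""
    return 1.0 / (N * f**3 / p)

factor = speedup(f=0.01, N=30_000, p=30)   # approximately 1000
# The cancellation can be checked with arbitrary values of n, C and m:
ratio = global_cost(10, 2.5, 1e5) / moving_window_cost(10, 2.5, 1e5, 0.01, 30_000, 30)
```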

In model (3.1), we understand the nugget effect εi,j to primarily reflect ocean variability at spatio-temporal scales smaller than the 3° × 3° × 10 days Argo sampling resolution. The fitted nugget may also capture sensor noise, but we consider this component to be negligibly small in comparison with fine-scale ocean variability. This interpretation of the nugget leads us to make predictions for y*i = fi(x*, t*) + ε*i instead of fi(x*, t*), which widens the prediction intervals by an amount corresponding to the fine-scale component ε*i. This is a sensible approach for temperature data, since fine-scale ocean temperature variability is orders of magnitude larger than the noise level of the Argo temperature sensors. For salinity, especially at large depths, it would be appropriate to model the measurement process more carefully, but this is outside the scope of the present work.

Some previous works [8,22–24,27] on space–time modelling of Argo data use separable covariance models in which the covariance factorizes into a function of space and a function of time. Such covariance models do not allow the dependence in space to interact with the dependence in time (see, for example, §6.1.3 in [21]) and hence imply that large-scale spatial features decay in time just as quickly as small-scale features, which is unrealistic for most real-world processes. Model (3.2), in contrast, is non-separable and implies that large-scale spatial features decay more slowly than small-scale features, as one would expect.
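The non-separability of (3.2) can be verified numerically. For any separable covariance k(h, u) = ks(h) kt(u), the quantity k(h, u) k(0, 0) − k(h, 0) k(0, u) vanishes identically, and the temporal correlation k(h, u)/k(h, 0) is the same at every spatial lag h. The sketch below, with arbitrary illustrative parameter values, shows that neither property holds for (3.2): temporal correlation is stronger at larger spatial lags, which is one way to see the slower temporal decay of large-scale features.

```python
import numpy as np

def k_exp(h_lat, h_lon, u, phi=1.0, theta_lat=3.0, theta_lon=6.0, theta_t=10.0):
    """Covariance (3.2) as a function of the lags (degrees, degrees, days).
    The parameter values are arbitrary illustrations."""
    return phi * np.exp(-np.sqrt((h_lat / theta_lat)**2 + (h_lon / theta_lon)**2
                                 + (u / theta_t)**2))

def separability_gap(h_lat, h_lon, u):
    """k(h, u) k(0, 0) - k(h, 0) k(0, u): identically zero for every separable covariance."""
    return k_exp(h_lat, h_lon, u) * k_exp(0, 0, 0) - k_exp(h_lat, h_lon, 0) * k_exp(0, 0, u)

def temporal_decay(h_lat, u):
    """Correlation retained after a time lag u at meridional lag h_lat (constant in h if separable)."""
    return k_exp(h_lat, 0.0, u) / k_exp(h_lat, 0.0, 0.0)

gap = separability_gap(3.0, 0.0, 10.0)   # exp(-sqrt(2)) - exp(-2) > 0, so (3.2) is not separable
```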

To demonstrate the importance of proper modelling of temporal effects, we also consider a spatial version of model (3.1). In that case, the moving window remains the same as before, but we ignore the temporal separation of the observations within the window by setting θt = ∞. This is equivalent to dropping the time covariate ti,j in equation (3.1). The next two subsections explain in more detail the parameter estimation, point prediction and uncertainty quantification steps under model (3.1).

(a). Gaussian nugget

With the Gaussian nugget εi,j ∼ N(0, σ²), the unknown model parameters are the covariance parameters θ = [ϕ, θlat, θlon, θt]ᵀ and the nugget variance σ². We use maximum likelihood to estimate these parameters from Argo data. For each year i = 1, …, n, let Ki(θ) be the mi × mi matrix with elements [Ki(θ)]j,k = k(xi,j, ti,j, xi,k, ti,k; θ) and let yi be the column vector with elements yi,j, j = 1, …, mi. The log-likelihood of the parameters (θ, σ²) is

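$$
\ell(\theta, \sigma^2) = \sum_{i=1}^{n} \log p(y_i \mid \theta, \sigma^2), \tag{3.3}
$$

where

$$
\log p(y_i \mid \theta, \sigma^2) = -\frac{1}{2} y_i^{\mathsf T} \big(K_i(\theta) + \sigma^2 I\big)^{-1} y_i - \frac{1}{2} \log \det\big(K_i(\theta) + \sigma^2 I\big) - \frac{m_i}{2} \log 2\pi, \tag{3.4}
$$

and the maximum-likelihood estimator (MLE) of (θ, σ²) is

$$
(\hat\theta, \hat\sigma^2) = \underset{\theta,\, \sigma^2}{\arg\max}\; \ell(\theta, \sigma^2). \tag{3.5}
$$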

The MLE needs to be obtained using numerical optimization; we use the BFGS quasi-Newton algorithm, as implemented in the Matlab Optimization Toolbox R2016a [34], on log-transformed parameters.
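A minimal sketch of the log-likelihood (3.3)–(3.4) and its maximization is given below, assuming Python with NumPy/SciPy rather than the Matlab toolchain used in the paper. SciPy's BFGS (with finite-difference gradients) stands in for the Matlab quasi-Newton routine, and the initial values, function names and synthetic data are illustrative assumptions. As in the text, the optimization runs over log-transformed parameters, which keeps ϕ, the range parameters and σ² positive.

```python
import numpy as np
from scipy.linalg import cho_factor, cho_solve
from scipy.optimize import minimize

def exp_cov(X1, t1, X2, t2, phi, th_lat, th_lon, th_t):
    """Covariance (3.2); X columns are [lat, lon] in degrees, t in days."""
    d2 = ((np.subtract.outer(X1[:, 0], X2[:, 0]) / th_lat) ** 2
          + (np.subtract.outer(X1[:, 1], X2[:, 1]) / th_lon) ** 2
          + (np.subtract.outer(t1, t2) / th_t) ** 2)
    return phi * np.exp(-np.sqrt(d2))

def neg_log_lik(log_params, years):
    """Negative of (3.3)-(3.4), summed over independent yearly realizations.

    log_params = log([phi, th_lat, th_lon, th_t, sigma2]); years = [(X, t, y), ...].
    """
    phi, th_lat, th_lon, th_t, sigma2 = np.exp(log_params)
    total = 0.0
    for X, t, y in years:
        C = exp_cov(X, t, X, t, phi, th_lat, th_lon, th_t) + sigma2 * np.eye(len(y))
        try:
            c, lower = cho_factor(C, lower=True)
        except np.linalg.LinAlgError:            # numerically non-PD: reject this point
            return np.inf
        logdet = 2.0 * np.sum(np.log(np.diag(c)))
        total += 0.5 * y @ cho_solve((c, lower), y) + 0.5 * logdet + 0.5 * len(y) * np.log(2 * np.pi)
    return total

def fit_mle(years, init=(1.0, 3.0, 3.0, 10.0, 0.1)):
    """BFGS on log-transformed parameters; returns (estimates, optimizer result)."""
    x0 = np.log(np.asarray(init, dtype=float))
    res = minimize(neg_log_lik, x0, args=(years,), method="BFGS")
    return np.exp(res.x), res

# Synthetic demo: five independent "years" drawn from the model with known parameters.
rng = np.random.default_rng(3)
true = dict(phi=1.0, th_lat=2.0, th_lon=4.0, th_t=15.0)
years = []
for _ in range(5):
    X = np.column_stack([rng.uniform(0, 10, 60), rng.uniform(0, 10, 60)])
    t = rng.uniform(0, 90, 60)
    C = exp_cov(X, t, X, t, **true) + 0.1 * np.eye(60)
    years.append((X, t, np.linalg.cholesky(C) @ rng.standard_normal(60)))
params_hat, res = fit_mle(years)
```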

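We base the predictions on the conditional distribution p(y*i | yi, θ̂, σ̂²), where we have plugged in the MLE for the model parameters. Standard manipulations show that the predictive distribution is

$$
y^*_i \mid y_i, \hat\theta, \hat\sigma^2 \sim N\Big(k^*_i(\hat\theta)^{\mathsf T}\big(K_i(\hat\theta) + \hat\sigma^2 I\big)^{-1} y_i,\; \hat\phi + \hat\sigma^2 - k^*_i(\hat\theta)^{\mathsf T}\big(K_i(\hat\theta) + \hat\sigma^2 I\big)^{-1} k^*_i(\hat\theta)\Big), \tag{3.6}
$$

where k*i(θ) is a column vector with elements k(x*, t*, xi,j, ti,j; θ), j = 1, …, mi, and ϕ̂ = k(x*, t*, x*, t*; θ̂) is the estimated GP variance. We make the point predictions using the conditional mean ŷ*i = k*i(θ̂)ᵀ(Ki(θ̂) + σ̂² I)⁻¹ yi. This is the well-known kriging predictor (e.g. [16,17]) based on the data within the moving window and with plug-in values for the model parameters. It is mean square error optimal assuming that model (3.1) is correct and ignoring the uncertainty of the model parameters. To quantify our uncertainty about y*i, we use the 1 − α predictive intervals based on (3.6),

$$
\Big[\hat y^*_i - z_{1-\alpha/2}\, \hat s^*_i,\; \hat y^*_i + z_{1-\alpha/2}\, \hat s^*_i\Big], \tag{3.7}
$$

where z1−α/2 is the 1 − α/2 standard normal quantile and (ŝ*i)² = ϕ̂ + σ̂² − k*i(θ̂)ᵀ(Ki(θ̂) + σ̂² I)⁻¹ k*i(θ̂) is the kriging variance.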
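The plug-in predictive mean and variance in (3.6) and the interval (3.7) translate into a few lines of linear algebra. The sketch below assumes the window covariance matrix and the cross-covariances have already been computed (for example, with the fitted covariance (3.2)); the function name is hypothetical. The variance includes the nugget because the target is y* = f* + ε*, not f* alone.

```python
import numpy as np
from scipy.linalg import cho_factor, cho_solve
from scipy.stats import norm

def kriging_interval(K, k_star, phi, sigma2, y, alpha=0.05):
    """Plug-in predictive mean, variance and 1 - alpha interval for y*.

    K: (m, m) covariance matrix of the window data; k_star: (m,) covariances between
    the prediction point and the data; phi: GP variance k(x*, t*, x*, t*); sigma2:
    nugget variance.
    """
    factor = cho_factor(K + sigma2 * np.eye(len(y)), lower=True)
    mean = k_star @ cho_solve(factor, y)
    var = phi + sigma2 - k_star @ cho_solve(factor, k_star)
    half = norm.ppf(1 - alpha / 2) * np.sqrt(var)
    return mean, var, (mean - half, mean + half)

# One observation exactly at the prediction location: weight 1 / 1.25 = 0.8.
mean, var, (lo, hi) = kriging_interval(np.array([[1.0]]), np.array([1.0]),
                                       phi=1.0, sigma2=0.25, y=np.array([2.0]))
# mean = 1.6 and var = 1.25 - 0.8 = 0.45
```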

(b). Student nugget

With the Student nugget εi,j ∼ tν(σ²), the likelihood and the predictive distribution p(y*i | yi, θ, σ², ν) are not available in closed form. To achieve computationally tractable inferences, we employ the Laplace approximation as in [35]; see also §3.4 in [20]. The Laplace approximation yields tractable likelihood computations for estimating the unknown model parameters, including the degrees of freedom ν. It also provides closed-form approximate point predictions, while the prediction intervals can be obtained using Monte Carlo sampling. The computations were implemented by adapting the GPML toolbox [36,37]. Further details are provided in §2 of the electronic supplementary material.
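The Laplace approximation for a GP with a Student t nugget can be sketched as below. This is our own minimal illustration, not the adapted GPML code used in the paper. Because the negative second derivative W of the Student t log-density can be negative, the sketch avoids square roots of W and simply locates the posterior mode with SciPy's BFGS in whitened coordinates f = Lz (a swapped-in choice rather than a dedicated Newton scheme). The returned approximate log marginal likelihood is the quantity one would maximize over (θ, σ², ν); that outer optimization, the function names and the demo data are assumptions for illustration. Prediction intervals for y* are then obtained by Monte Carlo, adding Student t noise to Gaussian draws of f*.

```python
import numpy as np
from scipy.linalg import cholesky, solve_triangular
from scipy.optimize import minimize
from scipy.special import gammaln

def student_t_terms(y, f, nu, sigma2):
    """log t_nu(y - f; sigma2) and its first two derivatives with respect to f."""
    r = y - f
    s = nu * sigma2 + r**2
    logp = (gammaln((nu + 1) / 2) - gammaln(nu / 2) - 0.5 * np.log(nu * np.pi * sigma2)
            - 0.5 * (nu + 1) * np.log1p(r**2 / (nu * sigma2)))
    grad = (nu + 1) * r / s                          # d log p / df
    W = (nu + 1) * (nu * sigma2 - r**2) / s**2       # -d^2 log p / df^2, may be negative
    return logp, grad, W

def laplace_student_t(K, y, nu, sigma2, jitter=1e-8):
    """Laplace approximation for y = f + eps, f ~ N(0, K), eps_j iid t_nu(sigma2).

    Returns the approximate log marginal likelihood and a predictor for f*.
    """
    m = len(y)
    L = cholesky(K + jitter * np.eye(m), lower=True)

    def neg_psi(z):                                  # -(log p(y | Lz) - z'z / 2) and gradient
        logp, grad, _ = student_t_terms(y, L @ z, nu, sigma2)
        return -logp.sum() + 0.5 * z @ z, -(L.T @ grad) + z

    z_hat = minimize(neg_psi, np.zeros(m), jac=True, method="BFGS",
                     options={"gtol": 1e-8}).x
    logp, _, W = student_t_terms(y, L @ z_hat, nu, sigma2)
    B = np.eye(m) + L.T @ (W[:, None] * L)           # I + L'WL, positive definite at a mode
    _, logdet_B = np.linalg.slogdet(B)
    log_marginal = logp.sum() - 0.5 * z_hat @ z_hat - 0.5 * logdet_B

    def predict_f(k_star, k_star_star):
        v = solve_triangular(L, k_star, lower=True)
        mean = v @ z_hat                             # k*' K^{-1} f_hat
        var = k_star_star - v @ v + v @ np.linalg.solve(B, v)
        return mean, var

    return log_marginal, predict_f

def mc_interval(mean, var, nu, sigma2, alpha=0.05, n_draws=200_000, seed=0):
    """Monte Carlo 1 - alpha interval for y* = f* + eps*, f* ~ N(mean, var), eps* ~ t_nu(sigma2)."""
    rng = np.random.default_rng(seed)
    draws = (mean + np.sqrt(var) * rng.standard_normal(n_draws)
             + np.sqrt(sigma2) * rng.standard_t(nu, n_draws))
    return tuple(np.quantile(draws, [alpha / 2, 1 - alpha / 2]))

# Sanity check: for very large nu the Student nugget is practically Gaussian,
# so the Laplace quantities should match exact Gaussian GP regression.
rng = np.random.default_rng(4)
x = np.sort(rng.uniform(0, 10, 25))
K = np.exp(-np.abs(np.subtract.outer(x, x)) / 2.0)
y = rng.multivariate_normal(np.zeros(25), K + 0.1 * np.eye(25))
log_marg, predict_f = laplace_student_t(K, y, nu=1e6, sigma2=0.1)
k_star = np.exp(-np.abs(x - 5.0) / 2.0)
f_mean, f_var = predict_f(k_star, 1.0)
lo, hi = mc_interval(0.0, 1.0, nu=3.0, sigma2=0.25)   # heavy-tailed nugget widens the interval
```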

4. Results

In this section, we study the performance of the statistical methodology described in §3 in interpolating Argo temperature data. We use the RG approach described in §2a as a baseline and investigate how the data-driven local estimates of the covariance structure and the inclusion of time improve the interpolation of the anomalies. Section 4a describes the models and datasets that we consider. We then study the fitted models from three perspectives: (1) cross-validated point prediction performance in §4b, (2) cross-validated uncertainty quantification performance in §4c and (3) the estimated spatio-temporal dependence structure in §4d.

(a). Experiment set-up

Our experiments are performed by fitting models to Argo temperature data collected between 2007 and 2016. We investigate three pressure levels: 10, 300 and 1500 decibars (dbar), with the model parameters estimated separately for each pressure level. We focus on one- or three-month temporal windows centred around February (i.e. February 2007, February 2008,…, February 2016 or January–March 2007, January–March 2008,…, January–March 2016 are considered independent realizations from the statistical model in equation (3.1)). To enable comparison of models with different time windows, all the cross-validation studies are done for February data only.

We consider six different statistical models (table 1). Model 1, which we regard as the baseline reference model, is our reimplementation of the RG maps [7]; see §2a. Apart from a few technical details (see below), this model is the same as the one used in [7]. Models 2–6 are variants of the locally stationary mapping procedure described in §3. In each case, the mapping is carried out on a 1° × 1° grid and the covariance parameters are estimated using maximum likelihood within a 20° × 20° moving window on this grid. Model 2 is a one-month fit with a purely spatial covariance k(x1, x2;θ) and a Gaussian nugget. Model 3 is otherwise the same but with a Student nugget. Model 4 is a three-month version of model 2. Models 5 and 6 are three-month fits with a spatio-temporal covariance k(x1, t1, x2, t2;θ) and either a Gaussian or a Student nugget. The spatio-temporal fits are done with three months of data to make sure that there are enough profiles from each float to estimate the temporal covariance structure (there are usually nine profiles from each float within a three-month window).

Table 1.

Description of the models we consider for interpolating Argo temperature data. Model 1 is a reimplementation of the procedure developed by Roemmich & Gilson (RG) in [7] and models 2–6 are variants of locally stationary interpolation. The models differ in terms of how time is taken into account, in the distribution of the fine-scale variation represented by the nugget effect and in the length of the temporal window used in the fit (see text for more details).

| model | time window | mean | covariance | nugget |
|---|---|---|---|---|
| 1 | February | RG (spatial) | RG-like | Gaussian |
| 2 | February | RG (spatial) | local (spatial) | Gaussian |
| 3 | February | RG (spatial) | local (spatial) | Student |
| 4 | January–March | RG (spatio-temporal) | local (spatial) | Gaussian |
| 5 | January–March | RG (spatio-temporal) | local (spatio-temporal) | Gaussian |
| 6 | January–March | RG (spatio-temporal) | local (spatio-temporal) | Student |

For each model, the mean field is the RG local regression fit (see §2a). The publicly available version of the RG climatology [25] includes only the annual mean field, but J. Gilson (2016, personal communication) kindly provided us with the mid-month evaluations of the local mean functions (2.1). We use either these mid-month evaluations treating the mean as a constant over the temporal window (spatial mean) or alternatively a temporally varying reconstruction of the original mean field (spatio-temporal mean) as indicated in table 1. As a function of time, the local regression function (2.1) has 13 free parameters which we wish to reconstruct from the 12 mid-month evaluations. We do this by using the Moore–Penrose pseudoinverse, which handles the one extra degree of freedom by finding the minimum-norm solution in the space of the regression coefficients [38].
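To make the pseudoinverse step concrete, the sketch below assumes that, at a fixed location, the temporal part of (2.1) is an intercept plus cosine and sine terms for the first six annual harmonics (which gives the 13 parameters), and that the 12 evaluations sit at equally spaced mid-month times. Both the basis and the function names are assumptions made for illustration, not a transcription of the RG code.

```python
import numpy as np

# Assumed temporal basis of the local regression at one location:
# intercept + cos/sin of annual harmonics k = 1..6  ->  13 coefficients.
N_HARMONICS = 6

def temporal_basis(t):
    """Design matrix for times t given as fractions of a year."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    cols = [np.ones_like(t)]
    for k in range(1, N_HARMONICS + 1):
        cols.append(np.cos(2 * np.pi * k * t))
        cols.append(np.sin(2 * np.pi * k * t))
    return np.column_stack(cols)  # shape (len(t), 13)

# Assumed mid-month evaluation times, treating all months as equal length.
t_mid = (np.arange(1, 13) - 0.5) / 12

def reconstruct_mean(mid_month_values):
    """Minimum-norm coefficients that reproduce the 12 mid-month evaluations."""
    A = temporal_basis(t_mid)  # 12 x 13: one more unknown than equations
    return np.linalg.pinv(A) @ np.asarray(mid_month_values, dtype=float)

def evaluate_mean(beta, t):
    """Temporally varying mean at arbitrary times t."""
    return temporal_basis(t) @ beta
```

Under this assumed basis, cos(12πt) vanishes at every mid-month time, so its coefficient is the one direction the 12 evaluations cannot identify; the minimum-norm solution sets it to (numerically) zero while reproducing the 12 values exactly.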

The reference model (model 1) implements the key aspects of the RG approach. There are, however, two technical differences between our implementation and theirs. First, we do not include the depth penalty term Pen(x1, x2) when computing the point estimates and the uncertainties. And, second, we do not implement any specialized treatment of distances across islands or continental land. These differences are unlikely to markedly affect the conclusions drawn below. Finally, we note that, when computing the prediction intervals for the reference model, we need to provide the proportionality constant in equation (2.3) (i.e. the variance ϕ of the GP part of the spatial model). Since RG do not provide uncertainty estimates, they also do not give estimates of this constant as it cancels out in the point predictions. To simulate what potentially could have been done to produce uncertainties under the RG model, we estimate the proportionality constant using a moving-window empirical variance. That is, the RG-inspired prediction intervals at the grid point x* are formed using the covariance model (2.3) with the following estimate of the GP variance ϕ:

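$$\hat{\phi}(x^*) = \frac{\widehat{\operatorname{Var}}(y_{i,j})}{1.15}, \tag{4.1}$$

where $\widehat{\operatorname{Var}}(y_{i,j})$ is the empirical variance of the anomalies within the moving window centred at $x^*$, and the denominator originates from the RG noise-to-signal variance ratio 0.15 via the relation $\operatorname{Var}(y_{i,j}) = \phi + \sigma^2 = \phi(1 + \sigma^2/\phi) = 1.15\,\phi$. We emphasize that the resulting prediction intervals are not part of the original RG climatology and are by no means advocated by them for uncertainty quantification.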
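The split of the windowed variance into a GP part and a nugget part under the fixed RG ratio σ²/ϕ = 0.15 can be sketched as follows. The use of the unbiased (ddof=1) sample variance and the function name are assumptions of this sketch.

```python
import numpy as np

RG_NOISE_TO_SIGNAL = 0.15  # sigma^2 / phi, fixed in the RG model

def rg_variance_components(window_anomalies):
    """Split the empirical anomaly variance in one moving window into (phi, sigma^2).

    Uses Var(y) = phi + sigma^2 = phi * (1 + 0.15) = 1.15 * phi.
    The ddof=1 sample variance is an assumption of this sketch.
    """
    y = np.asarray(window_anomalies, dtype=float)
    total = np.var(y, ddof=1)
    phi = total / (1.0 + RG_NOISE_TO_SIGNAL)
    sigma2 = RG_NOISE_TO_SIGNAL * phi
    return phi, sigma2
```

By construction the two components add back up to the empirical variance, so the RG-inspired intervals are calibrated to the local data spread only through this single scale factor.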

We fit models 1–6 to the global Argo dataset as of 8 May 2017 [39]. The quality control criteria used for filtering out profiles with technical issues are given in the electronic supplementary material. There were a total of 1 417 813 Argo profiles in 2007–2016, out of which 994 709 passed our selection criteria. We also performed a further temporal filtering to focus on the desired time windows and a spatial filtering based on the RG land mask [25], which filters out profiles located in marginal seas, such as the Mediterranean Sea or the Gulf of Mexico. The final dataset has 70 227 profiles for February and 223 797 profiles for January to March.

The analysis was carried out using Matlab R2016a. As noted in §3b, we use GPML [36,37] to fit models 3 and 6, while the other models are our own implementations. The computations were carried out on the Midway2 cluster at the University of Chicago Research Computing Center, Chicago, IL, USA. The possibility of parallelizing the moving-window computations to the 28 threads of the Midway2 compute nodes was crucial for making the analysis computationally feasible. The Matlab code used to produce the results is available on Github [40].

(b). Point predictions

We first investigate the performance of the different models in making point predictions of the temperature anomalies. Figure 3 displays the February 2012 temperature anomalies at 10, 300 and 1500 dbar for the locally stationary spatio-temporal model with a Gaussian nugget (model 5) and for the reference model (model 1). The maps are on a 1° × 1° grid and the space–time field is evaluated at noon on 15 February 2012. The overall patterns in both fields are similar: one can recognize the large-scale anomalies near the surface, the elongated patterns in subsurface Equatorial regions and the meanders and eddies associated with the western boundary currents and the Antarctic Circumpolar Current. There are, however, a number of clear differences between the maps. Especially at 10 dbar, the reference map shows small speckles that are absent from the locally fitted map. This is related to the RG covariance parameters, which are not optimized for mapping anomalies near the surface. Also, while present in both maps, the zonal elongation of the anomalies in the Equatorial regions is much more pronounced in the reference maps.

Figure 3.

Argo temperature anomalies for February 2012 at 10, 300 and 1500 dbar for the locally stationary three-month spatio-temporal model with a Gaussian nugget (model 5, a,c,e) and for the RG-like reference model (model 1, b,d,f).

In order to study the difference between the reference method and the locally stationary maps in more quantitative terms, we performed a cross-validation study comparing the predictive performance of the different methods. We consider two cross-validation strategies: in leave-one-observation-out (LOOO) cross-validation, we left out one temperature observation at a time, while in leave-one-float-out (LOFO) cross-validation we left out an entire float. LOOO predictions are easier to make since there will almost always be nearby observations from the same float to constrain the temperature value at the prediction location. LOFO cross-validation, on the other hand, creates a ‘gap’ in the Argo array and has a higher amount of irreducible error. In the actual mapping problem, the typical distance from a grid point to nearby floats will be somewhere between these two extremes.
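The difference between the two strategies lies only in how the held-out sets are formed. A minimal sketch, assuming each observation carries a float identifier (the function names are hypothetical):

```python
from collections import defaultdict

def looo_folds(n_obs):
    """Leave-one-observation-out: each fold holds out a single observation index."""
    for i in range(n_obs):
        yield [i]

def lofo_folds(float_ids):
    """Leave-one-float-out: each fold holds out every observation from one float."""
    groups = defaultdict(list)
    for idx, fid in enumerate(float_ids):
        groups[fid].append(idx)
    for fid in sorted(groups):
        yield groups[fid]
```

Because a float reports roughly every 10 days, a LOOO fold usually leaves neighbouring profiles from the same float in the training data, whereas a LOFO fold removes all of them at once.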

We investigate the cross-validation performance in terms of the root-mean-square error (RMSE), the median absolute error (MdAE) and the third quartile of the absolute error (Q3AE). Let $\hat{y}_{i,j}^{-}$ be the prediction at $(x_{i,j}, t_{i,j})$ with either $y_{i,j}$ removed from the dataset (LOOO) or all observations with the same float ID as $y_{i,j}$ removed (LOFO). Then

$$\mathrm{RMSE} = \sqrt{\frac{1}{N} \sum_{i,j} \left(\hat{y}_{i,j}^{-} - y_{i,j}\right)^2}, \tag{4.2}$$

where $N$ is the total number of cross-validated observations, and MdAE and Q3AE are the sample median and sample third quartile of $|\hat{y}_{i,j}^{-} - y_{i,j}|$. When cross-validating $y_{i,j}$, the model parameters are taken from the moving window centred at the 1° × 1° grid point closest to $y_{i,j}$. The parameter estimates and the mean fields are kept fixed during the cross-validation.
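Given the cross-validated predictions, the three summaries can be computed as below. This is a sketch: the exact sample-quantile convention used in the paper is not stated, so NumPy's default linear interpolation is an assumption here.

```python
import numpy as np

def cv_metrics(y_true, y_pred):
    """Return (RMSE, MdAE, Q3AE) for cross-validated predictions."""
    err = np.asarray(y_pred, dtype=float) - np.asarray(y_true, dtype=float)
    abs_err = np.abs(err)
    rmse = np.sqrt(np.mean(err ** 2))
    mdae = np.median(abs_err)
    q3ae = np.quantile(abs_err, 0.75)  # default (linear) sample-quantile rule
    return rmse, mdae, q3ae
```

RMSE squares the errors and is therefore dominated by the largest ones, while MdAE and Q3AE depend only on the ranks of the absolute errors, which is why they are used as robustness checks below.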

Table 2 summarizes the LOOO cross-validation performance for the models with a Gaussian nugget (models 1, 2, 4 and 5). Also included is the performance when the predictions are made using only the RG spatial mean without any modelling of the anomalies (the performance of the spatio-temporal mean is only slightly better and is omitted for clarity). For all three pressure levels and for all three performance metrics, the relative performance of the models is in the following order: the reference model (model 1) outperforms the spatial mean, the locally stationary one-month spatial model (model 2) outperforms the reference model (model 1), the locally stationary three-month spatial model (model 4) outperforms the one-month spatial model (model 2) and the locally stationary three-month spatio-temporal model (model 5) outperforms the three-month spatial model (model 4). The combination of data-driven local covariance parameters, a larger temporal window and a covariance structure that includes time leads to a fairly substantial 10–30% performance improvement over the reference model. The improvement is particularly large near the surface at 10 dbar. The distribution of the squared prediction errors (not shown) has a much fatter right tail than there would be if the errors followed a common normal distribution, so we also consider MdAE and Q3AE as summaries of prediction performance to make sure that our conclusions are not driven by a small fraction of poor predictions. Table 2 confirms that all three performance metrics are consistent in their relative ranking of the various methods.
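The percentages reported in tables 2 and 3 are consistent with the relative error reduction with respect to the reference model, (reference − model)/reference. The short check below uses values taken directly from table 2; the function name is hypothetical.

```python
def relative_improvement(reference, model):
    """Relative reduction of an error metric versus the reference model, in percent."""
    return 100.0 * (reference - model) / reference

# 10 dbar, LOOO (values from table 2):
rmse_gain = relative_improvement(0.6135, 0.5072)  # space-time vs reference, RMSE
mdae_gain = relative_improvement(0.2556, 0.1801)  # space-time vs reference, MdAE
```

Rounded to one decimal, these reproduce the 17.3% and 29.5% entries of the space–time column.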

Table 2.

Point prediction performance measured in terms of the root-mean-square error (RMSE), the third quartile of the absolute error (Q3AE) and the median absolute error (MdAE) for leave-one-observation-out (LOOO) cross-validation. The compared models are the RG spatial mean, the RG-like reference model (model 1), the locally stationary one-month and three-month spatial models (models 2 and 4) and the locally stationary three-month spatio-temporal model (model 5), all with a Gaussian nugget. The units are degrees Celsius and the percentages in the parentheses are improvements in comparison with the reference model.

| pressure level | performance metric | spatial mean | reference model | space (one month) | space (three months) | space–time (three months) |
|---|---|---|---|---|---|---|
| 10 dbar | RMSE | 0.8889 | 0.6135 | 0.5876 (4.2%) | 0.5667 (7.6%) | 0.5072 (17.3%) |
| | Q3AE | 0.8670 | 0.5026 | 0.4824 (4.0%) | 0.4568 (9.1%) | 0.3735 (25.7%) |
| | MdAE | 0.4750 | 0.2556 | 0.2490 (2.6%) | 0.2293 (10.3%) | 0.1801 (29.5%) |
| 300 dbar | RMSE | 0.8149 | 0.5782 | 0.5692 (1.6%) | 0.5675 (1.9%) | 0.5124 (11.4%) |
| | Q3AE | 0.6320 | 0.4213 | 0.4150 (1.5%) | 0.4005 (4.9%) | 0.3684 (12.6%) |
| | MdAE | 0.3062 | 0.1991 | 0.1957 (1.7%) | 0.1873 (5.9%) | 0.1740 (12.6%) |
| 1500 dbar | RMSE | 0.1337 | 0.1014 | 0.0997 (1.7%) | 0.0935 (7.8%) | 0.0883 (12.9%) |
| | Q3AE | 0.1043 | 0.0736 | 0.0725 (1.5%) | 0.0678 (7.9%) | 0.0641 (12.8%) |
| | MdAE | 0.0530 | 0.0356 | 0.0355 (0.3%) | 0.0328 (8.0%) | 0.0311 (12.7%) |

With LOFO cross-validation (table 3), the prediction errors are consistently larger than with LOOO cross-validation, which reflects the more challenging nature of the LOFO prediction task. Even in this case, there are still distinct advantages from appropriate modelling of the anomalies. The ranking of the models and the general conclusions are otherwise the same as above, except for two differences. First, here the three-month spatial model (model 4) does not significantly improve upon the one-month model (model 2) and can in fact even perform worse. This happens because the model confuses spatial and temporal variation, which highlights the importance of using a full spatio-temporal covariance model. Second, at 1500 dbar, the RMSE of the reference model is slightly larger than the RMSE of the spatial mean. As discussed above, this may happen because of a few values in the right tail of the squared prediction error distribution, but may also indicate that the RG covariance parameters are not particularly well suited for this pressure level. In comparison, all the data-driven models perform better than the spatial mean, as expected.

Table 3.

Same as table 2 but for leave-one-float-out (LOFO) cross-validation.

| pressure level | performance metric | spatial mean | reference model | space (one month) | space (three months) | space–time (three months) |
|---|---|---|---|---|---|---|
| 10 dbar | RMSE | 0.8889 | 0.7177 | 0.6823 (4.9%) | 0.6954 (3.1%) | 0.6489 (9.6%) |
| | Q3AE | 0.8670 | 0.6107 | 0.5776 (5.4%) | 0.5987 (2.0%) | 0.5222 (14.5%) |
| | MdAE | 0.4750 | 0.3165 | 0.2981 (5.8%) | 0.3062 (3.2%) | 0.2552 (19.3%) |
| 300 dbar | RMSE | 0.8149 | 0.7686 | 0.7486 (2.6%) | 0.7483 (2.6%) | 0.7388 (3.9%) |
| | Q3AE | 0.6320 | 0.5942 | 0.5733 (3.5%) | 0.5666 (4.6%) | 0.5556 (6.5%) |
| | MdAE | 0.3062 | 0.2856 | 0.2753 (3.6%) | 0.2732 (4.3%) | 0.2664 (6.7%) |
| 1500 dbar | RMSE | 0.1337 | 0.1373 | 0.1308 (4.8%) | 0.1313 (4.4%) | 0.1307 (4.8%) |
| | Q3AE | 0.1043 | 0.1015 | 0.0976 (3.9%) | 0.0973 (4.2%) | 0.0959 (5.6%) |
| | MdAE | 0.0530 | 0.0511 | 0.0499 (2.3%) | 0.0491 (3.7%) | 0.0484 (5.3%) |

The cross-validation results for the Student nugget are given in the electronic supplementary material. The Student models tend to perform worse than the comparable Gaussian models. This happens because they smooth out non-Gaussian high-frequency features (eddies in particular) by including them in the nugget term. Nevertheless, the same conclusion that the spatio-temporal model outperforms the purely spatial model remains true. Even though the Student models have inferior point prediction performance, they offer significant advantages in uncertainty quantification (see §4c).

To summarize, these results highlight the importance of including time in the mapping and allowing the covariance parameters to change with location and pressure. Indeed, the largest improvements are observed at 10 dbar, where one would intuitively expect the data-driven covariances to differ a lot from the RG model (see §2a). To put these results into perspective, it is useful to keep in mind that statistical procedures typically converge at sublinear rates as the amount of data increases. For example, assuming a 1/√n rate of convergence, a 20% improvement in the predictive performance translates into 56% more data, since (1/0.8)² ≈ 1.56. This would correspond to deploying roughly 2000 additional floats at a cost of US$30 million (one Argo float costs approx. US$15 000 [41]). Similarly, even a 10% performance improvement corresponds to approximately 23% more data, since (1/0.9)² ≈ 1.23, at a cost of some US$12 million. It should be noted that these dollar amounts are rough order-of-magnitude estimates using Argo-type floats. The actual costs would be higher if one took into account the need to replenish the array and the cost of data handling. On the other hand, improved upper ocean sampling could also be achieved at a lower cost by deploying cheaper floats that sample only the upper few hundred metres of the water column.
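The data-equivalence arithmetic can be sketched as follows, assuming the prediction error scales as n^(−rate) with rate = 1/2. The array size is left as an input because it is not fixed in this section; the function names are hypothetical.

```python
def extra_data_fraction(improvement, rate=0.5):
    """Fractional increase in sample size that yields a given relative error reduction,
    assuming error is proportional to n ** (-rate)."""
    return (1.0 / (1.0 - improvement)) ** (1.0 / rate) - 1.0

def extra_floats_cost(improvement, n_floats, cost_per_float=15_000, rate=0.5):
    """Additional floats, and their purchase cost in US$, for the same data increase."""
    extra = extra_data_fraction(improvement, rate) * n_floats
    return extra, extra * cost_per_float
```

With rate = 0.5, `extra_data_fraction(0.2)` gives 0.5625 and `extra_data_fraction(0.1)` about 0.235, matching the 56% and 23% quoted above. A slower convergence rate (smaller `rate`) would make the same improvement worth even more data.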

(c). Uncertainty quantification

We next investigate the uncertainty quantification performance of the different models. Figure 4 displays the post-data-to-pre-data variance ratio

$$\frac{\operatorname{Var}(y_i^* \mid \mathbf{y}_i, \hat{\boldsymbol{\theta}}, \hat{\sigma}^2)}{\operatorname{Var}(y_i^* \mid \hat{\boldsymbol{\theta}}, \hat{\sigma}^2)} = \frac{\hat{\phi} + \hat{\sigma}^2 - \mathbf{k}_i^*(\hat{\boldsymbol{\theta}})^{\mathsf{T}} \left(\mathbf{K}_i(\hat{\boldsymbol{\theta}}) + \hat{\sigma}^2 \mathbf{I}\right)^{-1} \mathbf{k}_i^*(\hat{\boldsymbol{\theta}})}{\hat{\phi} + \hat{\sigma}^2},$$

i.e. the ratio of the predictive variances with and without Argo data, for the locally stationary three-month spatio-temporal model with a Gaussian nugget (model 5) at 10, 300 and 1500 dbar in February 2012. When this ratio is close to 0, Argo data provide firm inferences about the temperature anomaly, while a value close to 1 means that observing Argo data did not considerably reduce our uncertainty about the temperature anomaly at that particular location and time. Also shown is the same ratio for the reference model (model 1) at 10 dbar. Since the uncertainties of Gaussian process models depend only on the covariance parameters and the observation locations and since the RG covariance is the same at all pressure levels, the uncertainty of the reference model at 300 dbar and 1500 dbar (not shown) looks essentially the same as the uncertainty at 10 dbar (there are minor differences due to some profiles not extending all the way from 10 dbar to 1500 dbar). By contrast, the locally stationary uncertainties are vastly different at different pressures. This happens because the estimated covariance parameters vary significantly as a function of pressure (see §4d). Based on the anomalies shown in figure 3, it makes intuitive sense that the uncertainties near the surface should be quite different from the uncertainties at greater depths. Note also that in the RG model, with its fixed noise-to-signal variance ratio σ²/ϕ = 0.15, the post-data-to-pre-data variance ratio in figure 4d does not depend on how the GP variance ϕ is chosen, because scaling ϕ and σ² by a common factor leaves the ratio unchanged. By contrast, the rest of the results in this section require an estimate of ϕ (see §4a).
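A minimal sketch of the variance ratio for a single prediction location, assuming an exponential covariance with geometric anisotropy along (latitude, longitude, time) of the form ϕ exp(−√Σ_d (Δ_d/θ_d)²). Longitude wrap-around and unit conversions are ignored, and the function names are hypothetical.

```python
import numpy as np

def exp_aniso_cov(X1, X2, phi, theta):
    """phi * exp(-sqrt(sum_d ((x1_d - x2_d) / theta_d)^2)); columns are (lat, lon, t)."""
    D = (X1[:, None, :] - X2[None, :, :]) / np.asarray(theta, dtype=float)
    return phi * np.exp(-np.sqrt(np.sum(D ** 2, axis=-1)))

def variance_ratio(x_star, X, phi, sigma2, theta):
    """Post-data-to-pre-data predictive variance ratio at one location x_star."""
    K = exp_aniso_cov(X, X, phi, theta)
    k_star = exp_aniso_cov(X, x_star[None, :], phi, theta)[:, 0]
    prior = phi + sigma2
    # Solve (K + sigma2 I) a = k_star instead of forming the inverse explicitly.
    a = np.linalg.solve(K + sigma2 * np.eye(len(X)), k_star)
    return (prior - k_star @ a) / prior
```

Multiplying both `phi` and `sigma2` by the same constant scales every term of the ratio equally, so with σ²/ϕ fixed at 0.15 the result is independent of ϕ, as stated for the reference model. The ratio is also bounded below by σ²/(ϕ + σ²), since the nugget can never be predicted away.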

Figure 4.

Post-data-to-pre-data variance ratio $\operatorname{Var}(y_i^* \mid \mathbf{y}_i, \hat{\boldsymbol{\theta}}, \hat{\sigma}^2)/\operatorname{Var}(y_i^* \mid \hat{\boldsymbol{\theta}}, \hat{\sigma}^2)$ in February 2012 for the locally stationary three-month spatio-temporal model with a Gaussian nugget (model 5) at 10, 300 and 1500 dbar (a–c) and for the RG-like reference model (model 1) at 10 dbar (d). Because the RG covariance is the same at all pressures, the variance ratios for the reference model at 300 dbar and 1500 dbar (not shown) are essentially the same as the one at 10 dbar.

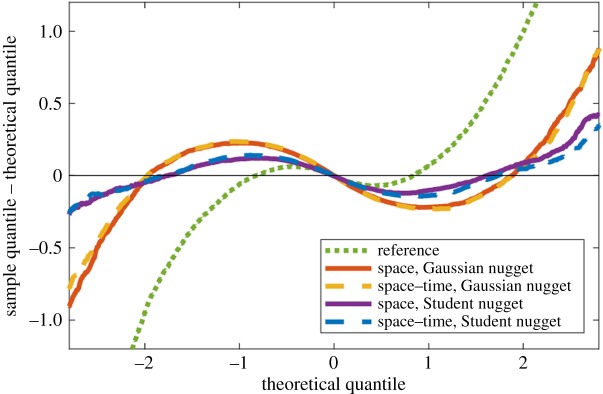

In order to study the uncertainties in a more quantitative way, we cross-validate the entire predictive distribution for the different interpolation methods. We compare the calibration of the reference model (model 1), the locally stationary one-month spatial models with a Gaussian and a Student nugget (models 2 and 3) and the locally stationary three-month spatio-temporal models with a Gaussian and a Student nugget (models 5 and 6). For each model, let $q_{\text{sample}}$ denote the cross-validated predictive sample quantile on the N(0,1) scale and $q_{\text{theory}}$ the corresponding theoretical N(0,1) quantile. The computation of $q_{\text{sample}}$ is described in detail in the electronic supplementary material. We plot in figure 5 the quantile difference $q_{\text{sample}} - q_{\text{theory}}$ against the theoretical quantile $q_{\text{theory}}$ at 300 dbar for LOOO cross-validation. This can be understood as the usual QQ plot with the identity line subtracted; plotting the quantiles this way helps visualize differences at the core of the distribution. The reference model is poorly calibrated with both tails of the data distribution much wider than those of the predictive distribution. The locally stationary models with the Gaussian nugget improve the calibration, but the data distribution still has heavier tails and a pointier core than the predictive distribution. We understand this as evidence of non-Gaussian heavy tails in the subsurface temperature data (similar heavy tails have been previously reported for sea surface temperatures; see [42]). The Student nugget provides a way to account for these heavy tails and indeed the calibration of the Student models is much better than that of the fully Gaussian models. Even though some miscalibration still remains, the overall improvement over the reference model is quite substantial. We also note that the spatial and spatio-temporal models are essentially equally well calibrated in the present setting.
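The supplementary computation of the sample quantiles is not reproduced here. One common construction, assumed in this sketch, passes each held-out observation through its own cross-validated predictive CDF (a probability integral transform, PIT) and then through the inverse standard normal CDF. The function name and the (k − 0.5)/n plotting positions are assumptions.

```python
import numpy as np
from statistics import NormalDist

def quantile_differences(pit_values):
    """Return (q_theory, q_sample - q_theory) on the N(0,1) scale.

    pit_values: predictive CDF values F_i(y_i) of the held-out observations.
    """
    u = np.sort(np.clip(np.asarray(pit_values, dtype=float), 1e-12, 1 - 1e-12))
    n = len(u)
    std_normal = NormalDist()
    q_sample = np.array([std_normal.inv_cdf(v) for v in u])
    probs = (np.arange(1, n + 1) - 0.5) / n  # plotting positions
    q_theory = np.array([std_normal.inv_cdf(p) for p in probs])
    return q_theory, q_sample - q_theory
```

For a Gaussian predictive distribution with mean μ and standard deviation s, the PIT value is `NormalDist(mu, s).cdf(y)`. If the data are heavier-tailed than the predictive distribution but have the same variance, the curve is positive in the upper tail and negative just above the median, which is the "heavier tails and a pointier core" pattern described above.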

Figure 5.

The difference of the cross-validated sample quantile and the corresponding standard Gaussian theoretical quantile ($q_{\text{sample}} - q_{\text{theory}}$) plotted against the theoretical quantile ($q_{\text{theory}}$) for LOOO cross-validation at 300 dbar. The compared models are the RG-like reference model (model 1), the locally stationary one-month spatial and three-month spatio-temporal models with a Gaussian nugget (models 2 and 5) and the corresponding models with a Student nugget (models 3 and 6). The closer the curves are to a horizontal straight line at 0, the better the calibration of the predictive distributions.

The electronic supplementary material stratifies figure 5 by years and latitude bins. This shows that February 2016 has slightly worse calibration than the other years, but otherwise the calibration is remarkably similar across the years, supporting the assumption made in §3 that the data can be treated as having the same distribution for each year. Stratification by latitude shows that the better calibration of the Student models primarily comes from improved performance in areas south of 45° S and north of 45° N.

The quantile plots in figure 5 translate directly into coverage probabilities for the predictive intervals. This is illustrated in table 4, which shows the LOOO cross-validated empirical coverages and interval lengths for 68%, 95% and 99% predictive intervals at 300 dbar for the same models as in figure 5. As expected based on figure 5, the reference model undercovers at all three confidence levels. The locally stationary models overcover at the 68% level, are well calibrated at the 95% level and undercover at the 99% level. At the 68% and 99% levels, the coverage of the Student intervals is much closer to the nominal level than the coverage of the Gaussian intervals. The space–time intervals are always shorter than the corresponding spatial intervals, which is consistent with the performance improvements described in §4b.
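For Gaussian predictive distributions, the empirical coverage and interval lengths in table 4 can be computed along the following lines. This sketch assumes central intervals μ ± z·s and does not cover the Student intervals; the function name is hypothetical.

```python
import numpy as np
from statistics import NormalDist

def gaussian_interval_stats(y, mu, sd, level):
    """Empirical coverage, mean length and median length of central Gaussian intervals."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    y, mu, sd = (np.asarray(a, dtype=float) for a in (y, mu, sd))
    lower, upper = mu - z * sd, mu + z * sd
    covered = (y >= lower) & (y <= upper)
    lengths = upper - lower
    return covered.mean(), lengths.mean(), np.median(lengths)
```

Reporting both the mean and the median length is informative because a few very wide intervals, as produced by the heavy-tailed Student models at the 99% level, inflate the mean much more than the median.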

Table 4.

Empirical coverage and length (in °C) of the predictive intervals at 300 dbar for LOOO cross-validation. The models are the same as in figure 5.

| confidence level | method | empirical coverage | mean length | median length |
|---|---|---|---|---|
| 68% | reference | 0.6607 | 0.7511 | 0.6435 |
| | space, Gaussian nugget | 0.7745 | 0.9749 | 0.8728 |
| | space–time, Gaussian nugget | 0.7800 | 0.8722 | 0.7816 |
| | space, Student nugget | 0.7261 | 0.8427 | 0.7621 |
| | space–time, Student nugget | 0.7389 | 0.7697 | 0.7049 |
| 95% | reference | 0.8755 | 1.4721 | 1.2612 |
| | space, Gaussian nugget | 0.9482 | 1.9108 | 1.7107 |
| | space–time, Gaussian nugget | 0.9490 | 1.7095 | 1.5320 |
| | space, Student nugget | 0.9432 | 1.9918 | 1.7525 |
| | space–time, Student nugget | 0.9452 | 1.8543 | 1.6192 |
| 99% | reference | 0.9329 | 1.9347 | 1.6575 |
| | space, Gaussian nugget | 0.9777 | 2.5112 | 2.2483 |
| | space–time, Gaussian nugget | 0.9793 | 2.2466 | 2.0134 |
| | space, Student nugget | 0.9835 | 3.9385 | 2.6417 |
| | space–time, Student nugget | 0.9844 | 3.8086 | 2.3976 |

The quantile plots, empirical coverages and interval lengths for the other pressure levels and for LOFO cross-validation are given in the electronic supplementary material. The LOOO conclusions at 10 dbar are similar to those at 300 dbar, except that the spatio-temporal models appear to have worse calibration than the spatial models. This might be an indication that the spatio-temporal dependence structure near the surface is more complicated than what our exponential covariance function with geometric anisotropy can capture. For LOOO at 1500 dbar, the spatial and spatio-temporal models are equally well calibrated, but there also appears to be less non-Gaussianity and the Student nugget provides only limited improvement over the Gaussian nugget. The basic conclusions that locally stationary modelling improves the calibration over the reference model and that the Student nugget improves over the Gaussian nugget still largely hold true for LOFO cross-validation, but here the spatio-temporal models are slightly worse calibrated than the spatial models at all pressures. The spatio-temporal models nonetheless provide distinct advantages in terms of interval length. In these cases, the optimal choice of intervals depends on the relative importance given to accurate calibration and short interval length.

(d). Local estimates of dependence structure

In this section, we study the locally estimated model parameters and demonstrate that they exhibit physically meaningful patterns. We analyse in detail the three-month spatio-temporal model with a Gaussian nugget (model 5). Further plots and analogous results for the other models are given in the electronic supplementary material.

We first investigate the estimated total variance ϕ̂+σ̂2 at 10, 300 and 1500 dbar (figure 6). At the first two pressure levels, we can clearly see the large variability in the western sides of the ocean basins caused by strong western boundary currents. For example, the Kuroshio Current off the coast of Japan, the Gulf Stream in the northwest Atlantic, the Brazil Current in the southwest Atlantic and the Agulhas retroflection and leakage areas around the southern tip of Africa (see §11.4.2 in [26]) can all be easily identified at both pressure levels. The East Australian Current is also visible at 300 dbar. By contrast, the eastern sides of the ocean basins have significantly less variability, as expected. A notable exception is the northeastern Atlantic at 1500 dbar, which has much more variability than other regions at that pressure level. We suspect that this can be attributed to eddies caused by the outflow from the Mediterranean Sea [43]. The bands of large variability at roughly 10° N and 10° S at 10 dbar in the Pacific Ocean might be related to Rossby waves at those latitudes.

Figure 6.

Estimated total variance ϕ̂+σ̂2 for the locally stationary three-month spatio-temporal model with a Gaussian nugget (model 5).

We next study the estimated ranges of zonal, meridional and temporal dependence in Argo temperature data. We do this by plotting maps of the correlation implied by the locally estimated covariance parameters at given zonal, meridional and temporal lags. We prefer to plot the maps in the correlation space instead of the range parameter space since there is some degree of ambiguity with regard to what fraction of the variability is attributed to the nugget effect in the MLE fits (at intermediate lags, a large nugget variance σ2, a small GP variance ϕ and a large range parameter θlat, θlon or θt can imply almost the same fitted correlation as a small nugget variance σ2, a large GP variance ϕ and a small range parameter θlat, θlon or θt). The interested reader can find maps of the individual model parameters in the electronic supplementary material.

Figure 7a, c, e shows the fitted correlations at the zonal lag Δxlon=800 km (with Δxlat=0, Δt=0 and taking latitude into account when converting degrees of longitude into kilometres). The range of zonal dependence varies considerably as a function of pressure, with much longer ranges near the surface than at greater depths. The large correlation values near the surface are most likely caused by interaction with the atmosphere. There is also a general tendency to have zonally elongated ranges in the Equatorial regions and the estimated ranges are generally longer in the Pacific Ocean than in the Indian or Atlantic Oceans. At 10 dbar in the Pacific Ocean, there is again evidence of patterns that are likely to be related to Equatorial Rossby and Kelvin waves.
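The fitted correlation at a fixed zonal lag can be sketched as below, assuming the zonal range θlon is expressed in degrees of longitude, a spherical Earth with about 111.32 km per degree, and an independent nugget. The constant and function name are assumptions for illustration.

```python
import math

KM_PER_DEGREE = 111.32  # approximate length of one degree on a spherical Earth (assumption)

def zonal_lag_correlation(lag_km, lat_deg, phi, sigma2, theta_lon_deg):
    """Correlation implied by an exponential covariance plus an independent nugget
    at a purely zonal lag, with the range given in degrees of longitude."""
    km_per_deg_lon = KM_PER_DEGREE * math.cos(math.radians(lat_deg))
    lag_deg = lag_km / km_per_deg_lon
    return phi / (phi + sigma2) * math.exp(-lag_deg / theta_lon_deg)
```

The factor ϕ/(ϕ + σ²) shows why correlations are easier to compare than raw range parameters: a larger nugget and a longer range can offset each other at intermediate lags. The meridional and temporal analogues follow by replacing the longitude conversion with a constant 111.32 km per degree of latitude or with a lag in days.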

Figure 7.

Fitted correlations at zonal lag Δxlon=800 km (a, c, e) and at meridional lag Δxlat=800 km (b, d, f) for the locally stationary three-month spatio-temporal model with a Gaussian nugget (model 5). To facilitate comparison, all the panels have the same colour scale.

Figure 7b, d, f shows the fitted correlations at the meridional lag Δxlat=800 km (with Δxlon=0 and Δt=0). The general conclusions are similar to above: the ranges are longer near the surface and in the Equatorial regions. The distinct patch of large correlations at 300 dbar in the Equatorial West Pacific is likely to be related to the Pacific thermocline, which is tilted westward along the Equator. The meridional ranges also show interesting patterns in areas where the Amazon River and the Congo River flow into the Atlantic Ocean. Notice that all the plots in figure 7 are on the same (logarithmic) colour scale and can thus be compared directly. This comparison shows that the dependence structure is clearly anisotropic with the zonal ranges longer than the meridional ones.

The fitted correlations at temporal lag Δt=10 days (with Δxlat=0 and Δxlon=0) are given in figure 8. While there are differences in the correlation patterns between the three pressure levels, the overall magnitude of the temporal correlation changes relatively little with pressure. There does seem to be a slight tendency for the temporal correlation to increase with pressure in the Southern Ocean and to decrease with pressure in most other areas, but these changes are much less pronounced than in the case of the zonal and meridional ranges (figure 7).

Figure 8.

Fitted correlations at temporal lag Δt=10 days for the locally stationary three-month spatio-temporal model with a Gaussian nugget (model 5).

Ninove et al. [44] have previously estimated dependence scales using Argo data. They estimate the range parameters (which are often called ‘decorrelation scales’ by oceanographers) by dividing the global ocean into large disjoint boxes followed by a weighted least-squares variogram fit to data within each box. They only consider spatial dependence and do not provide estimates of the temporal scales. In comparison, our moving-window approach includes the temporal dimension and provides information about the dependence structure at much finer horizontal resolution. It is also well established in the spatial statistics literature that MLE fits should be preferred over variogram fits [17]. Nevertheless, many of our conclusions qualitatively agree with those of Ninove et al. They also find longer ranges near the surface, zonal elongation in the tropics, strong anisotropy and shorter ranges in the Indian and Atlantic Oceans. However, we do not find evidence for the large increase in spatial ranges below 700 m that Ninove et al. observe. Instead, our spatial ranges are almost always shorter at 1500 dbar than at 300 dbar. We suspect that the effect seen by Ninove et al. is an artefact caused by the pre-Argo mean field that they used. This mean field is likely to be poorly constrained at depth, where few data were available before Argo, and any unmodelled non-stationary mean effects would then show up as increased spatial dependence in the empirical variograms.

5. Conclusion and outlook

We have demonstrated that the spatio-temporal dependence structure of ocean temperatures can be estimated from Argo data using local moving-window maximum-likelihood estimates of the covariance parameters. The resulting fully data-driven non-stationary anomaly fields and their uncertainties yield substantial improvements over existing state-of-the-art methods. The improvements in point prediction accuracy are comparable to deploying hundreds of new Argo floats. There is also evidence of non-Gaussian heavy tails in the temperature data and taking this into account is crucial for obtaining well-calibrated uncertainties. The estimated covariance models exhibit physically sensible patterns that can be analysed further to test theories of large-scale ocean circulation.

The choice of the covariance parameters has been a long-standing conundrum in Argo mapping. The values of these parameters affect in particular how small-scale features, such as mesoscale eddies, are displayed on the map. It is sometimes argued that the covariance parameters should be chosen so that eddies are smoothed out from the final map. We do not regard this as a good basis for producing a general-purpose Argo data product. Instead, we believe that the covariance function should ideally reflect all the ocean variability present in the observations, including eddies and other small-scale features. The resulting map captures as much of the physical variation as possible and can be customized post hoc for various purposes by applying operators to it. For example, an eddy-reduced map can be obtained by applying a low-pass filter to the map. This line of thinking allows the covariance function to model the actual ocean variability as well as possible, which is essentially what the data-driven length scales used in this paper are aiming to accomplish, and leaves the choice of which features to emphasize to the user of the data product, effectively decoupling these two issues.
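As an illustration of such post hoc filtering, the sketch below applies a crude low-pass filter, a boxcar moving average, to a gridded anomaly map stored as a (latitude, longitude) array. It assumes a complete grid with no land gaps, treats longitude as periodic and replicates the edge rows in latitude; the kernel choice and function name are illustrative only.

```python
import numpy as np

def boxcar_lowpass(field, half_lat, half_lon):
    """Moving-average filter on a (lat, lon) grid.

    Longitude is periodic (np.roll); latitude edges are replicated.
    Assumes a complete field without land (NaN) cells.
    """
    nlat = field.shape[0]
    padded = np.pad(field, ((half_lat, half_lat), (0, 0)), mode="edge")
    out = np.zeros(field.shape, dtype=float)
    count = 0
    for di in range(-half_lat, half_lat + 1):
        rows = padded[half_lat + di : half_lat + di + nlat]  # rows shifted by di
        for dj in range(-half_lon, half_lon + 1):
            out += np.roll(rows, dj, axis=1)  # columns shifted by dj, wrapping around
            count += 1
    return out / count
```

Features narrower than the averaging window, such as eddies, are strongly damped, while large-scale anomalies pass through largely unchanged. A Gaussian or spectral filter with an explicit cut-off scale would be a smoother alternative.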

Statistically, this work demonstrates how well suited moving-window GP regression is for handling massive modern non-stationary spatio-temporal datasets. This approach helps address in a straightforward manner challenges related to both non-stationarity and computational complexity, issues that affect the analysis of almost any large-scale environmental dataset. In the present work, we developed a version of the moving-window approach that uses a Student t-distributed nugget effect to address heavy tails in the temperature data. To the best of our knowledge, the Student nugget has not been previously used in conjunction with moving-window GP regression. While spatial and spatio-temporal moving-window techniques have been around since the seminal work of Haas [10,11], we feel that there is much room for further application and methodological development on this front.

To produce the interpolated temperature anomalies, we used the fairly simple exponential covariance function with geometric anisotropy along the zonal, meridional and temporal axes (see equation (3.2)). We have compared the model fit with empirical covariances in various regions and generally find the two to be in reasonably good agreement. We have also investigated more complex space–time covariance models, including the Matérn model [17], the Gneiting model [45] and geometric anisotropy that is not constrained to be aligned with the latitude–longitude coordinate axes. We experimented with these models in selected regions of the Pacific Ocean at 300 dbar. In each case, we found only minor improvements in the likelihood values and point predictions compared with the simple exponential covariance function. At the same time, the estimated covariance parameters became very challenging to interpret and validate, since several combinations of the model parameters can represent almost the same covariance structure. Furthermore, the likelihood computations were much too slow to be practical on a global scale. These extensions could still be useful in other regions or at other pressure levels, but their interpretability and computational cost remain problematic. We have also experimented with various ways of adding ocean depth to the distance metric in equation (3.2), as is done, for example, in [7], but found that the corresponding range parameter is estimated to be very large compared with typical depth differences, which effectively removes this component from the model. Finally, we explored accounting for land barriers using a simple line-of-sight approach, in which data points within the moving window that have no line of sight to the grid point at the centre are discarded from the computations. We found that this way of handling land does not change the estimates much and can in fact degrade performance, since potentially useful data are left out of each prediction. An interesting future extension would be to add a velocity term to the covariance, as is done, for example, in the mapping of satellite altimetry data [46].
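
The anisotropic exponential covariance discussed above can be sketched as follows. We assume here that equation (3.2) has the common form k = φ exp(−√((Δlat/θ_lat)² + (Δlon/θ_lon)² + (Δt/θ_t)²)), with separations in degrees and days; the exact parametrization in the paper may differ. The optional depth term is a hypothetical illustration of adding ocean depth to the distance metric, and it shows why a very large estimated depth range parameter effectively removes that component.

```python
import math


def aniso_exp_cov(dlat, dlon, dt, phi, theta_lat, theta_lon, theta_t,
                  ddepth=0.0, theta_depth=math.inf):
    """Exponential covariance with axis-aligned geometric anisotropy (assumed form).

    dlat, dlon: meridional and zonal separations (degrees); dt: time lag (days).
    phi: partial sill; theta_*: range parameter for each axis.
    ddepth, theta_depth: optional, hypothetical ocean-depth term in the distance.
    """
    r2 = (dlat / theta_lat) ** 2 + (dlon / theta_lon) ** 2 + (dt / theta_t) ** 2
    # With theta_depth = inf (the default) this term is exactly 0.0.
    r2 += (ddepth / theta_depth) ** 2
    return phi * math.exp(-math.sqrt(r2))
```

For example, with separations (1°, 2°, 5 days) and ranges (2°, 4°, 10 days), each axis contributes 0.25 to the squared scaled distance; adding a 500 m depth difference with a depth range of 10⁶ m changes the covariance by less than 10⁻⁶, so the depth term is practically inert.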

While the Student prediction intervals clearly yield improved uncertainty quantification, the present implementation of the Student nugget suffers from algorithmic instabilities, as is evident from the plots of the estimated model parameters given in the electronic supplementary material. These instabilities are likely to be related to the non-concave optimization problem that needs to be solved as part of the Laplace approximation [35]. Alternatively, it might be that the Laplace approximation itself is not appropriate in some parts of the ocean. We experimented with an alternative implementation of the Laplace approximation [47] but observed similar instabilities. Further research is needed to understand the precise cause of these instabilities before the Student models can be fully recommended for use in actual data products.
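
One way to see where the non-concavity can come from: the Laplace approximation requires finding the mode of an objective that adds a Gaussian log-prior (concave) to one Student-t log-likelihood term per residual. The sketch below, our illustration rather than part of the original analysis, shows that the Student-t log-density is concave in the residual r only for |r| < √ν σ, where ν (`nu`) is the degrees of freedom and σ (`sigma`) the scale. Large residuals, precisely the heavy-tailed observations the Student nugget is meant to accommodate, contribute positive curvature, so the objective need not be concave and may have several local optima.

```python
import math


def student_t_logpdf(r, nu, sigma):
    """Log-density of a scaled Student-t distribution with nu degrees of freedom."""
    return (math.lgamma((nu + 1) / 2) - math.lgamma(nu / 2)
            - 0.5 * math.log(nu * math.pi * sigma ** 2)
            - (nu + 1) / 2 * math.log1p(r ** 2 / (nu * sigma ** 2)))


def student_t_logpdf_curvature(r, nu, sigma):
    """Second derivative of student_t_logpdf with respect to r.

    d^2/dr^2 log p(r) = -(nu + 1) * (nu*sigma^2 - r^2) / (nu*sigma^2 + r^2)^2,
    which is negative (concave) for |r| < sqrt(nu)*sigma and positive beyond it.
    """
    s2 = nu * sigma ** 2
    return -(nu + 1) * (s2 - r ** 2) / (s2 + r ** 2) ** 2
```

For ν = 3 and σ = 1, the curvature is −4/3 at r = 0 but +1/6 at r = 3, so a single large residual already makes its likelihood term locally convex.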

This work has only scratched the surface in terms of the statistical research that can be done with Argo data. We sketch below some potential extensions and directions for future work.

In this work, we have focused on Argo temperature data only. In principle, similar locally stationary interpolation is also applicable to salinity data, but the challenge is that, especially at greater depths, the variability of the salinity field is so small that instrumental errors can no longer be ignored. This means that a more careful modelling of the nugget effect that includes measurement error is needed. We also note that, when mapping both temperature and salinity, one should ideally take their correlation into account, which requires modelling the spatio-temporal cross-covariance between the two fields. This cross-covariance function can also be of scientific interest in its own right.
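
To make these two modelling needs concrete, here is a minimal sketch of one of the simplest valid bivariate models: a separable (intrinsic coregionalization) cross-covariance in which temperature and salinity share a single exponential correlation function and are linked by a correlation coefficient `rho`. This model, the one-dimensional locations and all parameter names are assumptions for illustration, not a proposal from the paper. The salinity nugget is split into microscale variation and an instrumental error variance, reflecting the point above that measurement error cannot be ignored for salinity.

```python
import numpy as np


def bivariate_separable_cov(x, sd_t, sd_s, rho, range_param,
                            micro_t, micro_s, meas_err_s):
    """Joint covariance matrix of (temperature, salinity) at 1-D locations x.

    Assumed model: Cov(Z_a(x), Z_b(x')) = B[a, b] * exp(-|x - x'| / range_param),
    with B = [[sd_t^2, rho*sd_t*sd_s], [rho*sd_t*sd_s, sd_s^2]] and |rho| < 1,
    which guarantees a valid (positive definite) joint covariance.
    The salinity nugget = microscale variance + instrumental error variance.
    Ordering: all temperature locations first, then all salinity locations.
    """
    x = np.asarray(x, dtype=float)
    R = np.exp(-np.abs(x[:, None] - x[None, :]) / range_param)  # shared correlation
    B = np.array([[sd_t ** 2, rho * sd_t * sd_s],
                  [rho * sd_t * sd_s, sd_s ** 2]])               # between-variable covariance
    nugget = np.concatenate([np.full(x.size, micro_t),
                             np.full(x.size, micro_s + meas_err_s)])
    return np.kron(B, R) + np.diag(nugget)
```

The separable form is easy to keep valid but forces both fields to share the same spatio-temporal dependence scales; a realistic temperature-salinity model would likely need more flexible cross-covariances.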

Maps of the locally estimated covariance models (figures 6–8) sometimes show zonal or meridional stripes that are related to the sharp boundary of the 20°×20° moving window. These stripes could be removed by replacing the moving window with a smooth kernel function, as in [48]. Since it is often possible to represent almost the same covariance structure by various combinations of the model parameters, we also sometimes see abrupt transitions from one parameter configuration to another. It should be possible to fix this by finding a way to borrow strength across nearby grid points in the parameter estimates. One could, for instance, envision a Bayesian hierarchical model in which the maps of the local model parameters are themselves modelled as spatially dependent random fields, as in [49]. However, carrying out the computations for such a model at the scale of the global ocean seems challenging.
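
The difference between a sharp window and a smooth kernel shows up in the weight an observation receives as the window centre moves. The sketch below uses a tricube taper purely as an example (the kernel in [48] may differ), and the function names are hypothetical. With a box window, an observation just outside the 10° half-width of a 20°×20° window has weight 0, and shifting the centre by 0.1° can switch it to weight 1; with the taper, its weight barely changes, so locally estimated parameters should vary more smoothly between neighbouring grid points.

```python
def box_weight(d, half_width):
    """Sharp moving window: an observation is either fully in or fully out."""
    return 1.0 if abs(d) <= half_width else 0.0


def tricube_weight(d, half_width):
    """Smooth taper: 1 at the centre, decaying continuously to 0 at the edge."""
    u = abs(d) / half_width
    return (1.0 - u ** 3) ** 3 if u < 1.0 else 0.0


def window_weight_2d(dlat, dlon, half_width, weight_fn):
    """Weight for a square latitude-longitude window as a product of 1-D weights."""
    return weight_fn(dlat, half_width) * weight_fn(dlon, half_width)
```

For an observation 10.05° from the centre, moving the centre 0.1° closer changes the box weight from 0 to 1, but changes the tricube weight by only about 3×10⁻⁶.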

The type of non-Gaussianity considered in this work is spatially and temporally uncorrelated Student t-distributed heavy tails. However, the remaining miscalibration in figure 5 indicates that either there is room for improvement in the covariance modelling or there is some non-Gaussian structure in the data that remains unaccounted for. Further exploratory analysis of the mean-subtracted residuals reveals, for example, the presence of skewness (especially near the surface, as can be expected from previous analyses of sea surface temperature data [42]) and regions where the distribution of the residuals appears to be multimodal. It is also highly likely that the heavy-tailed features, which at least partially correspond to eddies, are spatially and temporally correlated. Hence, an important area for future work would be to develop tools for understanding the spatial and temporal dependence scales of these heavy-tailed features. This would enable us to better understand how long these features persist and how large the affected regions are. Capturing all these effects would require developing space–time models with more versatile non-Gaussian structure. Stochastic partial differential equations, as in [50], may provide a way to construct such models.
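
Exploratory checks of the kind described above can start from simple moment statistics of the mean-subtracted residuals. The sketch below computes the moment-based (biased) sample skewness and excess kurtosis; both are near zero for Gaussian data, so clearly non-zero values in a region point to skewness or heavy tails. The function name is hypothetical, and these two statistics alone cannot reveal multimodality or the spatio-temporal dependence of heavy-tailed features.

```python
def skewness_and_excess_kurtosis(residuals):
    """Moment-based sample skewness and excess kurtosis of a list of residuals.

    Positive skewness indicates a longer right tail; positive excess kurtosis
    indicates heavier tails than a Gaussian distribution.
    """
    n = len(residuals)
    if n < 2:
        raise ValueError("need at least two residuals")
    mean = sum(residuals) / n
    m2 = sum((r - mean) ** 2 for r in residuals) / n
    if m2 == 0:
        raise ValueError("residuals have zero variance")
    m3 = sum((r - mean) ** 3 for r in residuals) / n
    m4 = sum((r - mean) ** 4 for r in residuals) / n
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3.0
```

For example, the symmetric sample [-2, -1, 0, 1, 2] has zero skewness and excess kurtosis −1.3 (lighter tails than Gaussian), while [0, 0, 0, 10] has skewness 2/√3 ≈ 1.15.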

In the present work, we have not exploited dependence across pressure levels. A natural extension would be to carry out full four-dimensional mapping by including the vertical dimension in the covariance structure. In principle, the moving-window approach can be easily extended to this situation by considering observations at nearby pressures. However, modelling the vertical covariance seems non-trivial since the vertical direction contains phenomena that are fundamentally different from the other dimensions. For example, ocean stratification can cause abrupt vertical changes, while eddies exhibit coherent vertical structure. One would also ideally like to find a way to incorporate the knowledge that, in a stably stratified ocean, density needs to be a monotonically increasing function of pressure, so any violations of monotonicity must be constrained to be transient and small.
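
As a simple illustration of the stability constraint above, the following sketch flags levels at which a predicted density profile decreases with pressure. It is a hypothetical post hoc check, not part of the mapping method. We assume the input is a potential density profile (the quantity normally used to assess static stability) on strictly increasing pressure levels; the tolerance `tol` is an arbitrary illustrative parameter. A four-dimensional model would ideally build this knowledge into the model itself rather than check it afterwards.

```python
def density_inversions(pressure, density, tol=0.0):
    """Indices i at which density drops by more than tol from level i to level i+1.

    Assumes pressure is strictly increasing (i.e. increasing depth). In a stably
    stratified ocean density should not decrease with pressure, so each returned
    index marks a (possibly spurious) static instability in the profile.
    """
    if len(pressure) != len(density):
        raise ValueError("pressure and density must have the same length")
    if any(p1 <= p0 for p0, p1 in zip(pressure, pressure[1:])):
        raise ValueError("pressure must be strictly increasing")
    return [i for i in range(len(density) - 1)
            if density[i + 1] < density[i] - tol]
```

With a tolerance, small inversions can be tolerated as transient, in the spirit of allowing only small and short-lived violations of monotonicity.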

Here we have only considered point-wise uncertainties. However, many scientifically important quantities are functionals of the temperature and salinity fields (or of some field derived from these two quantities). For example, ocean heat content is essentially a three-dimensional integral of the temperature field. Providing uncertainties for functionals requires access to predictive covariances or conditional simulations, but neither can be obtained directly from the moving-window approach considered here. It should, however, be possible to combine the local covariance models into a valid global non-stationary model using the approach developed in [49]. This global model could then be used to compute the predictive covariance matrix or to produce conditional simulations, although it is not immediately clear whether all the necessary computations are feasible on the scale of the Argo dataset.
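
The following worked example shows why point-wise uncertainties are not enough for functionals. Suppose a quantity such as heat content is approximated by a weighted sum Σᵢ wᵢ Tᵢ of temperature anomalies over grid cells; the weights, which would absorb cell volumes, density and heat capacity, are assumptions here. Its variance is then wᵀΣw, which depends on the off-diagonal entries of the predictive covariance matrix Σ. Keeping only the point-wise variances on the diagonal of Σ can badly misstate the uncertainty.

```python
def functional_variance(weights, cov):
    """Variance of the linear functional sum_i w_i T_i: w^T Sigma w."""
    n = len(weights)
    return sum(weights[i] * cov[i][j] * weights[j]
               for i in range(n) for j in range(n))


def diagonal_only_variance(weights, cov):
    """Same quantity using point-wise variances only (correlations ignored)."""
    return sum(w * w * cov[i][i] for i, w in enumerate(weights))
```

For three cells with unit weights, unit variances and pairwise correlation 0.5, the full variance is 6, whereas the diagonal-only value is 3, so ignoring positive correlations underestimates the variance by a factor of two.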

Supplementary Material

Acknowledgments

We are grateful to Fred Bingham, Chen Chen, Bruce Cornuelle, Donata Giglio, John Gilson, Sarah Gille, Alison Gray, Malte Jansen, Alice Marzocchi, Matt Mazloff, Breck Owens, Dean Roemmich, Megan Scanderbeg and Nathalie Zilberman for many discussions about Argo data, physical oceanography and previous mapping algorithms as well as for helpful feedback on preliminary results throughout this project. We would also like to thank the four anonymous referees and the editor for their detailed and insightful feedback, which led to a much improved presentation of these results. This work was carried out while M.K. was at the University of Chicago, Department of Statistics, and at the Statistical and Applied Mathematical Sciences Institute. This work was completed in part using computational resources provided by the University of Chicago Research Computing Center.

Data accessibility

The results presented in this paper are based on the 8 May 2017 snapshot of the Argo GDAC (http://doi.org/10.17882/42182#50059). The Matlab code used to produce the results is available at https://github.com/mkuusela/ArgoMappingPaper. Argo data were collected and made freely available by the International Argo Program and the national programs that contribute to it (http://www.argo.ucsd.edu, http://argo.jcommops.org). The Argo Program is part of the Global Ocean Observing System.

Authors' contributions

M.K. developed the statistical methodology, wrote the code, produced the analyses and drafted the manuscript. M.L.S. conceived the project, provided guidance and helped prepare the manuscript. Both authors gave final approval for publication.

Competing interests

We have no competing interests.

Funding

This work was supported by the STATMOS Research Network (NSF awards nos. 1106862, 1106974 and 1107046), the US Department of Energy grant no. DE-SC0002557 and the National Science Foundation grant no. DMS-1638521 to the Statistical and Applied Mathematical Sciences Institute.

Disclaimer

Any opinions, findings and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation or the US Department of Energy.

References

- 1. Roemmich D, Church J, Gilson J, Monselesan D, Sutton P, Wijffels S. 2015. Unabated planetary warming and its ocean structure since 2006. Nat. Clim. Change 5, 240–245. (doi:10.1038/nclimate2513)

- 2. Gray AR, Riser SC. 2014. A global analysis of Sverdrup balance using absolute geostrophic velocities from Argo. J. Phys. Oceanogr. 44, 1213–1229. (doi:10.1175/JPO-D-12-0206.1)

- 3. Zhang Z, Wang W, Qiu B. 2014. Oceanic mass transport by mesoscale eddies. Science 345, 322–324. (doi:10.1126/science.1252418)

- 4. Hennon TD, Riser SC, Alford MH. 2014. Observations of internal gravity waves by Argo floats. J. Phys. Oceanogr. 44, 2370–2386. (doi:10.1175/JPO-D-13-0222.1)

- 5. Cheng L, Zhu J, Sriver RL. 2015. Global representation of tropical cyclone-induced short-term ocean thermal changes using Argo data. Ocean Sci. 11, 719–741. (doi:10.5194/os-11-719-2015)

- 6. Chang Y-S, Zhang S, Rosati A, Delworth TL, Stern WF. 2013. An assessment of oceanic variability for 1960–2010 from the GFDL ensemble coupled data assimilation. Clim. Dyn. 40, 775–803. (doi:10.1007/s00382-012-1412-2)

- 7. Roemmich D, Gilson J. 2009. The 2004–2008 mean and annual cycle of temperature, salinity, and steric height in the global ocean from the Argo Program. Prog. Oceanogr. 82, 81–100. (doi:10.1016/j.pocean.2009.03.004)

- 8. Schmidtko S, Johnson GC, Lyman JM. 2013. MIMOC: a global monthly isopycnal upper-ocean climatology with mixed layers. J. Geophys. Res. Oceans 118, 1658–1672. (doi:10.1002/jgrc.20122)

- 9. Good SA, Martin MJ, Rayner NA. 2013. EN4: quality controlled ocean temperature and salinity profiles and monthly objective analyses with uncertainty estimates. J. Geophys. Res. Oceans 118, 6704–6716. (doi:10.1002/2013jc009067)

- 10. Haas TC. 1990. Lognormal and moving window methods of estimating acid deposition. J. Am. Stat. Assoc. 85, 950–963. (doi:10.1080/01621459.1990.10474966)

- 11. Haas TC. 1995. Local prediction of a spatio-temporal process with an application to wet sulfate deposition. J. Am. Stat. Assoc. 90, 1189–1199. (doi:10.1080/01621459.1995.10476625)

- 12. Hammerling DM, Michalak AM, Kawa SR. 2012. Mapping of CO2 at high spatiotemporal resolution using satellite observations: global distributions from OCO-2. J. Geophys. Res. Atmos. 117, D06306. (doi:10.1029/2011JD017015)

- 13. Hammerling DM, Michalak AM, O'Dell C, Kawa SR. 2012. Global CO2 distributions over land from the Greenhouse Gases Observing Satellite (GOSAT). Geophys. Res. Lett. 39, L08804. (doi:10.1029/2012GL051203)

- 14. Tadić JM, Qiu X, Yadav V, Michalak AM. 2015. Mapping of satellite Earth observations using moving window block kriging. Geosci. Model Dev. 8, 3311–3319. (doi:10.5194/gmd-8-3311-2015)

- 15. Tadić JM, Qiu X, Miller S, Michalak AM. 2017. Spatio-temporal approach to moving window block kriging of satellite data v1.0. Geosci. Model Dev. 10, 709–720. (doi:10.5194/gmd-10-709-2017)

- 16. Cressie NAC. 1993. Statistics for spatial data, revised edn. New York, NY: John Wiley & Sons.

- 17. Stein ML. 1999. Interpolation of spatial data: some theory for kriging. Berlin, Germany: Springer.

- 18. Daley R. 1991. Atmospheric data analysis. Cambridge, UK: Cambridge University Press.

- 19. Bretherton FP, Davis RE, Fandry CB. 1976. A technique for objective analysis and design of oceanographic experiments applied to MODE-73. Deep Sea Res. 23, 559–582. (doi:10.1016/0011-7471(76)90001-2)

- 20. Rasmussen CE, Williams CKI. 2006. Gaussian processes for machine learning. Cambridge, MA: MIT Press.

- 21. Cressie N, Wikle CK. 2011. Statistics for spatio-temporal data. New York, NY: John Wiley & Sons.

- 22. Gray AR, Riser SC. 2015. A method for multiscale optimal analysis with application to Argo data. J. Geophys. Res. Oceans 120, 4340–4356. (doi:10.1002/2014jc010208)

- 23. Gaillard F. 2012. ISAS-Tool version 6: method and configuration. Technical Report LPO-12-02, Ifremer, France. See https://archimer.ifremer.fr/doc/00115/22583/20271.pdf.

- 24. Gaillard F, Autret E, Thierry V, Galaup P, Coatanoan C, Loubrieu T. 2009. Quality control of large Argo datasets. J. Atmos. Oceanic Technol. 26, 337–351. (doi:10.1175/2008JTECHO552.1)

- 25. Roemmich D, Gilson J. 2016. Roemmich–Gilson Argo climatology. See http://sio-argo.ucsd.edu/RG_Climatology.html (accessed 1 December 2016).