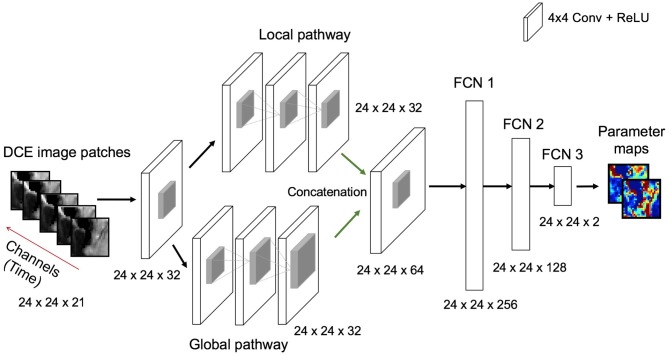

Figure 5.

Illustration of the deep learning architecture used to estimate PK parameter maps from the DCE image patch time series given as input. All convolutional layers learn 32 filters, except the concatenation layer, which learns 64 filters. Every convolutional layer uses a 4 × 4 filter. ReLU is used as the non-linear activation function after each convolutional and fully connected layer. The output size of each layer operation [e.g., input, convolution and full connection (FCN)] is displayed at the bottom of each layer.
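To make the layer sizes in the caption concrete, the short Python sketch below computes the spatial output size and the number of learnable parameters of a 4 × 4 convolutional layer, and applies ReLU element-wise. The caption does not state stride, padding or the input patch size, so the sketch assumes stride 1 and no padding by default. The helper names (`conv_output_size`, `conv_param_count`, `relu`) and the 16 × 16 patch used in the example are hypothetical illustrations, not the authors' implementation.

```python
from typing import List


def conv_output_size(size: int, kernel: int = 4, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size along one axis of a 2-D convolution.

    Assumption: stride 1 and no padding unless specified, since the caption
    only gives the 4 x 4 filter size.
    """
    return (size + 2 * padding - kernel) // stride + 1


def conv_param_count(in_channels: int, filters: int = 32, kernel: int = 4) -> int:
    """Learnable weights plus one bias per filter for a kernel x kernel conv layer."""
    return in_channels * kernel * kernel * filters + filters


def relu(values: List[float]) -> List[float]:
    """Element-wise ReLU, the activation applied after each conv and FC layer."""
    return [max(0.0, v) for v in values]


if __name__ == "__main__":
    # Hypothetical 16 x 16 patch: one 4 x 4 convolution (stride 1, no padding)
    # shrinks each spatial axis by 3 pixels.
    print(conv_output_size(16))  # 13
    # A 4 x 4 layer with 32 filters applied to a 32-channel input.
    print(conv_param_count(32))  # 16416
    print(relu([-1.0, 0.5]))  # [0.0, 0.5]
```

Counting parameters this way shows why the layer with 64 filters is the most expensive one in the network: with an equal number of input channels, it holds twice as many weights as a 32-filter layer.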