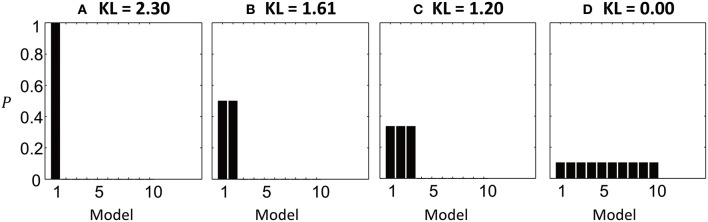

Figure 2.

Illustration of the KL-divergence in four simulated model comparisons. The bars show the posterior probabilities of 10 models, and the titles give the computed KL-divergence of the posterior from the prior. (A) Model 1 has posterior probability close to 1, so the KL-divergence approaches its maximum of ln 10 ≈ 2.3. (B) The probability mass is shared between models 1 and 2, reducing the KL-divergence. (C) The probability mass is shared between 3 models, reducing the KL-divergence further. (D) The KL-divergence is minimized when all models are equally likely, meaning no information has been gained relative to the prior.
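The four cases in the caption can be reproduced with a minimal sketch. It assumes a uniform prior over the 10 models (consistent with the stated maximum of ln 10) and uses idealized posteriors, which are illustrative stand-ins rather than the simulated values plotted in the figure. The function name `kl_from_prior` and the `examples` dictionary are hypothetical.

```python
import math

def kl_from_prior(posterior, prior=None):
    """Return KL(posterior || prior) in nats; the prior defaults to uniform."""
    n = len(posterior)
    if prior is None:
        prior = [1.0 / n] * n  # assumption: uniform prior over the models
    # Terms with zero posterior probability contribute 0 (limit of p ln p as p -> 0).
    return sum(p * math.log(p / q) for p, q in zip(posterior, prior) if p > 0)

# Idealized posteriors over 10 models (assumed, not taken from the figure).
examples = {
    "A": [1.0] + [0.0] * 9,          # all mass on model 1
    "B": [0.5, 0.5] + [0.0] * 8,     # mass shared between models 1 and 2
    "C": [1 / 3] * 3 + [0.0] * 7,    # mass shared between 3 models
    "D": [0.1] * 10,                 # all models equally likely
}

for label, post in examples.items():
    print(label, round(kl_from_prior(post), 2))
```

Under these assumptions, spreading the mass evenly over k of the 10 models gives a KL-divergence of ln(10/k), so the value falls from ln 10 in case A to 0 in case D.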