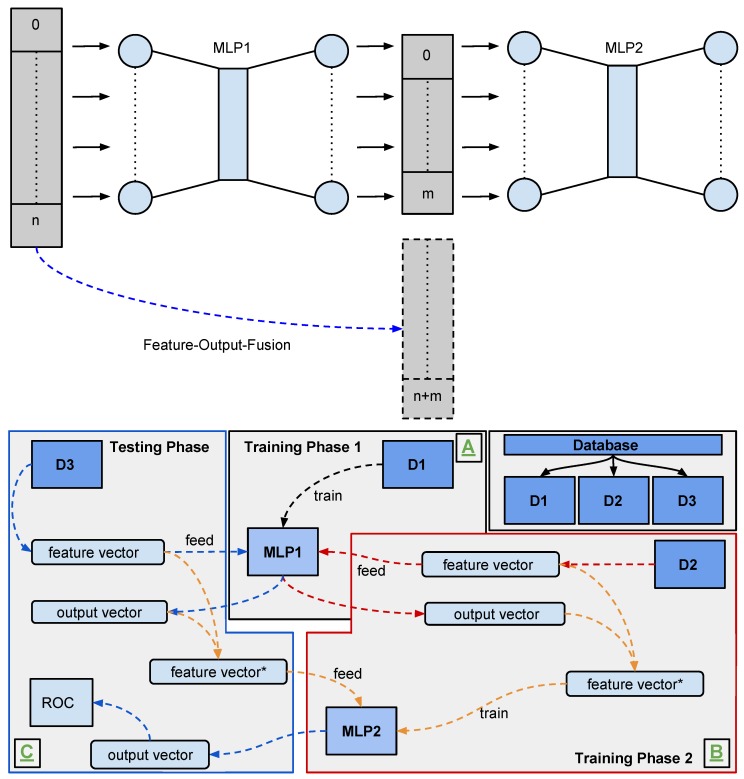

Figure 13.

A sketch of our two-stage MLP model (top) and the associated training procedure (bottom). For an input histogram of size and an output layer size of neurons, the secondary MLP receives the primary MLP’s output activations together with a second histogram as input. In our training procedure, we split our dataset D into three parts , and , so that the two MLPs are trained on independent parts of the dataset and then tested on unseen data.
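The data flow in the caption can be illustrated with a minimal NumPy sketch. It is not the authors' implementation. The histogram size (32 bins), primary output size (8 neurons), hidden width (64), ReLU activations, random initialisation, and the equal three-way split are all hypothetical choices. The assignment of one part to each stage plus a held-out test part is also an assumption: we read it from "independent parts of the dataset" and "unseen data". Training is omitted. The sketch only shows how the secondary MLP consumes the primary MLP's output concatenated with a second histogram, and how D can be cut into three disjoint parts.

```python
import numpy as np

rng = np.random.default_rng(0)

def init_mlp(sizes, rng):
    """Hypothetical helper: random (weight, bias) pairs for a fully connected net."""
    return [(rng.normal(0.0, 0.1, size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def mlp_forward(params, x):
    """Forward pass with ReLU hidden layers and a linear output layer (assumed activations)."""
    for i, (w, b) in enumerate(params):
        x = x @ w + b
        if i < len(params) - 1:
            x = np.maximum(x, 0.0)
    return x

def two_stage_forward(primary, secondary, hist_a, hist_b):
    """Secondary MLP input = primary MLP output activations concatenated with a second histogram."""
    out_primary = mlp_forward(primary, hist_a)
    return mlp_forward(secondary, np.concatenate([out_primary, hist_b], axis=-1))

def split_three(n_samples, rng):
    """Shuffle sample indices and cut them into three disjoint, near-equal parts."""
    idx = rng.permutation(n_samples)
    return np.array_split(idx, 3)

# Hypothetical sizes: 32-bin histograms, primary output layer of 8 neurons.
n_bins, n_out = 32, 8
primary = init_mlp([n_bins, 64, n_out], rng)
secondary = init_mlp([n_out + n_bins, 64, 1], rng)

hist_a = rng.random((5, n_bins))   # batch of 5 input histograms for the primary MLP
hist_b = rng.random((5, n_bins))   # batch of 5 additional histograms for the secondary MLP
y = two_stage_forward(primary, secondary, hist_a, hist_b)
print(y.shape)  # (5, 1)

# Assumed usage: d1 trains the primary MLP, d2 trains the secondary MLP
# (primary frozen), and d3 is held out for testing.
d1, d2, d3 = split_three(300, rng)
print(len(d1), len(d2), len(d3))  # 100 100 100
```

Concatenating along the last axis means the secondary MLP's input width must equal the primary output size plus the histogram size, which is why `secondary` is built with `n_out + n_bins` inputs.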