Abstract

Can we hear the sound of our brain? Is there any technique that can enable us to hear the neuro-electrical impulses originating from the different lobes of the brain? The answer to both questions is yes. In this paper we present a novel method to sonify electroencephalogram (EEG) data recorded in a “control” state as well as under the influence of a simple acoustic stimulus, a tanpura drone. The tanpura has a very simple construction, yet its drone, which is generally used to create an ambience during a musical performance, exhibits very complex acoustic features. Hence, for this pilot project we chose to study the nonlinear correlations between the musical stimulus (the tanpura drone as well as music clips) and sonified EEG data. To date, no study has dealt with the direct correlation between a bio-signal and its acoustic counterpart or examined how that correlation varies under the influence of different types of stimuli. This study tries to bridge this gap and looks for a direct correlation between the music signal and EEG data using a robust mathematical microscope called Multifractal Detrended Cross Correlation Analysis (MFDXA). For this, we took EEG data of 10 participants in a 2 min “control condition” (i.e. with white noise) and in a 2 min “tanpura drone” (musical stimulus) listening condition. The same experimental paradigm was repeated for two emotional music clips, “Chayanat” and “Darbari Kanada”. These are well-known Hindustani classical ragas which conventionally portray contrasting emotional attributes, as also verified from human response data. Next, the EEG signals from different electrodes were sonified and the MFDXA technique was used to assess the degree of correlation (the cross-correlation coefficient γx) between the EEG signals and the music clips. The variation of γx for different lobes of the brain during the course of the experiment provides interesting new information regarding the extraordinary ability of musical stimuli to engage several areas of the brain significantly, unlike other stimuli (which engage specific domains only).

Keywords: EEG, Sonification, Tanpura drone, Hindustani Ragas, MFDXA, Cross-correlation coefficient

Introduction

Can we hear our brain? If we can, how will it sound? Will the sound of our brain differ from one cognitive state to another? These are the questions that opened the way to a plethora of research by neuroscientists on sonifying EEG data. The first thing to be addressed while dealing with sonification is what is meant by ‘sonification’. The International Conference on Auditory Display (ICAD) defines it as “the use of non-speech audio to convey information; more specifically sonification is the transformation of data relations into perceived relations in an acoustic signal for the purposes of facilitating communication or interpretation” (Kramer et al. 2010). Hermann (2008) gives a more classic definition of sonification as “a technique that uses data as input, and generates sound signals (eventually in response to optional additional excitation or triggering)”.

History of sonifying EEG waves

Over the past decade, sonification or the transformation of data into sound, has received considerable attention from the scientific community. Sonification is the data-driven sound synthesis designed to make specific features within the data perceptible (Kramer 1994). It has been applied to human EEG in different approaches (Hermann et al. 2002; Meinicke et al. 2004), most of which are not easily implemented for real-time applications (Baier et al. 2007). While the sense of vision continues to predominate the hierarchy of senses, auditory data representation has been increasingly recognized as a legitimate technique to complement existing modes of data display (Supper 2012). Sonification is, however, not an exclusively scientific endeavor; the technique has been commonly applied within the domain of experimental music. This poses a challenge to the field, as some argue for a sharper distinction between scientific and artistic sonification, whereas others proclaim openness to both sides of the science–art spectrum (Supper 2012).

The interplay between science and art is beautifully demonstrated by the sonification of the EEG, a practice that was first described in the early 1930s and subsequently gave rise to a variety of medical and artistic applications. In neurophysiology, sonification was used to complement visual EEG analysis, which had become increasingly complex by the middle of the 20th century. In experimental music, the encounter between physicist Edmond Dewan (1931–2009) and composer Alvin Lucier (b. 1931) inspired the first brainwave composition Music for the solo performer (1965). By the end of the century, advances in EEG and sound technology ultimately gave rise to brain–computer music interfaces (BCMIs), a multidisciplinary achievement that has enhanced expressive abilities of both patients and artists (Miranda and Castet 2014). The technique of sonification though underexposed, beautifully illustrates the way in which the domains of science and art may, perhaps more than occasionally, enhance one another.

In 1929, the German psychiatrist Hans Berger (1873–1941) published his first report on the human EEG, in which he described a method for recording electrical brain activity by means of non-invasive scalp electrodes. In 1934, the renowned neurophysiologist and Nobel laureate Edgar Adrian (1889–1977) first described the transformation of EEG data into sound (Adrian and Matthews 1934). Whereas these early experiments were primarily concerned with the recording of brainwaves in health, the EEG would soon prove to be of particular value to clinical diagnostics. Indeed, over the following decades, ‘EEG reading’ would greatly enhance neurological diagnostics. At the same time, ‘EEG listening’ continued to provide a useful complement to visual analysis, as various EEG phenomena were more readily identified by ear, such as the ‘alpha squeak’, a drop in alpha rhythm frequency upon closing the eyes (van Leeuwen and Bekkering 1958), the ‘chirping’ sound associated with sleep spindles and the low-pitch sound typical for absence seizures. Interestingly, the use of sonification for the detection of sleep disorders and epileptic seizures has recently resurfaced (Väljamäe et al. 2013). Besides its undisputed value as a diagnostic tool, the impact of the EEG extended far beyond the domain of neurophysiology. With the rise of cybernetics after the end of World War II, the conceptual similarities between the nervous system and the electronic machine were increasingly recognized, inspiring both scientists and artists to explore the boundaries between the ‘natural’ and the ‘artificial’. In this context, the EEG came to be regarded as a promising tool to bridge the gap between mind and machine, potentially allowing for the integration of mental and computational processes into one single comprehensive system.

Applications of EEG sonification

The sonification of brainwaves took a new turn by the end of the 1960s, when prevalent cybernetic theories and scientific breakthroughs gave rise to the field of biofeedback, in which ‘biological’ processes were measured and ‘fed back’ to the same individual in order to gain control over those processes (Rosenboom 1990). In 1958, American psychologist Joe Kamiya (b. 1925) had first demonstrated that subjects could operantly learn to control their alpha activity when real-time auditory feedback was provided, but the technique only became popular after his accessible report in Psychology Today (1968). As the alpha rhythm had long been associated with a calm state of mind, EEG biofeedback (later called neurofeedback) was soon adopted for the treatment of various neuropsychiatric conditions, such as attention deficit hyperactivity disorder (ADHD), depression and epilepsy (Rosenboom 1999). In therapeutic neurofeedback, the auditory display of brainwaves proved to be indispensable, as subjects could not possibly watch their own alpha activity while holding their eyes closed. Elgendi et al. (2013) provide feature-dependent real-time EEG sonification for the diagnosis of neurological diseases.

Following Lucier’s Music for the solo performer, various experimental composers started to incorporate the principles of neurofeedback into their brainwave compositions, allowing for the continuous fine-tuning of alpha activity based on auditory feedback of previous brainwaves (Rosenboom 1999). In 1973, computer scientist Jacques Vidal published his landmark paper Toward Direct Brain-Computer Communication, in which he proposed the EEG as a tool for mental control over external devices (Miranda and Castet 2014). This first conceptualization of a brain–computer interface naturally relied on advances in computational technology, but equally on the discovery of a new sort of brain signal: the event-related potential (ERP). To enhance musical control further, BCMI research recently turned towards ERP analysis (Miranda and Castet 2014). The detection of steady-state visual-evoked potentials (focal electrophysiological responses to repeated visual stimuli) has proven to be particularly useful, as subjects are able to voluntarily control musical parameters simply by gazing at reversing images presented on a computer screen; the first clinical trial with a severely paralyzed patient has demonstrated that it is indeed possible for a locked-in patient to make music (Miranda and Castet 2014). Real-time EEG sonification enjoys a wide range of applications, including diagnostic purposes such as detection of epileptic seizures and of different sleep states (Glen 2010; Olivan et al. 2004; Khamis et al. 2012), neuro-feedback applications (Hinterberger et al. 2013; McCreadie et al. 2013) and brain-controlled musical instruments (Arslan et al. 2005), while a special case involves converting brain signals directly into meaningful musical compositions (Miranda and Brouse 2005; Miranda et al. 2011). A number of studies also deal with emotional appraisal in the human brain in response to a wide variety of scale-free musical clips using EEG/fMRI techniques (Sammler et al. 2007; Koelsch et al. 2006; Lin et al. 2010; Lu et al. 2012; Wu et al. 2009), but none of them provides a direct correlation between the music sample used and the EEG signal generated with that music as a stimulus, although both are essentially complex time series. The main reason behind this lacuna is the disparity between the sampling frequencies of music signals and EEG signals (which are sampled at a much lower rate). A recent study (Lu et al. 2018) used a neural mass model, the Jansen–Rit model, to simulate activity in several cortical brain regions and obtained BOLD (blood-oxygen level dependent) music for healthy and epileptic patients. EEG signals are lobe specific and show many fluctuations in response to musical and other types of stimuli. The information procured therefore varies continuously throughout the period of data acquisition, and the fluctuations differ from lobe to lobe.

The role of Tanpura in Hindustani classical music

In this work, the main attempt is to devise a new methodology which looks to obtain a direct correlation between the external musical stimuli and the corresponding internal brain response using state-of-the-art non-linear tools for the characterization of bio-sensor data. For this, we chose to study the EEG response corresponding to the simplest (and yet very complex) musical stimulus, the Tanpura drone. The Tanpura (sometimes also spelled Tampura or Tambura) is an integral part of classical music in India. It is a fretless musical instrument which is very simple in construction, yet the acoustic signals generated from it are quite complex. It consists of a large gourd and a long voluminous wooden neck which act as resonance bodies, with four or five metal strings supported at the lower end by a meticulously curved bridge made of bone or ivory. The strings are plucked one after the other in cycles of a few seconds, generating a buzzing drone sound. The Tanpura drone primarily establishes the “Sa”, or the scale in which the musical piece is going to be sung or played. One complete cycle of the drone sound usually comprises Pa/Ma (middle octave), Sa (upper octave), Sa (upper octave), Sa (middle octave), played in that order. The drone signal has repetitive quasi-stable geometric forms characterized by varying complexity, with prominent undulations in the intensity of different harmonics. Thus, it will be quite interesting to study the response of the brain to a simple drone sound using different non-linear techniques. This work is essentially a continuation of our work using the MFDFA technique on drone-induced EEG signals (Maity et al. 2015) and on the hysteresis effects in the brain of raga music of contrasting emotion (Banerjee et al. 2016). Because there is a felt resonance in perception, the psycho-acoustics of the Tanpura drone may provide a unique window into the human psyche and the cognition of musicality in the human brain. Earlier, the “Fractal Analysis” technique was used to study the non-linear nature of tanpura signals (Sengupta et al. 2005). Global Descriptors (GD) have also been used to identify the time course of activation in the human brain in response to the tanpura drone (Braeunig et al. 2012). Hence, robust non-linear methods are required to assess the complex nature of tanpura signals.

Emotions and Indian classical music

In Indian classical music (ICM), each raga has a well defined structure consisting of a series of four/five or more musical notes upon which its melody is constructed. However, the way the notes are approached and rendered in musical phrases and the mood they convey are more important in defining a Raga than the notes themselves. From the time of Bharata’s Natyashastra (Ghosh 2002), there have been a number of treatises which speak in favor of the various rasas (emotional experiences) that are conveyed by the different forms of musical performances.

The aim of any dramatic performance is to evoke in the minds of the audience a particular kind of aesthetic experience, which is described as “Rasa”. The concept of “Rasa” is said to be the most important and significant contribution of the Indian mind to aesthetics.

It is only from the last two decades of the twentieth century that scientists began to understand the huge potential for systematic research that Indian classical music (ICM) has to offer in the advancement of cognitive science as well as psychological research. A number of works have tried to harvest this immense potential by objectively studying the emotional experiences attributed to the different ragas of Indian classical music (Balkwill and Thompson 1999; Chordia and Rae 2007; Wieczorkowska et al. 2010; Mathur et al. 2015). These studies have revealed that, unlike Western music pieces, the emotions evoked by Indian classical music pieces are often more ambiguous and far more subdued. Earlier, some musicologists believed that a particular emotion could be assigned to a particular Raga, but recent studies (Wieczorkowska et al. 2010) clearly revealed that different phrases of a particular Raga are capable of evoking different emotions among listeners. So, the need arises to have a direct correlation between the input raga, which actually conveys the emotional cue, and the output EEG signal, which forms the cognitive appraisal of the input sound. Multifractal Detrended Cross-Correlation Analysis (MFDXA) provides a robust non-linear methodology which gives the degree of cross-correlation between two non-stationary, non-linear complex time series, i.e. the music signal and the EEG signal. The same method can be used to capture the degree of correlation between different lobes of the brain while listening to music, which in turn gives a quantitative measure of the arousal-based effects in different locations of the human brain.

Fractals and Multifractals in EEG study

A fractal is a rough or fragmented geometrical object that can be subdivided into parts, each of which is (at least approximately) a reduced-size copy of the whole. Fractals are generally self-similar and independent of scale, and are characterized by a fractal dimension, which measures the degree of roughness, brokenness or irregularity of an object. They are created by repeating a simple process over and over in an ongoing feedback loop. A fundamental characteristic of fractal objects is that their measured metric properties, such as length or area, are a function of the scale of measurement.

Chaos has already been studied and discovered in a wide range of natural phenomena such as the weather, population cycles of animals, the structure of coastlines and trees and leaves, bubble-fields and the dripping of water, biological systems such as rates of heartbeat, and also acoustical systems such as that of woodwind multiphonics. The study of chaos is approached and modeled through the use of nonlinear dynamic systems, which are mathematical equations whose evolution is unpredictable and whose behavior can show orderly and/or chaotic conditions depending on the values of initial parameters. What has attracted the non-science community to these dynamic systems is that they display fractal properties and, thus, patterns of self-similarity on many levels (Pressing 1988). Already, the art community has employed such chaotic patterns, and many examples exist of nonlinear/fractal visual art created with the assistance of the computer (Pressing 1988; Truax 1990). Thus, non-linear dynamical modeling of the source clearly indicates the relevance of non-deterministic/chaotic approaches in understanding speech/music signals (Behrman 1999; Kumar and Mullick 1996; Sengupta et al. 2001; Bigerelle and Iost 2000; Hsü and Hsü 1990; Sengupta et al. 2005, 2010). In this context, fractal analysis of the signal, which reveals the geometry embedded in the signal, assumes significance.

In recent past, the Detrended Fluctuation Analysis (DFA) has become a very useful technique to determine the fractal scaling properties and long-range correlations in noisy, non-stationary time-series (Hardstone et al. 2012). DFA is a scaling analysis method used to quantify long-range power-law correlations in signals—with the help of a scaling exponent, α, to represent the correlation properties of a signal. In the realm of complex cognition, scaling analysis technique was used to confirm the presence of universality and scale invariance in spontaneous EEG signals (Bhattacharya 2009). In stochastic processes, chaos theory and time series analysis, DFA is a method for determining the statistical self-affinity of a signal. The obtained exponent is similar to the Hurst exponent, except that DFA may also be applied to signals whose underlying statistics (such as mean and variance) or dynamics are non-stationary (changing with time). DFA method was applied in (Karkare et al. 2009) to show that scale-free long-range correlation properties of the brain electrical activity are modulated by a task of complex visual perception, and further, such modulations also occur during the mental imagery of the same task. In case of music induced emotions, DFA was applied to analyze the scaling pattern of EEG signals in emotional music (Gao et al. 2007) and particularly Indian music (Banerjee et al. 2016). It has also been applied for patients with neurodegenerative diseases (John et al. 2018; Yuvaraj and Murugappan 2016; Bornas et al. 2015; Gao et al. 2011), to assess their emotional response, to assess the change of neural plasticity in a spinal cord injury rat model (Pu et al. 2016) and so on.
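The DFA procedure described above can be illustrated with a minimal sketch. It is not the implementation used in the cited studies: the function name `dfa_exponent`, the choice of scales and the linear detrending order are assumptions made here for illustration only.

```python
import numpy as np

def dfa_exponent(signal, scales, order=1):
    """Estimate the DFA scaling exponent alpha of a 1-D signal (illustrative sketch)."""
    x = np.asarray(signal, dtype=float)
    profile = np.cumsum(x - x.mean())            # integrated, mean-removed series
    log_s, log_f = [], []
    for s in scales:
        n_seg = len(profile) // s                # non-overlapping windows of length s
        t = np.arange(s)
        var = np.empty(n_seg)
        for v in range(n_seg):
            seg = profile[v * s:(v + 1) * s]
            trend = np.polyval(np.polyfit(t, seg, order), t)  # local polynomial trend
            var[v] = np.mean((seg - trend) ** 2)              # detrended variance
        log_s.append(np.log(s))
        log_f.append(0.5 * np.log(var.mean()))   # log of the RMS fluctuation F(s)
    alpha, _ = np.polyfit(log_s, log_f, 1)       # slope of log F(s) versus log s
    return alpha

# Usage on synthetic data (assumed test signals, not EEG recordings)
rng = np.random.default_rng(0)
noise = rng.standard_normal(4096)
scales = [16, 32, 64, 128, 256]
alpha_noise = dfa_exponent(noise, scales)            # uncorrelated noise
alpha_walk = dfa_exponent(np.cumsum(noise), scales)  # strongly persistent series
```

For uncorrelated noise the exponent is expected to lie near 0.5, while an integrated (random-walk) series should give a clearly larger value.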

It is known that naturally evolving geometries and phenomena are rarely characterized by a single scaling ratio and therefore different parts of a system may be scaling differently. That is, the self similarity pattern is not uniform over the whole system. Such a system is better characterized as ‘multifractal’. A multifractal can be loosely thought of as an interwoven set constructed from sub-sets with different local fractal dimensions. Music too, has non-uniform property in its movement. It is therefore necessary to re-investigate the musical structure from the viewpoint of the multifractal theory. Multifractal Detrended Fluctuation Analysis (MFDFA) (Kantelhardt et al. 2002) technique analyzes the musical signal in different scales and gives a multifractal spectral width which is the measure of complexity of the signal. MFDFA has been applied successfully to study multifractal scaling behavior of various non-stationary time series (Sadegh Movahed et al. 2006; Telesca et al. 2004; Kantelhardt et al. 2003) as well as in detection or prognosis of diseases (Dutta et al. 2013, 2014; Figliola et al. 2007). EEG signals are essentially multifractals as they consist of segments with large variations as well as segments with very small variations, hence when applied to the alpha EEG rhythms, the multifractal spectral width will be an indicator of emotional arousal corresponding to particular clip (Banerjee et al. 2016; Maity et al. 2015).

Correlation in general determines the degree of similarity between two signals. In signal processing, cross-correlation is a measure of the similarity of two series as a function of the lag of one relative to the other. A robust technique called Multifractal Detrended Cross-Correlation Analysis (MFDXA) (Zhou 2008) has been used to analyze the multifractal behavior in the power-law cross-correlations between any two non-linear time series (in this case music and sonified EEG signals). With this technique, all segments of the music clips and the sonified EEG signals were analyzed to obtain a cross-correlation coefficient (γx), which gives the degree of correlation between these two categories of signals. For uncorrelated data, γx has a value of 1, and the lower the value of γx, the more correlated the data are (Podobnik and Stanley 2008). Thus a negative value of γx signifies that the music and bio-signals have a very high degree of correlation. The strength of the MFDXA method has been demonstrated for various phenomena using one- and two-dimensional binomial measures, multifractal random walks (MRWs) and financial markets (Ghosh et al. 2015; Wang et al. 2012; Wang et al. 2013). The MFDXA technique has also been successfully applied to detect the prognosis or onset of epileptic seizures (Ghosh et al. 2014). We have also used the MFDXA technique to assess the degree of correlation between pairs of EEG electrodes in various experimental conditions (Ghosh et al. 2018b). In this study, two experiments are performed with the sole objective of quantifying how the different lobes of the brain are correlated with each other while processing musical stimuli and how they are correlated with the input musical stimuli. In the first experiment a tanpura drone is used as the musical stimulus, while in the second experiment we have used a pair of ragas of contrasting emotion, Chayanat and Darbari Kanada, as in Banerjee et al. (2016), and evaluated the EEG cross-correlation coefficients corresponding to them. With this novel technique, a direct correlation is possible between the audio signals and the corresponding biosensor data. We intend to develop a rigorous methodology for emotion categorization using musical stimuli.
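To make the quantity γx more concrete, the following is a minimal sketch of an MFDXA-style computation. Several points are assumptions rather than details given in this paper: the function names are hypothetical, only forward non-overlapping segments are used, detrending is linear, and γx is obtained from the q = 2 scaling exponent λ through the relation γx = 2 − 2λ(q = 2), which follows the cited literature and is consistent with γx = 1 for uncorrelated data.

```python
import numpy as np

def mfdxa_lambda(x, y, scales, q=2.0, order=1):
    """Scaling exponent lambda(q) of the detrended cross-fluctuation (sketch)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = min(len(x), len(y))
    px = np.cumsum(x[:n] - x[:n].mean())        # profile of first series
    py = np.cumsum(y[:n] - y[:n].mean())        # profile of second series
    log_s, log_f = [], []
    for s in scales:
        n_seg = n // s
        t = np.arange(s)
        f_v = np.empty(n_seg)
        for v in range(n_seg):
            sx = px[v * s:(v + 1) * s]
            sy = py[v * s:(v + 1) * s]
            rx = sx - np.polyval(np.polyfit(t, sx, order), t)  # detrended residuals
            ry = sy - np.polyval(np.polyfit(t, sy, order), t)
            f_v[v] = np.mean(np.abs(rx) * np.abs(ry))          # local cross-fluctuation
        if q == 0:
            fq = np.exp(0.5 * np.mean(np.log(f_v)))            # logarithmic average for q = 0
        else:
            fq = np.mean(f_v ** (q / 2.0)) ** (1.0 / q)        # q-th order fluctuation function
        log_s.append(np.log(s))
        log_f.append(np.log(fq))
    return np.polyfit(log_s, log_f, 1)[0]       # slope of log F_q(s) versus log s

def gamma_x(lambda_q2):
    """Cross-correlation coefficient from lambda(q=2); assumed relation from the cited literature."""
    return 2.0 - 2.0 * lambda_q2

# Usage on synthetic data (assumed test signals, not EEG or music)
rng = np.random.default_rng(1)
w = rng.standard_normal(4096)
scales = [16, 32, 64, 128, 256]
lam_noise = mfdxa_lambda(w, w, scales)
lam_walk = mfdxa_lambda(np.cumsum(w), np.cumsum(w), scales)
```

In this sketch, white noise gives λ close to 0.5 and hence γx close to 1, whereas a strongly persistent pair of series gives a larger λ and a lower (possibly negative) γx.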

Overview of the present work

In this work, we took EEG data of 10 naive participants while they listened to a 2 min tanpura drone clip, which was preceded by a 2 min resting period. In another experiment, we took EEG data for the same set of participants using the same pair of ragas of contrasting emotion, Chayanat and Darbari Kanada, as in one of our previous works (Banerjee et al. 2016), which dealt with the evidence of neural hysteresis (Ghosh et al. 2018a). The main constraint in establishing a direct correlation between EEG signals and the stimulus sound signal is the disparity in their sampling frequencies: while an EEG signal is generally sampled at up to 512 samples/s (in our case 256 samples/s), a normal recorded audio signal is sampled at 44100 samples/s. Hence the need arises to up-sample the EEG signal to match the sampling frequency of the audio signal so that the correlation between the two can be established. This process is, in essence, sonification (or audification), and we propose a novel algorithm in this work to sonify EEG signals and then compare them with the source sound signals. Human response (psychological) data were collected initially to standardize the emotional appraisal corresponding to the acoustic clips chosen for this study, followed by EEG recordings using the same musical clips as stimuli. Five frontal (F3, F4, Fp1, Fp2 and Fz) and temporal (T3/T4) electrodes were selected for our study, corresponding to the auditory and cognitive appraisals associated with it. The alpha frequency wave is extracted from the entire EEG signal using the Wavelet Transform technique as elaborated in Banerjee et al. (2016), as alpha waves are mainly associated with emotional activities corresponding to music clips (Sammler et al. 2007; Maity et al. 2015; Babiloni et al. 2012). Next, the MFDXA technique has been applied to assess the degree of correlation between the two non-linear, non-stationary signals (in this case the output EEG signals and the input acoustic musical signals). The output of MFDXA is γx (the cross-correlation coefficient), which determines the degree of cross-correlation between the two signals. For the “auditory control/rest” state, we determined the cross-correlation coefficient using the “auditory control/rest” EEG data as one input and simulated “white noise” as the other input. We have provided a comparative analysis of the variation of correlation between the “rest” state EEG and the “music induced” EEG signals. Furthermore, the degree of cross-correlation between different lobes of the brain has also been computed for the different experimental conditions. The results clearly indicate a significant rise in correlation during the music-induced state compared to the auditory control condition in the case of the tanpura drone. In the case of emotional music, the values of γx show distinct evidence in support of emotional quantification in specific electrodes. This experiment offers unique insights into the arousal-based responses occurring simultaneously in different portions of the brain under the influence of musical stimuli. This novel study can be very useful in the domain of auditory neuroscience when it comes to quantifying the emotional state of a listener with respect to a particular stimulus.
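The sampling-rate mismatch discussed above can be illustrated with a short sketch that brings a 256 samples/s series onto a 44100 samples/s time grid. This is only plain linear interpolation, used here as a stand-in; it is not the sonification algorithm proposed in this work, and the function name `upsample_linear` is hypothetical.

```python
import numpy as np

def upsample_linear(eeg, fs_in=256, fs_out=44100):
    """Resample a 1-D series from fs_in to fs_out by linear interpolation (sketch)."""
    eeg = np.asarray(eeg, dtype=float)
    n_out = int(round(len(eeg) * fs_out / fs_in))   # number of samples at the audio rate
    t_in = np.arange(len(eeg)) / fs_in              # original sample times in seconds
    t_out = np.arange(n_out) / fs_out               # audio-rate sample times in seconds
    return np.interp(t_out, t_in, eeg)              # values beyond the last sample are held

# Usage: 2 s of a synthetic 10 Hz oscillation sampled at 256 Hz (assumed test signal)
x = np.sin(2 * np.pi * 10 * np.arange(512) / 256)
y = upsample_linear(x)
```

Note that matching the sampling rate in this way leaves the spectral content unchanged, so an alpha-band signal remains below the audible range; this is one reason an additional mapping or modulation step is needed before the signal can be heard.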

Materials and methods

Subjects summary

For the human response data, 50 participants (M = 33, F = 17) were asked to rate the music clips in a sheet on the basis of what emotion they felt for each clip. From the 50 listeners, 10 musically untrained right handed adults (6 male and 4 female) voluntarily participated in the EEG study. Their ages were between 19 and 25 years (SD = 2.21 years). None of the participants reported any history of neurological or psychiatric diseases, nor were they receiving any psychiatric medicines or using a hearing aid. None of them had any formal music training and hence can be considered as naïve listeners. Informed consent was obtained from each subject according to the ethical guidelines of the Ethical Committee of Jadavpur University. All experiments were performed at the Sir C.V. Raman Centre for Physics and Music, Jadavpur University, Kolkata.

Experimental details

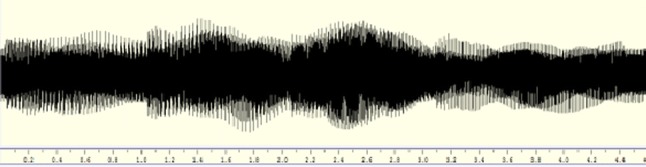

The tanpura stimulus for our experiment was the sound generated using the software ‘Your Tanpura’ in C# pitch and in the Pa (middle octave), Sa (middle octave), Sa (middle octave), Sa (lower octave) cycle/format. From the complete recorded signal, a segment of about 2 min was cut out at a zero crossing using the open source software toolbox Wavesurfer (Sjölander and Beskow 2009). Variations in timbre were avoided, as the same signal was given to all the participants. Fig. 1 depicts a 2 min Tanpura drone signal that was given as an input stimulus to all the informants.

Fig. 1.

Waveform of 1 cycle of tanpura drone signal

In the second experiment, the pair of ragas chosen for our analysis was “Chayanat” (romantic/joy) and “Darbari Kanada” (sad/pathos). Each of these sound signals was digitized at a sample rate of 44.1 kHz, with 16 bit resolution and in a mono channel. From the complete rendition of each raga, a segment of about 2 min was cut out for analysis. Experienced musicians helped identify the emotional phrases in the music signal along with their time intervals, based on their feelings. A sound system (Logitech R_Z-4 speakers) with high S/N ratio was used in the measurement room for giving music input to the subjects. For the listening test, each participant was asked to mark responses on a template as presented in Table 1. Here Clip 1 refers to the tanpura drone, Clip 2 to Chayanat and Clip 3 to Darbari Kanada. The subjects were asked to mark any of the options they found suitable, even if that meant more than one option.

Table 1.

Response sheet to identify the emotion corresponding to each clip

| | Joy | Anxiety | Sorrow | Calm |
|---|---|---|---|---|
| Clip 1 | | | | |
| Clip 2 | | | | |
| Clip 3 | | | | |

Finally, the EEG experiment was conducted in the afternoon (around 2 PM) in a room with the subjects sitting relaxed in a comfortable chair with their eyes closed during the experiment.

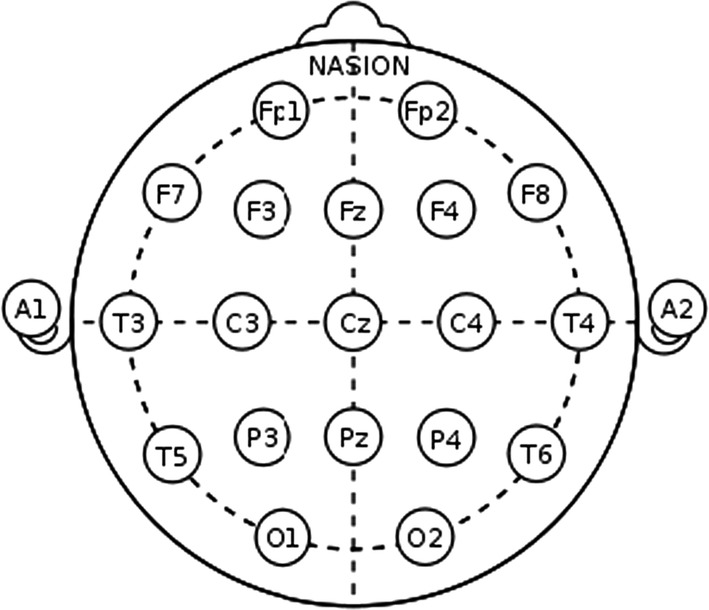

Experimental protocol

The EEG experiments were conducted in the afternoon (around 2 PM) in an air-conditioned room, with the subjects sitting in a comfortable chair under normal diet conditions. All experiments were performed as per the guidelines of the Institutional Ethics Committee of Jadavpur University. All the subjects were fitted with an EEG recording cap with 19 electrodes (Ag/AgCl sintered ring electrodes) placed according to the international 10/20 system (Fig. 2). Impedances were checked to be below 5 kΩ. The EEG recording system (Recorders and Medicare Systems) was operated at 256 samples/s, recording on customized RMS software. Raw EEG signals were band-pass filtered using high-pass and low-pass filters with cut-off frequencies of 0.5 Hz and 35 Hz, respectively. The electrical interference noise (50 Hz) was eliminated using a notch filter. Muscle artifacts were removed by selecting the EMG filter. The ear electrodes A1 and A2, linked together, were used as the reference, and the same reference was used for all channels. The forehead electrode FPz was used as the ground electrode. During recording, each subject was seated comfortably in a relaxed condition in a shielded measurement cabin.

Fig. 2.

The position of electrodes as per 10-20 system

After initialization, a 6 min recording period was started, and the following protocol was followed:

1. 2 min auditory control (white noise)
2. 2 min with tanpura drone
3. 2 min rest (after music)

In the second experiment for emotional categorization, the following protocol was observed:

1. 2 min auditory control
2. 2 min with music (Chayanat)
3. 2 min auditory control
4. Sequence 2–3 was repeated with music (Darbari Kanada)

Between the applications of the stimuli, the subjects were asked to rate the clips on the basis of emotional appraisal. Markers were set at the start, at signal onset/offset, and at the end of the recording. We divided each experimental condition into four windows of 30 s each and calculated the cross-correlation coefficient for each window for the frontal (F3, F4, Fp1, Fp2 and Fz) and temporal (T3/T4) electrodes.
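The windowing step can be sketched as follows, assuming a single-channel recording stored as a one-dimensional array sampled at 256 samples/s; the function name `split_windows` is hypothetical and the array used below is placeholder data.

```python
import numpy as np

def split_windows(channel, fs=256, window_s=30):
    """Split a 1-D recording into consecutive, non-overlapping windows (sketch)."""
    channel = np.asarray(channel)
    step = fs * window_s                       # samples per window
    n_win = len(channel) // step               # any incomplete tail is discarded
    return channel[:n_win * step].reshape(n_win, step)

# Usage: a 2 min condition at 256 samples/s yields four 30 s windows
condition = np.arange(2 * 60 * 256)            # placeholder sample indices, not EEG data
windows = split_windows(condition)
```

Each row of `windows` can then be analyzed separately, so that one coefficient per window and electrode is obtained for every experimental condition.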

The listening test experiment was performed following the same protocol with each subject being asked to mark the emotional appraisal corresponding to each music clip.

Methodology

Pre-processing of EEG signals

We obtained artifact-free EEG data for all the electrodes using the EMD technique as in Maity et al. (2015) and used these data for further analysis and classification of acoustic-stimuli-induced EEG features. The amplitude envelope of the alpha (8–13 Hz) frequency range was obtained using the wavelet transform (WT) technique (Akin et al. 2001). Data were extracted for the frontal (F3, F4, Fp1, Fp2 and Fz) and temporal (T3/T4) electrodes for each experimental condition, according to the time periods given in the experimental protocol.

Sonification of EEG signals

Sonification refers to the use of non-speech audio for data perception as a complement to data visualization. With acoustic sonification, a sudden or gradual rise or drop in the data can be perceived as a change in the amplitude, pitch or tempo of the audio file generated from the data. We attempt a similar venture with bio-medical signals, namely electroencephalography (EEG) signals. An EEG signal, which is essentially time-series data, is transformed through signal processing techniques into an audio waveform; this provides a means by which large datasets can be explored by listening to the data itself.

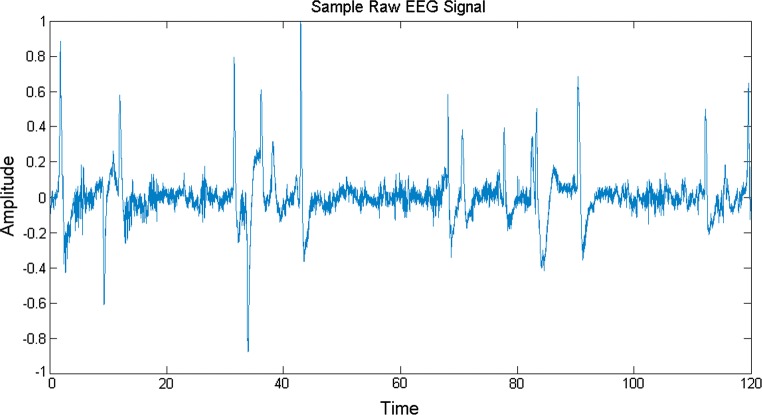

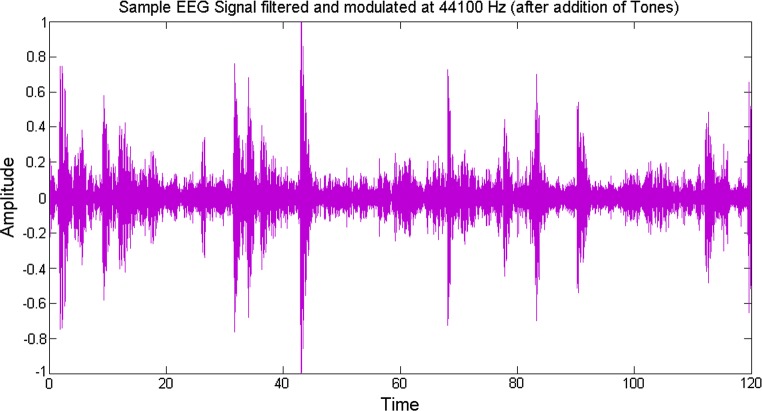

EEG signals show considerable variation and are essentially lobe specific. Hence, the information varies constantly throughout a period of data acquisition, and the fluctuations are unique to each lobe. The sampling rate of the EEG signal used in this experiment is 256 Hz. We have taken music-induced EEG signals in this work and aim to obtain a direct comparison between these signals and the source acoustic music signals which caused arousal in the brain dynamics of different lobes. A sample music-induced raw EEG signal is shown in Fig. 3.

Fig. 3.

A 2 min raw EEG signal

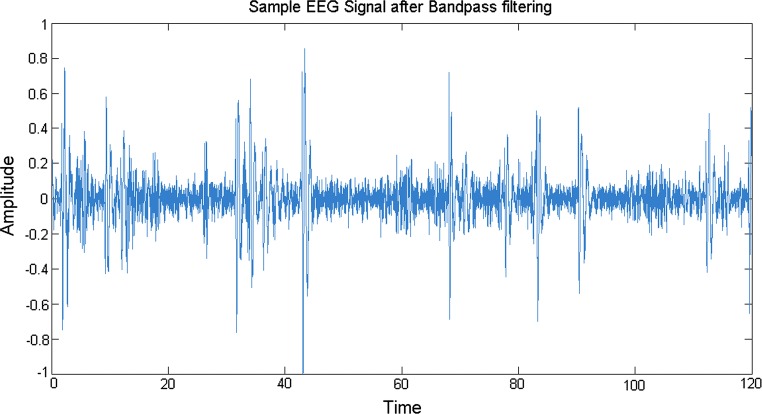

We are interested in finding the arousals in different lobes and in determining the similarities or differences in the behaviour of different lobes under the effect of emotional music stimuli. In particular, an attempt is made to sonify the alpha rhythms in an excerpt of an EEG recording using modulation techniques to produce an audible signal. Alpha rhythms are characterized by their frequency range of 8 to 13 Hz and usually appear when the eyes are closed and the subject is relaxed. To obtain the signal in the frequency range of interest, band-pass filtering is applied to remove unwanted frequencies. A representative plot of the sample EEG signal after band-pass filtering for the alpha band is shown in Fig. 4.

Fig. 4.

A 2 min alpha frequency EEG signal after bandpass filtering
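As an illustration of the band-pass step, the sketch below isolates the 8–13 Hz band by zeroing all Fourier components outside it. The filter design actually used for this step is not stated here, so the FFT mask is only a stand-in; the function name `bandpass_fft` and the synthetic test signal are assumptions made for this example.

```python
import numpy as np


def bandpass_fft(signal, fs, low_hz, high_hz):
    """Zero every FFT bin outside [low_hz, high_hz] and transform back."""
    x = np.asarray(signal, dtype=float)
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    keep = (freqs >= low_hz) & (freqs <= high_hz)
    spectrum[~keep] = 0.0
    return np.fft.irfft(spectrum, n=len(x))


fs = 256                                   # EEG sampling rate (samples/s)
t = np.arange(4 * fs) / fs                 # 4 s of synthetic data
alpha = np.sin(2 * np.pi * 10 * t)         # 10 Hz component inside the alpha band
mixed = alpha + 0.8 * np.sin(2 * np.pi * 3 * t) + 0.5 * np.sin(2 * np.pi * 30 * t)

filtered = bandpass_fft(mixed, fs, 8.0, 13.0)
print(float(np.max(np.abs(filtered - alpha))))  # residual after removing 3 Hz and 30 Hz
```

An FFT mask is convenient for a short offline excerpt; for long recordings a conventional IIR or FIR band-pass filter would be the more usual choice.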

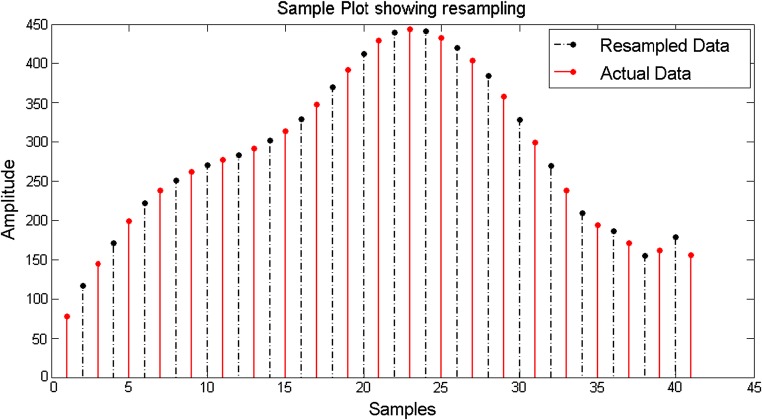

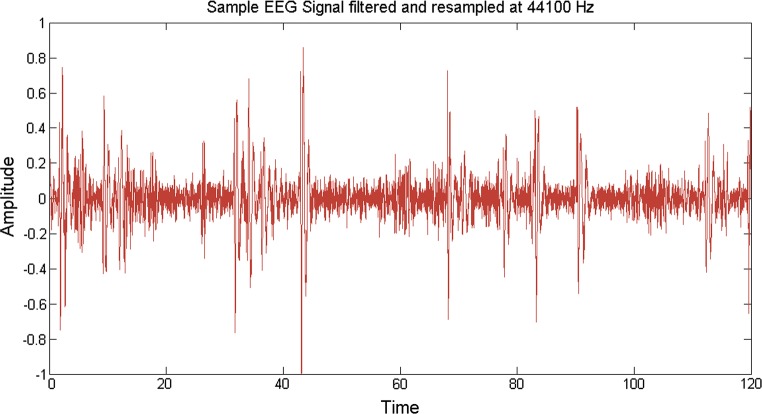

Till date, no direct correlation has been established between the source acoustic signals and the output EEG signals, because EEG signals are sampled at a rate (generally of the order of 256 Hz) much lower than that of music signals (of the order of 44.1 kHz), and hence the sampling frequencies do not match. Keeping this problem in mind, we resampled (upsampling followed by interpolation) the band-pass filtered EEG data to the higher sampling frequency of 44.1 kHz. This is done using the built-in Matlab function resample, which applies an anti-aliasing low-pass filter and compensates for the delay introduced by that filter. Upsampling is done by inserting zeros between the original samples. This creates imaging artifacts, which are removed by low-pass filtering; in the time domain, the low-pass filter interpolates the inserted zeros. The resampling thus converts an EEG signal sampled at 256 Hz into an EEG signal sampled at 44.1 kHz. For illustration, a sample plot is shown for a portion of the band-pass filtered EEG data resampled from 256 Hz to 512 Hz (Fig. 5); 512 Hz is chosen only for clarity of representation, since a plot at the full rate would be too cluttered. The plot shows that the envelope of the signal is maintained while new samples are inserted, thereby increasing the sampling rate. The solid red lines indicate the actual signal, while the dash-dotted black lines indicate the resampled data inserted between two consecutive data samples. In the actual methodology, the same procedure is applied to upsample the EEG signal to 44.1 kHz (shown in Fig. 6).

Fig. 5.

Sample plot showing resampling of EEG data

Fig. 6.

Sample EEG signal filtered and resampled at 44100 Hz
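The zero-insertion and low-pass interpolation steps of the resampling can be sketched in a few lines. The example below doubles the sampling rate (256 Hz to 512 Hz, as in Fig. 5) with a Hamming-windowed sinc filter. It is an illustrative stand-in written in Python, not the Matlab `resample` routine itself; the name `upsample_by_integer` and the filter length are assumptions. Going from 256 Hz to 44.1 kHz requires the rational factor 11025/64 (zero insertion by 11025 followed by decimation by 64), which this integer-only sketch does not cover.

```python
import numpy as np


def upsample_by_integer(signal, factor, half_width=32):
    """Raise the sampling rate by an integer factor.

    half_width is the filter half-length in original samples
    (an illustrative choice).
    """
    x = np.asarray(signal, dtype=float)
    # Step 1: insert (factor - 1) zeros between consecutive samples.
    stuffed = np.zeros(len(x) * factor)
    stuffed[::factor] = x
    # Step 2: Hamming-windowed sinc low-pass with cut-off at the original
    # Nyquist frequency; its gain of `factor` offsets the inserted zeros.
    taps = np.arange(-half_width * factor, half_width * factor + 1)
    kernel = np.sinc(taps / factor) * np.hamming(len(taps))
    # Step 3: the kernel is symmetric with odd length, so a "same"
    # convolution keeps the output aligned with the input (no net delay).
    return np.convolve(stuffed, kernel, mode="same")


fs_in, factor = 256, 2
t_in = np.arange(fs_in) / fs_in              # 1 s at 256 Hz
eeg_like = np.sin(2 * np.pi * 10 * t_in)     # synthetic 10 Hz trace
upsampled = upsample_by_integer(eeg_like, factor)

t_out = np.arange(len(upsampled)) / (fs_in * factor)
reference = np.sin(2 * np.pi * 10 * t_out)
interior = slice(100, -100)                  # skip filter edge transients
print(len(upsampled), float(np.max(np.abs(upsampled[interior] - reference[interior]))))
```

The original samples are kept unchanged at every second output position, and the low-pass filter fills in the positions that held zeros, which is the behaviour described for Fig. 5.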

To add an aesthetic sense, the resampled signals are modulated into the auditory range by assigning a different tone to each electrode, so that when they are played together a sudden increase or decrease in the data can be perceived as a change in the amplitude of the signal. For this, suitable frequencies are chosen to produce a sound which is harmonious to the ear. Now that we have a resampled music-induced EEG signal with the same sampling frequency as the music signal, an audio file is written out for the obtained signal (Fig. 7).

Fig. 7.

Sample EEG signal filtered and modulated at 44100 Hz (after addition of tones)
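One possible way to realise the tone assignment and the audio file output is sketched below. The exact modulation scheme and tone frequencies are not specified in the text, so plain amplitude modulation of a sine carrier is assumed here; the carrier frequencies, the function names `modulate_onto_tone` and `write_wav`, and the synthetic input traces are all hypothetical.

```python
import io
import wave

import numpy as np

AUDIO_FS = 44100  # audio sampling rate in Hz, matching the music clips


def modulate_onto_tone(eeg, carrier_hz, fs=AUDIO_FS, depth=0.8):
    """Amplitude-modulate a sine carrier with a resampled EEG trace."""
    x = np.asarray(eeg, dtype=float)
    peak = np.max(np.abs(x))
    normalised = x / peak if peak > 0 else x
    t = np.arange(len(x)) / fs
    carrier = np.sin(2.0 * np.pi * carrier_hz * t)
    # With depth < 1 the factor (1 + depth * m) stays positive, so the EEG
    # shapes the loudness of the tone; dividing by (1 + depth) keeps |y| <= 1.
    return (1.0 + depth * normalised) * carrier / (1.0 + depth)


def write_wav(target, samples, fs=AUDIO_FS):
    """Write mono samples in [-1, 1] as 16-bit PCM to a path or file object."""
    pcm = (np.clip(samples, -1.0, 1.0) * 32767).astype("<i2")
    with wave.open(target, "wb") as wav_file:
        wav_file.setnchannels(1)
        wav_file.setsampwidth(2)
        wav_file.setframerate(fs)
        wav_file.writeframes(pcm.tobytes())


# Synthetic 10 Hz traces stand in for two resampled alpha-band channels.
t = np.arange(AUDIO_FS) / AUDIO_FS
channels = {"F3": np.sin(2 * np.pi * 10 * t), "F4": np.cos(2 * np.pi * 10 * t)}
carriers_hz = {"F3": 220.0, "F4": 330.0}  # hypothetical tone assignment

mix = np.mean(
    [modulate_onto_tone(channels[name], carriers_hz[name]) for name in channels],
    axis=0,
)
buffer = io.BytesIO()   # a file name such as "sonified.wav" works as well
write_wav(buffer, mix)
```

Giving each electrode its own carrier keeps the channels distinguishable by pitch when they are mixed, while the EEG amplitude is heard as a change in loudness.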

Additionally, we can now use cross-correlation techniques to establish any relation between these signals. We used a robust non-linear cross correlation technique called Multi Fractal Detrended Cross Correlation Analysis (MFDXA) (Zhou 2008) in this case, taking the tanpura drone/music signal as the first input and a music induced resampled EEG signal (electrode wise) as the second input.

Multifractal Detrended Cross-Correlation Analysis (MFDXA)

In 2008, Zhou proposed multifractal detrended cross-correlation analysis (MFDXA), an offshoot of the generalized MFDFA method which is based on the detrended covariance and investigates the multifractal behavior between two time series or higher-dimensional quantities. To study the degree of correlation between two time series, linear cross-correlation analysis is normally used, but it assumes that both time series are stationary. Real time series are hardly ever stationary, and to work around this, as a rule, short intervals are considered for analysis. To deal with non-stationarity, a method called detrended cross-correlation analysis (DXA) was reported by Podobnik and Stanley (2008). To unveil the multifractal nature of the music and sonified EEG signals, multifractal detrended cross-correlation analysis (MF-DXA) is applied here to study the degree of correlation between the simultaneously recorded EEG signals.

Here, we compute the profiles of the underlying data series x(i) and y(i) as

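$$X(i) \equiv \sum_{k=1}^{i}\left[x(k) - \langle x \rangle\right], \qquad Y(i) \equiv \sum_{k=1}^{i}\left[y(k) - \langle y \rangle\right], \qquad i = 1, \ldots, N \tag{1}$$

where ⟨x⟩ and ⟨y⟩ denote the mean values of the two series.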

The next steps proceed in the same way as the MFDFA method, with the only difference being we have to take 2Ns bins here. The qth order detrended covariance Fq(s) is obtained after averaging over 2Ns bins.

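Writing F(s, v) for the detrended covariance of the two profiles in bin v at scale s, this is

$$F_q(s) = \left\{ \frac{1}{2N_s} \sum_{v=1}^{2N_s} \left[F(s,v)\right]^{q/2} \right\}^{1/q} \tag{2}$$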

where q is an index which can take any real value except zero, because in that case the factor 1/q diverges. The procedure is repeated for different values of s; Fq(s) increases with s. If the series are long-range power-law cross-correlated, then Fq(s) will show power-law behavior

$$F_q(s) \sim s^{\lambda(q)}.$$

If such a scaling exists, ln Fq will depend linearly on ln s, with λ(q) as the slope. The scaling exponent λ(q) represents the degree of cross-correlation between the two time series and in general depends on q. The value of λ(0) cannot be obtained directly because the factor 1/q diverges at q = 0; Fq therefore cannot be obtained by the normal averaging procedure, and a logarithmic averaging procedure is applied instead:

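$$F_0(s) \equiv \exp\left\{ \frac{1}{4N_s} \sum_{v=1}^{2N_s} \ln\left[F(s,v)\right] \right\} \sim s^{\lambda(0)} \tag{3}$$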

For q = 2 the method reduces to standard DCCA. If the scaling exponent λ(q) is independent of q, the cross-correlations between the two time series x(i) and y(i) are monofractal; if λ(q) depends on q, they are multifractal. Furthermore, for positive q, λ(q) describes the scaling behavior of the segments with large fluctuations, and for negative q, that of the segments with small fluctuations. The value λ(q) = 0.5 denotes the absence of cross-correlation. λ(q) > 0.5 indicates persistent long-range cross-correlations, where a large value in one variable is likely to be followed by a large value in the other variable, while λ(q) < 0.5 indicates anti-persistent cross-correlations, where a large value in one variable is likely to be followed by a small value in the other, and vice versa (Movahed and Hermanis 2008).
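The procedure described in this section can be condensed into a short numerical sketch. It assumes linear detrending in each bin and takes the absolute value of the per-bin detrended covariance so that fractional powers stay real; both choices, as well as the function names, the scales and the synthetic test series, are assumptions of this example rather than details given in the text.

```python
import numpy as np


def detrended_residuals(profile, s, order=1):
    """Cut a profile into 2*Ns bins of length s (Ns taken from each end)
    and subtract a least-squares polynomial trend from every bin."""
    n = len(profile)
    ns = n // s
    forward = profile[: ns * s].reshape(ns, s)
    backward = profile[n - ns * s:].reshape(ns, s)
    bins = np.vstack([forward, backward])                 # shape (2*Ns, s)
    design = np.vander(np.arange(s, dtype=float), order + 1)
    coeffs = np.linalg.lstsq(design, bins.T, rcond=None)[0]
    return bins - (design @ coeffs).T


def mfdxa_exponents(x, y, scales, q_values, order=1):
    """Estimate lambda(q) as the slope of ln F_q(s) against ln s."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = min(len(x), len(y))
    # Profiles: cumulative sums of the mean-subtracted series.
    profile_x = np.cumsum(x[:n] - x[:n].mean())
    profile_y = np.cumsum(y[:n] - y[:n].mean())
    log_fq = np.empty((len(q_values), len(scales)))
    for j, s in enumerate(scales):
        rx = detrended_residuals(profile_x, s, order)
        ry = detrended_residuals(profile_y, s, order)
        # Detrended covariance per bin; the absolute value is an assumption.
        f_sv = np.abs(np.mean(rx * ry, axis=1))
        f_sv = f_sv[f_sv > 0]
        for i, q in enumerate(q_values):
            if q == 0:
                # Logarithmic averaging for q = 0.
                log_fq[i, j] = 0.5 * np.mean(np.log(f_sv))
            else:
                log_fq[i, j] = np.log(np.mean(f_sv ** (q / 2.0))) / q
    log_s = np.log(np.asarray(scales, dtype=float))
    return np.array([np.polyfit(log_s, row, 1)[0] for row in log_fq])


# Two synthetic series sharing a white-noise component (placeholder data).
rng = np.random.default_rng(42)
n_samples = 8192
common = rng.standard_normal(n_samples)
x = common + 0.5 * rng.standard_normal(n_samples)
y = common + 0.5 * rng.standard_normal(n_samples)

scales = [16, 32, 64, 128, 256]
q_values = np.arange(-5, 6)          # q from -5 to +5 in steps of 1
lam = mfdxa_exponents(x, y, scales, q_values)
lam_q2 = lam[list(q_values).index(2)]
print(round(float(lam_q2), 2))
```

The synthetic series have no long-range memory, so λ(2) is expected to lie close to 0.5; values clearly above 0.5 would indicate persistent long-range cross-correlation.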

Zhou (2008) found that for two time series constructed by binomial measure from p-model, there exists the following relationship:

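$$\lambda(q=2) \approx \frac{h_x(q=2) + h_y(q=2)}{2} \tag{4}$$

where h_x and h_y are the generalized Hurst exponents of the two individual series.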

Podobnik and Stanley have studied this relation when q = 2 for monofractal Autoregressive Fractional Moving Average (ARFIMA) signals and EEG time series (Podobnik and Stanley 2008).

In the case of two time series generated by two uncoupled ARFIMA processes, each series is autocorrelated, but there is no power-law cross-correlation with a specific exponent (Movahed and Hermanis 2008). The auto-correlation function is given by

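$$C(\tau) = \left\langle \left[x(i+\tau) - \langle x \rangle\right]\left[x(i) - \langle x \rangle\right] \right\rangle \sim \tau^{-\gamma} \tag{5}$$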

The cross-correlation function can be written as

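$$C_x(\tau) = \left\langle \left[x(i+\tau) - \langle x \rangle\right]\left[y(i) - \langle y \rangle\right] \right\rangle \sim \tau^{-\gamma_x} \tag{6}$$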

where γ and γx are the auto-correlation and cross-correlation exponents, respectively. Owing to the non-stationarities and trends superimposed on the collected data, direct calculation of these exponents is usually not recommended; rather, the reliable method to calculate the auto-correlation exponent is the DFA method, namely

$$\gamma = 2 - 2h(q=2),$$

with h(q = 2) the scaling (Hurst) exponent obtained from DFA.

Recently, Podobnik et al. (2011) demonstrated the relation between the cross-correlation exponent γx and the scaling exponent λ(q) derived by Eq. (4), according to

$$\gamma_x = 2 - 2\lambda(q=2).$$

For uncorrelated data, γx has a value of 1, and the lower the values of γ and γx, the more correlated the data. In general, λ(q) depends on q, indicating the presence of multifractality. In other words, we want to examine how two non-linear signals (in this case music and EEG) are cross-correlated at various time scales.
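As a quick numerical check of how γx relates to the scaling exponent, the sketch below assumes the commonly quoted form γx = 2 − 2λ(q = 2) of the Podobnik et al. (2011) relation. The function names are hypothetical, and the second call simply inverts the relation for one γx value of the size reported later in this paper.

```python
def cross_correlation_exponent(lambda_q2):
    """Cross-correlation exponent from the q = 2 scaling exponent,
    assuming gamma_x = 2 - 2 * lambda(q = 2)."""
    return 2.0 - 2.0 * lambda_q2


def scaling_exponent_from_gamma(gamma_x):
    """Inverse relation: lambda(q = 2) implied by a given gamma_x."""
    return (2.0 - gamma_x) / 2.0


print(cross_correlation_exponent(0.5))      # 1.0, the uncorrelated case
print(scaling_exponent_from_gamma(-1.35))   # lambda(2) implied by gamma_x = -1.35
```

Under this relation, λ(2) = 0.5 reproduces the uncorrelated value γx = 1, and negative γx values correspond to λ(2) greater than 1.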

Results and discussion

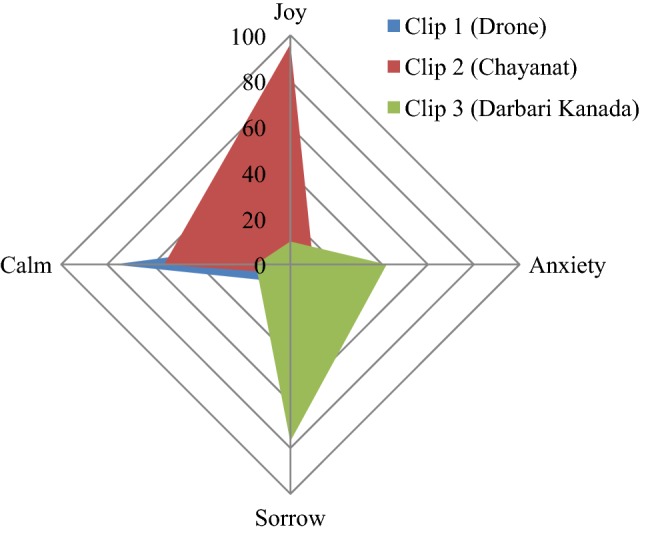

The emotional responses corresponding to each music clip have been given in Table 2 which gives the percentage response obtained for each particular emotion, while Fig. 8 displays the emotional appraisal in a radar plot.

Table 2.

Percentage response of 50 listeners to music clips

| Clip | Joy | Anxiety | Sorrow | Calm |
|---|---|---|---|---|
| Clip 1 (Drone) | 10 | 5 | 8 | 77 |
| Clip 2 (Chayanat) | 96 | 10 | 4 | 55 |
| Clip 3 (Darbari Kanada) | 10 | 42 | 77 | 15 |

Fig. 8.

Emotional plot for the three clips

Thus it is seen that the tanpura drone is mainly associated with a serene/calm emotion, while the two raga clips in general portray contrasting emotions. The associated internal neuro-dynamics are assessed in the following EEG study.

For preliminary analysis, we chose five electrodes from the frontal and fronto-polar regions, viz. F3, F4, Fp1, Fp2 and Fz, as the frontal lobe has long been associated with the cognition of music and other higher-order cognitive skills. In addition, two electrodes from the temporal lobe, viz. T3 and T4, were analyzed, since the auditory cortex is the primary processing centre for musical stimuli.

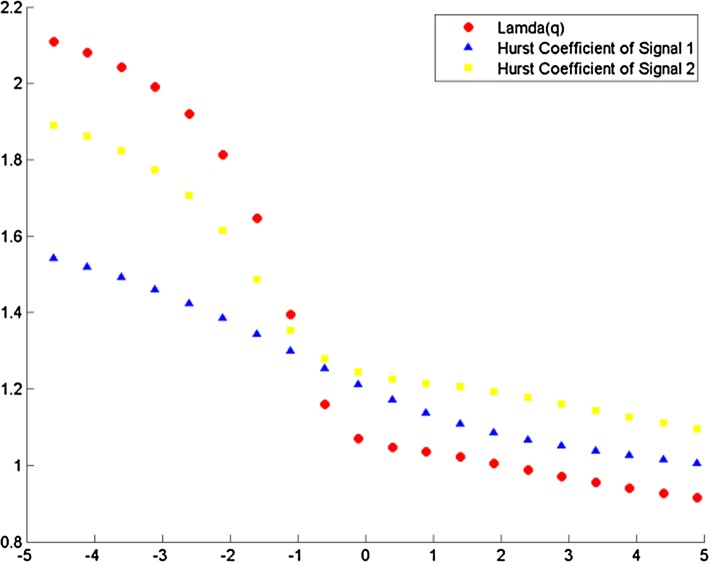

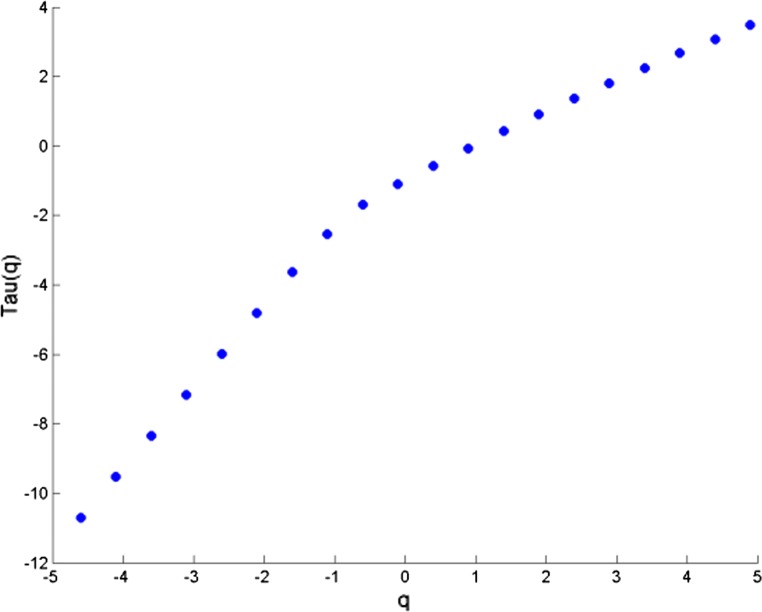

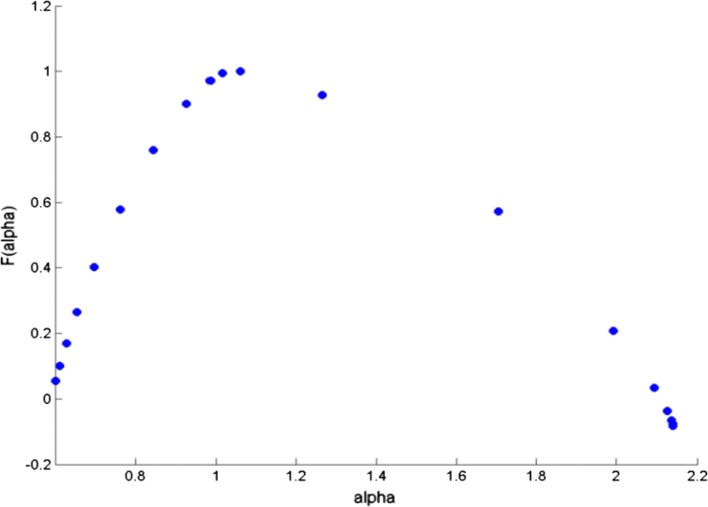

The cross-correlation coefficient is evaluated for all possible combinations of electrodes and music clips during the experimental conditions. All the data sets were first processed with the EMD technique (Maity et al. 2015) to reduce noise in the form of muscle and blink artifacts. The integrated time series were then divided into Ns bins, where Ns = int(N/s) and N is the length of the series. The qth-order detrended covariance Fq(s) was obtained for values of q from −5 to +5 in steps of 1. Power-law scaling of Fq(s) with s is observed for all values of q. Fig. 9 is a representative figure which shows the variation of the scaling exponent λ(q) with q for the music (tanpura drone) and F3 EEG signals in the “with drone” period. For comparison, the same figure also shows the variation of H(q) with q obtained by MF-DFA individually for the same two signals, music and F3 electrode EEG. If the scaling exponent is constant, the series is monofractal; otherwise it is multifractal. The plot depicts multifractal behavior of the cross-correlated time series, and for q = 2 the cross-correlation scaling exponent λ(q) is greater than 0.5, which indicates persistent long-range cross-correlation between the two signals. In the same way, λ(q) was evaluated for all possible combinations of music–EEG as well as EEG–EEG data. The q-dependence of the classical multifractal scaling exponent τ(q) is depicted in Fig. 10 for the music–EEG case. From Fig. 10 we can see that τ(q) depends nonlinearly on q, which is further evidence of multifractality. We also plotted the multifractal spectral width of the cross-correlated music and F3 electrode signals in Fig. 11. The finite spectral width confirms the presence of multifractality in the cross-correlated music and EEG signals. This is a very interesting observation from the standpoint of psycho-acoustics: not only are the music and EEG signals originating from the brain cross-correlated, but the cross-correlated signals are also multifractal in nature. The same analysis was repeated for all other electrodes and music clips as well as between the EEG electrodes, which revealed the amount of cross-correlation between the different electrodes of the human brain.

Fig. 9.

Variation of λ(q) versus q for music and F3

Fig. 10.

Variation of τ(q) versus q for music vs F3

Fig. 11.

Multifractal spectral width for cross correlated music and F3 electrode
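The quantities plotted in Figs. 10 and 11 can be derived from λ(q) as sketched below. The formulas are not spelled out in the text, so the standard multifractal relations are assumed: τ(q) = qλ(q) − 1, singularity strength α = dτ/dq, spectrum f(α) = qα − τ(q), and spectral width taken as the range of α. The function name and the two example λ(q) curves are hypothetical.

```python
import numpy as np


def multifractal_spectrum(q_values, lam):
    """Return tau(q), alpha(q), f(alpha) and the spectral width."""
    q = np.asarray(q_values, dtype=float)
    lam = np.asarray(lam, dtype=float)
    tau = q * lam - 1.0                         # classical scaling exponent
    alpha = np.gradient(tau, q, edge_order=2)   # numerical d(tau)/dq
    f_alpha = q * alpha - tau                   # Legendre transform
    width = float(alpha.max() - alpha.min())
    return tau, alpha, f_alpha, width


q = np.arange(-5, 6)                            # q from -5 to +5 in steps of 1

# Constant lambda(q): tau(q) is linear in q, so the width collapses to zero.
_, _, _, width_mono = multifractal_spectrum(q, np.full(q.shape, 0.8))

# q-dependent lambda(q): tau(q) is nonlinear and the width is finite.
_, _, _, width_multi = multifractal_spectrum(q, 0.9 - 0.03 * q)

print(width_mono, width_multi)
```

A width near zero corresponds to the monofractal case, while a clearly non-zero width is the signature of multifractality discussed above.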

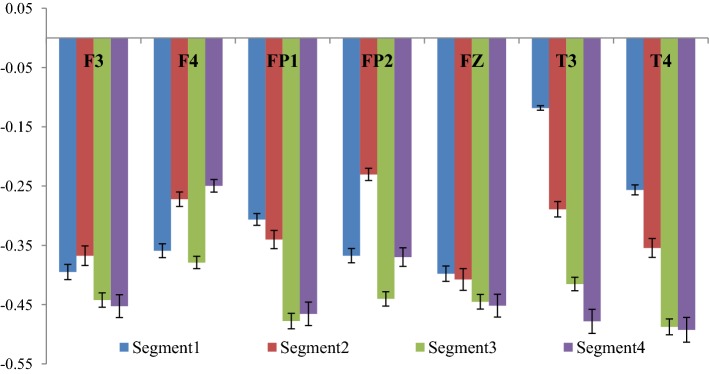

The cross-correlation coefficient (γx) was computed for the two experimental conditions, and the difference between the two conditions is shown in Fig. 12. The complete 2 min signal (both EEG and audio) was divided into four segments of 30 s each, and the cross-correlation coefficient was computed for each segment. Fig. 12 represents the change in cross-correlation coefficient across the four segments of the drone stimulus. It is worth mentioning here that a decrease in the value of γx signifies an increase in correlation between the two signals.

Fig. 12.

Variation of correlation between rest and tanpura drone condition

From the figure it is clear that the degree of correlation between the audio signal and the EEG signals from the different electrodes increases with the progress of time, where Segment 1 denotes the initial 30 s and Segment 4 the concluding 30 s of the drone stimulus. In most cases the degree of correlation is highest in the third segment, i.e. between 1 min and 1 min 30 s, and in a few electrodes (i.e. Fz and F3) it is highest in the last segment, i.e. between 1 min 30 s and 2 min. In one of our earlier works (Maity et al. 2015), we reported how the complexity of the EEG signals generated from the frontal lobes increases significantly under the influence of the tanpura drone signal, while in this work we report how the audio signal which causes the change is directly correlated with the output EEG signal. How the correlation varies during the course of the experiment is also an interesting observation. The figure shows a gradual increase in the degree of cross-correlation: the 2nd segment shows a fall in a few electrodes, but in the 3rd and 4th segments there is always an increase. The temporal electrodes also show the maximum increase in the third and final segments. The error bars in the figure show the computational errors in the measurement of the cross-correlation values. It can thus be interpreted that the middle part of the audio signal is the most engaging, as it is in this section that the correlation between the frontal lobes and the audio signal becomes the highest. In our previous study, we also showed that the increase in complexity was highest in this part. So the results from this experiment corroborate our previous findings. Next, we wanted to see how inter/intra-lobe cross-correlations vary under the effect of the tanpura drone stimulus. So, we calculated the cross-correlation coefficient between pairs of the electrodes chosen for our study during the two experimental conditions, i.e. the “Rest/Auditory Control” state and the “with music” state. Again, the difference between the two states is plotted in Fig. 13. In this case we considered only the electrodes of the left and right hemispheres and neglected the frontal midline electrode Fz.

Fig. 13.

Variation of correlation among different electrodes

From the figure, it is seen that the correlation of the left and right frontal electrodes (i.e. F3 and F4) with the left fronto-polar electrode FP1 shows the most significant increase in the 2nd and 3rd segments of the audio stimulus. Apart from these, the F3–FP2 correlation also increases consistently in the 2nd and 3rd segments of the audio clip, while the inter-lobe frontal correlation, i.e. between the F3 and F4 electrodes, shows the highest rise in the last two parts of the audio signal. Inter-lobe fronto-polar correlation rises significantly in the 2nd and 4th segments of the experiment. An interesting observation from the figure is that the cross-correlation of the temporal electrodes with the frontal electrodes is much higher than the correlation between frontal electrodes, while the inter-lobe correlation among the temporal electrodes (T3–T4) is the strongest of all. Amongst the correlation values, the cross-correlation of the temporal electrodes with the fronto-polar electrodes is on the lower side. The correlation of the temporal lobe with other electrodes is the strongest, which we attribute to the use of the tanpura drone as the brain stimulus. The computational errors are shown in the form of error bars in the figure. Thus, different lobes of the human brain are activated differently, and in different portions of the clip, under the effect of a simple auditory stimulus. In general, the middle portion of the audio clip appears to carry the most important information, leading to a higher degree of cross-correlation among the different lobes of the human brain. The last portion of the signal also engages a certain section of the brain to a great extent. This experiment provides novel insights into the complex neural dynamics going on in the human brain during the cognition and perception of an audio signal.

In the following experiment, EEG data corresponding to emotional music stimuli were sonified and the degree of cross-correlation was evaluated between the audio clip and the sonified EEG signals. From this we aim to develop a robust emotion categorization algorithm which depends on the direct correlation between the audio signal and the EEG waveform. Furthermore, the correlation between the various EEG electrodes was also evaluated to get an idea of the simultaneous arousal-based response in different regions while listening to an emotional music stimulus. Table 3 reports the values of the cross-correlation coefficient between the music clips and the EEG signals originating from the different electrodes in the various experimental conditions. Table 4 reports the inter- and intra-lobe cross-correlations corresponding to the same experimental conditions. In the auditory control condition, white noise has been used as the reference signal for which the value of cross-correlation is computed with the specific EEG electrodes.

Table 3.

Values of cross-correlation coefficients between music and sonified EEG

| Electrode | Part 1 (auditory control) | Part 2 (Chayanat) | Part 3 (auditory control) | Part 4 (Darbari Kanada) |
|---|---|---|---|---|
| F3 | −1.35 ± 0.04 | −0.64 ± 0.02 | −1.25 ± 0.02 | −0.23 ± 0.01 |
| F4 | −1.46 ± 0.06 | −0.85 ± 0.06 | −1.28 ± 0.03 | −0.39 ± 0.02 |
| FP1 | −1.39 ± 0.03 | −0.56 ± 0.03 | −1.52 ± 0.04 | −0.13 ± 0.04 |
| FP2 | −1.27 ± 0.02 | −0.92 ± 0.04 | −1.24 ± 0.02 | −0.15 ± 0.02 |
| Fz | −1.19 ± 0.06 | −0.38 ± 0.02 | −1.27 ± 0.05 | −0.12 ± 0.04 |
| T3 | −0.34 ± 0.03 | −0.95 ± 0.04 | −0.34 ± 0.02 | −0.93 ± 0.07 |
| T4 | −0.36 ± 0.04 | −0.98 ± 0.05 | −0.37 ± 0.04 | −1.04 ± 0.06 |

Table 4.

Values of Cross-Correlation coefficients among EEG electrodes

| Electrode pair | Part 1 (auditory control) | Part 2 (Chayanat) | Part 3 (auditory control) | Part 4 (Darbari Kanada) |
|---|---|---|---|---|
| F3–F4 | −2.05 ± 0.06 | −1.78 ± 0.04 | −1.69 ± 0.06 | −2.09 ± 0.06 |
| F3–FP1 | −1.72 ± 0.04 | −1.92 ± 0.06 | −1.03 ± 0.04 | −1.86 ± 0.07 |
| F3–FP2 | −2.23 ± 0.07 | −1.61 ± 0.07 | −1.49 ± 0.05 | −2.07 ± 0.04 |
| F4–FP1 | −2.47 ± 0.08 | −1.90 ± 0.06 | −1.47 ± 0.05 | −1.94 ± 0.07 |
| F4–FP2 | −2.11 ± 0.03 | −1.74 ± 0.04 | −1.64 ± 0.07 | −2.07 ± 0.11 |
| FP1–FP2 | −2.11 ± 0.06 | −1.91 ± 0.03 | −1.43 ± 0.08 | −1.86 ± 0.09 |
| F3–T3 | −0.89 ± 0.03 | −0.50 ± 0.02 | −0.44 ± 0.03 | −1.15 ± 0.02 |
| F4–T3 | −0.86 ± 0.02 | −0.26 ± 0.03 | −0.35 ± 0.02 | −1.11 ± 0.04 |
| FP1–T3 | −0.31 ± 0.03 | −0.79 ± 0.05 | −0.85 ± 0.04 | −0.79 ± 0.07 |
| FP2–T3 | −0.85 ± 0.04 | −0.61 ± 0.02 | −0.18 ± 0.04 | −1.14 ± 0.03 |
| F3–T4 | −0.95 ± 0.06 | −0.44 ± 0.076 | −0.43 ± 0.02 | −1.18 ± 0.06 |
| F4–T4 | −0.92 ± 0.02 | −0.12 ± 0.04 | −0.47 ± 0.05 | −1.15 ± 0.07 |
| FP1–T4 | −0.31 ± 0.04 | 0.21 ± 0.06 | −0.74 ± 0.04 | −0.76 ± 0.04 |
| FP2–T4 | −0.91 ± 0.05 | −0.68 ± 0.07 | −0.26 ± 0.03 | −1.16 ± 0.06 |
| T3–T4 | −0.89 ± 0.03 | −0.23 ± 0.02 | −0.48 ± 0.01 | −1.11 ± 0.01 |

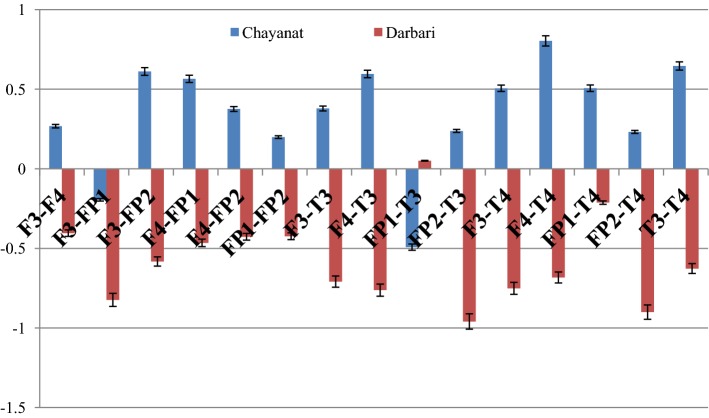

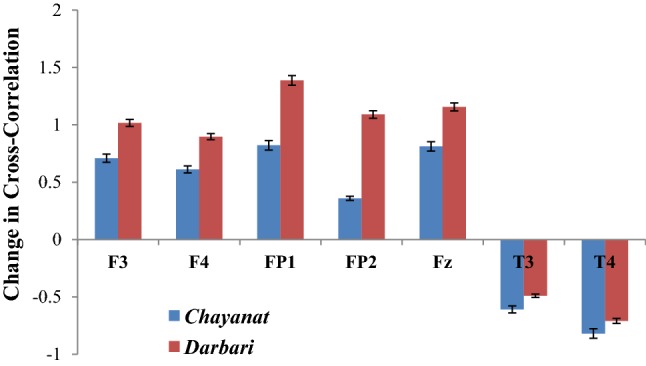

The SD values corresponding to each experimental condition represent the residual error from the EEG analysis performed on the 10 subjects. ANOVA tests revealed significant p-values for each of the experimental conditions with a confidence level of > 85%. We have calculated the impact of each music clip on the human brain by taking the difference between the music-induced state and the control state; these differences are reported in the following figures. While the first figure (Fig. 14) reports the change in the cross-correlation coefficient between the music signal and the EEG electrodes for the two specific experimental conditions, the second figure (Fig. 15) reports the change in inter- and intra-lobe cross-correlation under the same two conditions.

Fig. 14.

Change in degree of cross-correlation between music signal and frontal electrodes

Fig. 15.

Change in degree of cross-correlation between frontal electrodes under two contrast music
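The difference between the music-induced state and the control state can be reproduced from the mean values in Table 3. The sketch below assumes that each clip is compared with the auditory control part immediately preceding it (Part 2 minus Part 1, Part 4 minus Part 3); that pairing, the function name and the omission of the uncertainties are choices made for this illustration.

```python
# Mean gamma_x values transcribed from Table 3 (uncertainties omitted).
# Columns: Part 1 control, Part 2 Chayanat, Part 3 control, Part 4 Darbari Kanada.
TABLE_3 = {
    "F3": (-1.35, -0.64, -1.25, -0.23),
    "F4": (-1.46, -0.85, -1.28, -0.39),
    "FP1": (-1.39, -0.56, -1.52, -0.13),
    "FP2": (-1.27, -0.92, -1.24, -0.15),
    "Fz": (-1.19, -0.38, -1.27, -0.12),
    "T3": (-0.34, -0.95, -0.34, -0.93),
    "T4": (-0.36, -0.98, -0.37, -1.04),
}


def change_from_control(table):
    """Change in gamma_x of each music part relative to the preceding control."""
    changes = {}
    for electrode, (ctrl_1, chayanat, ctrl_2, darbari) in table.items():
        changes[electrode] = {
            "Chayanat": round(chayanat - ctrl_1, 2),
            "Darbari Kanada": round(darbari - ctrl_2, 2),
        }
    return changes


for electrode, delta in change_from_control(TABLE_3).items():
    print(electrode, delta)
```

A positive change means γx rose, i.e. the degree of correlation with the music decreased, while a negative change means the correlation increased.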

Fig. 14 reports the change in the cross-correlation coefficient γx (computed between the music clip and the sonified EEG signal) under the effect of two Hindustani raga clips of contrasting emotion. For the rest state, the cross-correlation coefficient is computed using white noise, which is taken as the reference state against which the responses to Chayanat and Darbari Kanada are computed. It is found that in both cases the value of γx increases for the frontal electrodes, indicating that the degree of cross-correlation between the music and EEG signals decreases. All the frontal electrodes uniformly show a decrease in correlation with the source music signals. In the case of raga Chayanat (conventionally portraying happy emotion), the decrease in correlation is much smaller than in the case of raga Darbari Kanada. The two fronto-polar electrodes FP1/FP2 and the frontal midline electrode Fz are the ones which show a significant increase in cross-correlation coefficient for both music clips. The response of the temporal lobes is in stark contrast to that of the other electrodes, as seen from the figure. There, the degree of correlation increases considerably under the effect of both the Chayanat and Darbari ragas, the increase being higher for the happy clip than for the sad one. Thus the cognitive appraisal corresponding to the temporal lobe is significantly different from that of the frontal lobe. In this way, using EEG sonification techniques, we have been able to establish a direct correlation between the source music signals and the output EEG signals generated by the musical stimuli. Our observations point towards a distinct categorization of positive and negative emotional stimuli using robust non-linear analysis techniques. Fig. 15 reports the change in inter-lobe and intra-lobe cross-correlation coefficient under the effect of the contrasting emotional music stimuli.

A general look at the figure reveals two distinct modes of emotional processing in humans with respect to positive and negative emotional stimuli. While for raga Chayanat we find an increase in the cross-correlation coefficient for all electrode combinations, it decreases for all electrode combinations in the case of raga Darbari Kanada. Thus, in general it can be said that for happy clips the degree of correlation between the frontal and fronto-polar electrodes decreases, while it increases strongly for a sad clip. The increase in correlation between electrodes for the sad clip is most prominent in the F3–FP1, F3–FP2, FP2–T3 and FP2–T4 combinations, while for the happy clip the decrease in correlation is most prominent in the F3–FP2, F4–FP1, T3–T4 and F4–T4 combinations. The observations shed new light on how the neuronal signals originating from different lobes of the brain are correlated with one another during the cognitive processing of an emotional musical stimulus. The emotional categorization is most prominent in the increase or decrease of inter/intra-lobe correlation with respect to the temporal lobe. The activity of different brain lobes corresponding to the processing of specific musical emotions is also an interesting revelation of this study. Further studies covering other electrodes and a variety of emotional music stimuli are being conducted to obtain more robust and concrete results in this domain.

Conclusion

Despite growing efforts to establish sonification as a scientific discipline, the legitimacy of sound as a means to represent scientific data remains contested. Consequently, the sonification community has witnessed increasing attempts to sharpen the boundaries between scientific and artistic sonification. Some have argued, however, that these demarcation efforts do injustice to the assets composers and musicians could potentially bring to the field. Professor Michael Ballam of Utah State University explains the effects of musical repetition: “The human mind shuts down after three or four repetitions of a rhythm, or a melody, or a harmonic progression.” As a result, repetitive rhythmic music may cause people to release control of their thoughts, making them more receptive to whatever lyrical message is joined to the music. The tanpura drone in Hindustani music is a beautiful and widely used example of repetitive music, wherein the same pattern repeats itself again and again to engage the listeners and to create an atmosphere. In this study, we found a direct correlation between the source audio signal and the output EEG signal and also studied the correlation between different parts of the brain under the effect of the same auditory stimulus. The following are the interesting conclusions obtained from the first experiment:

Direct evidence of correlation between the audio signal and the sonified EEG signals obtained from different lobes of the human brain is obtained. The degree of correlation goes on increasing as the audio clip progresses and becomes maximum in the 3rd and 4th segments of the audio clip, i.e. between 1 and 2 min in our case. The rise in correlation differs in scale between electrodes, but in general we have found a specific time period in which the effect of music on the human brain is maximum.

While computing the degree of correlation among different parts of the brain, we found that the audio clip has the ability to activate different brain regions simultaneously or at different times. Again, we find that the mid-portions of the audio clip are the ones which lead to the most pronounced correlation in different electrode combinations. In the final portion of the audio clip we also find a high degree of correlation in several electrode combinations. This shows the ability of a music clip to engage several areas of the brain at once, which is not possible with any other stimulus at hand.

The intra lobe correlation of the two temporal electrodes as well as the inter lobe correlation with other temporal electrodes seem to be affected most significantly under the effect of acoustical stimuli. The degree of correlation increases significantly under the effect of even a neutral musical stimulus like tanpura drone, which does not elicit any emotional appraisal as is evident from the psychological study.

The second experiment dealing with emotional music clips provides conclusive data in regard to emotional categorization in the brain using Hindustani classical music. The following are the interesting conclusions as found from the study:

A strong correlation is found between the music clips and the EEG frontal electrodes, as evidenced by the values of the cross-correlation coefficient. The correlation is found to decrease under the influence of music of both happy and sad emotion. The decrease is much more significant for the music which portrays sad emotion than for the happy emotional clip. We have direct quantitative evidence of correlation between music and EEG signals as well as of the level of arousal among the electrodes in regard to emotional music stimuli.

In the cross-correlation study between different pairs of electrodes in the right and left hemispheres of the frontal lobe as well as the fronto-polar lobe, clear evidence of distinct emotional categorization is observed. While for raga Darbari Kanada the degree of correlation among the electrodes increases significantly across all the electrode combinations, it decreases for raga Chayanat. The inter-lobe correlation between frontal and fronto-polar electrodes appears to increase most in the case of the sad music clip.

The emotional categorization becomes most prominent in the amount of correlation found with respect to the temporal electrodes, the distinction being most prominent in the F3–T4 and F4–T4 combinations followed by the T3–T4 combination. Thus, in the case of acoustical stimuli, temporal electrodes seem to play an important role in non-linear correlation with signals originating from other positions of the human brain.

During the 1970s, the rise of neurofeedback stimulated a parallel search for brainwave control using EEG waves. In a similar manner, future work in this domain involves procuring EEG data with sonified musical EEG signals as the stimulus and further study of the brain response. The use of sonified EEG waves (obtained from different musical stimuli) as a musical stimulus for the same participants, and the analysis of the EEG waves so obtained, is in progress in our laboratory and forms an interesting future application of this study. In conclusion, this study provides unique insights into complex neural and audio dynamics simultaneously and has the potential to go a long way towards devising a methodology for a scientific basis of cognitive music therapy; a foundation has been laid in that direction using Hindustani classical music as an agent.

Acknowledgement

The first author, SS, acknowledges the Council of Scientific and Industrial Research (CSIR), Govt. of India for providing the Senior Research Fellowship (SRF) to pursue this research (09/096(0876)/2017-EMR-I). One of the authors, AB, acknowledges the Department of Science and Technology (DST), Govt. of India for providing (A.20020/11/97-IFD) the DST Inspire Fellowship to pursue this research work. All the authors acknowledge the Department of Science and Technology, Govt. of West Bengal for providing the RMS EEG equipment as part of R&D Project (3/2014).

References

- Adrian ED, Matthews BH. The Berger rhythm: potential changes from the occipital lobes in man. Brain. 1934;57(4):355–385. doi: 10.1093/brain/57.4.355. [DOI] [PubMed] [Google Scholar]

- Akin M, Arserim MA, Kiymik MK, Turkoglu I (2001) A new approach for diagnosing epilepsy by using wavelet transform and neural networks. In: Engineering in medicine and biology society, 2001. Proceedings of the 23rd annual international conference of the IEEE, vol 2. IEEE, pp 1596–1599

- Arslan B, Brouse A, Castet J, Filatriau JJ, Lehembre R, Noirhomme Q, Simon C (2005) Biologically-driven musical instrument. In: Proceedings of the summer workshop on multimodal interfaces (eNTERFACE’05). Faculté Polytechnique de Mons, Mons, BL

- Babiloni C, Buffo P, Vecchio F, Marzano N, Del Percio C, Spada D, Rossi S, Bruni I, Rossini PM, Perani D. Brains “in concert”: frontal oscillatory alpha rhythms and empathy in professional musicians. Neuroimage. 2012;60(1):105–116. doi: 10.1016/j.neuroimage.2011.12.008. [DOI] [PubMed] [Google Scholar]

- Baier G, Hermann T, Stephani U. Event-based sonification of EEG rhythms in real time. Clin Neurophysiol. 2007;118(6):1377–1386. doi: 10.1016/j.clinph.2007.01.025. [DOI] [PubMed] [Google Scholar]

- Balkwill LL, Thompson WF. A cross-cultural investigation of the perception of emotion in music: psychophysical and cultural cues. Music Percept Interdiscip J. 1999;17(1):43–64. doi: 10.2307/40285811. [DOI] [Google Scholar]

- Banerjee A, Sanyal S, Patranabis A, Banerjee K, Guhathakurta T, Sengupta R, Ghose D, Ghose P. Study on brain dynamics by non linear analysis of music induced EEG signals. Physica A. 2016;444:110–120. doi: 10.1016/j.physa.2015.10.030. [DOI] [Google Scholar]

- Behrman A. Global and local dimensions of vocal dynamics. J Acoust Soc Am. 1999;105:432–443. doi: 10.1121/1.424573. [DOI] [PubMed] [Google Scholar]

- Bhattacharya J. Increase of universality in human brain during mental imagery from visual perception. PLoS ONE. 2009;4(1):e4121. doi: 10.1371/journal.pone.0004121. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bigerelle M, Iost A. Fractal dimension and classification of music. Chaos Solitons Fract. 2000;11(14):2179–2192. doi: 10.1016/S0960-0779(99)00137-X. [DOI] [Google Scholar]

- Bornas X, Fiol-Veny A, Balle M, Morillas-Romero A, Tortella-Feliu M. Long range temporal correlations in EEG oscillations of subclinically depressed individuals: their association with brooding and suppression. Cogn Neurodyn. 2015;9(1):53–62. doi: 10.1007/s11571-014-9313-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Braeunig M, Sengupta R, Patranabis A (2012) On tanpura drone and brain electrical correlates. In: Ystad S, Aramaki M, Kronland-Martinet R, Jensen K, Mohanty S (eds) Speech, sound and music processing: embracing research in India. pp 53–65

- Chordia P, Rae A (2007) Understanding emotion in raag: an empirical study of listener responses. In: International symposium on computer music modeling and retrieval. Springer, Berlin, pp 110–124

- Dutta S, Ghosh D, Chatterjee S. Multifractal detrended fluctuation analysis of human gait diseases. Fron Physiol. 2013;4:274. doi: 10.3389/fphys.2013.00274. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dutta S, Ghosh D, Samanta S, Dey S. Multifractal parameters as an indication of different physiological and pathological states of the human brain. Physica A: Statistical Mechanics and its Applications. 2014;396:155–163. doi: 10.1016/j.physa.2013.11.014. [DOI] [Google Scholar]

- Elgendi M, Rebsamen B, Cichocki A, Vialatte F, Dauwels J. Real-time wireless sonification of brain signals. In: Yamaguchi Y, editor. Advances in cognitive neurodynamics (III) Dordrecht: Springer; 2013. pp. 175–181. [Google Scholar]

- Figliola A, Serrano E, Rosso OA. Multifractal detrented fluctuation analysis of tonic-clonic epileptic seizures. European Phys J Spec Topics. 2007;143(1):117–123. doi: 10.1140/epjst/e2007-00079-9. [DOI] [Google Scholar]

- Gao TT, Wu D, Huang YL, Yao DZ. Detrended fluctuation analysis of the human EEG during listening to emotional music. Journal of Electronic Science and Technology. 2007;5(3):272–277. [Google Scholar]

- Gao J, Hu J, Tung WW. Complexity measures of brain wave dynamics. Cogn Neurodyn. 2011;5(2):171–182. doi: 10.1007/s11571-011-9151-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ghosh M. Natyashastra (ascribed to Bharata Muni) Varanasi: Chowkhamba Sanskrit Series Office; 2002. [Google Scholar]

- Ghosh D, Dutta S, Chakraborty S. Multifractal detrended cross-correlation analysis for epileptic patient in seizure and seizure free status. Chaos, Solitons Fractals. 2014;67:1–10. doi: 10.1016/j.chaos.2014.06.010. [DOI] [Google Scholar]

- Ghosh D, Dutta S, Chakraborty S. Multifractal detrended cross-correlation analysis of market clearing price of electricity and SENSEX in India. Physica A. 2015;434:52–59. doi: 10.1016/j.physa.2015.03.082. [DOI] [Google Scholar]

- Ghosh D, Sengupta R, Sanyal S, Banerjee A. Emotions from Hindustani classical music: an EEG based study including neural hysteresis. In: Baumann C, editor. Musicality of human brain through fractal analytics. Singapore: Springer; 2018. pp. 49–72. [Google Scholar]

- Ghosh D, Sengupta R, Sanyal S, Banerjee A. Musical perception and visual imagery: do musicians visualize while performing. In: Baumann C, editor. Musicality of human brain through fractal analytics. Singapore: Springer; 2018. pp. 73–102. [Google Scholar]

- Glen J. Use of audio signals derived from electroencephalographic recordings as a novel ‘depth of anaesthesia’monitor. Med Hypotheses. 2010;75(6):547–549. doi: 10.1016/j.mehy.2010.07.025. [DOI] [PubMed] [Google Scholar]

- Hardstone R, Poil SS, Schiavone G, Jansen R, Nikulin VV, Mansvelder HD, Linkenkaer-Hansen K. Detrended fluctuation analysis: a scale-free view on neuronal oscillations. Front Physiol. 2012;3:450. doi: 10.3389/fphys.2012.00450. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hermann T (2008) Taxonomy and definitions for sonification and auditory display. In: Proceedings of the 14th international conference on auditory display (ICAD 2008)

- Hermann T, Meinicke P, Bekel H, Ritter H, Müller HM, Weiss S (2002) Sonification for eeg data analysis. In: Proceedings of the 2002 international conference on auditory display

- Hinterberger T, Hill J, Birbaumer N (2013) An auditory brain-computer communication device. In: proceedings IEEE BIOCAS’04, 2004. The 19th international conference on auditory display (ICAD-2013), Lodz, Poland

- Hsü KJ, Hsü AJ. Fractal geometry of music. Proc Natl Acad Sci. 1990;87(3):938–941. doi: 10.1073/pnas.87.3.938. [DOI] [PMC free article] [PubMed] [Google Scholar]

- John TN, Puthankattil SD, Menon R. Analysis of long range dependence in the EEG signals of Alzheimer patients. Cogn Neurodyn. 2018;12(2):183–199. doi: 10.1007/s11571-017-9467-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kantelhardt JW, Zschiegner SA, Koscielny-Bunde E, Havlin S, Bunde A, Stanley HE. Multifractal detrended fluctuation analysis of nonstationary time series. Physica A. 2002;316(1):87–114. doi: 10.1016/S0378-4371(02)01383-3. [DOI] [Google Scholar]

- Kantelhardt JW, Rybski D, Zschiegner SA, Braun P, Bunde EK, Livina V, et al. Multifractality of river runoff and precipitation: comparison of fluctuation analysis and wavelet methods. Phys A. 2003;330:240–245. doi: 10.1016/j.physa.2003.08.019. [DOI] [Google Scholar]

- Karkare S, Saha G, Bhattacharya J. Investigating long-range correlation properties in EEG during complex cognitive tasks. Chaos Solitons Fract. 2009;42(4):2067–2073. doi: 10.1016/j.chaos.2009.03.148. [DOI] [Google Scholar]

- Khamis H, Mohamed A, Simpson S, McEwan A. Detection of temporal lobe seizures and identification of lateralisation from audified EEG. Clin Neurophysiol. 2012;123(9):1714–1720. doi: 10.1016/j.clinph.2012.02.073. [DOI] [PubMed] [Google Scholar]

- Koelsch S, Fritz T, Müller K, Friederici AD. Investigating emotion with music: an fMRI study. Hum Brain Mapp. 2006;27(3):239–250. doi: 10.1002/hbm.20180. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kramer G (ed) (1994) Some organizing principles for representing data with sound. In: Auditory display-sonification, audification, and auditory interfaces. Reading, MA, Addison-Wesley, pp 185–221

- Kramer G, Walker B, Bonebright T, Cook P, Flowers JH, Miner N, Neuhoff J (2010) Sonification report: status of the field and research agenda

- Kumar A, Mullick SK. Nonlinear dynamical analysis of speech. J Acoust Soc Am. 1996;100(1):615–629. doi: 10.1121/1.415886. [DOI] [Google Scholar]

- Lin YP, Wang CH, Jung TP, Wu TL, Jeng SK, Duann JR, Chen JH. EEG-based emotion recognition in music listening. IEEE Trans Biomed Eng. 2010;57(7):1798–1806. doi: 10.1109/TBME.2010.2048568. [DOI] [PubMed] [Google Scholar]

- Lu J, Wu D, Yang H, Luo C, Li C, Yao D. Scale-free brain-wave music from simultaneously EEG and fMRI recordings. PLoS ONE. 2012;7(11):e49773. doi: 10.1371/journal.pone.0049773. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lu J, Guo S, Chen M, Wang W, Yang H, Guo D, Yao D. Generate the scale-free brain music from BOLD signals. Medicine. 2018;97(2):e9628. doi: 10.1097/MD.0000000000009628. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Maity AK, Pratihar R, Mitra A, Dey S, Agrawal V, Sanyal S, Banerjee A, Ghosh D, Sengupta R. Multifractal detrended fluctuation analysis of alpha and theta EEG rhythms with musical stimuli. Chaos Solitons Fract. 2015;81:52–67. doi: 10.1016/j.chaos.2015.08.016. [DOI] [Google Scholar]

- Mathur A, Vijayakumar SH, Chakrabarti B, Singh NC. Emotional responses to Hindustani raga music: the role of musical structure. Front Psychol. 2015;6:513. doi: 10.3389/fpsyg.2015.00513. [DOI] [PMC free article] [PubMed] [Google Scholar]

- McCreadie KA, Coyle DH, Prasad G. Sensorimotor learning with stereo auditory feedback for a brain–computer interface. Med Biol Eng Compu. 2013;51(3):285–293. doi: 10.1007/s11517-012-0992-7. [DOI] [PubMed] [Google Scholar]

- Meinicke P, Hermann T, Bekel H, Müller HM, Weiss S, Ritter H. Identification of discriminative features in the EEG. Intell Data Anal. 2004;8(1):97–107. doi: 10.3233/IDA-2004-8106. [DOI] [Google Scholar]

- Miranda ER, Brouse A. Interfacing the brain directly with musical systems: on developing systems for making music with brain signals. Leonardo. 2005;38(4):331–336. doi: 10.1162/0024094054762133. [DOI] [Google Scholar]

- Miranda ER, Castet J (eds) (2014) Guide to brain-computer music interfacing. Springer

- Miranda ER, Magee WL, Wilson JJ, Eaton J, Palaniappan R. Brain-computer music interfacing (BCMI): from basic research to the real world of special needs. Music Med. 2011;3(3):134–140. doi: 10.1177/1943862111399290. [DOI] [Google Scholar]

- Movahed MS, Hermanis E. Fractal analysis of river flow fluctuations. Physica A. 2008;387(4):915–932. doi: 10.1016/j.physa.2007.10.007. [DOI] [Google Scholar]

- Olivan J, Kemp B, Roessen M. Easy listening to sleep recordings: tools and examples. Sleep Med. 2004;5(6):601–603. doi: 10.1016/j.sleep.2004.07.010. [DOI] [PubMed] [Google Scholar]

- Podobnik B, Stanley HE. Detrended cross-correlation analysis: a new method for analyzing two nonstationary time series. Phys Rev Lett. 2008;100(8):084102. doi: 10.1103/PhysRevLett.100.084102. [DOI] [PubMed] [Google Scholar]

- Podobnik B, Jiang ZQ, Zhou WX, Stanley HE. Statistical tests for power-law cross-correlated processes. Phys Rev E. 2011;84(6):066118. doi: 10.1103/PhysRevE.84.066118. [DOI] [PubMed] [Google Scholar]

- Pressing Jeff. Nonlinear maps as generators of musical design. Comput Music J. 1988;12(2):35–46. doi: 10.2307/3679940. [DOI] [Google Scholar]

- Pu J, Xu H, Wang Y, Cui H, Hu Y. Combined nonlinear metrics to evaluate spontaneous EEG recordings from chronic spinal cord injury in a rat model: a pilot study. Cogn Neurodyn. 2016;10(5):367–373. doi: 10.1007/s11571-016-9394-0. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rosenboom D. Extended musical interface with the human nervous system: assessment and prospectus. Leonardo. 1999;32(4):257–257. doi: 10.1162/002409499553398. [DOI] [Google Scholar]

- Sadegh Movahed M, Jafari GR, Ghasemi F, Rahvar S, Reza Rahimi TM. Multifractal detrended fluctuation analysis of sunspot time series. J Stat Mech. 2006;0602:P02003. [Google Scholar]

- Sammler D, Grigutsch M, Fritz T, Koelsch S. Music and emotion: electrophysiological correlates of the processing of pleasant and unpleasant music. Psychophysiology. 2007;44(2):293–304. doi: 10.1111/j.1469-8986.2007.00497.x. [DOI] [PubMed] [Google Scholar]

- Sengupta R, Dey N, Nag D, Datta AK. Comparative study of fractal behavior in quasi-random and quasi-periodic speech wave map. Fractals. 2001;9(04):403–414. doi: 10.1142/S0218348X01000932. [DOI] [Google Scholar]

- Sengupta R, Dey N, Datta AK, Ghosh D. Assessment of musical quality of tanpura by fractal-dimensional analysis. Fractals. 2005;13(03):245–252. doi: 10.1142/S0218348X05002891. [DOI] [Google Scholar]

- Sengupta R, Dey N, Datta AK, Ghosh D, Patranabis A. Analysis of the signal complexity in sitar performances. Fractals. 2010;18(02):265–270. doi: 10.1142/S0218348X10004816. [DOI] [Google Scholar]

- Sjőlander K, Beskow J (2009) Wavesurfer. Computer program, version, 1(3)

- Supper A (2012) The search for the “killer application”: drawing the boundaries around the sonification of scientific data. In: The oxford handbook of sound studies

- Telesca L, Lapenna V, Macchiato M. Mono- and multi-fractal investigation of scaling properties in temporal patterns of seismic sequences. Chaos Soliton Fract. 2004;19:1–15. doi: 10.1016/S0960-0779(03)00188-7. [DOI] [Google Scholar]

- Truax, B (1990) Chaotic non-linear systems and digital synthesis: an exploratory study. In: International computer music conference (ICMC). Glasgow, Scotland, pp 100–103

- Väljamäe A, Steffert T, Holland S, Marimon X, Benitez R, Mealla S et al (2013). A review of real-time EEG sonification research. In: Proceddings of the interntional conference on auditory display, 2013, pp 85–93

- Van Leeuwen WS, Bekkering ID. Some results obtained with the EEG-spectrograph. Electroencephalogr Clin Neurophysiol. 1958;10(3):563–570. doi: 10.1016/0013-4694(58)90019-1. [DOI] [PubMed] [Google Scholar]

- Wang J, Shang P, Ge W. Multifractal cross-correlation analysis based on statistical moments. Fractals. 2012;20(03n04):271–279. doi: 10.1142/S0218348X12500259. [DOI] [Google Scholar]