Abstract

Various mental disorders are accompanied by some degree of cognitive impairment. In neurodegenerative disorders in particular, cognitive impairment is the phenotypical hallmark of the disease. Effective, accurate and timely cognitive assessment is key to the early diagnosis of this family of disorders. Current standard-of-care techniques for cognitive assessment are primarily paper-based and need to be administered by a healthcare professional. They are also language- and education-dependent and typically suffer from a learning bias. These tests are therefore not ideal for large-scale proactive cognitive screening and disease progression monitoring. We developed the Integrated Cognitive Assessment (referred to as CGN_ICA), a 5-minute computerized cognitive assessment tool based on a rapid visual categorization task. In this task, a series of carefully selected natural images of varied difficulty are presented to participants. Overall, 448 participants across a wide age range and with different levels of education took the CGN_ICA test. We compared participants’ CGN_ICA test results with a variety of standard pen-and-paper tests that are routinely used to assess cognitive performance, such as the Symbol Digit Modalities Test (SDMT) and the Montreal Cognitive Assessment (MoCA). CGN_ICA had excellent test-retest reliability, showed convergent validity with the standard-of-care cognitive tests used here, and proved suitable for micro-monitoring of cognitive performance.

Introduction

Brain disorders can cause deficits in cognitive performance. In neurodegenerative disorders in particular, cognitive impairment is the phenotypical hallmark of the disease. Neurodegenerative disorders, including dementia and Alzheimer’s disease, continue to represent a major economic, social and healthcare burden1. These diseases remain underdiagnosed or are diagnosed too late2, resulting in less favorable health outcomes. Current routinely used approaches to cognitive assessment, such as the Mini Mental State Examination (MMSE)3, Montreal Cognitive Assessment (MoCA)4 and Addenbrooke’s Cognitive Examination (ACE)5, are primarily paper-based and language- and education-dependent. They also need to be administered by a healthcare professional (e.g. a physician). These tests are therefore not ideal tools for wide, proactive screening of cognitive impairment, which can be crucial to earlier diagnosis.

Several studies have emphasized the importance of early diagnosis2,6–9 and its role in driving better treatment and improvement of cognition and quality of life10. Therefore, developing new tools for effective, accurate and timely cognitive assessment is key to tackling this family of brain disorders.

Growing attention has been drawn to changes in the visual system in connection with dementia and cognitive impairment11–16. Previous studies have linked visual function abnormalities with Alzheimer’s disease and other types of cognitive impairment17–19. All parts of the visual system may be affected in Alzheimer’s disease, including the optic nerve, retina, lateral geniculate nucleus (LGN) and visual cortex19. Accordingly, visual dysfunction has been shown to predict cognitive deficits in Alzheimer’s disease19,20. The human motor cortex21,22 and the oculomotor system23,24 have also been shown to be affected in Alzheimer’s disease.

We therefore developed a rapid visual categorization test that measures participants’ accuracy and reaction times, engaging the visual and motor cortices as well as oculomotor function. Categorization accuracy and reaction times are then summarized to assess participants’ cognitive performance. The proposed integrated cognitive assessment (CGN_ICA) test is designed to target cognitive domains and brain areas that are affected in the initial stages of cognitive disorders such as dementia, ideally before the onset of memory symptoms. Thus, rather than focusing solely on working memory, the test engages the retina, the visual cortex and the motor cortex, all of which have been shown to be affected pre-dementia or in the early stages of the disease21,25–31. The focus of CGN_ICA on the speed and accuracy of visual information processing32–35 is in line with recent evidence suggesting that simultaneous object perception deficits are related to reduced visual processing speed in amnestic mild cognitive impairment36. Additionally, the proposed test is self-administered and intrinsically independent of language and culture, making it well suited to large-scale proactive cognitive screening and cognitive monitoring.

This study aims to assess CGN_ICA’s convergent validity with the routinely used standard pen-and-paper cognitive tests, its test-retest reliability, and whether the proposed test is suitable for micro-monitoring of cognitive performance.

Material and Methods

CGN_ICA test description

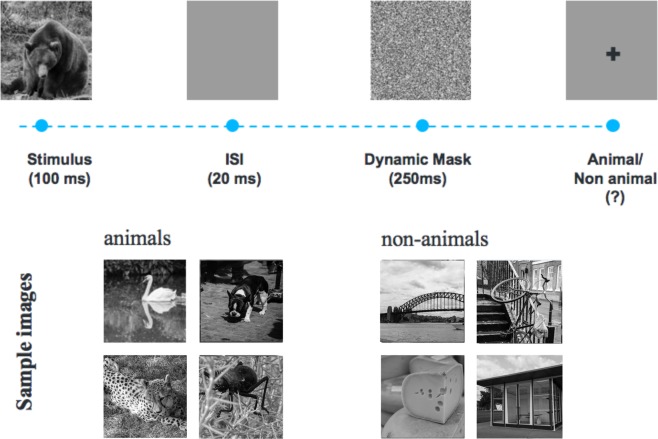

The CGN_ICA test is a rapid visual categorization task with backward masking33,37,38. One hundred natural images (50 animal and 50 non-animal) with various levels of difficulty were presented to the participants. Each image was presented for 100 ms, followed by a 20 ms inter-stimulus interval (ISI) and then a dynamic noise mask (250 ms). After the mask, the participant categorized the image as animal or non-animal (Fig. 1). On the iPad, participants responded by tapping the left or right side of the screen. On the Raspberry Pi (RaPi), they pressed one of two pre-assigned keys on a keyboard (‘F’ or ‘J’). Images were presented at the center of the screen and subtended 7 degrees of visual angle. For more information about rapid visual categorization tasks, refer to Mirzaei et al.33.
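The trial structure described above can be summarized as a fixed event timeline. The following is a minimal Python sketch, not the authors’ implementation. The event durations come from the text. The names `trial_timeline`, `score_keypress` and `KEY_TO_LABEL` are hypothetical. The assignment of ‘F’ to animal and ‘J’ to non-animal is also an assumption, because the text does not say which key maps to which category.

```python
from dataclasses import dataclass

# Durations (ms) taken from the task description.
STIMULUS_MS = 100
ISI_MS = 20
MASK_MS = 250

# Hypothetical key assignment for the RaPi version: the text only says that
# 'F' and 'J' were pre-assigned, not which one meant "animal".
KEY_TO_LABEL = {"f": "animal", "j": "non-animal"}


@dataclass
class TrialEvent:
    name: str
    onset_ms: int   # relative to image onset
    offset_ms: int


def trial_timeline(stimulus_ms=STIMULUS_MS, isi_ms=ISI_MS, mask_ms=MASK_MS):
    """Image -> blank ISI -> dynamic mask; the response is collected afterwards."""
    events, t = [], 0
    for name, duration in (("image", stimulus_ms), ("isi", isi_ms), ("mask", mask_ms)):
        events.append(TrialEvent(name, t, t + duration))
        t += duration
    return events


def score_keypress(key, true_label):
    """True if the pressed key maps to the correct category (unknown keys count as wrong)."""
    return KEY_TO_LABEL.get(key.lower()) == true_label


for event in trial_timeline():
    print(f"{event.name:>5}: {event.onset_ms}-{event.offset_ms} ms")
```

With the stated durations, the mask offset falls 370 ms after image onset. The iPad version would replace the key lookup with a left/right screen-half lookup.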

Figure 1.

The CGN_ICA test pipeline. One hundred natural images (50 animal and 50 non-animal) with various levels of difficulty are presented to the participants. Each image is presented for 100 ms, followed by a 20 ms inter-stimulus interval (ISI), a dynamic noise mask (250 ms), and then the participant’s categorization into animal vs. non-animal. A few sample images are shown for demonstration purposes.

The CGN_ICA test starts with a separate set of 10 practice images (5 animal, 5 non-animal) to familiarize participants with the task. These images are excluded from further analysis. If participants perform above chance (>50% correct) on these 10 images, they continue to the main task. If they perform at or below chance, the test instructions are presented again and a new set of 10 introductory images follows. If they perform above chance on this second attempt, they progress to the main task. If they perform at or below chance a second time, the test is aborted. This occurred only in Experiment 2, in which three of 61 participants were excluded for this reason, leaving the 58 participants shown in Table 1.
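The practice-block rule can be written as a small decision function. This is a sketch under the assumption that “above chance” means strictly more than 50% correct, i.e. at least 6 of 10. The function names are hypothetical.

```python
def above_chance(n_correct, n_images=10):
    """'Above chance' means strictly more than 50% correct (at least 6 of 10)."""
    return n_correct / n_images > 0.5


def practice_outcome(first_correct, second_correct=None, n_images=10):
    """Decide what happens after the practice block(s).

    first_correct / second_correct: number of correct answers on the first and
    (optional) second set of practice images.
    """
    if above_chance(first_correct, n_images):
        return "main_task"
    if second_correct is None:
        # At or below chance on the first attempt: repeat the instructions
        # and present a new set of practice images.
        return "repeat_instructions"
    return "main_task" if above_chance(second_correct, n_images) else "aborted"


print(practice_outcome(7))      # main_task
print(practice_outcome(5))      # repeat_instructions
print(practice_outcome(5, 4))   # aborted
```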

Table 1.

Summary of all the experiments.

| Experiment | Number of Participants | Age (years): mean, SD, [min, max] | Education (years): mean, SD, [min, max] | Gender (no. female) | Cognitive Tests | ICA Platform |
|---|---|---|---|---|---|---|
| 1 | 212 | 74, 10, [46, 98] | 9, 6, [0, 23] | 110 (51%) | MoCA, CGN_ICA | Raspberry Pi (RaPi) |
| 2 | 58 | 62, 6, [54, 79] | 14, 5, [3, 24] | 33 (56%) | MoCA, ACE-R, CGN_ICA | iPad |
| 3 | 166 | 37, 10, [19, 65] | 14, 3, [1, 20] | 125 (75%) | SDMT, BVMT-R, CVLT-II, CGN_ICA | iPad |
| 3′ (re-test) | 44 | 38, 12, [18, 64] | 14, 2, [8, 20] | 29 (66%) | CGN_ICA, SDMT | iPad |
| 4 | 12 | 29, 3, [20, 36] | 19, 4, [15, 24] | 5 (41%) | CGN_ICA | Web |

For each experiment, the table shows the number of participants, their demographics (age, education and gender), and the cognitive tests they took. A total of 448 volunteers took part in these experiments. Experiments 1 and 2 were designed to establish the correlation of CGN_ICA with standard-of-care cognitive assessment tools for MCI and dementia screening in older adults (i.e. MoCA and ACE-R). Experiment 3 covers younger individuals (19 to 65 years old) who took CGN_ICA along with three other standard cognitive tests particularly suited to this age range. Experiment 3′ is a test-retest study. In it, 44 volunteers who participated in Experiment 3 were called back after five weeks (±15 days) to take the CGN_ICA and SDMT tests a second time. This was done to assess the test-retest reliability of CGN_ICA (r = 0.96, p < 10⁻⁷). Experiment 4 is a learning-effect experiment in which 12 young university students took the CGN_ICA test every other day for two weeks. Its purpose was to determine whether CGN_ICA is free from learning bias and suitable for micro-monitoring of cognitive performance.

Scientific rationale behind the CGN_ICA test

The CGN_ICA test takes advantage of millions of years of human evolution: the human brain’s strong reaction to animal stimuli39–42. Human observers are very good at recognizing whether briefly flashed novel images contain an animal, and previous work has shown that the underlying visual processing can be performed quickly38,43. The strongest categorical division represented in the human higher-level visual cortex (the inferior temporal cortex) appears to be that between animate and inanimate objects. Several studies have shown this in human and non-human primates38–40,44,45. Studies also show that it takes on average about 100 to 120 ms for humans to differentiate animate from inanimate stimuli46–48. Following this rationale, in the CGN_ICA test the images are presented for 100 ms, followed by a short inter-stimulus interval (ISI) and then a dynamic mask. Shorter ISIs make the animal detection task more difficult. Longer ISIs make it easier and therefore less useful for testing purposes, as the task may then fail to detect milder cognitive impairments. The dynamic mask is used to remove (or at least reduce) the effect of recurrent processes in the brain49–53. This makes the task more challenging by reducing the ongoing recurrent neural activity that could boost the participant’s performance. It also leaves less room for the resilient brain to compensate for subtle ongoing neurodegeneration in the early stages of the disease.

Participants

As shown in Table 1, we conducted four different experiments; in total, 448 volunteers took part in this study. The study was conducted according to the Declaration of Helsinki and approved by the local ethics committee at Royan Institute. Informed consent was obtained from all participants.

Inclusion criteria were age above 18 years, normal or corrected-to-normal vision, and no severe upper-limb arthropathy or motor problems that could prevent participants from completing the tests independently. For each participant, information about age, education and gender was also collected.

Stimulus set

We used a set of 100 grayscale natural images, half of which contained an animal. The images varied in their level of difficulty. In some images, the head or body of the animal is clearly visible, which makes it easier to detect. In other images, the animals are farther away or presented in cluttered environments, making them more difficult to detect. A few sample images are shown in Fig. 1. Grayscale images were used to rule out any effect of common forms of color blindness on participants’ results. Furthermore, color alone can facilitate animal detection without full processing of the shape of the stimulus. This would have made the task easier and less suitable for detecting milder cognitive dysfunction.

To construct the mask, a white noise image was filtered at four different spatial scales, and the resulting images were thresholded to generate high-contrast binary patterns. For each spatial scale, four new images were generated by rotating and mirroring the original image, yielding a pool of 16 images. The dynamic noise mask used in the CGN_ICA test was a sequence of 8 images chosen randomly from this pool, with each spatial scale appearing twice.
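The mask construction above can be sketched with NumPy, under several stated assumptions, as follows. “Filtering at a spatial scale” is implemented here as a Gaussian low-pass filter in the Fourier domain. The image size (256 px), the four cutoff values, the median threshold and the particular rotations and mirrorings are all illustrative choices, not taken from the text. Only the pool size (4 scales × 4 variants = 16) and the rule that each scale appears twice in an 8-frame sequence come from the description. If the 8 frames shared the 250 ms mask period equally, each would last about 31 ms, but that split is also an assumption.

```python
import numpy as np


def lowpass_noise(size, cutoff_cycles, rng):
    """White noise low-pass filtered in the Fourier domain with a Gaussian
    whose standard deviation is `cutoff_cycles` (cycles per image)."""
    noise = rng.standard_normal((size, size))
    freqs = np.fft.fftfreq(size) * size            # spatial frequency in cycles/image
    radius = np.sqrt(freqs[:, None] ** 2 + freqs[None, :] ** 2)
    gain = np.exp(-(radius ** 2) / (2.0 * cutoff_cycles ** 2))
    return np.real(np.fft.ifft2(np.fft.fft2(noise) * gain))


def binarize(img):
    """Threshold at the median to obtain a high-contrast binary (0/1) pattern."""
    return (img > np.median(img)).astype(np.uint8)


def variants(img):
    """Four rotated/mirrored versions (the exact transforms are an assumption)."""
    return [np.rot90(img, 1), np.rot90(img, 2), np.fliplr(img), np.flipud(img)]


def build_mask_pool(size=256, scales=(4, 8, 16, 32), seed=0):
    """Return {scale: [4 binary images]}, i.e. a pool of 16 images."""
    rng = np.random.default_rng(seed)
    return {s: variants(binarize(lowpass_noise(size, s, rng))) for s in scales}


def dynamic_mask(pool, rng):
    """8-frame sequence (scale, image) in which every spatial scale appears exactly twice."""
    frames = []
    for scale, images in pool.items():
        picks = rng.choice(len(images), size=2, replace=False)
        frames.extend((scale, images[i]) for i in picks)
    order = rng.permutation(len(frames))            # shuffle the presentation order
    return [frames[i] for i in order]


pool = build_mask_pool()
mask = dynamic_mask(pool, np.random.default_rng(1))
print([scale for scale, _ in mask])
```

Drawing a fresh random order for each trial would keep the mask dynamic across trials. Whether the original test did so is not stated.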

Reference pen-and-paper cognitive tests

Montreal Cognitive Assessment (MoCA)

MoCA4 is a widely used screening tool for detecting cognitive impairment, typically in older adults. The MoCA test is a one-page 30-point test administered in approximately 10 minutes.

Mini-Mental State Examination (MMSE)

The MMSE3 is a 30-point questionnaire used in clinical and research settings to measure cognitive impairment. It is commonly used to screen for dementia in older adults and takes about 10 minutes to administer.

Addenbrooke’s Cognitive Examination-Revised (ACE-R)

The ACE54,55 was originally developed at the Cambridge Memory Clinic as an extension of the MMSE. ACE-R is a revised version of ACE that includes the MMSE score as one of its sub-scores. The ACE-R5 assesses five cognitive domains: attention, memory, verbal fluency, language and visuospatial abilities. On average, the test takes about 20 minutes to administer and score.

Symbol Digit Modalities Test (SDMT)

The SDMT is designed to assess speed of information processing and takes about 5 minutes to administer56. Nine symbols, each paired with a single digit, are presented as a key at the top of a standard sheet of paper. The rest of the page contains symbols, each with an empty box next to it. Participants are asked to write in each box the digit associated with that symbol as quickly as possible. The outcome score is the number of correct matches within 90 seconds.

California Verbal Learning Test, 2nd edition (CVLT-II)

The CVLT-II57,58 begins with the examiner reading a list of 16 words. Participants listen to the list and then report as many of the items as they can recall. The entire list is then read again, followed by a second recall attempt. Altogether, there are five learning trials. The final score, out of a maximum of 80, is the total number of correct recalls across the five trials. As in the brief international cognitive assessment for multiple sclerosis (BICAMS) battery59, we used only the learning trials of the CVLT-II, which take about 10 minutes to administer.
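The maximum of 80 follows from 5 trials × 16 words. Below is a minimal sketch of the learning-trial total. It rests on these scoring assumptions, which the official CVLT-II manual may handle differently: matching is case-insensitive, a word repeated within one trial counts once, and intrusions (words not on the list) score zero. The function name is hypothetical.

```python
def cvlt_learning_total(word_list, trials):
    """Total correct recalls over the five CVLT-II learning trials (max 5 x 16 = 80).

    Sketch assumptions: case-insensitive matching, repeats within a trial count
    once, and intrusions (words not on the list) earn no points.
    """
    targets = {w.lower() for w in word_list}
    if len(targets) != 16:
        raise ValueError("expected a list of 16 distinct words")
    if len(trials) != 5:
        raise ValueError("expected five learning trials")
    # Per trial: number of distinct list words recalled; then sum over trials.
    return sum(len(targets & {w.lower() for w in recalled}) for recalled in trials)


words = [f"word{i}" for i in range(16)]
print(cvlt_learning_total(words, [words] * 5))  # 80, perfect recall on every trial
```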

Brief Visual Memory Test–Revised (BVMT-R)

The BVMT-R assesses visuospatial memory60,61. In this test, six abstract shapes are presented to the participant for 10 seconds. The display is then removed from view, and participants are asked to draw the shapes from memory with pencil on paper. There are three learning trials, and the primary outcome measure is the total number of points earned over the three learning trials. The test takes about 5 minutes to administer.

Experiments

We conducted four different experiments, as summarized in Table 1. The first three experiments were designed to measure the correlation of CGN_ICA with a wide range of routinely used reference cognitive tests. The goal was to investigate whether the speed and accuracy of visual processing in a rapid visual categorization task correlate with participants’ cognitive performance.

In the first and second experiments, we aimed to test the ability of CGN_ICA to assess cognitive performance in older adults. We therefore used MoCA and/or ACE-R as reference cognitive tests, both of which are routinely used to screen for mild cognitive impairment (MCI) and dementia in older adults. In the first experiment, 212 volunteers participated and MoCA was used as the reference cognitive test. The CGN_ICA test was delivered via a Raspberry Pi (RaPi), a small single-board computer, attached to a keyboard and an LCD monitor. In the second experiment, 58 participants took the CGN_ICA on an iPad, and both MoCA and ACE-R were used as reference tests.

The third experiment used SDMT, BVMT-R and CVLT-II as the reference cognitive tests, measuring speed of information processing, visuospatial memory and verbal learning, respectively. Together, these three tests form the BICAMS battery, which requires about 15 to 20 minutes to administer. It is primarily used to detect cognitive dysfunction in younger adults who may have multiple sclerosis (MS). In total, 166 participants took part in this experiment. Forty-four of them were selected for a re-test at a second visit to assess the test-retest reliability of CGN_ICA. Participants for the re-test session were selected at random, while keeping the age range, level of education and gender ratio relatively similar to those of the participants in the first session. The CGN_ICA was delivered via an iPad.

All pen-and-paper cognitive tests were administered by a healthcare professional. The order of administration of CGN_ICA and the reference cognitive tests was randomized.

Finally, Experiment 4 was designed to study whether the CGN_ICA test shows a learning bias when taken multiple times at short intervals. Learning bias is defined here as an improvement in test score that results from repeated exposure to the test, rather than from any change in cognitive ability. Twelve young volunteers participated in this experiment. For convenience, the CGN_ICA was delivered remotely via a web platform. Participants took the CGN_ICA test every other day for two weeks.

Accuracy, speed, and CGN_ICA summary score calculations

Preprocessing

We used the boxplot rule to remove outlier reaction times before computing the CGN_ICA score. A boxplot is a non-parametric method for describing groups of numerical data through their quartiles, and it allows outliers in the data to be detected. Following the boxplot approach, reaction times greater than q3 + w × (q3 − q1) or less than q1 − w × (q3 − q1) are considered outliers. Here, q1 and q3 are the lower and upper quartiles of the reaction times, and w = 1.5 is the whisker length.
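The boxplot rule translates directly into a few lines of NumPy. The sketch below follows the thresholds in the text with w = 1.5. One caveat: `np.percentile` uses linear interpolation by default, and MATLAB’s quartile definitions can differ slightly, so reaction times lying exactly at the fences may be classified differently. The function name is hypothetical.

```python
import numpy as np


def remove_rt_outliers(rts, w=1.5):
    """Drop reaction times outside [q1 - w*IQR, q3 + w*IQR] (boxplot rule).

    Returns the retained reaction times and the boolean keep-mask.
    """
    rts = np.asarray(rts, dtype=float)
    q1, q3 = np.percentile(rts, [25, 75])   # lower and upper quartiles
    iqr = q3 - q1
    keep = (rts >= q1 - w * iqr) & (rts <= q3 + w * iqr)
    return rts[keep], keep


kept, mask = remove_rt_outliers([400, 420, 430, 450, 460, 470, 480, 2000])
print(kept)  # the 2000 ms response falls above the upper fence and is removed
```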

Accuracy is defined as the number of correct categorizations divided by the total number of images, multiplied by 100.

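$$\text{Accuracy} = \frac{\text{number of correct categorizations}}{\text{total number of images}} \times 100 \tag{1}$$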

Speed is based on the participant’s reaction times on trials with correct responses.

| 2 |

RT: reaction time

e: Euler’s number (≈ 2.71828)

Speed is inversely related to participants’ reaction times: the higher the speed, the lower the reaction time. Speed was defined by the formula above, rather than by raw reaction times, to give clinicians a more intuitive, standardized score scaled between 0 and 100.

The CGN_ICA summary score is a simple combination of accuracy and speed, defined as follows:

| 3 |

Statistical analysis

Convergent validity and test-retest reliability of the CGN_ICA test are assessed with Pearson’s correlation. P-values for Pearson’s correlation are based on Student’s t distribution. Calculations were done using MathWorks’ Statistics and Machine Learning Toolbox (https://www.mathworks.com/help/stats/index.html).
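For readers without MATLAB, the t-based p-value for Pearson’s r can be reproduced in standard-library Python. This sketch uses t = r·√((n − 2)/(1 − r²)) with n − 2 degrees of freedom. It evaluates the two-sided tail with the classical closed-form series for Student’s t with integer degrees of freedom (see, e.g., Abramowitz and Stegun, chapter 26). Because the final step computes 1 − A, extremely small p-values (such as p < 10⁻⁷) lose precision. A library routine such as `scipy.stats.pearsonr` is preferable in practice. The function names here are illustrative.

```python
import math


def pearson_r(x, y):
    """Sample Pearson correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_two_sided_p(t, df):
    """Two-sided p-value P(|T| >= |t|) for Student's t with integer df >= 1.

    A = P(|T| < |t|) is computed with the closed-form finite series in
    cos(theta), where theta = atan(|t| / sqrt(df)); the result is 1 - A.
    """
    theta = math.atan(abs(t) / math.sqrt(df))
    s, c = math.sin(theta), math.cos(theta)
    if df % 2 == 1:
        total = 0.0
        if df > 1:
            term = total = c  # series cos + (2/3)cos^3 + (2*4)/(3*5)cos^5 + ...
            for k in range(1, (df - 3) // 2 + 1):
                term *= c * c * (2 * k) / (2 * k + 1)
                total += term
        a = (2.0 / math.pi) * (theta + s * total)
    else:
        term = total = 1.0  # series 1 + (1/2)cos^2 + (1*3)/(2*4)cos^4 + ...
        for k in range(1, (df - 2) // 2 + 1):
            term *= c * c * (2 * k - 1) / (2 * k)
            total += term
        a = s * total
    return max(0.0, 1.0 - a)


def pearson_test(x, y):
    """Return (r, two-sided p) using t = r * sqrt((n - 2) / (1 - r^2)), df = n - 2."""
    n = len(x)
    if n < 3:
        raise ValueError("need at least 3 paired observations")
    r = pearson_r(x, y)
    if abs(r) >= 1.0:
        return r, 0.0
    t = r * math.sqrt((n - 2) / (1.0 - r * r))
    return r, t_two_sided_p(t, n - 2)


r, p = pearson_test([1, 2, 3, 4, 5], [2, 4, 5, 4, 5])
print(f"r = {r:.3f}, p = {p:.3f}")
```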

To measure the dependence of the cognitive tests on level of education, we used explained variance. This is defined as the square of Pearson’s correlation between participants’ cognitive scores and their level of education (i.e. number of years). Statistical significance was obtained by a permutation test (10,000 permutations of participants). To formally assess statistical independence, we used a non-parametric independence test proposed by Gretton and Györfi62, based on 10,000 bootstrap resamples of participants.
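The explained-variance analysis can be sketched in NumPy as follows. The permutation test shuffles years of education across participants to build the null distribution of r². It uses an add-one correction so the p-value is never exactly zero; that correction is a common convention and an assumption here. The bootstrap standard error matches the error bars described for Fig. 4. The Gretton and Györfi independence test is not reproduced. The function names are hypothetical.

```python
import numpy as np


def explained_variance(score, education):
    """Squared Pearson correlation between test score and years of education."""
    r = np.corrcoef(score, education)[0, 1]
    return r ** 2


def permutation_p(score, education, n_perm=10_000, seed=0):
    """Permutation test: shuffle education across participants to build the null."""
    rng = np.random.default_rng(seed)
    score = np.asarray(score, dtype=float)
    education = np.asarray(education, dtype=float)
    observed = explained_variance(score, education)
    exceed = sum(
        explained_variance(score, rng.permutation(education)) >= observed
        for _ in range(n_perm)
    )
    # Add-one correction so the p-value is never exactly zero.
    return observed, (exceed + 1) / (n_perm + 1)


def bootstrap_se(score, education, n_boot=10_000, seed=0):
    """Standard error of the explained variance from resampling participants."""
    rng = np.random.default_rng(seed)
    score = np.asarray(score, dtype=float)
    education = np.asarray(education, dtype=float)
    n = len(score)
    stats = np.empty(n_boot)
    for b in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample participants with replacement
        stats[b] = explained_variance(score[idx], education[idx])
    # nan-aware in case a resample happens to have zero variance
    return np.nanstd(stats, ddof=1)
```

With several tests compared, the resulting p-values would then be Bonferroni-corrected, as described in the Fig. 4 caption.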

Finally, we used a single-factor (one-way) analysis of variance (ANOVA) to compare the average CGN_ICA scores of participants who took the CGN_ICA test every other day for two weeks. The goal was to determine whether mean CGN_ICA scores differed significantly across days.
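The one-way ANOVA F statistic is the ratio of between-day to within-day mean squares. The NumPy sketch below computes F and its degrees of freedom and allows unequal group sizes, since not every participant completed every session. With 8 days, the between-groups degrees of freedom are 7. The p-value would then come from the F distribution, for example `scipy.stats.f.sf(F, df_between, df_within)`. Alternatively, `scipy.stats.f_oneway` performs the whole test. The function name is hypothetical.

```python
import numpy as np


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a single-factor ANOVA."""
    groups = [np.asarray(g, dtype=float) for g in groups]
    all_values = np.concatenate(groups)
    grand_mean = all_values.mean()
    # Variation of day means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (g.mean() - grand_mean) ** 2 for g in groups)
    # Variation of individual scores around their own day mean.
    ss_within = sum(((g - g.mean()) ** 2).sum() for g in groups)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


print(one_way_anova([[1, 2, 3], [4, 5, 6]]))  # F = 13.5 with (1, 4) degrees of freedom
```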

Results

Convergent validity with the standard-of-care cognitive tests

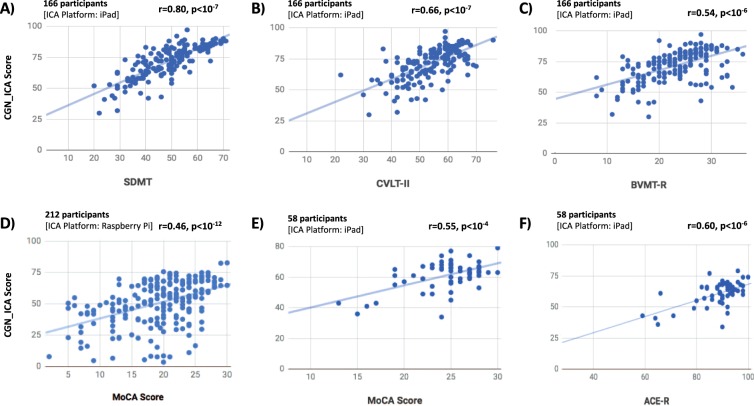

A key requirement for a clinically useful cognitive assessment test is to establish validity, i.e. a correlation with an existing, recognized neuropsychological test that is routinely used in clinical practice. Here, in three experiments (see Table 1, Experiments 1 to 3), we show that the CGN_ICA test is significantly correlated with six standard neuropsychological tests (Fig. 2 and Table 2).

Figure 2.

The CGN_ICA test score is significantly correlated with a wide range of standard cognitive tests. Participants took the CGN_ICA test along with one or more standard cognitive tests (see Table 1). Each scatter plot shows the CGN_ICA score (y axis) vs. the score on one of the standard cognitive tests (x axis). Each blue dot indicates an individual, and the lines are linear regression fits to the data in each plot. The number of participants who took the tests and the platform on which the CGN_ICA was taken are given above each plot. ‘r’ and ‘p’ in the top-right corner of each plot show the Pearson correlation between the two tests and its p-value, respectively.

Table 2.

Correlation of the CGN_ICA test scores with various domains of cognition.

| Cognitive Test | Correlation with CGN_ICA | Correlation with Accuracy | Correlation with Speed | Cognitive Domain |
|---|---|---|---|---|
| SDMT | 0.80, p < 10⁻⁷ | 0.41, p < 10⁻⁷ | 0.71, p < 10⁻⁷ | Speed of processing |
| CVLT-II | 0.66, p < 10⁻⁷ | 0.40, p < 10⁻⁶ | 0.56, p < 10⁻¹⁴ | Verbal learning |
| BVMT-R | 0.54, p < 10⁻⁶ | 0.40, p < 10⁻⁸ | 0.43, p < 10⁻⁷ | Visual memory |
| MoCA (1), RaPi | 0.46, p < 10⁻¹¹ | 0.48, p < 10⁻¹² | 0.19, p < 0.01 | General |
| MoCA (2), iPad | 0.55, p < 10⁻⁴ | 0.56, p < 10⁻⁵ | 0.19, ns | General |
| ACE-R total score | 0.60, p < 10⁻⁶ | 0.52, p < 10⁻⁴ | 0.28, p < 0.05 | Total score of 5 domains |
| ACE-R Attention | 0.27, p < 0.05 | 0.25, ns | 0.11, ns | Attention |
| ACE-R Memory | 0.47, p < 10⁻³ | 0.37, p < 0.01 | 0.26, p < 0.05 | Verbal memory |
| ACE-R Fluency | 0.45, p < 10⁻³ | 0.25, ns | 0.35, p < 0.01 | Fluency |
| ACE-R Language | 0.53, p < 10⁻⁴ | 0.62, p < 10⁻⁶ | 0.12, ns | Language |
| ACE-R Visuospatial | 0.42, p < 10⁻³ | 0.44, p < 10⁻³ | 0.12, ns | Visuospatial |
| MMSE | 0.33, p < 0.01 | 0.36, p < 0.01 | 0.08, ns | General |

This table shows the correlation of the CGN_ICA test with a wide variety of standard-of-care tests for cognitive assessment, each measuring different domains of cognition. The accuracy and speed columns show the separate contribution of each of these two components to the correlation of the CGN_ICA score with the reference cognitive tests. All correlations are Pearson correlations, each followed by its p-value; ns means not significant (p > 0.05). MoCA (1): correlations between MoCA and the RaPi implementation of the CGN_ICA test (Experiment 1). MoCA (2): correlations between MoCA and the iPad implementation of the CGN_ICA test (Experiment 2).

Given the variability in participants’ demographics, such as age, gender and level of education, a statistically significant correlation typically above 0.4 (sometimes > 0.3) with reference cognitive tests is considered within the acceptable range for convergent validity (i.e. construct validity)63–65. To give a few examples, convergent validity for ACE-R has been shown with a correlation of −0.32 with a clinical dementia scale5. For CogState (a computerized cognitive battery), convergent validity has been shown with correlations ranging from 0.11 to 0.53 with reference pen-and-paper cognitive tests64,66,67. Similarly, cerebrospinal fluid (CSF) and blood biomarkers have correlations in the range of 0.4 to 0.6 with standard cognitive tests such as MoCA68,69.

We show that the CGN_ICA score is significantly correlated with MoCA on two different hardware platforms (RaPi and iPad). The correlation between CGN_ICA and MoCA ranges from 0.46 to 0.55 (Fig. 2D and E), which is within the range for establishing construct validity.

The CGN_ICA test had a slightly higher correlation with ACE-R (r = 0.60, p < 10⁻⁶) than with MoCA. ACE-R provides a more comprehensive assessment of cognitive abilities and takes longer to administer and score (~20 minutes). It comprises five subsections, assessing attention, memory, fluency, language and visuospatial abilities. The correlations of CGN_ICA with ACE-R (Fig. 2F) and its subsections are shown in Table 2. Participants’ MMSE scores can also be extracted from the ACE-R test (see Table 2). MoCA and ACE-R are typically used to screen for MCI and dementia in older adults.

MMSE has been shown to be less sensitive than MoCA or ACE-R in detecting cognitive impairment4,5. The lower correlation of CGN_ICA with MMSE (r = 0.33) and its higher correlations with MoCA and ACE-R (r = 0.55 and 0.60) are therefore noteworthy. They indicate that CGN_ICA agrees more closely with the more sensitive tests.

In addition, we compared CGN_ICA against another set of tests, SDMT, BVMT-R and CVLT-II (Fig. 2A–C and Table 2), which are more often used to assess cognitive performance in younger individuals. For example, all three tests are included in larger batteries that assess cognitive impairment in individuals with MS. These include the ‘minimal assessment of cognitive function in MS’ (MACFIMS) and the ‘brief international cognitive assessment for MS’ (BICAMS).

CGN_ICA had the highest correlation with SDMT (r = 0.80, p < 10⁻⁷), a pen-and-paper test that mostly measures speed of information processing. CVLT-II, measuring verbal learning, and BVMT-R, measuring visual memory, had correlations of 0.66 and 0.54 with the CGN_ICA score, respectively. SDMT has been shown to be more sensitive than CVLT-II and BVMT-R in detecting cognitive impairment in patients with MS59,70. The higher correlation of CGN_ICA with SDMT is therefore noteworthy.

It is worth noting that a perfect correlation (r = 1) between the CGN_ICA test and any of these cognitive tests is not desirable, as none of these standard tests is considered the ground truth (or gold standard) for detecting cognitive impairment.

Most of the reference cognitive tests (Table 2) correlated more strongly with the accuracy component of the CGN_ICA test. SDMT and CVLT-II were exceptions: both correlated significantly more strongly with speed than with accuracy (p < 0.001; bootstrap resampling of subjects).
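The speed-versus-accuracy comparison relies on bootstrap resampling of subjects. The exact procedure is not spelled out, so the following NumPy sketch shows one plausible version. Each subject’s reference score, speed and accuracy are resampled together. The difference between the two correlations is recomputed for each resample, and the one-sided p-value is the (add-one corrected) fraction of resamples in which the speed correlation does not exceed the accuracy correlation. The function name and the add-one correction are assumptions.

```python
import numpy as np


def bootstrap_corr_difference(ref, speed, accuracy, n_boot=10_000, seed=0):
    """One-sided bootstrap test of corr(ref, speed) > corr(ref, accuracy).

    Subjects are resampled with replacement, keeping each subject's three
    scores together so the dependence between the correlations is preserved.
    Returns (observed difference, p-value).
    """
    rng = np.random.default_rng(seed)
    ref, speed, accuracy = (np.asarray(a, dtype=float) for a in (ref, speed, accuracy))
    n = len(ref)
    diffs = np.empty(n_boot)
    for b in range(n_boot):
        idx = rng.integers(0, n, size=n)
        r_speed = np.corrcoef(ref[idx], speed[idx])[0, 1]
        r_acc = np.corrcoef(ref[idx], accuracy[idx])[0, 1]
        diffs[b] = r_speed - r_acc
    observed = np.corrcoef(ref, speed)[0, 1] - np.corrcoef(ref, accuracy)[0, 1]
    p = (np.sum(diffs <= 0) + 1) / (n_boot + 1)
    return observed, p
```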

Each reference cognitive test used in this study (shown in Table 2) measured different domains of cognition. The CGN_ICA score had significant correlations with all of these tests, suggesting that it can be effectively used as one integrated test to provide insights about different cognitive domains (e.g. speed of processing, memory, verbal learning, attention, and fluency).

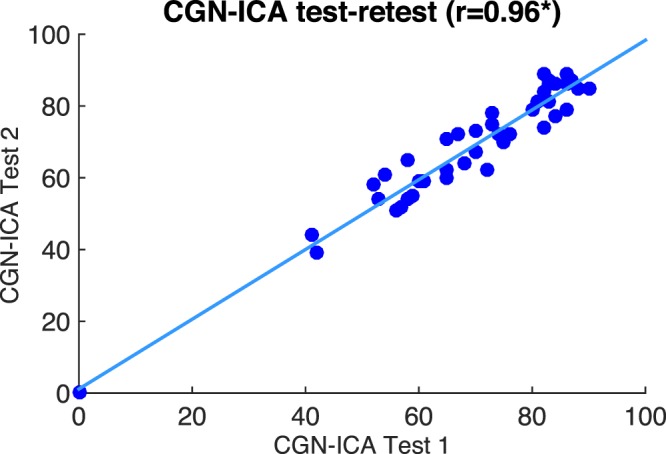

CGN_ICA shows excellent test-retest reliability

One of the most critical psychometric criteria for the applicability of a test is its reliability, i.e. the consistency of scores when the same instrument is used multiple times under similar circumstances.

To assess the reliability of the CGN_ICA test, a subgroup of 44 participants from Experiment 3 (see Table 1) took the CGN_ICA test a second time after about five weeks (±15 days). Test-retest reliability was measured by computing the Pearson correlation between the two CGN_ICA scores [Fig. 3; Pearson’s r = 0.96 (p < 10⁻⁷)]. Test-retest correlations are considered adequate if r > 0.70 and good if r > 0.80 (Anastasi, 1988).

Figure 3.

CGN_ICA score, test-retest scatter plot. Each blue dot shows the CGN_ICA scores of an individual on two different days. The blue line indicates the linear fit to the test-retest data [Pearson’s r = 0.96 (*p < 10⁻⁷)].

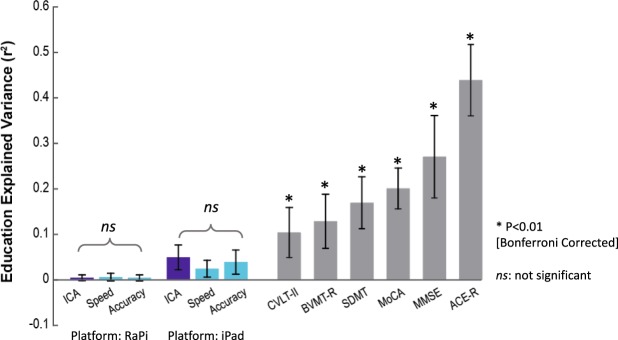

How much of the CGN_ICA score is explained by education?

People with higher levels of education tend to score better on standard pen-and-paper tests than age-matched peers who fall into the same cognitive category. This makes the level of education a confounding factor in cognitive assessment.

We were interested in how much of the CGN_ICA score is explained by education in comparison with other cognitive tests. To this end, we computed Pearson’s correlation between participants’ test scores and their level of education (in years). Explained variance is defined as the square of this correlation and indicates how much of the variance in the test scores can be explained by education (Fig. 4).

Figure 4.

Dependence of standard-of-care cognitive tests on education. Bars indicate how much of the variance in each test’s scores is explained by education [explained variance = (Pearson’s r)²]. Stars indicate statistical significance, i.e. that a significant proportion of the variance in the test score is explained by education. Statistical significance was obtained by a permutation test (10,000 permutations). Error bars are standard errors of the mean (SEM) obtained by 10,000 bootstrap resamples of subjects. P-values smaller than 0.01 (after Bonferroni correction for multiple comparisons) are considered significant; ‘ns’ means not significant. The results for CGN_ICA (RaPi platform) are based on 212 subjects (Experiment 1 in Table 1). The results for CGN_ICA (iPad platform) are based on the combined data from Experiments 2 and 3 (224 participants in total), all of whom took the CGN_ICA on an iPad. The results for MoCA are based on the combined data from Experiments 1 and 2, in both of which participants took MoCA (270 participants in total). ACE-R and MMSE results are based on the data from Experiment 2. Results for SDMT, BVMT-R and CVLT-II are based on the data from Experiment 3.

We find that a significant proportion of the variance in all the standard cognitive assessment tests is explained by education, whereas the CGN_ICA test does not show a significant relationship with education (Fig. 4). In Fig. 4, we report the CGN_ICA results separately for the Raspberry Pi platform (Experiment 1: 212 participants) and the iPad platform (Experiments 2 and 3: 58 + 166 = 224 participants).

Furthermore, we formally tested whether the CGN_ICA score is independent of education using a non-parametric test of independence62. In Experiment 1 (i.e. CGN_ICA taken on the RaPi), the test did not reject the null hypothesis of independence, consistent with the CGN_ICA score being independent of education (based on 10,000 bootstrap resamples of subjects).

The CGN_ICA test has no learning bias

One problem with many existing cognitive tests is that they have a learning bias: participants’ scores improve with repeated exposure to the test as a result of learning the task, without any change in their cognitive ability. A learning bias reduces the reliability of a test that is used repeatedly, for example when monitoring performance over time. An ideal test for early diagnosis of cognitive disorders and for monitoring cognitive performance would show no learning bias.

The currently available pen-and-paper tests, such as MoCA, MMSE and Addenbrooke’s Cognitive Examination (ACE), are not appropriate for micro-monitoring of cognitive performance because if identical questions are repeated, healthy participants and those with mild impairment can easily learn the test and improve upon their previous scores – as a result of learning rather than any improvement in their cognitive performance.

To investigate whether CGN_ICA might be appropriate for such micro-monitoring, we recruited 12 young individuals with a high capacity for learning (university students aged 20 to 36). We asked them to take the test every other day for two weeks (8 days in total). The CGN_ICA was delivered remotely via a web platform.

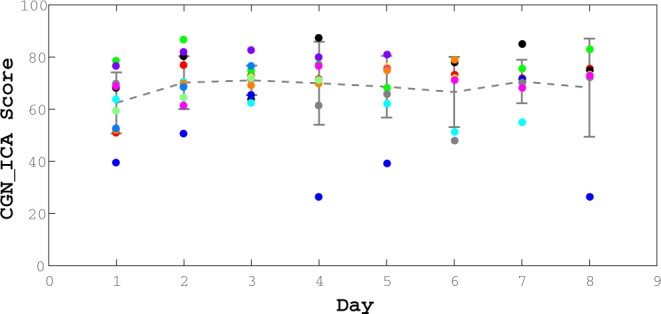

The test data indicate that, even in participants with a high capacity to learn, no learning bias was detected (Fig. 5). The CGN_ICA score did not increase monotonically, and mean CGN_ICA scores did not differ significantly across days (ANOVA, F(7) = 0.62, p = 0.73).

Figure 5.

No significant effect of learning with repeated exposure to the CGN_ICA test. We find no learning bias when the test is taken multiple times. Twelve healthy participants (age range 20 to 36) took the CGN_ICA test every other day for over two weeks (ANOVA, F(7) = 0.62, p = 0.73). Of these 12 participants, 7 completed all sessions (8 days), and the rest took the test on at least the first three days.

Discussion

Early diagnosis is a major focus of current scientific research71,72. There is currently no available cognitive screening tool that can detect early phenotypical changes prior to the emergence of memory problems and other symptoms of dementia. The vast majority of cognitive tests rely on the patient's ability to read and write, and more educated individuals can often "second-guess" them. All of these standard tests require a clinician or other health-care professional to administer them, which adds considerable cost to the procedure.

We demonstrated that the combination of speed and accuracy of visual processing in a rapid visual categorization task can be used as a reliable measure of an individual's cognitive performance. The proposed visual test has significant advantages over conventional cognitive tests because of its efficient administration, shorter duration, automatic scoring, independence from language and education, potential for integration with medical records or research databases, and capacity for micro-monitoring of cognitive performance given the absence of a learning bias. We therefore suggest the CGN_ICA as a practical tool for routine screening of cognitive performance.

Potential use of CGN_ICA for early detection of dementia

Because of the high compensatory potential of the brain, symptoms of chronic neurodegenerative diseases, such as Alzheimer's disease (AD), Parkinson's disease (PD), Huntington's disease (HD), and vascular and frontotemporal (FTD) dementias, appear 10–20 years after the onset of the pathology9. Late stages of these disorders are characterized by massive, irreversible neuronal death. Therefore, any therapeutic treatment given late in the course of the disease will most likely fail to alter disease progression in a meaningful way. This is illustrated by recent failures of anti-AD therapies in late-stage clinical trials2,73, and further emphasizes the importance of developing screening tests capable of detecting such diseases in their early, asymptomatic stage.

The CGN_ICA aims at early detection of cognitive dysfunction by targeting brain functions that are affected in the initial stages of neurodegenerative disorders (e.g. dementia), specifically before the onset of memory symptoms. Given the decade-long lag between tissue damage and memory deficits in dementia, the CGN_ICA instead examines the visuo-motor pathway. Studies over the past 20 years have revealed that all parts of the visual system may be affected in Alzheimer's disease, including the optic nerve, retina, lateral geniculate nucleus (LGN) and visual cortex19. In particular, in the early stages of the disease, brain areas associated with the visuo-motor pathway are affected, beginning with the retina26–28,30, the visual cortex25,29,30 and the motor cortex21,31. Together, these areas are therefore more effective places to look for the impact of early-stage neurodegeneration than memory alone. The CGN_ICA focuses on cognitive functions such as the speed and accuracy of processing visual information, which engage a large volume of cortex and have been shown to predict people's cognitive performance33–35; monitoring the performance of these areas together can thus be a reliable early indicator of disease onset.

Suitability for remote and frequent cognitive assessment

Remote monitoring and home-based online assessments are beneficial for patients, clinicians and researchers. Home-based assessment offers patients a more comfortable, low-stress setting. In addition, researchers and clinicians gain a time-efficient and convenient assessment instrument that enables valid and reliable evaluation of individuals' cognitive performance. Furthermore, online assessment allows researchers to collect data from a large number of participants in a short period.

Because the CGN_ICA test is self-administered and does not suffer from a learning bias, it can be used remotely and frequently to track changes in individuals' cognitive performance over time. This makes the test even more useful for early diagnosis, since it can be used longitudinally in a design where individuals are compared against their own baseline.
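A within-subject baseline comparison of the kind described above could look like the following minimal sketch. Everything here is hypothetical and illustrative: the function names `baseline_z` and `flag_decline`, the example scores, and the 2.0 SD cut-off are assumptions, not part of the CGN_ICA and not a validated decision rule. The approach expresses a new score as a z-score relative to the mean and sample standard deviation of the same person's earlier sessions.

```python
from statistics import mean, stdev


def baseline_z(baseline_scores, new_score):
    """z-score of a new test score relative to the individual's own baseline sessions."""
    if len(baseline_scores) < 2:
        raise ValueError("need at least two baseline sessions to estimate variability")
    mu = mean(baseline_scores)
    sd = stdev(baseline_scores)  # sample standard deviation across baseline sessions
    if sd == 0:
        raise ValueError("baseline scores have zero variance")
    return (new_score - mu) / sd


def flag_decline(baseline_scores, new_score, threshold=2.0):
    """Flag a new score more than `threshold` SDs below the personal baseline.

    The 2.0 default is a hypothetical, illustrative cut-off, not a validated one.
    """
    return baseline_z(baseline_scores, new_score) < -threshold


# Hypothetical scores (not study data): baseline mean 72, SD 2.
print(baseline_z([70, 72, 74], 66))    # -3.0
print(flag_decline([70, 72, 74], 71))  # False
```

This design only makes sense when repeated testing does not systematically raise scores; with a learning bias, the baseline would drift upward and genuine declines could be masked.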

Conclusion and Future Directions

The CGN_ICA is designed to be an extremely easy-to-use, versatile and practical measurement tool for studies of dementias and other conditions that involve cognitive function, as it allows simple, sensitive and repeatable collection of an overall score of a subject's cognitive ability. The CGN_ICA platform is being further developed to employ artificial intelligence (AI) to improve its predictive power, using patterns in participants' reaction times. The AI platform will allow accurate classification of participants as cognitively healthy or cognitively impaired by comparing their CGN_ICA test profile with a large dataset of individuals with validated clinical status, from which the AI platform has "learned". The AI engine will be able to improve its accuracy over time by learning from new data points incorporated into its training datasets.

Acknowledgements

We thank Mark Phillips and Giulia Paggiola for reviewing the paper prior to submission. We also thank Jonathan El-Sharkawy, Johannes Bausch, and Jean Maillard for help with developing the software needed for data acquisition. We are also grateful to Mohammad Arbabi for his help with subject recruitment.

Author Contributions

S.K.R. wrote the manuscript. C.K. gave comments on the manuscript. S.K.R., S.H., M.S., H.M., M.K., S.M.N., and E.S. helped with data acquisition. S.K.R., S.H., S.M.N. and C.K. helped with devising the protocol for the experiments. S.K.R., M.S., H.M., M.K. analyzed the data.

Data Availability Statement

The data generated during this study are included in this published article. Commercially insensitive raw data can be made available upon reasonable request from the corresponding author.

Competing Interests

Dr. Khaligh-Razavi, Dr. Habibi, and Dr. Kalafatis serve as directors at Cognetivity Ltd. The other authors declare no competing interests.

Footnotes

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. 2018 Alzheimer's disease facts and figures. Alzheimers Dement. J. Alzheimers Assoc. 2018;14:367–429.

- 2. Sperling RA, Jack CR, Aisen PS. Testing the Right Target and Right Drug at the Right Stage. Sci. Transl. Med. 2011;3:111cm33. doi: 10.1126/scitranslmed.3002609.

- 3. Folstein MF, Folstein SE, McHugh PR. "Mini-mental state": a practical method for grading the cognitive state of patients for the clinician. J. Psychiatr. Res. 1975;12:189–198. doi: 10.1016/0022-3956(75)90026-6.

- 4. Nasreddine ZS, et al. The Montreal Cognitive Assessment, MoCA: a brief screening tool for mild cognitive impairment. J. Am. Geriatr. Soc. 2005;53:695–699. doi: 10.1111/j.1532-5415.2005.53221.x.

- 5. Mioshi E, Dawson K, Mitchell J, Arnold R, Hodges JR. The Addenbrooke's Cognitive Examination Revised (ACE-R): a brief cognitive test battery for dementia screening. Int. J. Geriatr. Psychiatry. 2006;21:1078–1085. doi: 10.1002/gps.1610.

- 6. Pasquier F. Early diagnosis of dementia: neuropsychology. J. Neurol. 1999;246:6–15. doi: 10.1007/s004150050299.

- 7. Leifer BP. Early diagnosis of Alzheimer's disease: clinical and economic benefits. J. Am. Geriatr. Soc. 2003;51.

- 8. Prince M, Bryce R, Ferri C. World Alzheimer Report 2011: The benefits of early diagnosis and intervention. Alzheimer's Disease International; 2011.

- 9. Sheinerman KS, Umansky SR. Circulating cell-free microRNA as biomarkers for screening, diagnosis and monitoring of neurodegenerative diseases and other neurologic pathologies. Front. Cell. Neurosci. 2013;7.

- 10. Dubois B, Padovani A, Scheltens P, Rossi A, Dell'Agnello G. Timely diagnosis for Alzheimer's disease: a literature review on benefits and challenges. J. Alzheimers Dis. 2016;49:617–631. doi: 10.3233/JAD-150692.

- 11. Katz B, Rimmer S. Ophthalmologic manifestations of Alzheimer's disease. Surv. Ophthalmol. 1989;34:31–43. doi: 10.1016/0039-6257(89)90127-6.

- 12. Mendez MF, Tomsak RL, Remler B. Disorders of the visual system in Alzheimer's disease. J. Clin. Neuroophthalmol. 1990;10:62–69.

- 13. Holroyd S, Shepherd ML. Alzheimer's disease: a review for the ophthalmologist. Surv. Ophthalmol. 2001;45:516–524. doi: 10.1016/S0039-6257(01)00193-X.

- 14. Jackson GR, Owsley C. Visual dysfunction, neurodegenerative diseases, and aging. Neurol. Clin. 2003;21:709–728. doi: 10.1016/S0733-8619(02)00107-X.

- 15. Kirby E, Bandelow S, Hogervorst E. Visual impairment in Alzheimer's disease: a critical review. J. Alzheimers Dis. 2010;21:15–34. doi: 10.3233/JAD-2010-080785.

- 16. Valenti DA. Alzheimer's disease: visual system review. Optom.-J. Am. Optom. Assoc. 2010;81:12–21. doi: 10.1016/j.optm.2009.04.101.

- 17. Bell MA, Ball MJ. Neuritic plaques and vessels of visual cortex in aging and Alzheimer's dementia. Neurobiol. Aging. 1990;11:359–370. doi: 10.1016/0197-4580(90)90001-G.

- 18. Iseri PK, Altinas Ö, Tokay T, Yüksel N. Relationship between cognitive impairment and retinal morphological and visual functional abnormalities in Alzheimer disease. J. Neuroophthalmol. 2006;26:18–24. doi: 10.1097/01.wno.0000204645.56873.26.

- 19. Tzekov R, Mullan M. Vision function abnormalities in Alzheimer disease. Surv. Ophthalmol. 2014;59:414–433. doi: 10.1016/j.survophthal.2013.10.002.

- 20. Cronin-Golomb A, Corkin S, Growdon JH. Visual dysfunction predicts cognitive deficits in Alzheimer's disease. Optom. Vis. Sci. Off. Publ. Am. Acad. Optom. 1995;72:168–176. doi: 10.1097/00006324-199503000-00004.

- 21. Ferreri F, et al. Motor cortex excitability in Alzheimer's disease: a transcranial magnetic stimulation follow-up study. Neurosci. Lett. 2011;492:94–98. doi: 10.1016/j.neulet.2011.01.064.

- 22. Bak TH. Why patients with dementia need a motor examination. BMJ Publishing Group Ltd; 2016.

- 23. Pirozzolo FJ, Hansch EC. Oculomotor reaction time in dementia reflects degree of cerebral dysfunction. Science. 1981;214:349–351. doi: 10.1126/science.7280699.

- 24. Hutton JT, Johnston CW, Shapiro I, Pirozzolo FJ. Oculomotor programming disturbances in the dementia syndrome. Percept. Mot. Skills. 1979;49:312–314. doi: 10.2466/pms.1979.49.1.312.

- 25. Armstrong R. The visual cortex in Alzheimer disease: laminar distribution of the pathological changes in visual areas V1 and V2. In: Harris J, Scott J, editors. Visual cortex. Nova Science; 2012. pp. 99–128.

- 26. Paquet C, et al. Abnormal retinal thickness in patients with mild cognitive impairment and Alzheimer's disease. Neurosci. Lett. 2007;420:97–99. doi: 10.1016/j.neulet.2007.02.090.

- 27. Berisha F, Feke GT, Trempe CL, McMeel JW, Schepens CL. Retinal Abnormalities in Early Alzheimer's Disease. Invest. Ophthalmol. Vis. Sci. 2007;48:2285–2289. doi: 10.1167/iovs.06-1029.

- 28. Lu Y, et al. Retinal nerve fiber layer structure abnormalities in early Alzheimer's disease: Evidence in optical coherence tomography. Neurosci. Lett. 2010;480:69–72. doi: 10.1016/j.neulet.2010.06.006.

- 29. Brewer AA, Barton B. Visual cortex in aging and Alzheimer's disease: Changes in visual field maps and population receptive fields. Front. Psychol. 2012;1.

- 30. Chang LYL, et al. Alzheimer's disease in the human eye. Clinical tests that identify ocular and visual information processing deficit as biomarkers. Alzheimers Dement. 2013. doi: 10.1016/j.jalz.2013.06.004.

- 31. Bak TH. Why patients with dementia need a motor examination. J. Neurol. Neurosurg. Psychiatry. 2016. doi: 10.1136/jnnp-2016-313466.

- 32. Khaligh-Razavi S-M, Habibi S. System for assessing mental health disorder. UK Intellect. Prop. Off. 2013.

- 33. Mirzaei A, Khaligh-Razavi S-M, Ghodrati M, Zabbah S, Ebrahimpour R. Predicting the human reaction time based on natural image statistics in a rapid categorization task. Vision Res. 2013;81:36–44. doi: 10.1016/j.visres.2013.02.003.

- 34. Zhang R, et al. Novel object recognition as a facile behavior test for evaluating drug effects in AβPP/PS1 Alzheimer's disease mouse model. J. Alzheimers Dis. 2012;31:801–812. doi: 10.3233/JAD-2012-120151.

- 35. Mudar RA, et al. Effects of age on cognitive control during semantic categorization. Behav. Brain Res. 2015;287:285–293. doi: 10.1016/j.bbr.2015.03.042.

- 36. Ruiz-Rizzo AL, et al. Simultaneous object perception deficits are related to reduced visual processing speed in amnestic mild cognitive impairment. Neurobiol. Aging. 2017;55:132–142. doi: 10.1016/j.neurobiolaging.2017.03.029.

- 37. Vanrullen R, Thorpe SJ. The time course of visual processing: from early perception to decision-making. J. Cogn. Neurosci. 2001;13:454–461. doi: 10.1162/08989290152001880.

- 38. Bacon-Macé N, Macé MJM, Fabre-Thorpe M, Thorpe SJ. The time course of visual processing: Backward masking and natural scene categorisation. Vision Res. 2005;45:1459–1469. doi: 10.1016/j.visres.2005.01.004.

- 39. Kiani R, Esteky H, Mirpour K, Tanaka K. Object Category Structure in Response Patterns of Neuronal Population in Monkey Inferior Temporal Cortex. J. Neurophysiol. 2007;97:4296–4309. doi: 10.1152/jn.00024.2007.

- 40. Kriegeskorte N, et al. Matching Categorical Object Representations in Inferior Temporal Cortex of Man and Monkey. Neuron. 2008;60:1126–1141. doi: 10.1016/j.neuron.2008.10.043.

- 41. Connolly AC, et al. The representation of biological classes in the human brain. J. Neurosci. 2012;32:2608–2618. doi: 10.1523/JNEUROSCI.5547-11.2012.

- 42. Khaligh-Razavi S-M, Kriegeskorte N. Deep Supervised, but Not Unsupervised, Models May Explain IT Cortical Representation. PLoS Comput. Biol. 2014;10:e1003915. doi: 10.1371/journal.pcbi.1003915.

- 43. Thorpe SJ. The Speed of Categorization in the Human Visual System. Neuron. 2009;62:168–170. doi: 10.1016/j.neuron.2009.04.012.

- 44. Naselaris T, Stansbury DE, Gallant JL. Cortical representation of animate and inanimate objects in complex natural scenes. J. Physiol.-Paris. 2012;106:239–249. doi: 10.1016/j.jphysparis.2012.02.001.

- 45. Khaligh-Razavi S-M, Henriksson L, Kay K, Kriegeskorte N. Fixed versus mixed RSA: Explaining visual representations by fixed and mixed feature sets from shallow and deep computational models. J. Math. Psychol. 2016.

- 46. Liu H, Agam Y, Madsen JR, Kreiman G. Timing, timing, timing: fast decoding of object information from intracranial field potentials in human visual cortex. Neuron. 2009;62:281–290. doi: 10.1016/j.neuron.2009.02.025.

- 47. Carlson T, Tovar DA, Alink A, Kriegeskorte N. Representational dynamics of object vision: The first 1000 ms. J. Vis. 2013;13:1. doi: 10.1167/13.10.1.

- 48. Cichy RM, Pantazis D, Oliva A. Resolving human object recognition in space and time. Nat. Neurosci. 2014;17:455–462. doi: 10.1038/nn.3635.

- 49. Lamme VAF, Roelfsema PR. The distinct modes of vision offered by feedforward and recurrent processing. Trends Neurosci. 2000;23:571–579. doi: 10.1016/S0166-2236(00)01657-X.

- 50. Lamme VAF, Zipser K, Spekreijse H. Masking Interrupts Figure-Ground Signals in V1. J. Cogn. Neurosci. 2002;14:1044–1053. doi: 10.1162/089892902320474490.

- 51. Breitmeyer BG, Ogmen H. Recent models and findings in visual backward masking: A comparison, review, and update. Percept. Psychophys. 2000;62:1572–1595. doi: 10.3758/BF03212157.

- 52. Fahrenfort JJ, Scholte HS, Lamme VAF. Masking Disrupts Reentrant Processing in Human Visual Cortex. J. Cogn. Neurosci. 2007;19:1488–1497. doi: 10.1162/jocn.2007.19.9.1488.

- 53. Rajaei K, Mohsenzadeh Y, Ebrahimpour R, Khaligh-Razavi S-M. Beyond Core Object Recognition: Recurrent processes account for object recognition under occlusion. bioRxiv. 2018:302034.

- 54. Mathuranath PS, Nestor PJ, Berrios GE, Rakowicz W, Hodges JR. A brief cognitive test battery to differentiate Alzheimer's disease and frontotemporal dementia. Neurology. 2000;55:1613–1620. doi: 10.1212/01.wnl.0000434309.85312.19.

- 55. Hodges JR, Larner AJ. Addenbrooke's Cognitive Examinations: ACE, ACE-R, ACE-III, ACEapp, and M-ACE. In: Cognitive Screening Instruments. Springer; 2017. pp. 109–137.

- 56. Smith A. Symbol digit modalities test. Western Psychological Services, Los Angeles, CA; 1982.

- 57. Delis DC, Kramer JH, Kaplan E, Ober BA. CVLT-II: California verbal learning test: adult version. Psychological Corporation; 2000.

- 58. Stegen S, et al. Validity of the California Verbal Learning Test–II in multiple sclerosis. Clin. Neuropsychol. 2010;24:189–202. doi: 10.1080/13854040903266910.

- 59. Benedict RH, et al. Brief International Cognitive Assessment for MS (BICAMS): international standards for validation. BMC Neurol. 2012;12:55. doi: 10.1186/1471-2377-12-55.

- 60. Benedict RH, Schretlen D, Groninger L, Dobraski M, Shpritz B. Revision of the Brief Visuospatial Memory Test: Studies of normal performance, reliability, and validity. Psychol. Assess. 1996;8:145. doi: 10.1037/1040-3590.8.2.145.

- 61. Benedict RH. Brief visuospatial memory test–revised: professional manual. PAR; 1997.

- 62. Gretton A, Györfi L. Nonparametric independence tests: Space partitioning and kernel approaches. In: International Conference on Algorithmic Learning Theory. Springer; 2008. pp. 183–198.

- 63. Duffy CJ. De novo classification request for Cognivue. Cognivue submission number: DEN130033; date of de novo: June 24, 2013. 2013;25.

- 64. Hammers D, et al. Validity of a brief computerized cognitive screening test in dementia. J. Geriatr. Psychiatry Neurol. 2012;25:89–99. doi: 10.1177/0891988712447894.

- 65. Lam B, et al. Criterion and Convergent Validity of the Montreal Cognitive Assessment with Screening and Standardized Neuropsychological Testing. J. Am. Geriatr. Soc. 2013;61:2181–2185. doi: 10.1111/jgs.12541.

- 66. Charvet LE, Shaw M, Frontario A, Langdon D, Krupp LB. Cognitive impairment in pediatric-onset multiple sclerosis is detected by the Brief International Cognitive Assessment for Multiple Sclerosis and computerized cognitive testing. Mult. Scler. J. 2017:1352458517701588. doi: 10.1177/1352458517701588.

- 67. Mielke MM, et al. Performance of the CogState computerized battery in the Mayo Clinic Study on Aging. Alzheimers Dement. J. Alzheimers Assoc. 2015. doi: 10.1016/j.jalz.2015.01.008.

- 68. Yu S, et al. Potential biomarkers relating pathological proteins, neuroinflammatory factors and free radicals in PD patients with cognitive impairment: a cross-sectional study. BMC Neurol. 2014;14:113. doi: 10.1186/1471-2377-14-113.

- 69. Meng Y, et al. A correlativity study of plasma APL1β28 and clusterin levels with MMSE/MoCA/CASI in aMCI patients. Sci. Rep. 2015;5.

- 70. Langdon DW, et al. Recommendations for a brief international cognitive assessment for multiple sclerosis (BICAMS). Mult. Scler. J. 2012;18:891–898. doi: 10.1177/1352458511431076.

- 71. Robinson L, Tang E, Taylor J-P. Dementia: timely diagnosis and early intervention. BMJ. 2015;350:h3029. doi: 10.1136/bmj.h3029.

- 72. Nordberg A. Dementia in 2014: Towards early diagnosis in Alzheimer disease. Nat. Rev. Neurol. 2015;11:69. doi: 10.1038/nrneurol.2014.257.

- 73. Pillai JA, Cummings JL. Clinical trials in predementia stages of Alzheimer disease. Med. Clin. North Am. 2013;97:439–457. doi: 10.1016/j.mcna.2013.01.002.
