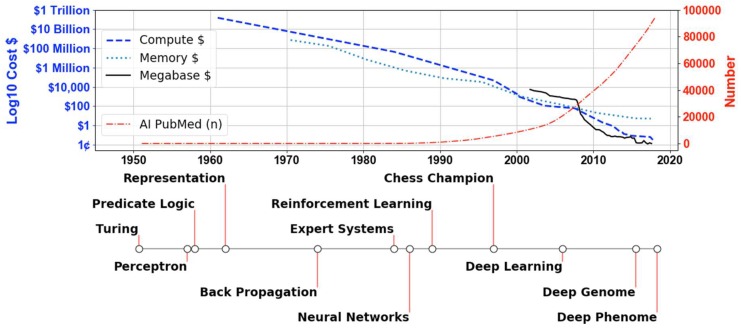

Figure 1.

The cost of technology in 2017 US dollars (log10 scale) is plotted against the left axis, and the cumulative number (n) of artificial intelligence (AI) publications in PubMed is plotted against the right axis, over time up to and including 2017. The costs of three technologies are compared: Compute, Memory and Megabase. Compute is the cost of one gigaflops of computing power (one billion floating-point operations per second), Memory is the cost of one gigabyte of random access memory, and Megabase is the cost per megabase sequenced. The cumulative number of AI-related PubMed publications was calculated by running an identical script for each year starting in 1950. The bottom timeline shows events in the history of AI, beginning with Turing's 1950 publication of "Computing Machinery and Intelligence" and ending with deep learning applied to biomedical phenomic data in 2018 (Supplementary File).