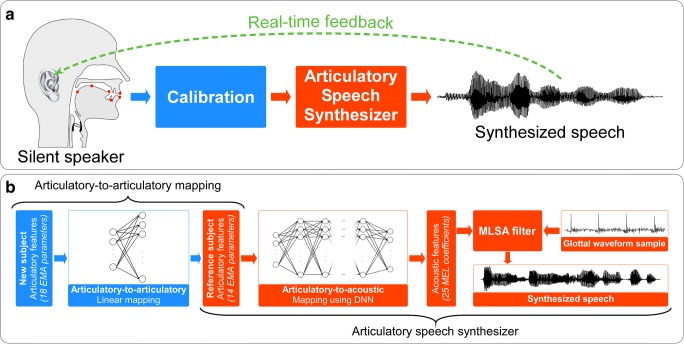

Fig. 13.

Strategy proposed by Bocquelet et al. [87] to synthesize speech from articulatory representations in the brain. (A) Overall flow of the training approach, in which a silent speaker synthesizes speech with an articulatory speech synthesizer calibrated to the anatomy of the speaker and based on a real-time feedback loop. (B) Specific steps of the articulatory-to-acoustic inversion approach, in which articulatory features are adjusted to match a reference speaker and then passed through a deep neural network to obtain acoustic features that can be excited by a Mel Log Spectrum Approximation (MLSA) filter to synthesize speech [87]. In the original study, articulatory parameters derived from electromagnetic articulography (EMA) recordings were mapped to the intended speech waveform [87]. However, the authors suggest that in a BCI application, the EMA parameters could be inferred from neural activity and then mapped to the intended speech waveform using the pretrained ground-truth model [87]. Figure reused with permission from Ref. [87].