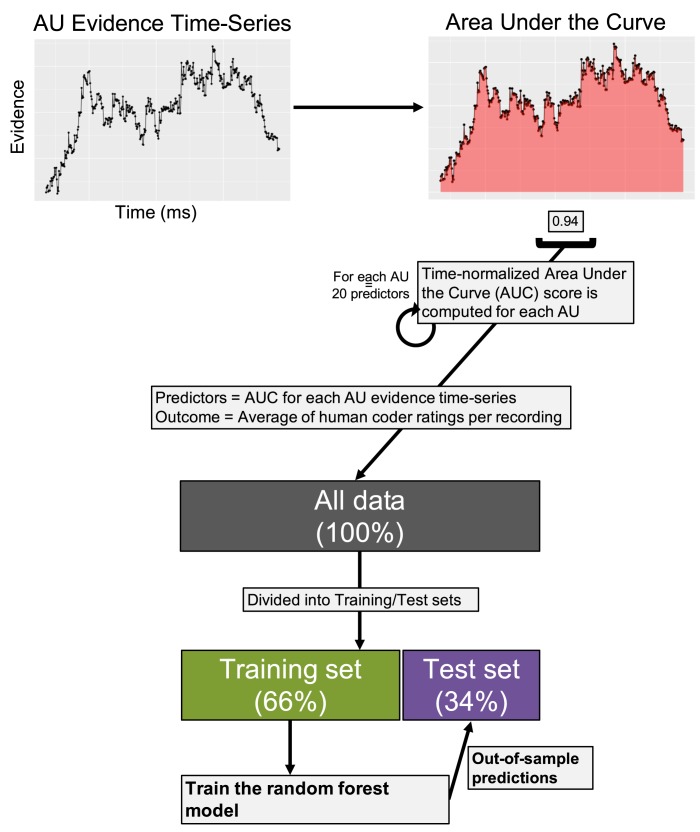

Fig 2. Machine learning procedure.

The goal of our first analysis was to determine whether CVML could perform similarly to humans in rating facial expressions of emotion. For each AU evidence time-series, we computed the normalized Area Under the Curve (AUC), i.e., the AUC divided by the total time that FACET detected a face, which captures the probability that a given AU is present over time. All 20 AUC values were entered as predictors into a random forest (RF) model to predict the average coder rating for each recording. To test how similar the model ratings were to human ratings, we separated the data into a training set (3,060 recordings) and a test set (1,588 recordings). We fit the RF to the training set and made predictions on the unseen test set. Model performance was assessed by computing the Pearson and intraclass correlations between computer- and human-generated ratings in the test set.
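As an illustration of the feature and evaluation steps described above, the following is a minimal sketch rather than the code used in the study. Several details are assumptions: frames where no face was detected are represented as `None` and skipped, the area is computed with the trapezoidal rule, and the intraclass correlation is the two-way consistency form for single ratings, ICC(3,1), which the text does not specify. The function names are hypothetical, and the RF fitting step is not shown.

```python
from math import sqrt


def normalized_auc(times, evidence):
    """Trapezoidal area under one AU evidence series, divided by the
    time a face was detected. Assumption: missed frames are None."""
    area = 0.0
    detected = 0.0
    for i in range(1, len(times)):
        e0, e1 = evidence[i - 1], evidence[i]
        if e0 is None or e1 is None:
            continue  # skip intervals that touch a frame with no face
        dt = times[i] - times[i - 1]
        area += 0.5 * (e0 + e1) * dt
        detected += dt
    if detected == 0:
        raise ValueError("no interval with a detected face")
    return area / detected


def pearson_r(x, y):
    """Pearson correlation between two equal-length rating lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def icc_consistency(x, y):
    """ICC(3,1) for two raters (here: model and human ratings).
    Assumption: this ICC form is chosen for illustration only."""
    n, k = len(x), 2
    grand = (sum(x) + sum(y)) / (n * k)
    row_means = [(a + b) / k for a, b in zip(x, y)]
    col_means = [sum(x) / n, sum(y) / n]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((v - grand) ** 2 for v in list(x) + list(y))
    ms_rows = ss_rows / (n - 1)
    ms_err = (ss_total - ss_rows - ss_cols) / ((n - 1) * (k - 1))
    return (ms_rows - ms_err) / (ms_rows + (k - 1) * ms_err)


# Toy example with made-up values (not data from the study).
auc = normalized_auc([0, 1, 2, 3], [0.0, 1.0, 1.0, 0.0])
model_ratings = [1, 2, 3, 4]
human_ratings = [2, 1, 4, 3]
r = pearson_r(model_ratings, human_ratings)
icc = icc_consistency(model_ratings, human_ratings)
```

In a full pipeline, `normalized_auc` would be applied to each of the 20 AU series per recording to build the predictor matrix, and the two correlation functions would be applied to the model predictions and average coder ratings on the test set.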