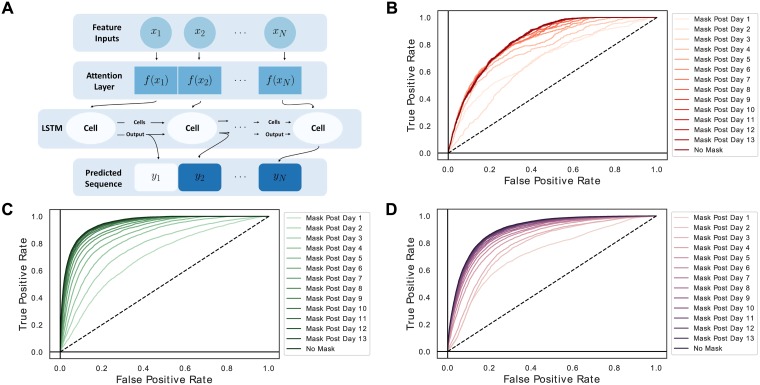

Fig 1. LSTMs incorporating attention can model multiple clinical targets.

(A) Schematic of a basic attention-LSTM sequence-to-sequence architecture. At each time point, the output of the input variable-level attention layer (highlighted in red) is passed to an LSTM cell, which sends both an encoded cell state vector and an encoded output (the hidden state) to the next time point. The encoded output is also used to predict the target at that time point. (B-D) ROC curves showing the approach's ability to model same-day myocardial ischemia (AUC 0.834), sepsis (AUC 0.952), and vancomycin administration (AUC 0.904) in the ICU. Because predictors and targets are drawn from the same time window in this formulation of the model, this is a modeling task rather than a prediction task.
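The data flow in panel A can be illustrated with a minimal NumPy sketch of a single forward pass. It is not the authors' implementation. The names `AttentionLSTM` and `forward`, the attention scoring function (a linear layer over the current input and the previous hidden state, followed by a softmax across input variables), and the single sigmoid readout per time point are all assumptions. The weights are random and untrained, and the inputs are synthetic. The sketch shows only how attention-weighted variables enter the LSTM cell, how the cell state and hidden state carry forward, and how the hidden state produces a per-time-point prediction.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def softmax(z):
    z = z - z.max()  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()


class AttentionLSTM:
    """Hypothetical forward-pass-only sketch; weights are random, not trained."""

    def __init__(self, n_vars, n_hidden, seed=0):
        rng = np.random.default_rng(seed)
        s = 0.1
        # Variable-level attention scores each input variable from [x_t; h_{t-1}] (assumed form)
        self.W_att = rng.normal(0, s, (n_vars, n_vars + n_hidden))
        self.b_att = np.zeros(n_vars)
        # LSTM gate weights stacked as [input, forget, output, candidate]
        self.W = rng.normal(0, s, (4 * n_hidden, n_vars))
        self.U = rng.normal(0, s, (4 * n_hidden, n_hidden))
        self.b = np.zeros(4 * n_hidden)
        # Per-time-point readout for a single binary target (assumed)
        self.w_out = rng.normal(0, s, n_hidden)
        self.b_out = 0.0
        self.n_hidden = n_hidden

    def forward(self, X):
        """X has shape (T, n_vars); returns per-step probabilities and attention weights."""
        H = self.n_hidden
        h = np.zeros(H)  # encoded output (hidden state)
        c = np.zeros(H)  # encoded cell state
        probs, alphas = [], []
        for x_t in X:
            # Attention weights over input variables sum to 1 at each time point
            alpha = softmax(self.W_att @ np.concatenate([x_t, h]) + self.b_att)
            x_tilde = alpha * x_t  # re-weight each input variable
            z = self.W @ x_tilde + self.U @ h + self.b
            i = sigmoid(z[:H])
            f = sigmoid(z[H:2 * H])
            o = sigmoid(z[2 * H:3 * H])
            g = np.tanh(z[3 * H:])
            c = f * c + i * g  # cell state passed to the next time point
            h = o * np.tanh(c)  # output passed forward and used for this step's prediction
            probs.append(sigmoid(self.w_out @ h + self.b_out))
            alphas.append(alpha)
        return np.array(probs), np.array(alphas)


# Usage on synthetic inputs: 24 time points, 5 variables
model = AttentionLSTM(n_vars=5, n_hidden=8)
X = np.random.default_rng(1).normal(size=(24, 5))
probs, alphas = model.forward(X)
print(probs.shape, alphas.shape)  # (24,) (24, 5)
```

The per-time-point attention weights (`alphas`) are one reason this design is attractive for clinical data: they indicate which input variables the model weighted most heavily at each step. In a real model all weights would be learned jointly by backpropagation through time.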