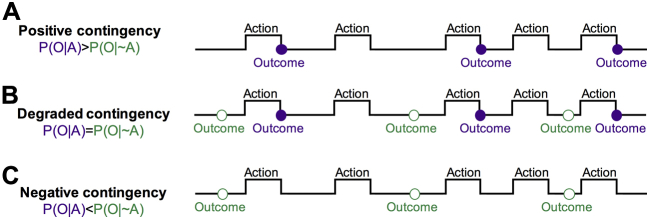

Figure 1.

Contingency manipulation. To degrade the contingency, once agents have learned to perform an action to receive a reward with a certain probability, a schedule of noncontingent outcome delivery is superimposed. As the frequency of noncontingent outcomes increases, the overall contingency (i.e., the causal association between an action and its consequences) is degraded or becomes negative. If guided by the goal-directed system, an agent should stop responding in the face of contingency degradation. (A) Diagram illustrating a schedule with a positive contingency, in which the outcome is delivered on performance of an action with a given probability [P(O|A)]. (B) Contingency is degraded by also delivering outcomes in the absence of an action with a given probability [P(O|∼A)]. If the contingency is degraded to the extent that the two probabilities are equal, the causal status of the action is nil, and the probability of the reinforcer is the same regardless of any response. (C) When P(O|∼A) is higher than P(O|A), the contingency becomes negative, and the action reduces the probability of reinforcer delivery. P(O|A), probability of outcome given an action; P(O|∼A), probability of outcome given the absence of an action; violet filled circles, contingent outcomes; green empty circles, noncontingent outcomes.
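The three panels can be summarized by comparing the two conditional probabilities. The sketch below is illustrative only: it assumes the contingency is quantified as the difference P(O|A) − P(O|∼A), a common formalization often written ΔP, which the caption itself does not name. The function names `delta_p` and `classify_contingency` and the example probabilities are hypothetical and are not taken from the figure.

```python
def delta_p(p_o_given_a: float, p_o_given_not_a: float) -> float:
    """Return P(O|A) - P(O|~A), an assumed measure of action-outcome contingency."""
    for p in (p_o_given_a, p_o_given_not_a):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie in [0, 1]")
    return p_o_given_a - p_o_given_not_a


def classify_contingency(dp: float, tol: float = 1e-9) -> str:
    """Label a contingency value as positive, nil, or negative."""
    if dp > tol:
        return "positive"
    if dp < -tol:
        return "negative"
    return "nil"


# Hypothetical schedules mirroring panels A, B, and C (values are made up).
schedules = {
    "A": (0.5, 0.0),  # outcomes delivered only after the action
    "B": (0.5, 0.5),  # noncontingent outcomes equally likely
    "C": (0.2, 0.6),  # outcomes more likely without the action
}

for panel, (p_a, p_not_a) in schedules.items():
    dp = delta_p(p_a, p_not_a)
    print(panel, round(dp, 3), classify_contingency(dp))
```

Under this assumption, panel B corresponds to a difference of zero (the action has no causal status), and panel C to a negative difference (the action lowers the probability of the outcome).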