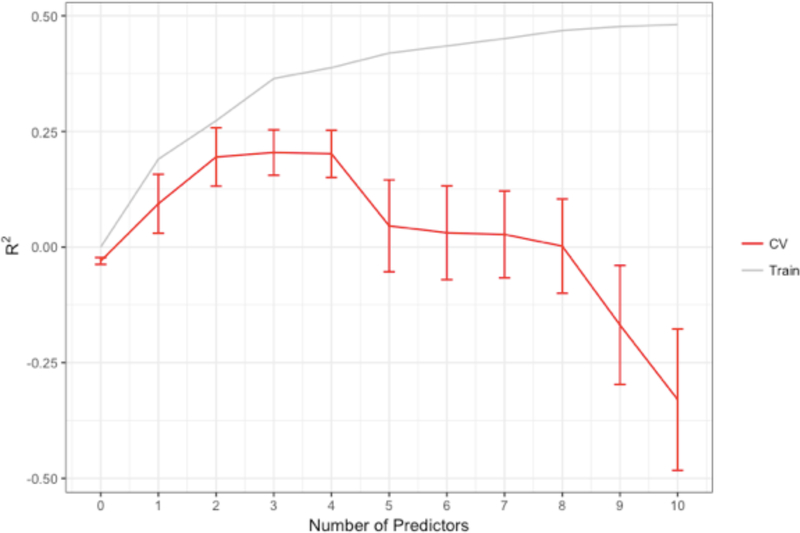

Figure 6. R-squared for the training sample and for cross-validation as a function of the number of predictors.

It is apparent from Figure 6 that while adding predictors explains a progressively larger portion of the variance in the training sample, it yields diminishing returns in cross-validation. In fact, the returns do more than diminish: including more than 4 predictors is actively detrimental to finding a reproducible solution. Our data also suggest severe overfitting when too many predictors are included. The predictive R-squared is negative, which indicates that the model is fitting noise and that its predictions on held-out data are worse than simply predicting the mean for everyone.
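To make the link between a negative predictive R-squared and "worse than predicting the mean" concrete, here is a minimal sketch. It assumes predictive R-squared is computed as 1 - SS_res / SS_tot on held-out predictions, with the mean of the held-out observations as the baseline. The exact formula behind Figure 6 is not stated above, so treat this as an illustration rather than the analysis actually used. The function name `r_squared` and the toy numbers are hypothetical.

```python
from statistics import fmean
from typing import Sequence


def r_squared(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Return 1 - SS_res / SS_tot for predictions on held-out data.

    Assumption: the baseline is the mean of the observed held-out values,
    so a model that predicts that mean for every case scores exactly 0.
    """
    if len(y_true) != len(y_pred) or len(y_true) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    mean_y = fmean(y_true)
    # Squared error of the model's predictions.
    ss_res = sum((y - p) ** 2 for y, p in zip(y_true, y_pred))
    # Squared error of the "predict the mean for everyone" baseline.
    ss_tot = sum((y - mean_y) ** 2 for y in y_true)
    if ss_tot == 0:
        raise ValueError("observed values have zero variance; R-squared is undefined")
    return 1.0 - ss_res / ss_tot


# Hypothetical held-out observations (mean = 6.0, SS_tot = 20.0).
observed = [3.0, 5.0, 7.0, 9.0]

print(r_squared(observed, [3.0, 5.0, 7.0, 9.0]))  # perfect predictions: 1.0
print(r_squared(observed, [6.0, 6.0, 6.0, 6.0]))  # predicting the mean: 0.0
print(r_squared(observed, [9.0, 3.0, 9.0, 5.0]))  # SS_res = 60.0, worse than the mean: -2.0
```

The last case shows the mechanism: an overfit model's held-out squared error (60) exceeds the baseline's (20), so the ratio is above 1 and R-squared drops below zero. Training R-squared cannot show this, because adding predictors to a least-squares fit never increases its in-sample residual error. For reference, scikit-learn's `r2_score` uses the same held-out-mean definition and can likewise return negative values.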