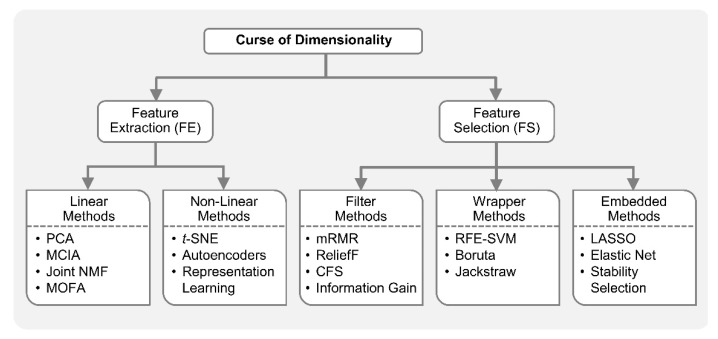

Figure 1.

Machine learning (ML) with curse of dimensionality. ML-based dimensionality reduction (DR) approaches, for tackling the curse of dimensionality, can be classified into feature extraction (FE) and feature selection (FS). FE methods project data from a high-dimensional space to a lower dimensional space, while FS methods identify a small relevant subset of original features in order to reduce the dimensionality. Principal component analysis (PCA), multi-omics factor analysis (MOFA), multiple co-inertia analysis (MCIA), and joint non-negative matrix factorization (NMF) are some examples of FE methods applied in integrative analysis. These FE approaches assume linear relationships in the dataset. Nonlinear FE methods also exist including t-SNE, autoencoders, representation learning, etc. ML-based FS is broadly divided into filter, wrapper and embedded methods. Filter methods such as maximal-relevance and minimal-redundancy (mRMR), correlation-based FS (FCS), ReliefF and Information Gain are employed as a pre-processing step before training any model, while wrapper methods such as recursive feature elimination-support vector machine (RFE-SVM) and Boruta incorporate a predictive model to judge the importance of features. Embedded methods which include least absolute shrinkage and selection operator (LASSO), Elastic Net, stability selection, etc., perform feature selection as part of the model building process.