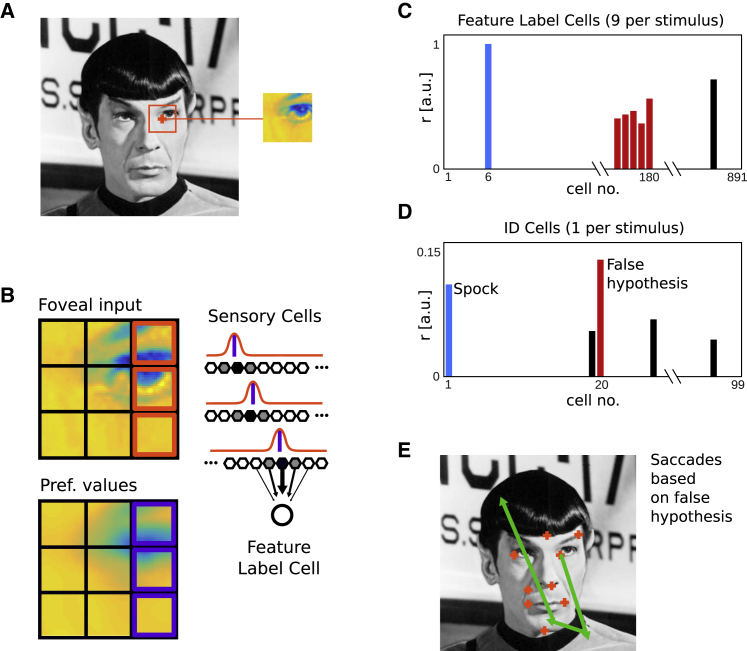

Figure 2. Feature Detection and Ambiguity

(A) The first (randomly chosen) stimulus feature is assumed to attract attention in a bottom-up manner.

(B) The foveated feature is compared to imprecise reference values by banks of sensory cells (hexagons). Each pixel maximally drives a given set of sensory cells (filled hexagons). Here, the responses for three pixels are illustrated (red Gaussians and pixels). During training, a blurred version of the stimulus is presented (purple pixels and bars [preferred values] under Gaussians), resulting in feature ambiguity. Connections between sensory cells and feature label cells are learned (black arrows; only one set of connections is shown for clarity).

(C) Feature ambiguity could lead to a feature label cell from an incorrect stimulus identity being the most active, or multiple feature label cells from an incorrect stimulus being partially active (red).

(D) In either case, the corresponding (incorrect, red) stimulus identity cell will receive more input than the correct one (Spock, blue), and the system starts with an incorrect hypothesis.

(E) Thus, the incorrect stimulus identity cell (red) determines the next saccade(s), which cannot bring the memorized features of the stimulus into foveal focus.

Image credit: Mr. Spock: public domain image.
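The ambiguity described in panels (B) to (D) can be illustrated with a toy sketch. It is not the model's actual implementation: the Gaussian tuning width, the number of sensory cells, the three-pixel features, the blur kernel, the Hebbian-style weight rule, and all names (`population_response`, `blur`, `stimulus_a`, `stimulus_b`) are assumptions chosen only to show how training on blurred input can make the wrong feature label cell the most active.

```python
import numpy as np

# Assumed bank of sensory cells: 21 preferred values spanning intensities 0..1.
preferred = np.linspace(0.0, 1.0, 21)
sigma = 0.1  # assumed tuning width


def population_response(pixels):
    """Gaussian tuning: one row of sensory-cell responses per pixel."""
    diff = pixels[:, None] - preferred[None, :]
    return np.exp(-diff**2 / (2.0 * sigma**2))


def blur(pixels):
    """Blur a 1D feature with an assumed [0.25, 0.5, 0.25] kernel (edge padded)."""
    padded = np.pad(pixels, 1, mode="edge")
    return np.convolve(padded, [0.25, 0.5, 0.25], mode="valid")


# Two hypothetical three-pixel features belonging to different stimuli.
stimulus_a = np.array([0.2, 0.6, 0.2])  # the stimulus actually presented
stimulus_b = np.array([0.0, 1.0, 0.0])  # a different stimulus

# Training on blurred stimuli: the weights onto each feature label cell
# copy the sensory response to the blurred feature (Hebbian-style assumption).
weights = np.stack([
    population_response(blur(stimulus_a)),
    population_response(blur(stimulus_b)),
])

# Test: present the sharp version of stimulus A and read out both label cells.
response = population_response(stimulus_a)
activations = np.sum(weights * response[None, :, :], axis=(1, 2))
winner = int(np.argmax(activations))

print("label cell activations (A, B):", activations)
print("most active label cell:", "A" if winner == 0 else "B")
```

In this toy configuration, the blurred version of stimulus B lies closer to the sharp stimulus A than A's own blurred version does, so the label cell for B receives the larger input. This mirrors the incorrect initial hypothesis in panel (D).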