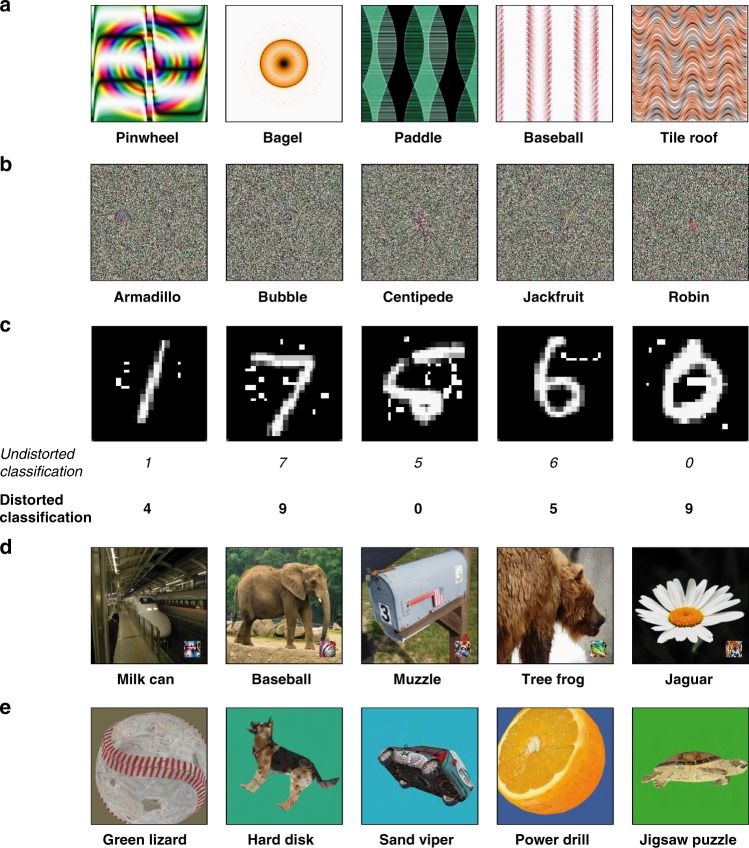

Fig. 1. Examples of adversarial images that “fool” convolutional neural networks (CNNs) trained to classify familiar objects, with labels indicating the machine’s classifications. a Indirectly encoded “fooling” images (from ref. 14). b Directly encoded “fooling” images (from ref. 14). c Perturbed adversarial images that cause the machine to classify one kind of digit as another (from ref. 15). d The LaVAN attack (ref. 13) can cause a machine to misclassify a natural image even when the noise is localized to a corner of the image. e “Robust” adversarial images (ref. 12) are renders of 3D objects that are misclassified from multiple viewpoints (and can even be physically produced in the real world).