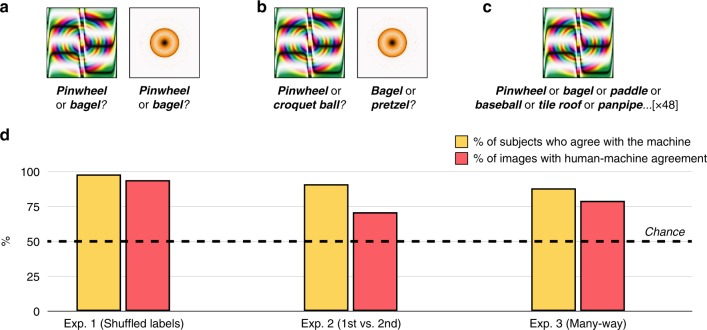

Fig. 2.

Forced-choice classification with indirectly encoded fooling images. **a** In Experiment 1, 200 subjects saw one fooling image at a time (48 images total) and chose between two candidate labels: the machine's choice for that image, and a random label drawn from the other images in the image set. **b** In Experiment 2, 200 subjects chose between the machine's first-choice classification and its second-choice classification. **c** In Experiment 3a, 200 subjects saw the same images as before, but with all 48 labels visible at once. **d** In all three experiments, most subjects agreed with the machine more often than would be predicted by chance responding (yellow bars), and most images showed human-machine agreement more often than would be predicted by chance responding (red bars). Because raw classification accuracy in human subjects is modulated by factors such as attention, motivation, engagement with the task, and time spent studying an image, we report the percentage of subjects who agreed with the machine at above-chance rates, and the percentage of images that showed above-chance human-machine agreement. For Experiment 1, the 95% confidence interval for the percentage of subjects with above-chance classification was [94.6%, 99.4%], and it was [82.8%, 98.7%] for the percentage of images with above-chance classification. For Experiment 2, these intervals were [87.7%, 95.7%] and [58.0%, 83.7%], respectively. For Experiment 3a, these intervals were [83.2%, 93.2%] and [67.7%, 90.7%], respectively. Across all three experiments, these outcomes were reliably different from chance at p < 0.001 (two-sided binomial probability test).
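The two statistics named in the caption, a two-sided binomial test against chance and a 95% confidence interval on a proportion, can be sketched as follows. This is an illustration under stated assumptions, not the authors' analysis code: the chance level of 0.5, the Wilson score method for the interval, the function names, and the example counts are all hypothetical choices, since the caption does not specify how its intervals were computed.

```python
from math import comb, sqrt


def binom_two_sided_p(k, n, p=0.5):
    """Exact two-sided binomial p-value.

    Sums the probabilities of every outcome that is no more likely
    than the observed count k under the null success rate p.
    Assumption: p = 0.5 as the chance level for a two-alternative task.
    """
    pmf = [comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(n + 1)]
    # Small tolerance so floating-point ties with pmf[k] are included.
    threshold = pmf[k] * (1 + 1e-7)
    return min(1.0, sum(q for q in pmf if q <= threshold))


def wilson_interval(k, n, z=1.96):
    """Wilson score interval for a proportion k/n (z = 1.96 for ~95%).

    Assumption: one common interval method, chosen for illustration.
    """
    phat = k / n
    denom = 1 + z * z / n
    centre = (phat + z * z / (2 * n)) / denom
    half = z * sqrt(phat * (1 - phat) / n + z * z / (4 * n * n)) / denom
    return centre - half, centre + half


# Hypothetical counts, not taken from the paper: suppose 40 of 48
# images showed above-chance human-machine agreement.
k_images, n_images = 40, 48
print("p-value:", binom_two_sided_p(k_images, n_images))
print("95% CI:", wilson_interval(k_images, n_images))
```

The exact test is used here rather than a normal approximation because the image-level sample is small (48 images); for the subject-level sample of 200 either approach would give a similar answer.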