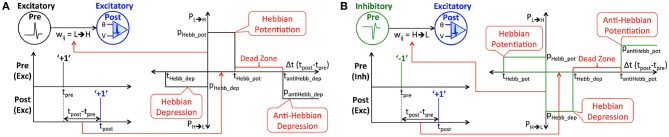

Figure 2.

(A) Illustration of eHB-STDP, an STDP-based probabilistic learning rule that incorporates Hebbian and anti-Hebbian learning mechanisms, for training the binary synaptic weights connecting excitatory pre- and post-neurons that fire positive spikes. The synaptic weight is probabilistically potentiated for a small positive time difference between the pre- and post-spikes (Hebbian in nature), and probabilistically depressed for a large positive time difference (anti-Hebbian in nature) or a small negative time difference (Hebbian in nature). The switching probability is held constant within the Hebbian potentiation, Hebbian depression, and anti-Hebbian depression windows, and is zero in the dead zone. (B) Illustration of iHB-STDP for binary synaptic weights connecting inhibitory pre-neurons, which fire negative spikes, to excitatory post-neurons. The iHB-STDP dynamics are obtained by mirroring the eHB-STDP dynamics about the Δt (t_post − t_pre) axis.
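
The piecewise-constant rule described in this caption can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' implementation. The caption gives no numeric values, so the window edges, the switching probabilities, the 0/1 weight encoding, the placement of the dead zone, and the handling of Δt = 0 are all assumptions, and every function and constant name is hypothetical. The iHB-STDP variant reads "mirroring about the Δt axis" as swapping potentiation and depression, which is an interpretation of the caption.

```python
import random

# Hypothetical window edges (ms) and probabilities; the caption gives no values.
T_HEBB_DEP = -10.0    # lower edge of the Hebbian depression window (small negative dt)
T_HEBB_POT = 10.0     # upper edge of the Hebbian potentiation window (small positive dt)
T_ANTI_START = 20.0   # lower edge of the anti-Hebbian depression window (large positive dt)
T_ANTI_END = 40.0     # upper edge of the anti-Hebbian depression window
P_POT = 0.1           # constant switching probability, Hebbian potentiation
P_DEP_HEBB = 0.1      # constant switching probability, Hebbian depression
P_DEP_ANTI = 0.1      # constant switching probability, anti-Hebbian depression


def ehb_stdp_window(dt):
    """Map dt = t_post - t_pre to an (action, probability) pair."""
    if 0.0 < dt <= T_HEBB_POT:
        return ("potentiate", P_POT)
    if T_HEBB_DEP <= dt < 0.0:
        return ("depress", P_DEP_HEBB)
    if T_ANTI_START <= dt <= T_ANTI_END:
        return ("depress", P_DEP_ANTI)
    # Assumed dead zone: everything else, including dt == 0.
    return ("none", 0.0)


def ihb_stdp_window(dt):
    """iHB-STDP, read here as eHB-STDP with potentiation and depression swapped."""
    action, p = ehb_stdp_window(dt)
    swapped = {"potentiate": "depress", "depress": "potentiate", "none": "none"}
    return (swapped[action], p)


def stochastic_update(weight, dt, window, rng):
    """Switch a binary weight (assumed 0 or 1) with the window's probability."""
    action, p = window(dt)
    if action != "none" and rng.random() < p:
        return 1 if action == "potentiate" else 0
    return weight


if __name__ == "__main__":
    rng = random.Random(0)
    w = 0
    for dt in (5.0, 15.0, 30.0, -5.0):
        w = stochastic_update(w, dt, ehb_stdp_window, rng)
        print(dt, ehb_stdp_window(dt), w)
```

Because the switching probability is constant inside each window, the rule reduces to a table lookup on Δt followed by a single random draw, which is the property that makes it suitable for binary weights.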