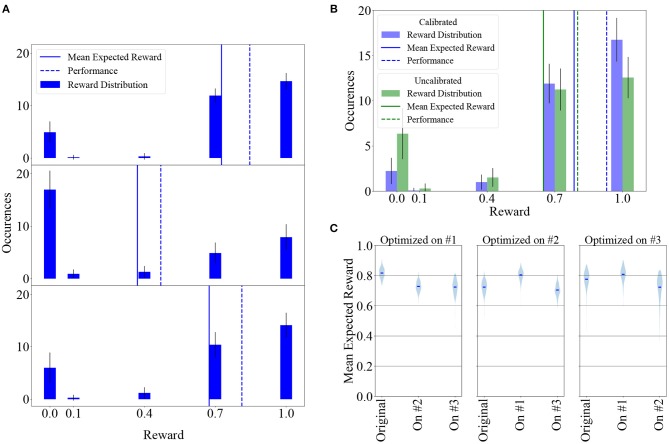

Figure 7.

Learning can largely supplant individual calibration of neuron parameters by adapting synaptic weights to compensate for neuronal variability. (A) Top: Reward distribution over 100 experiments measured with a previously learned, fixed weight matrix. Middle: Same as above, but with the neurons randomly permuted in each of the 100 experiments. The agent's performance, and therefore its received reward, declines because the weight matrix was adapted to a specific pattern of fixed-pattern noise. Bottom: Allowing the agent to learn for 50,000 additional iterations after its neurons have been randomly shuffled leads to weight re-adaptation, which increases its performance and received reward. In these experiments, LIF parameters were not calibrated individually per neuron. (B) Reward distribution after 50,000 learning iterations in 100 experiments for a calibrated and an uncalibrated system; learning largely compensates for the difference between the two. (C) Results can be reproduced on different chips. Violin plot of the mean expected reward after hyperparameter optimization on chips #1, #2, and #3. Results are shown for the calibrated case. All other results in this manuscript were obtained using chip #1.