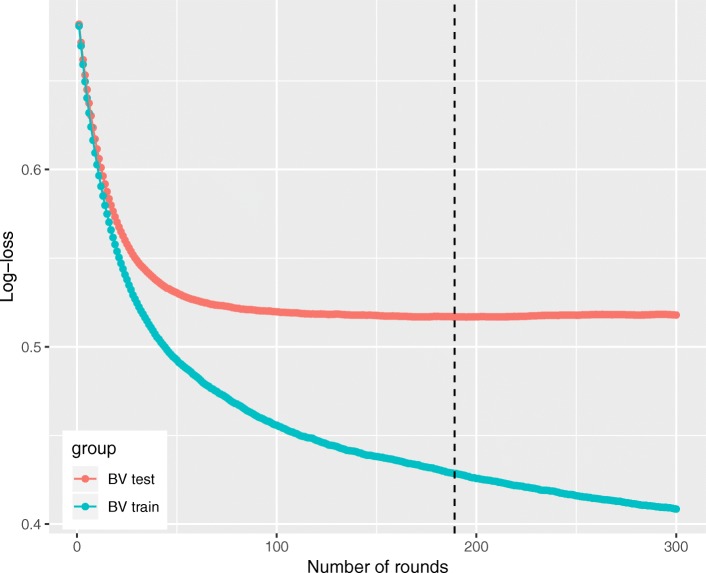

Fig. 3.

Training process of the extreme gradient boosting (XGBoost) machine. Sample output of bootstrap validation (BV) during XGBoost hyperparameter tuning, using the values specified in the final XGBoost model (learning rate = 0.04, minimum loss reduction = 10, maximum tree depth = 9, subsample = 0.6, and number of trees = 300). The vertical axis shows the log-loss for the training and testing datasets. The dashed vertical line marks the number of boosting rounds with the minimum log-loss in the test sample. The conditions for a well-tuned model were satisfied: BV training log-loss decreases as the number of trees in the ensemble increases, and BV testing log-loss stays below 0.693 (i.e., the log-loss of a binary classifier that performs no better than chance: −log 0.5 ≈ 0.693) and only slightly above BV training log-loss as the number of trees grows.
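The chance-level threshold and the two tuning conditions in the caption can be illustrated with a small, library-free sketch. It assumes log-loss is the mean binary cross-entropy with the natural logarithm (which is what makes a constant prediction of 0.5 score ln 2 ≈ 0.693). The function names, the `max_gap` threshold standing in for "only slightly more", and the example curves are all hypothetical illustrations, not the authors' bootstrap-validation code or data.

```python
import math
from typing import Sequence


def binary_log_loss(y_true: Sequence[int], p_pred: Sequence[float], eps: float = 1e-15) -> float:
    """Mean binary cross-entropy (natural log); probabilities are clipped away from 0 and 1."""
    if len(y_true) != len(p_pred) or len(y_true) == 0:
        raise ValueError("y_true and p_pred must be non-empty and of equal length")
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(y_true)


def best_round(test_curve: Sequence[float]) -> int:
    """1-based boosting round with the minimum test log-loss (the dashed line in the figure)."""
    return min(range(len(test_curve)), key=lambda i: test_curve[i]) + 1


def looks_well_tuned(train_curve: Sequence[float], test_curve: Sequence[float],
                     max_gap: float = 0.05) -> bool:
    """Heuristic version of the caption's two conditions.

    max_gap is a hypothetical threshold for "only slightly more"; the caption gives no number.
    """
    chance = math.log(2)  # -log(0.5) ≈ 0.693, the no-better-than-chance log-loss
    # Condition 1: training log-loss never increases as trees are added.
    train_decreasing = all(b <= a for a, b in zip(train_curve, train_curve[1:]))
    # Condition 2: final test log-loss beats chance and stays close to training log-loss.
    final_test, final_train = test_curve[-1], train_curve[-1]
    return train_decreasing and final_test < chance and (final_test - final_train) <= max_gap


# A constant 0.5 prediction reproduces the chance-level baseline.
print(round(binary_log_loss([0, 1, 1, 0], [0.5] * 4), 3))  # 0.693

# Hypothetical per-round curves, for illustration only.
train = [0.68, 0.60, 0.52, 0.50]
test = [0.686, 0.62, 0.53, 0.54]
print(best_round(test))              # 3
print(looks_well_tuned(train, test))  # True
```

Checking only the final round for the test condition is a simplifying choice; with a small learning rate such as 0.04, the first few rounds start close to 0.693 anyway.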