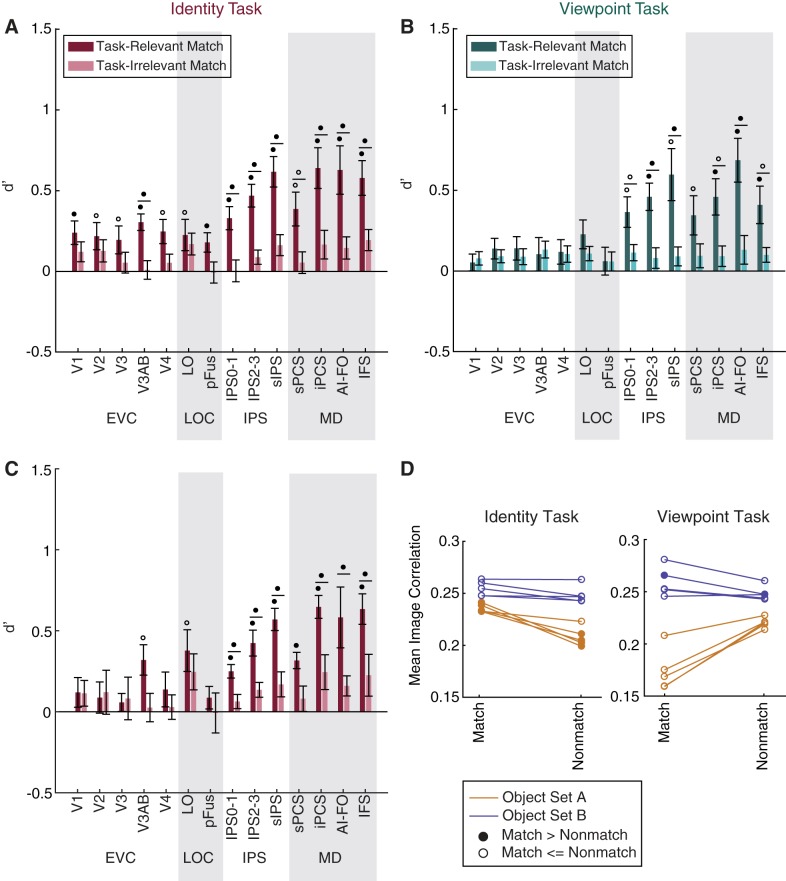

Fig. 6.

Control analyses related to Fig. 5: viewpoint and identity match information in MD regions is not driven by low-level image statistics. A and B: to address the possibility that match status could have been inferred from low-level visual properties, we removed all trials in which an object had a high degree of shape similarity to the previous object and repeated the analyses of Fig. 5. A: identity match information remained above chance in all regions. B: viewpoint match information dropped to chance in the early visual cortex (EVC; comprising V1, V2, V3, V3AB, and V4) and lateral occipital complex (LOC; comprising LO and pFus) but remained above chance in multiple-demand (MD) network [superior precentral sulcus (sPCS), inferior precentral sulcus (iPCS), anterior insula/frontal operculum (AI/FO), and inferior frontal sulcus (IFS)] regions of interest (ROIs). For individual subject data, see Fig. 7. C: after identifying that the pairwise similarity between images in object set A was informative about identity match status, we reanalyzed the identity task data using only the subjects shown object set B. Identity match classification in early visual and ventral visual cortex dropped below significance when subjects shown object set A were removed but remained above chance in MD regions. P values were computed at the subject level over these 5 subjects and FDR-corrected across ROIs. Open circles indicate significance at q = 0.05; closed circles indicate significance at q = 0.01. Circles above individual bars indicate above-chance classification performance (test against 0); circles above pairs of bars (denoted by horizontal lines) indicate significant differences between bars (paired t-test). Error bars are ±SE. D: image correlation is predictive of identity match status for several subjects in object set A.
To assess the possibility that identity match classification in EVC may have been driven by low-level similarity between pairs of images, we used the Pearson correlation coefficient to calculate the similarity between all pairs of object images (excluding image pairs that were matched in both category and viewpoint and thus highly similar). For each subject and each feature, we calculated the mean correlation coefficient separately for image pairs that matched in that feature and for pairs that did not. In the plot, closed circles indicate that this mean value was significantly higher for matching pairs than for nonmatching pairs (α = 0.01). This finding suggests that the above-chance classification of identity match status observed in set A subjects may have been driven by low-level image features.
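The image-similarity control described for panel D can be sketched roughly as follows. This is a minimal illustration and not the authors' analysis code: the function names, the array layout, and the `identity`/`viewpoint` label vectors are assumptions made for the example, the caption's "category" is treated here as the identity label, and the significance test at α = 0.01 is omitted because the caption does not specify it.

```python
import numpy as np


def pairwise_image_correlations(images):
    """Pearson correlation between every pair of flattened images.

    `images` is assumed to have shape (n_images, height, width).
    """
    flat = np.asarray(images, dtype=float).reshape(len(images), -1)
    return np.corrcoef(flat)  # each row is treated as one variable


def mean_similarity_by_match(images, identity, viewpoint, feature="identity"):
    """Mean image correlation for matching vs. nonmatching pairs.

    `identity` and `viewpoint` are hypothetical per-image label vectors.
    Pairs matched in BOTH labels are excluded, following the caption.
    """
    corr = pairwise_image_correlations(images)
    identity = np.asarray(identity)
    viewpoint = np.asarray(viewpoint)

    same_id = identity[:, None] == identity[None, :]
    same_view = viewpoint[:, None] == viewpoint[None, :]

    # Count each unordered pair once and skip the diagonal.
    upper = np.triu(np.ones_like(corr, dtype=bool), k=1)
    keep = upper & ~(same_id & same_view)

    match = same_id if feature == "identity" else same_view
    return corr[keep & match].mean(), corr[keep & ~match].mean()
```

A higher mean for matching pairs than for nonmatching pairs would indicate that low-level image similarity carries information about match status, which is the concern this control analysis addresses.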