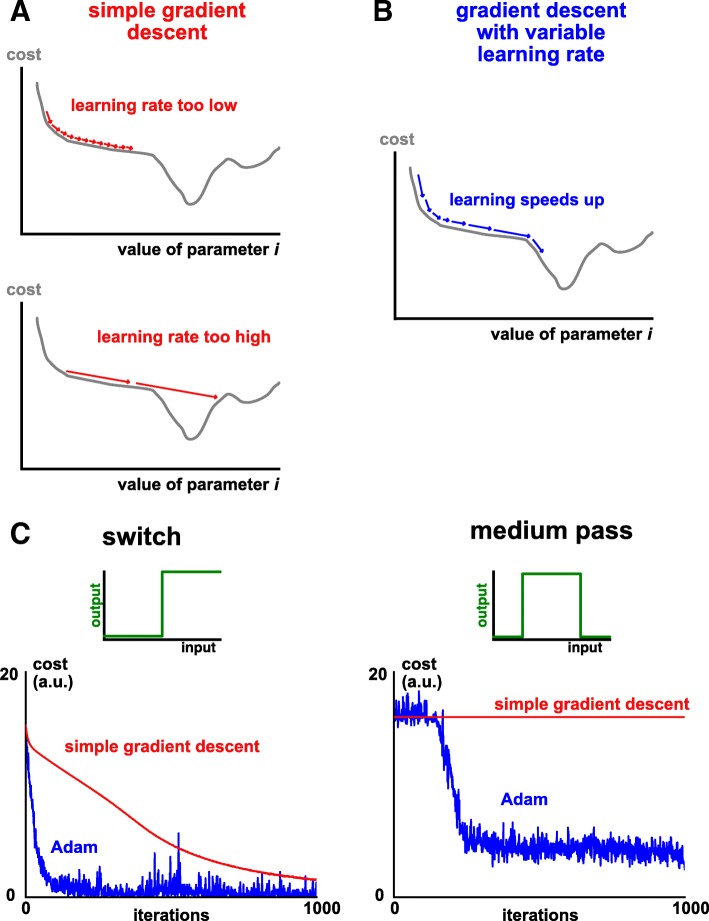

Fig. 2.

Adam is an effective gradient descent algorithm for ODE models. a Using a constant learning rate in gradient descent creates difficulties in the optimization process. If the learning rate is too low (upper schematic), the algorithm ‘gets stuck’ in plateau regions with a shallow gradient (saddle points in high dimensions). If instead the learning rate is too high (lower schematic), important features are missed and/or the algorithm fails to converge. b An adaptive learning rate substantially improves optimization (schematic). Intuitively, learning speeds up when traversing a shallow but consistent gradient. c Cost plotted against iteration number during minimization, comparing classic gradient descent (red) with the Adam algorithm (blue). Left: an ultrasensitive switch. Right: a medium pass filter.
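The contrast in panels a and b can be illustrated with a minimal sketch. It compares constant-learning-rate gradient descent with the Adam update rule (Kingma & Ba, 2015) on a hypothetical toy cost, a very shallow quadratic bowl standing in for a plateau region. This is not the ODE cost from panel c (neither the ultrasensitive switch nor the medium pass filter), and the hyperparameters (`lr`, `beta1`, `beta2`, `eps`) are assumptions taken from the commonly used Adam defaults, not values stated in the figure.

```python
import math


def cost(x):
    # Hypothetical toy cost: a very shallow quadratic bowl, standing in
    # for a plateau region of an ODE-model cost landscape (assumption).
    return 0.001 * x ** 2


def grad(x):
    # Analytic derivative of cost(x).
    return 0.002 * x


def gradient_descent(x0, lr=0.1, n_steps=200):
    """Classic gradient descent with a constant learning rate."""
    x = x0
    for _ in range(n_steps):
        x -= lr * grad(x)  # step size scales with the (tiny) gradient
    return x


def adam(x0, lr=0.1, n_steps=200, beta1=0.9, beta2=0.999, eps=1e-8):
    """Adam update rule: adaptive step sizes from running gradient moments."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, n_steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g      # running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g  # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)         # bias corrections for the zero init
        v_hat = v / (1 - beta2 ** t)
        # Dividing by sqrt(v_hat) normalizes the step, so a shallow but
        # consistent gradient still yields steps of roughly size lr.
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


if __name__ == "__main__":
    x0 = 5.0
    x_gd = gradient_descent(x0)
    x_adam = adam(x0)
    print(f"gradient descent: x = {x_gd:.3f}, cost = {cost(x_gd):.2e}")
    print(f"Adam:             x = {x_adam:.3f}, cost = {cost(x_adam):.2e}")
```

With the same learning rate, plain gradient descent barely moves on this shallow slope (each step shrinks `x` by a factor of only 0.9998), whereas Adam's normalized steps carry it to the neighborhood of the minimum within the same number of iterations, mirroring the qualitative behavior sketched in panel b.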