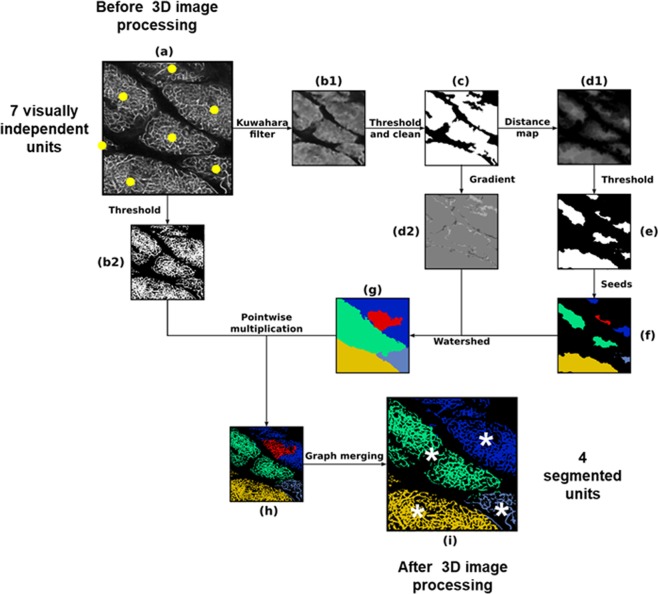

Figure 1.

Schematic illustration of the 3D image post-treatment workflow. Each sub-figure shows the result of one step of the algorithm, and the links between them describe the operations leading from one step to the next. (a) Downscaled original image before processing; (b1) image filtered using a customized Kuwahara filter (each pixel is replaced with the mean of the mean-to-variance ratios evaluated over neighbouring boxes); (b2) downscaled original image binarized using a simple threshold; (c) filtered image binarized using a simple threshold and cleaned using mathematical morphology closing operations to fill gaps and remove the smallest “islands”; (d1) distance map; (d2) gradient image computed with the Sobel method; (e) image binarized using a simple threshold; (f) seeds for the watershed, obtained by computing connected components; (g) watershed image obtained using the flooding method; (h) pre-segmented image obtained by point-wise multiplication of the downscaled binarized original image (b2) and the watershed image (g); (i) final segmented image after a graph merging method based on the contact surface, relative to the mean total surface, evaluated for every pair of pre-segmented subunits. Each colour codes one 3D segmented unit. Note that the segmentation procedure ultimately detects four different units (white asterisks, panel (i)), whereas the human eye would distinguish seven units (yellow points, panel (a)).
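
As a rough illustration of steps (d1), (e), (f), (g) and (h), the following minimal sketch chains a distance map, a simple threshold, connected-component seeding, flooding and point-wise multiplication using `scipy.ndimage`. It is not the authors' implementation: the function name `presegment`, the parameter `seed_fraction` and the synthetic two-ball volume are hypothetical, and the flooding is run here on the inverted distance map, which is an assumption (the caption does not state which image is flooded, and the Sobel gradient of step (d2) is not used).

```python
import numpy as np
from scipy import ndimage as ndi


def presegment(binary, seed_fraction=0.6):
    """Sketch of steps (d1), (e), (f), (g) and (h) for a 3D boolean mask.

    `seed_fraction` is a hypothetical parameter, not a value from the source.
    """
    binary = np.asarray(binary, dtype=bool)
    # (d1) distance of each foreground voxel to the nearest background voxel
    dist = ndi.distance_transform_edt(binary)
    # (e) simple threshold that keeps only the core of each unit
    cores = dist > seed_fraction * dist.max()
    # (f) seeds: one integer label per connected component of the cores
    seeds, n_seeds = ndi.label(cores)
    # (g) flooding from the seeds; watershed_ift expects a uint8 or uint16
    # cost image, so the inverted distance map is rescaled to 0..255
    # (assumption: the source may flood a different image, e.g. the gradient)
    cost = dist.max() - dist
    cost = np.round(255 * cost / cost.max()).astype(np.uint8)
    flooded = ndi.watershed_ift(cost, seeds.astype(np.int32))
    # (h) point-wise multiplication restricts the basins to the binary mask
    return flooded * binary, n_seeds


# Synthetic test volume: two overlapping balls of radius 8 voxels
x, y, z = np.ogrid[:40, :24, :24]
ball_a = (x - 12) ** 2 + (y - 12) ** 2 + (z - 12) ** 2 <= 64
ball_b = (x - 26) ** 2 + (y - 12) ** 2 + (z - 12) ** 2 <= 64
mask = ball_a | ball_b

labels, n_seeds = presegment(mask)
```

In this toy volume the two balls touch through a narrow neck, so thresholding the distance map leaves one core per ball and the flooding assigns each ball its own label. The merging step (i) is not covered by this sketch.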