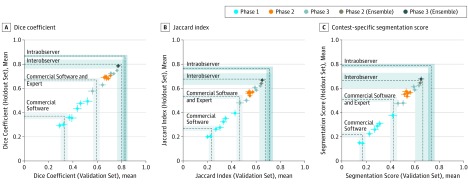

Figure 2. Artificial Intelligence Algorithm Performance in Each Phase of the Contest Compared Against Benchmarks.

Average performance (points) and standard error (whiskers) on 3 metrics, (A) Dice coefficient, (B) Jaccard index, and (C) contest-specific segmentation score (S score), for the top 7 algorithms from phase 1, the top 5 algorithms from phase 2, the top 5 algorithms from phase 3, the phase 2 ensemble model, and the phase 3 ensemble model on the validation (x-axis) and holdout (y-axis) data sets. Performance benchmarks for comparison include commercially available region-growing-based algorithms before (commercial software) and after (commercial software and expert) human intervention, the average of interobserver comparisons among 6 radiation oncologists, and the intraobserver variation of the human expert performing the segmentation task twice independently on the same tumor. Benchmarks are shown as dashed lines; gray bands indicate estimated uncertainties, as described in eTable 3 in the Supplement.
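For readers unfamiliar with the overlap metrics in panels A and B, the following is a minimal sketch of how a Dice coefficient and a Jaccard index are conventionally computed for a pair of binary segmentation masks, and how a mean and standard error might be summarized across cases. It uses the standard textbook definitions and is not the contest's scoring code. The function names, the empty-mask convention, and the toy masks are assumptions made for illustration. The contest-specific S score is not reproduced here because its definition is not given in this caption.

```python
import numpy as np


def dice_coefficient(pred, truth):
    """Standard Dice overlap: 2|A ∩ B| / (|A| + |B|) for binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    total = pred.sum() + truth.sum()
    if total == 0:
        # Assumed convention: two empty masks count as perfect agreement.
        return 1.0
    return float(2.0 * np.logical_and(pred, truth).sum() / total)


def jaccard_index(pred, truth):
    """Standard Jaccard overlap: |A ∩ B| / |A ∪ B| for binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    union = np.logical_or(pred, truth).sum()
    if union == 0:
        # Assumed convention: two empty masks count as perfect agreement.
        return 1.0
    return float(np.logical_and(pred, truth).sum() / union)


def mean_and_standard_error(scores):
    """Mean and standard error (sample SD / sqrt(n)) of per-case scores."""
    scores = np.asarray(scores, dtype=float)
    sem = scores.std(ddof=1) / np.sqrt(scores.size)
    return float(scores.mean()), float(sem)


# Toy 2 x 3 masks (hypothetical data, not taken from the study).
pred_mask = [[1, 1, 0], [0, 1, 0]]
true_mask = [[1, 0, 0], [0, 1, 1]]

dice = dice_coefficient(pred_mask, true_mask)
jaccard = jaccard_index(pred_mask, true_mask)
```

The two metrics are monotonically related by J = D / (2 - D), so rankings of algorithms under one generally carry over to the other, although the numeric values differ.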