Abstract

Involving patients in research broadens a researcher’s field of influence and may generate novel ideas. Preclinical research is integral to the progression of innovative healthcare. These are not patient-facing disciplines and implementing meaningful public and patient involvement (PPI) can be a challenge. A discussion forum and thematic analysis identified key challenges of implementing public and patient involvement for preclinical researchers. In response we developed a “PPI Ready” planning canvas. For contemporaneous evaluation of public and patient involvement, a psychometric questionnaire and an open source tool for its evaluation were developed. The questionnaire measures information, procedural and quality assessment. Combined with the open source evaluation tool, researchers are notified if public and patient involvement is unsatisfactory in any of these areas. The tool is easy to use and adapts a psychometric test into a format familiar to preclinical scientists. Designed to be used iteratively across a research project, it provides a simple reporting grade to document satisfaction trend over the research lifecycle.

Introduction

Involving patients or the interested public in research broadens a researcher’s field of influence, generating novel ideas, challenges and discussions. Basic, translational and preclinical research (hereafter preclinical) is integral to the progression of innovative healthcare; indeed, the majority of National Institutes of Health (NIH) funds in the USA are focused on preclinical research[1]. Preclinical research is not a traditionally patient-facing discipline and implementing meaningful public and patient involvement (PPI) can be a serious challenge in the absence of well-defined support structures.

Increasingly in healthcare, patients and the interested public are sought as partners in study design and governance. This trend is growing due to an increasing requirement by national, international and charitable funding bodies to include PPI as a condition of funding[2–6]. We use the INVOLVE definition of PPI, whereby PPI in research is defined as research carried out with or by patients and those who have experience of a condition, rather than for, to, or about them[7]. PPI has multiple demonstrated positive impacts on research, including the potential to reduce waste in the research landscape[4,8,9]. Furthermore, if a project or researcher is funded by a public body, there is a duty to demonstrate accountability for the expenditure of supporting funding. Thus, incorporating PPI should be beneficial to both researcher and research outputs. There tends to be a recognition within the biomedical community that PPI can be beneficial to all stakeholders[10]. Awareness of PPI is certainly increasing; however, the incorporation of meaningful PPI as standard research practice is progressing more slowly than expected[11,12].

PPI is collaborative by its very nature and its impact on key stakeholders should be assessed in considering PPI success. Preclinical researchers are not patient-facing by nature and as such, some of the basic aspects of implementing meaningful PPI can be challenging without the relevant support structures. Understanding the perceived challenges and barriers as viewed by preclinical researchers is a first step in the development of appropriate resources and guidance documents to facilitate valuable collaboration and involvement between the public and the largest recipient cohort of publicly funded health research[13]. Here we describe an analysis of the views of preclinical researchers into the challenges regarding PPI. In response, we have developed open source tools to help individual researchers prepare themselves to successfully incorporate PPI into their research, and have also developed a PPI assessment survey and analysis tool for iterative and contemporaneous assessment which facilitates refinement and improvement of PPI activities in response to feedback from the patients or public involved.

Materials and methods

Ethics statement

Ethical approval was granted by UCD Human Research Ethics Committee. Survey responses were collected anonymously with informed consent of the participant for non-commercial use of the data provided.

Public and patient involvement statement

Patients were engaged through The Patient Voice in Arthritis Research PPI group. People with experience of living with any rheumatic disease from any area of Ireland were invited to apply to be involved in this study. Communication was remote, via email and phone. The patient insight partner (PIP) group of 12 were sent a review guide and a structured template for their review (Supporting Methods B in S1 File). PIPs were asked specifically about: language accessibility, relevance, usefulness, necessity of the questionnaire, missing aspects, the scale used, the likelihood of intended use, questionnaire length, overall views and alternative assessment methods. The questionnaire was adjusted in response to PIP feedback. Telephone follow-up was used to obtain views on questionnaire refinement. PIPs were also asked to share the pilot survey within their relevant networks. The results of the study will be shared with PIPs via email and through the patient/researcher co-produced newsletter of the UCD Centre for Arthritis Research, News Rheum.

Researcher view on public and patient involvement

Researchers

An institution-wide notice and a school-wide email were sent to researchers in the School of Medicine, The Conway Institute and the associated research faculties. Basic, biomedical, biomolecular, translational and preclinical researchers were invited to attend a discussion forum focusing on what they perceive to be barriers or challenges to patient involvement in research. Researchers from all experience levels (undergraduate to PI) and a range of disease areas and research disciplines were in attendance (researcher characteristics, Table 1).

Table 1. PPI discussion forum researcher characteristics.

| Research Experience | n (%) | Primary Disease Area | n (%) | Primary Research Area | n (%) |
|---|---|---|---|---|---|
| Undergrad | 1 (6%) | Rheumatic Diseases | 4 (25%) | Statistical | 2 (13%) |
| Research Assistant | 1 (6%) | Diabetes | 2 (13%) | Molecular | 7 (44%) |
| Project Manager | 1 (6%) | Prostate Cancer | 4 (25%) | Basic | 6 (38%) |
| Postdoc | 7 (44%) | Breast Cancer | 2 (13%) | Psycho-social | 1 (6%) |
| Research Fellow | 3 (19%) | Cancer (Other) | 2 (13%) | | |
| Principal Investigator | 3 (19%) | Multi | 2 (13%) | | |

Discussion forum format

A semi-structured model was used as per Braun and Clarke[14]. A facilitator’s guide was prepared based on a review of the literature[15–28]. The discussion forum opened with an introduction to PPI: what it is, what it is not, how it applies to research, and its consequences for funding, ethics and policy[29]. Clarifications of key terms were also printed and posted on the walls for the entirety of the discussion forum for reference by all who attended. The attendees were divided into three discussion groups, each with a facilitator and a note-taker. An option for written contribution was also provided for those who did not wish to voice their opinions. The discussion forum lasted 2 hours and was held in an innovation meeting room, designed to facilitate community brainstorming within the research institution.

Basic, translational and preclinical health researchers within the UCD College of Health and Agricultural Sciences were invited to a discussion forum to express their views on implementing PPI. All attendees consented to the non-commercial use of the data provided. A facilitated semi-structured discussion forum structure was used, consisting of three groups, each with a note-taker and a facilitator. There was also a roaming central facilitator. Notes were combined and a qualitative textual analysis was performed.

Planning canvas development

In response to the preclinical researcher views on implementing PPI, we proposed that reflecting, in advance of starting a research project, on the main theoretical challenges for implementing PPI, which stem from the uncertain boundaries of the concept, would facilitate downstream success for PPI. A common tool to help businesses rethink their strategy in a fast-evolving landscape is the Business Model Canvas (BMC). The BMC is used to enhance strategic thinking about business innovation[30]. We used the theory and design concept of the BMC, informed by researcher views, to develop the PPI Ready: Researcher Planning Canvas. The PPI Ready Canvas is designed to facilitate the researcher’s preparedness for PPI, rather than for planning an individual PPI activity.

Questionnaire development

A review of the health, public engagement, and marketing research literature was conducted[17,31–44]. A long questionnaire of 15 questions was developed in response to the researcher discussion forum (Supporting Methods C in S1 File). Three key processes for assessment were information (n = 4 questions), procedural fairness (n = 4) and quality (n = 7). The global assessment question “Overall, how satisfied/dissatisfied are you with your involvement in this project” was included as question 16 for convergent validity.

Face validity and questionnaire accessibility

A voluntary, community-recruited panel of PIPs (n = 12) reviewed the questionnaire for face validity and language accessibility. The PIP review document can be found in supporting information (Supporting Methods B in S1 File). Questions were simplified and refined in response, and changes were discussed with PIPs. An 11-point satisfaction scale with 3 anchor points (at 0, 5 and 10) was used for all questions[45].

Survey pilot

A survey containing the 15 public involvement (PI) questions, one global assessment of satisfaction (GAS) question and the well-characterized 10-question general self-efficacy (GSE) scale[46] was piloted on a cohort of 63 adults (Supporting Methods C in S1 File). All respondents self-reported as having attended a meeting or event(s) that gave them the opportunity to discuss or express their views about health research or to share their experience with researchers. Three did not consent to data storage and use; their responses were therefore excluded from analysis. Of the 60 remaining respondents, 72% (n = 43) were patients, 8% (n = 5) were carers, 17% (n = 10) were family members and 3% (n = 2) were other members of the public. All surveys were complete and there were no missing entries.

Data analysis

Data were analysed in IBM SPSS v24. Factor analysis with a correlation matrix was used to identify collinearity and redundancy within the 15-question PI questionnaire. As expected, there was a high degree of collinearity, and variables were removed based on a correlation greater than 0.8. To inform refinement, PIP insight, views and reported relevance were considered. Eight questions remained after refinement (8QPI). Factor analysis was repeated on the 8QPI data, which met all requirements for the determinant (>0.0001), KMO sampling adequacy (>0.8) and Bartlett’s test (p<0.05). Cronbach’s alpha with a cut-off of 0.7 was used for internal consistency reliability testing. Discriminant validity was analysed via factor analysis, component analysis and correlation between the 8QPI and GSE. Convergent validity was established via linear regression between the mean 8QPI response and the GAS question, using a cut-off value of 1-(2SE) (two standard errors).
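
The correlation-based screen described above can be illustrated outside SPSS. The following is a minimal sketch only, in plain Python, of listing question pairs whose Pearson correlation exceeds 0.8. The function names (`pearson`, `collinear_pairs`) and the example scores are hypothetical and are not part of the original analysis, which also drew on PIP input when deciding which questions to remove.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length score lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def collinear_pairs(responses, threshold=0.8):
    """List question pairs whose correlation exceeds the threshold.

    `responses` maps a question label to its scores (one per respondent).
    """
    flagged = []
    for q1, q2 in combinations(responses, 2):
        r = pearson(responses[q1], responses[q2])
        if r > threshold:
            flagged.append((q1, q2, round(r, 3)))
    return flagged


# Made-up scores for three questions and five respondents (illustration only).
example = {
    "Q1": [2, 4, 6, 8, 10],
    "Q2": [1, 3, 5, 7, 9],   # moves in lockstep with Q1
    "Q3": [7, 2, 9, 4, 6],
}
print(collinear_pairs(example))
```

The sketch only reports candidate pairs; choosing which member of a pair to drop remains a judgement call, which in this study was informed by PIP views on relevance.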

Modelling the 8QPI into a quality control framework

The validated PI questionnaire includes two questions for information assessment, two questions for assessment of procedural fairness, four questions for quality assessment and the GAS question. We developed a simple flagging system for each of the three key assessment categories that are measured on a per-individual basis. Furthermore, there is also an overall assessment grade that provides a global measure of PIP satisfaction with an individual PPI scheme.

The Excel analysis template can be found in supporting information (Supporting Methods A in S1 File and S1 Excel Template). PPI assessment survey (PAS) results are entered directly or via a linked file. For each question, responses of 0–3 issue an ‘Immediate Attention Required’ flag, responses of 4–6 issue a ‘Some Attention Required’ flag and responses of 7–10 issue a ‘No Attention Required’ flag[47]. Content validity is automatically tested by comparing the GAS response to the overall mean PAS (Q1-8) response. If the GAS is within the mean +/- 2 standard errors (as determined in the PAS pilot), a ‘PASS’ flag is generated; otherwise a ‘FAIL’ flag is produced and the data must be interpreted with caution.
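
The per-question banding described above can be expressed in a few lines. This is a hedged sketch in Python rather than the Excel formulae used in the template. The function name `question_flag` and the example responses are hypothetical.

```python
def question_flag(score):
    """Map a single 0-10 response to the attention flag described in the text."""
    if not 0 <= score <= 10:
        raise ValueError("PAS responses are expected on a 0-10 scale")
    if score <= 3:
        return "Immediate Attention Required"
    if score <= 6:
        return "Some Attention Required"
    return "No Attention Required"


# One PIP's made-up responses to Q1-8 (illustration only).
responses = [8, 3, 5, 9, 10, 6, 7, 0]
flags = {f"Q{i}": question_flag(s) for i, s in enumerate(responses, start=1)}
for question, flag in flags.items():
    print(question, flag)
```

Because flags are produced per question and per PIP, a single low response stays visible even when the other answers are high.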

An output summary table is generated on tab 2. Each category (communication, procedural and quality) receives a score based on the mean responses for all PIPs. An overall score based on the mean response for all questions (Q1-8) and an associated PPI Grade are generated for simple PPI satisfaction reporting. Based on the risk matrix concept, overall scores of 0–3 are ‘High Risk’ for PIP dissatisfaction/PPI failure, scores of 4–6 represent a ‘Moderate Risk’ and scores of 7–10 are ‘Acceptable’[48].
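
The summary calculation can be sketched as follows. This is an illustrative Python version, not the template itself. The question-to-category mapping follows the survey (Q1-2, Q3-4, Q5-8). The names `CATEGORIES`, `risk_grade` and `summarise` are hypothetical, the responses are made up, and the treatment of non-integer means (cut-offs at 4 and 7) is an assumption about how the bands extend to averaged scores.

```python
from statistics import mean

# Question-to-category mapping taken from the survey:
# Q1-2 informational, Q3-4 procedural, Q5-8 quality.
CATEGORIES = {
    "Informational": (1, 2),
    "Procedural": (3, 4),
    "Quality": (5, 6, 7, 8),
}


def risk_grade(score):
    """Band a mean score; cut-offs at 4 and 7 are assumed for non-integers."""
    if score < 4:
        return "High Risk"
    if score < 7:
        return "Moderate Risk"
    return "Acceptable"


def summarise(all_responses):
    """Summarise Q1-8 responses; one list of 8 scores per PIP."""
    summary = {}
    for name, questions in CATEGORIES.items():
        scores = [pip[q - 1] for pip in all_responses for q in questions]
        summary[name] = mean(scores)
    overall = mean([score for pip in all_responses for score in pip])
    summary["Overall"] = overall
    summary["Grade"] = risk_grade(overall)
    return summary


# Made-up responses from three PIPs at one time point (illustration only).
pips = [
    [4, 5, 8, 9, 8, 7, 9, 8],
    [3, 4, 7, 8, 9, 8, 8, 9],
    [5, 3, 9, 8, 7, 9, 8, 10],
]
print(summarise(pips))
```

In this made-up example the overall grade is acceptable while the informational category sits in the moderate band, which is the kind of subscale issue an overall score alone would hide.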

Results and discussion

Determining preclinical researcher views on PPI

Attendees were encouraged to note their opinions and views in writing if they did not wish to vocalise them or if a point occurred to them after the discussion had moved on. Discussions focused on key challenges and facilitators for implementation of PPI. The challenges were broken into three concepts: barriers, worries and concerns. Barriers refer to institutional barriers that prevent or discourage active PPI (for example: ethics, funding, administrative supports). Worries refer to personal challenges a researcher faces in implementing PPI (for example: social anxiety, imposter syndrome). Concerns refer to challenges about the impact on research (for example: loss of research focus, or public discomfort with research methods such as animal research).

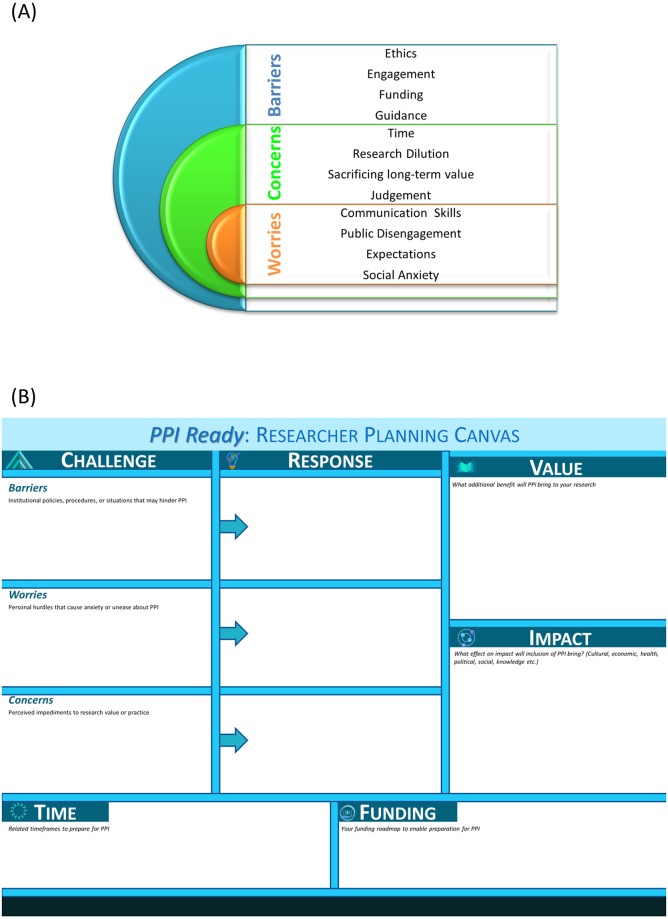

Notes were collated and a textual and thematic analysis was performed (Fig 1A). The major barriers identified were ethical challenges, engagement of patients and the interested public, funding to carry out PPI and a perceived lack of relevant guiding documents. Key concerns identified were time commitment, dilution of research objectives, sacrificing long-term value for short-term gains, and public judgement of ethically approved research (particularly animal models and experimentation). Personal worries that hindered PPI included lack of communication skills, fear of public disengagement, burden of expectation and social anxieties.

Fig 1. Key challenges hindering PPI and a tool for researchers to develop strategies to overcome these challenges.

(A) Thematic analysis of a discussion forum with preclinical researchers identified key challenges in the implementation and adoption of PPI into research practice. (B) A visual design tool based on the business model canvas, which we have called PPI Ready: Researcher Planning Canvas, was developed to aid the individual researcher to explore their personal barriers to adopting PPI and map a strategy to overcome or circumvent them.

Communication is a key factor in researcher hesitancy to adopt PPI

A common theme across all challenges was communication. Communication barriers included a lack of predefined routes or mechanisms to source PIPs, and a lack of guidance documents regarding the legal and ethical responsibilities for local, national and international involvement of patients in research.

“One of the biggest barriers is engaging patients and getting them to come in […] we need to be able to sell PPI to the public.”

[Principal Investigator, Diabetes Research]

Communication worries included discomfort or reluctance to talk about personal experiences with patients; fear of upsetting a patient; anxiety about how to handle disagreements with a PIP.

“Worried that a mistake I make will upset a patient”

[Postdoctoral Researcher, Cancer Biology].

Communication concerns included being able to explain and justify the need for preclinical and basic research, which has a longer term and less direct research impact. There was concern around public representatives bringing personal judgement upon research methods.

“Patients maybe against some aspects of research such as animal testing. We need […] to convey the importance of animal testing when it comes to the progression of research”

[Principal Investigator, Neuroscience]

There was concern that the time spent justifying research that has already been through rigorous ethics application to public partners with alternative agendas could slow the research process and be counter-productive.

Communicating with vulnerable groups is not in the preclinical researchers’ toolkit

Preclinical research training may include scientific communications, presentation skills and even media training. Seldom, however, is training provided for communicating with vulnerable groups such as patient cohorts. The worry of appropriately communicating with vulnerable groups was particularly prominent with less experienced researchers and in researchers investigating fatal and life-limiting illnesses.

“The unfortunate reality of PPI is that some patients may have life-threatening diseases. What happens if a patient dies? As a researcher I don’t know what to do in that situation and I don’t know how to handle it”

[Postdoctoral Researcher, Pathobiology].

More generally, there was a recurrent worry of upsetting the public, fear of “saying the wrong thing” or causing upset or public disengagement. The perceived lack of guidance, combined with the bespoke nature of PPI resulted in many researchers feeling the burden of publicly representing their research and their institute. Whereas they may be comfortable presenting a fully peer-reviewed “sanctioned” piece of research output, there was a personal and professional vulnerability associated with sharing early stage and on-going research with the public. Researchers felt they would be guarded and could not discuss preliminary research fully if a public member was present for fear of said research being misconstrued or offering false hope.

Time consumption is a major concern hindering PPI implementation

Overwhelmingly, the largest concern about implementing PPI was the time required for the engagement, recruitment, procedural and on-going management of public involvement. This may be a function of the relatively recent strategy change towards PPI in Ireland.

“I simply don’t have enough time to implement PPI. I can’t delegate any work in relation to PPI because I don’t think enough people know what it is and what to do”

[Principal Investigator, Diabetes].

In particular, researchers who had no experience in PPI had the strongest sense that the time consuming nature of PPI outweighed its benefit to preclinical research.

“Time spent engaging with patients is time spent away from doing research”

[Postdoctoral Researcher].

Again, this could be influenced by the lack of experience in engaging patients and the perceived lack of guidance and clarity around PPI[49] and dissociation between preclinical research and policy setting[50–52].

Development of a tool to help an individual researcher become PPI ready

PPI is bespoke; there is no standard formula for a good PPI initiative. However, one can be as prepared as possible to implement a bespoke PPI initiative. By reflecting on the main theoretical challenges for implementing PPI in advance of starting a research project, an individual researcher can facilitate the downstream success of their PPI initiative. An individual researcher’s theoretical challenges will stem from the uncertain boundaries of the concept of PPI. Therefore, we have adapted a standard business tool, the business model canvas (BMC), which is designed to enhance strategic thinking for business innovation and inform the exploitation of emerging opportunities in changing environments[30,53]. The PPI Ready Researcher Planning Canvas (Fig 1B) is designed to enhance strategic thinking about PPI and enable a researcher to prepare themselves for PPI. The PPI Ready Canvas is a visual plan with elements describing a researcher’s challenges, proposed solutions, the solutions’ value proposition, research impact, financial and time costs. It is designed to assist researchers in aligning their activities by illustrating potential benefits and trade-offs.

The PPI Ready Researcher Planning Canvas elements allow researchers to consider what the challenges are that are hindering their implementation of PPI. It is broken down into Barriers: Institutional policies, procedures or situations that may hinder PPI; Worries: Personal hurdles that cause anxiety or unease about PPI; Concerns: Perceived impediments to research value or practice. There is corresponding space to consider the response to the challenges. In order to consider the cost/benefit analysis of implementing PPI, there is a module of Value: what additional benefit will PPI bring to your research; and Impact: What effect on impact will inclusion of PPI bring? Consider cultural, economic, health, political, social and knowledge impacts. To ground the proposed solutions or responses, there are modules to consider both the timeframe required to prepare for PPI and also the associated costs and funding required.

A mechanism for iterative PPI assessment to allow incremental revisions and PPI development

Formalised PPI is a new concept in preclinical research. All initiatives, especially paradigm shifts, have associated risk. The earlier in the project lifecycle you can avoid a risk, the more accurate you can make your design. Many risks are not discovered until they have been integrated into a system, and it is not feasible to identify all risks. Therefore, just like any area of the research cycle and design process, PPI should be assessed, evaluated and improved iteratively in response to experience and lessons learned. This may be tricky considering PPI by its very nature is not prescriptive. The form that PPI takes will be bespoke depending upon the needs of the research. However, the underlying concepts for valuable and meaningful PPI remain constant and can be measured and assessed.

We have developed a PPI satisfaction assessment survey to be used for iterative and contemporaneous PPI assessment throughout the lifecycle of a research project (Table 2). The survey should be completed by PIPs throughout their role in the lifecycle of the research project. This iterative approach has a number of benefits, including: earlier risk mitigation, because PPI elements can be integrated progressively; accommodation of changing requirements and tactics as experience is gained and lessons are learned; refinement and improvement of PPI, resulting in a more robust and meaningful initiative; knowledge exchange, as institutions can learn from this approach and improve their processes; and enhanced reusability of PPI initiatives.

Table 2. Validated PPI assessment survey (PAS).

In response to the following questions, please indicate your level of satisfaction. 0 = very dissatisfied, 5 = neither satisfied nor dissatisfied, 10 = very satisfied.

| Value Category | Q. | How satisfied are you: |
|---|---|---|
| I | 1 | With the facilities provided? |
| I | 2 | That your needs were considered in the planning of the project meeting(s)? |
| P | 3 | With your level of contribution in relation to what you expected? |
| P | 4 | With your comfort in voicing your thoughts and opinions? |
| Q | 5 | With your understanding of the research project and its aims? |
| Q | 6 | That you understand the roles of other team members? |
| Q | 7 | With the communication and feedback tools that you have been asked to use in this project? |
| Q | 8 | That you know who to contact if an issue arises or, if you have concerns about the project or your involvement? |
| GAS | 9 | Overall, how satisfied/dissatisfied are you with your involvement in this project? |

I = Informational; P = Procedural; Q = Quality; GAS = Global Assessment of Satisfaction

PPI assessment survey development

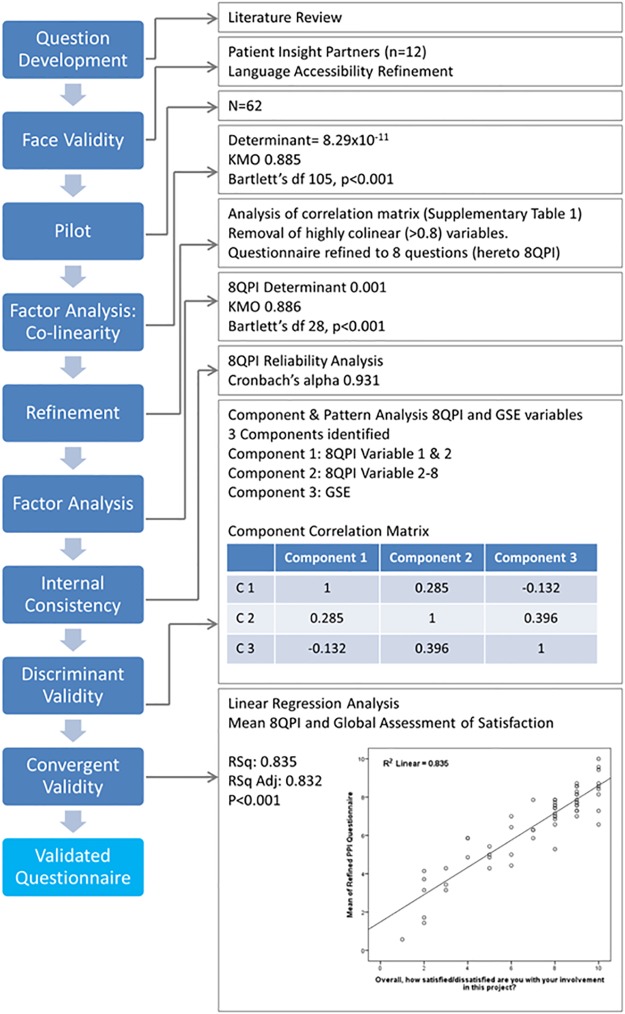

A review of the literature and qualitative review by PIPs (n = 12, Table 3) led to the development of 15 PPI assessment questions. Based on feedback from our PIPs and previous evaluation of scale types for health-related research, we used an 11-point satisfaction scale with 3 anchor points (0 = very dissatisfied, 5 = neither dissatisfied nor satisfied, 10 = very satisfied)[45,54]. The global assessment of satisfaction (GAS) question was added to the questionnaire as a measure of convergent validity. The 16-question PPI evaluation (PI) questionnaire and the well-characterized 10-question generalized self-efficacy scale (GSE)[46] were piloted on a cohort of 62 patients or interested public who self-reported as having attended a meeting or event that gave them the opportunity to discuss or express their views about health research or to share their experience with researchers. 60/62 provided the appropriate consent for analysis. The analysis pathway is outlined in Fig 2. Dimension reduction by oblique factor analysis indicated multicollinearity within the PI questionnaire (determinant 8.279 × 10⁻¹¹). Sampling was adequate (Kaiser-Meyer-Olkin (KMO) 0.885) and there was homogeneity of variance (Bartlett’s test df 105, p<0.001). Analysis of the correlation matrix (Table A in S1 File) led to the removal of highly collinear questions (Q3, 4, 7, 9, 11, 12 and 14). Factor analysis was rerun on the refined 8-question PI questionnaire (8QPI). The determinant value of 0.001 indicated an absence of collinearity. Sampling was adequate (KMO 0.886) and there was homogeneity of variance (Bartlett’s test df 28, p<0.001).

Table 3. PIP characteristics.

| Sex | n (%) | Public/Patient | n (%) | Rheumatic Disease | n (%) |
|---|---|---|---|---|---|
| Male | 3 (25%) | Patient | 11 (92%) | Osteoarthritis | 3 (25%) |
| Female | 9 (75%) | Carer | 1 (8%) | Rheumatoid Arthritis | 4 (33%) |
| | | | | Psoriatic Arthritis | 2 (17%) |
| | | | | Juvenile Idiopathic Arthritis | 1 (8%) |
| | | | | Not specified | 2 (17%) |

Fig 2. Development of the PPI assessment survey (PAS).

Questions were adapted from the literature and reviewed by a PIP cohort of n = 12 for face validity, relevance, jargon use, comprehension, and language and format accessibility. Question wording and scale were refined in response and reassessed by a subset of the PIP cohort. The refined questionnaire and the general self-efficacy scale (GSE) were piloted on a cohort of patients and public that had attended a research involvement or engagement event. N = 60 responses passed the consent check. Oblique factor analysis identified collinearity between PPI questions, which led to question exclusion based on quantitative analysis and informed by qualitative input from PIPs. The refined questionnaire passed content validity analysis, including internal validity, discriminant validity and convergent validity analysis.

Internal consistency was measured for the 8QPI questionnaire via reliability analysis and produced a Cronbach’s alpha of 0.932, indicating high reliability/internal consistency of the variables in the scale. Discriminant validity was measured between the 8QPI and GSE scales. Factor analysis identified 3 components: GSE variables, 8QPI variables 1 and 2, and 8QPI variables 3–8 (Table C in S1 File). Correlation between components ranged from -0.132 to 0.396 (Fig 2). The variance extracted (range 0.56–0.68) is greater than the correlation squared (range 0.02–0.12) and thus discriminant validity is established.
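
For readers unfamiliar with the reliability statistic, Cronbach’s alpha can be computed from the item variances and the variance of the summed scale. The following is a minimal sketch in Python, assuming sample variances (n - 1 denominator). The function name `cronbach_alpha` and the data are hypothetical and do not reproduce the pilot responses.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for `rows`, one list of item scores per respondent."""
    k = len(rows[0])                      # number of items in the scale
    items = list(zip(*rows))              # transpose to per-item columns
    item_var = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - item_var / total_var)


# Made-up responses: four respondents, three items (illustration only).
data = [
    [2, 3, 3],
    [5, 5, 6],
    [7, 8, 7],
    [9, 9, 10],
]
alpha = cronbach_alpha(data)
print(round(alpha, 3), "meets 0.7 cut-off:", alpha >= 0.7)
```

Values close to 1 indicate that the items vary together; the 0.7 cut-off used in the check mirrors the threshold stated in the methods.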

Convergent validity was measured via the correlation between the per-case mean 8QPI response and the GAS question (Overall, how satisfied/dissatisfied are you with your involvement in this project?), which was included in the pilot survey. Linear regression demonstrated a positive correlation (R 0.914, RSq 0.835 and Adjusted RSq 0.832, Fig 2).
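
As a consistency check on the reported regression statistics, the adjusted value can be recomputed from the reported R-squared. This worked example assumes the n = 60 analysed responses and a single predictor (the mean 8QPI response). The symbols n and p are introduced here for illustration only.

```latex
\begin{aligned}
R^2_{\mathrm{adj}} &= 1 - \left(1 - R^2\right)\frac{n - 1}{n - p - 1} \\
                   &= 1 - (1 - 0.835)\,\frac{60 - 1}{60 - 1 - 1} \\
                   &\approx 1 - 0.165 \times 1.017 \approx 0.832
\end{aligned}
```

Under these assumptions the result agrees with the reported adjusted value of 0.832.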

An open-source simple analysis and reporting tool for the PPI assessment survey

Time consumption was highlighted as a major barrier to implementing PPI in the preclinical sciences. Therefore, development of a PPI assessment survey (PAS) would be redundant unless it was quick and easy to use. The tool is simple by design, at the suggestion of preclinical researchers who did not want to need further training in order to use it. An Excel spreadsheet was selected because the programme is widely used and available, and researchers were comfortable with it. We have therefore developed a series of simple Excel templates with pre-formulated inputs for the analysis of the survey (Fig 3). Furthermore, there are linked templates that can be sent to the PIP, which allow the researcher’s file to be updated and analysed as the PIP fills in their survey response in a separate Excel document.

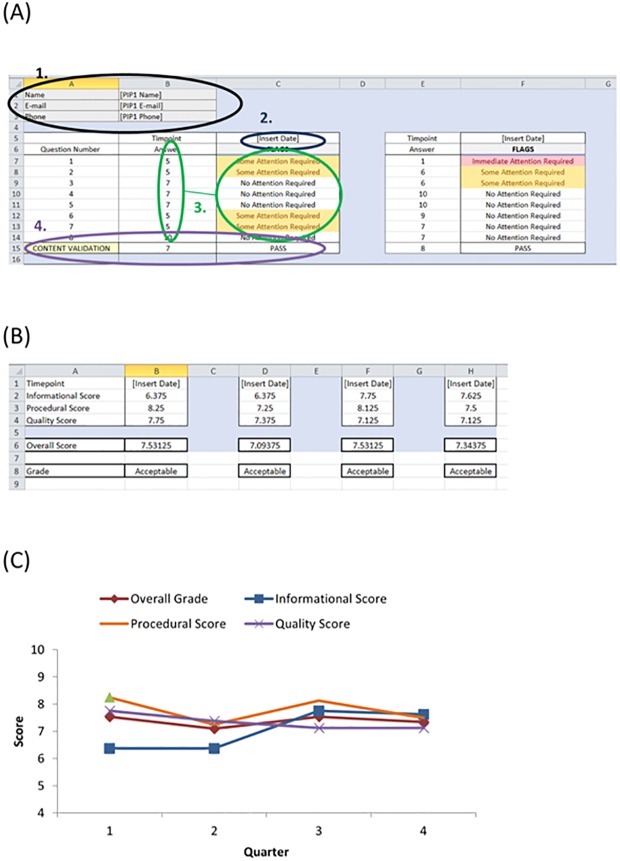

Fig 3. PAS assessment tool.

A preformatted Excel file with embedded analysis formulae is available for use with the PAS. (A) Tab 1 of the Excel file is the data input tab. The PIP details are entered in (1). The date is entered in (2). The responses to the PAS survey are entered in (3) and the flagging system is automatically generated. The PAS scores can be entered manually or can be updated via a linked Excel file sent directly to the PIP(s). (4) The GAS score should be within two standard errors of the mean PPI scores (Q1-8). If not, a FAIL flag is generated and caution is advised in the interpretation of results. (B) Tab 2 is the output summary. It summarises the scores for each value category across all PIPs for each given time point and also generates an overall score and grade. (C) The scores can be plotted over time to visualise the PPI satisfaction trend. As illustrated, the overall score is relatively flat, but plotting of each value score illustrates that the informational assessment required attention and improved upon implementation of changes (after the second quarter) to increase clarity in communications.

The analysis is multi-dimensional and uses a flagging system, familiar to preclinical researchers from standard quality control measures used by laboratory equipment, which is applied to each question answered at the individual PIP level. The banding for our flag system was based upon a previously published large analysis of different response scales[47]. If a PIP scores a question ≥7, no flag is generated. If a question is scored between 4–6, a yellow warning flag “Some attention required” is generated. A score on any individual question of ≤3 generates a red warning flag “Immediate attention required”. These flags alert the researcher to risks associated with their PPI initiatives and allow them to put control measures in place. The iterative use of the PAS over time will facilitate trend analysis to determine the success of the control measures.

The GAS question is included in the PAS (question 9). For validation of the survey during use, the analysis template has an inbuilt content validation (Fig 3). If the GAS response is within the range of the mean of Q1-8 +/- two standard errors, the PAS is consistent in its measurement of satisfaction and a “PASS” flag is produced. If the GAS is outside this range, a “FAIL” flag is produced and caution should be used in interpreting the results, as there may be an underlying construct skewing the PAS response.
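
The inbuilt validation amounts to a simple range check, sketched below in Python. The standard error derived from the pilot is not reported in the text, so `ASSUMED_SE` is a placeholder value. The function name `content_validity_flag` and the example scores are likewise hypothetical.

```python
from statistics import mean


def content_validity_flag(q1_to_q8, gas, standard_error):
    """Return 'PASS' if GAS lies within mean(Q1-8) +/- 2 * standard_error."""
    centre = mean(q1_to_q8)
    lower = centre - 2 * standard_error
    upper = centre + 2 * standard_error
    return "PASS" if lower <= gas <= upper else "FAIL"


# Placeholder standard error: the pilot-derived value is not given in the text.
ASSUMED_SE = 0.5

responses = [8, 7, 9, 8, 7, 8, 9, 8]   # made-up Q1-8 scores with a mean of 8
print(content_validity_flag(responses, gas=8, standard_error=ASSUMED_SE))
print(content_validity_flag(responses, gas=4, standard_error=ASSUMED_SE))
```

With these made-up numbers the accepted range is 7 to 9, so a GAS of 8 passes and a GAS of 4 fails, signalling that the question-level scores and the global rating disagree.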

There is also an overall PAS assessment grade produced based on the mean PIP response across all questions. This can be used as an easy reporting measure for annual grant reports and for assessing a PPI initiative across the lifecycle of the project. The overall grade uses categorisation based upon standard risk assessment, a concept familiar to most preclinical researchers[55]. An overall grade ≥7 is acceptable, 4–6 highlights a moderate risk to PPI success, and a grade <4 highlights a substantial risk to PPI success. The overall global grade is a useful tool for the measurement and reporting of PAS. However, if used in isolation there is a risk of masking potential subscale concerns. The PAS assesses three value levels: informational (quality and clarity of the information and communication provided, n = 2 questions), procedural fairness (consideration of the PIP needs, n = 2 questions), and quality (how valued and valuable a PIP perceives the PPI initiative, n = 4 questions). The analysis tool generates a per-category summary, which is the mean response from PIPs within each value category. This allows easy identification of improvements required between value categories and allows the researcher to pinpoint the area requiring refinement in order to improve their PAS grade (Fig 3B).

Limitations of this study

The PAS is designed to facilitate assessment of PPI for preclinical researchers, who tend to have little to no experience in qualitative, semi-quantitative or psychometric research. It is designed specifically for those with limited experience of, or access to, established PPI resources. It addresses the key concepts underlying successful PPI collaborations between patients/public and researchers and ensures they are being addressed and assessed throughout the lifecycle of the project. The use of the open source analysis tool removes the need for specialist training to use the PAS. The PAS can tell a researcher if there is a problem with PPI and in which category (information/communication, procedural fairness or quality); however, it does not identify the issue itself. The use of the flagging system on an individual PIP basis allows for the rapid identification of whether an issue is perceived by a single member of a PIP cohort or whether multiple PIPs have identified the same issue. This allows for simple, direct follow-up with the PIP(s) to establish the cause of the issue without in-depth qualitative analysis.
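Distinguishing an individual concern from a shared one amounts to counting, for each question, how many PIPs scored it below the no-flag threshold. A minimal sketch is given here, in which the function name, PIP labels and scores are hypothetical and any score below 7 is counted as flagged.

```python
from collections import Counter


def flagged_pips_per_question(responses, threshold=7):
    """Count, per question number, the PIPs whose score fell below the threshold."""
    counts = Counter()
    for scores in responses.values():
        for question, score in enumerate(scores, start=1):
            if score < threshold:
                counts[question] += 1
    return dict(counts)


# Hypothetical scores for three questions from three PIPs.
responses = {
    "PIP-A": [8, 3, 9],
    "PIP-B": [8, 5, 9],
    "PIP-C": [8, 9, 6],
}

for question, n in sorted(flagged_pips_per_question(responses).items()):
    scope = "shared by several PIPs" if n > 1 else "raised by a single PIP"
    print(f"Q{question}: flagged by {n} PIP(s), {scope}")
```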

Circumventing the qualitative analysis required by other assessment tools, such as feedback forms that allow free text input, reduces the time, specialist knowledge and training needed for PPI assessment. It also allows the satisfaction trend to be easily tracked across time and in response to improvement measures. This can be beneficial but is also a limitation, as the extent of information that can be assessed is reduced. A quantitative method cannot provide in-depth information on the motivations behind the levels of satisfaction, nor provide details of PIP experiences with PPI[56]. The use of the PAS with informal PIP feedback in response to issues identified in PAS analysis provides a realistic and feasible solution given the researcher challenges described above. However, for the most robust development and progression of PPI as a research and impact tool, a mixed methods approach using the PAS together with in-depth, exploratory, qualitative PPI narrative research and analysis could enable new topics and insights to emerge, which could also confirm the quantitative findings.

Concluding remarks

Preclinical researchers are increasingly required to wear multiple hats as part of the standard expectations of a research career position. Established roles include study design, conduct and experimental analysis, but researchers are also now expected to be professional teachers, presenters, public speakers, writers, reviewers, editors, managers and mentors. There is increasing pressure to commercialise research in-house, act as innovators and become social and political influencers[57,58]. It is therefore unsurprising that time commitment, communication and lack of guidance were considered major challenges to the implementation of PPI in preclinical research. Understanding that researchers face personal challenges, anxieties and worries, as well as institutional barriers and research concerns, is a first step in encouraging meaningful PPI collaborations. Addressing the challenges faced by preclinical researchers will greatly help the development of resources and guidance documents to facilitate PPI for one of the largest recipient cohorts of publicly funded health research[1,59].

The research described here will aid preclinical researchers in the formulation of PPI. Adequate preparation in advance of a research project with public involvement should improve the success of that involvement. All new concepts carry challenges and risk, and involving the public in research is no different. Challenges are inevitable; however, assessing public involvement iteratively through the use of the PAS described herein allows those challenges to be addressed and managed contemporaneously. In conclusion, we have developed a tool specifically for the needs of preclinical researchers to facilitate the implementation, assessment and improvement of PPI throughout the lifecycle of a research project.

Supporting information

Supporting methods and tables.

(PDF)

(XLSX)

Acknowledgments

We wish to acknowledge our PIPs, all voluntary members of the UCD PPI initiative, The Patient Voice in Arthritis Research: Jacqui Browne, Wendy Costello, John Sherwin, Breda Fay, Eileen Tunney, Nicola Nestor and all Patient Voice in Arthritis Research contributors to the project.

The authors also wish to thank Dr. Geertje Schuitema (UCD College of Business) and Dr. Ricardo Segurado (UCD School of Public Health, Physiotherapy and Sports Science) for their assistance and advice.

Data Availability

All relevant data are within the manuscript and its Supporting Information files.

Funding Statement

ED received funding from the Irish Research Council (GOIPD/2017/1310) URL: http://research.ie/. JM and ED received funding from the Health Research Board (SS-2018-094) URL: https://www.hrb.ie/. The funders had no role in the study design, data collection or analysis, decision to publish or preparation of the manuscript.

References

- 1. Moses H III, Matheson DM, Cairns-Smith S, George BP, et al. (2015) The anatomy of medical research: US and international comparisons. JAMA 313: 174–189. doi:10.1001/jama.2014.15939

- 2. Ireland HRBo (2015) Ireland: Research. Evidence. Action. HRB Strategy 2016–2020. Ireland: HRB.

- 3. van Thiel GS, Pieter (2013) Priorities medicines for Europe and the world "A public health approach to innovation". World Health Organization.

- 4. Richards T, Snow R, Schroter S (2016) Co-creating health: more than a dream. BMJ 354.

- 5. Institute P-COR (2015) What we mean by engagement. USA: PCORI.

- 6. Research NIfH (2017) Patients and the public. UK.

- 7. INVOLVE What is public involvement in research. UK: INVOLVE (NHS).

- 8. Chalmers I, Bracken MB, Djulbegovic B, Garattini S, Grant J, et al. (2014) How to increase value and reduce waste when research priorities are set. The Lancet 383: 156–165.

- 9. Chu LF, Utengen A, Kadry B, Kucharski SE, Campos H, et al. (2016) “Nothing about us without us”—patient partnership in medical conferences. BMJ 354.

- 10. Pollock J, Raza K, Pratt AG, Hanson H, Siebert S, et al. (2016) Patient and researcher perspectives on facilitating patient and public involvement in rheumatology research. Musculoskeletal Care 15: 395–399. doi:10.1002/msc.1171

- 11. Ocloo J, Matthews R (2016) From tokenism to empowerment: progressing patient and public involvement in healthcare improvement. BMJ Quality & Safety.

- 12. Nagraj S, Gillam S (2011) Patient participation groups. BMJ 342.

- 13. Chatterjee SK, Rohrbaugh ML (2014) NIH inventions translate into drugs and biologics with high public health impact. Nature Biotechnology 32: 52. doi:10.1038/nbt.2785

- 14. Braun V, Clarke V (2006) Using thematic analysis in psychology. Qualitative Research in Psychology 3: 77–101.

- 15. Gibson A, Welsman J, Britten N (2017) Evaluating patient and public involvement in health research: from theoretical model to practical workshop. Health expectations: an international journal of public participation in health care and health policy 20: 826–835.

- 16. Bagley HJ, Short H, Harman NL, Hickey HR, Gamble CL, et al. (2016) A patient and public involvement (PPI) toolkit for meaningful and flexible involvement in clinical trials–a work in progress. Research Involvement and Engagement 2: 15. doi:10.1186/s40900-016-0029-8

- 17. Stocks SJ, Giles SJ, Cheraghi-Sohi S, Campbell SM (2015) Application of a tool for the evaluation of public and patient involvement in research. BMJ Open 5.

- 18. Brett J, Staniszewska S, Mockford C, Herron-Marx S, Hughes J, et al. (2014) Mapping the impact of patient and public involvement on health and social care research: a systematic review. Health expectations: an international journal of public participation in health care and health policy 17: 637–650.

- 19. Boivin A, Lehoux P, Lacombe R, Burgers J, Grol R (2014) Involving patients in setting priorities for healthcare improvement: a cluster randomized trial. Implementation science: IS 9: 24–24.

- 20. Oliver SR, Rees RW, Clarke-Jones L, Milne R, Oakley AR, et al. (2008) A multidimensional conceptual framework for analysing public involvement in health services research. Health expectations: an international journal of public participation in health care and health policy 11: 72–84.

- 21. Domecq JP, Prutsky G, Elraiyah T, Wang Z, Nabhan M, et al. (2014) Patient engagement in research: a systematic review. BMC Health Services Research 14: 89. doi:10.1186/1472-6963-14-89

- 22. Shippee ND, Domecq Garces JP, Prutsky Lopez GJ, Wang Z, Elraiyah TA, et al. (2015) Patient and service user engagement in research: a systematic review and synthesized framework. Health Expectations 18: 1151–1166. doi:10.1111/hex.12090

- 23. Carman KL, Dardess P, Maurer M, Sofaer S, Adams K, et al. (2013) Patient And Family Engagement: A Framework For Understanding The Elements And Developing Interventions And Policies. Health Affairs 32: 223–231. doi:10.1377/hlthaff.2012.1133

- 24. Esmail L, Moore E, Rein A (2015) Evaluating patient and stakeholder engagement in research: moving from theory to practice. Journal of Comparative Effectiveness Research 4: 133–145. doi:10.2217/cer.14.79

- 25. Crawford MJ, Rutter D, Manley C, Weaver T, Bhui K, et al. (2002) Systematic review of involving patients in the planning and development of health care. BMJ (Clinical research ed) 325: 1263–1263.

- 26. Forsythe L, Heckert A, Margolis MK, Schrandt S, Frank L (2018) Methods and impact of engagement in research, from theory to practice and back again: early findings from the Patient-Centered Outcomes Research Institute. Quality of life research: an international journal of quality of life aspects of treatment, care and rehabilitation 27: 17–31.

- 27. Barello S, Graffigna G, Vegni E (2012) Patient engagement as an emerging challenge for healthcare services: mapping the literature. Nursing research and practice 2012: 905934–905934. doi:10.1155/2012/905934

- 28. Boote J, Wong R, Booth A (2015) 'Talking the talk or walking the walk?' A bibliometric review of the literature on public involvement in health research published between 1995 and 2009. Health expectations: an international journal of public participation in health care and health policy 18: 44–57.

- 29. Liabo K, Boddy K, Burchmore H, Cockcroft E, Britten N (2018) Clarifying the roles of patients in research. BMJ 361.

- 30. Spieth P, Schneckenberg D, Ricart JE (2014) Business model innovation–state of the art and future challenges for the field. R&D Management 44: 237–247.

- 31. Staniszewska S, Brett J, Mockford C, Barber R (2011) The GRIPP checklist: Strengthening the quality of patient and public involvement reporting in research. International Journal of Technology Assessment in Health Care 27: 391–399. doi:10.1017/S0266462311000481

- 32. Edelman N, Barron D (2016) Evaluation of public involvement in research: time for a major re-think? Journal of Health Services Research & Policy 21: 209–211.

- 33. Crocker JC, Boylan A-M, Bostock J, Locock L (2017) Is it worth it? Patient and public views on the impact of their involvement in health research and its assessment: a UK-based qualitative interview study. Health Expectations 20: 519–528. doi:10.1111/hex.12479

- 34. Staley K (2015) ‘Is it worth doing?’ Measuring the impact of patient and public involvement in research. Research Involvement and Engagement 1: 6. doi:10.1186/s40900-015-0008-5

- 35. Choy E, Perrot S, Leon T, Kaplan J, Petersel D, et al. (2010) A patient survey of the impact of fibromyalgia and the journey to diagnosis. BMC Health Services Research 10: 102. doi:10.1186/1472-6963-10-102

- 36. Lempp HK, Hatch SL, Carville SF, Choy EH (2009) Patients’ experiences of living with and receiving treatment for fibromyalgia syndrome: a qualitative study. BMC Musculoskeletal Disorders 10: 124–124. doi:10.1186/1471-2474-10-124

- 37. Raymond MC, Brown JB (2000) Experience of fibromyalgia. Qualitative study. Canadian Family Physician 46: 1100–1106.

- 38. Rodham K, Rance N, Blake D (2010) A qualitative exploration of carers’ and ‘patients’ experiences of fibromyalgia: one illness, different perspectives. Musculoskeletal Care 8: 68–77. doi:10.1002/msc.167

- 39. Connolly M, McLean S, Guerin S, Walsh G, Barrett A, et al. (2018) Development and Initial Psychometric Properties of a Questionnaire to Assess Competence in Palliative Care: Palliative Care Competence Framework Questionnaire. American Journal of Hospice and Palliative Medicine®: 1049909118772565.

- 40. Kernohan WG, Brown MJ, Payne C, Guerin S (2018) Barriers and facilitators to knowledge transfer and exchange in palliative care research. BMJ Evidence-Based Medicine 23: 131. doi:10.1136/bmjebm-2017-110865

- 41. Lin F-H, Tsai S-B, Lee Y-C, Hsiao C-F, Zhou J, et al. (2017) Empirical research on Kano’s model and customer satisfaction. PLoS One 12: e0183888. doi:10.1371/journal.pone.0183888

- 42. Boynton PM, Greenhalgh T (2004) Selecting, designing, and developing your questionnaire. BMJ: British Medical Journal 328: 1312–1315. doi:10.1136/bmj.328.7451.1312

- 43. Rowe G, Frewer LJ (2005) A Typology of Public Engagement Mechanisms. Science, Technology, & Human Values 30: 251–290.

- 44. Perlaviciute G, Schuitema G, Devine-Wright P, Ram B (2018) At the Heart of a Sustainable Energy Transition: The Public Acceptability of Energy Projects. IEEE Power and Energy Magazine 16: 49–55.

- 45. Preston CC, Colman AM (2000) Optimal number of response categories in rating scales: reliability, validity, discriminating power, and respondent preferences. Acta Psychologica 104: 1–15.

- 46. Jerusalem RSaM (1995) Generalized Self-Efficacy Scale. Windsor, England: NFER-Nelson.

- 47. van Beuningen JvdH, Karolijne; Moone, Linda (2014) Measuring well-being. An analysis of different response scales. The Hague, Netherlands: Statistics Netherlands.

- 48. Baybutt P (2017) Guidelines for designing risk matrices. Process Safety Progress 37: 49–55.

- 49. Patricia Wilson EM, Julia Keenan, Elaine McNeilly, Claire Goodman, Amanda Howe, Fiona Poland, Sophie Staniszewska, Sally Kendall, Diane Munday, Marion Cowe, and Stephen Peckham. (2015) ReseArch with Patient and Public invOlvement: a RealisT evaluation–the RAPPORT study. Health Services and Delivery Research, No 338. Southampton (UK): NIHR Journals Library.

- 50. de Jong SPL, Smit J, van Drooge L (2016) Scientists’ response to societal impact policies: A policy paradox. Science and Public Policy 43: 102–114.

- 51. Boswell C, Smith K (2017) Rethinking policy ‘impact’: four models of research-policy relations. Palgrave Communications 3: 44.

- 52. Gianos PL (1974) Scientists as Policy Advisers: the Context of Influence. Western Political Quarterly 27: 429–456.

- 53. Osterwalder Alexander PY (2010) Business Model Generation: A Handbook for Visionaries, Game Changers, and Challengers: Wiley.

- 54. Courser ML, Paul J (2012) Item-Nonresponse and the 10-point response scale in telephone surveys. Survey Practice.

- 55. Lyon BKP, Georgi (2016) The art of assessing risk. Professional Safety: 40–51.

- 56. Chow MYK, Quine S, Li M (2010) The benefits of using a mixed methods approach–quantitative with qualitative–to identify client satisfaction and unmet needs in an HIV healthcare centre. AIDS Care 22: 491–498. doi:10.1080/09540120903214371

- 57. Arnette R (2005) Wearing Many Hats. Science Magazine.

- 58. Hollenbach AD (2014) The many hats of an academic researcher. ASBMB Today: American Society for Biochemistry and Molecular Biology.

- 59. Moses H, Martin JB (2011) Biomedical Research and Health Advances. New England Journal of Medicine 364: 567–571.
