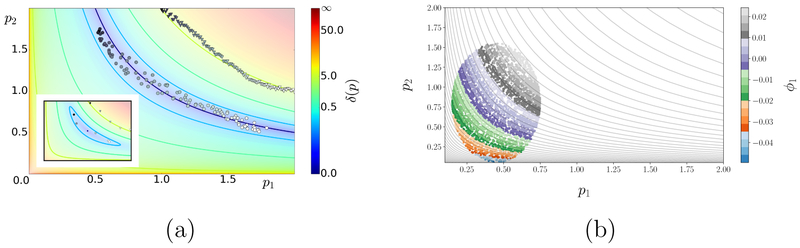

Figure 1:

Exact and learned (global) parameter-space foliations for the model $f_\varepsilon(p_1, p_2) = \big(p_1 p_2 + 2\varepsilon(p_1 - p_2),\ \ln(p_1 p_2),\ (p_1 p_2)^2\big)$. The combination $p_{\text{eff}} = p_1 p_2$ is an effective parameter for the unperturbed ($\varepsilon = 0$) model, since $f_0$ is constant whenever $p_1 p_2$ is constant. (a) Level sets of the cost function $\delta(p) = \lVert f_\varepsilon(p) - f^* \rVert$ for the unperturbed (main) and perturbed (inset) model, with data $f^* = (1, 0, 1)$ corresponding to $(p_1, p_2) = (1, 1)$. Level sets of $p_{\text{eff}}$ can be learned by data fitting: feeding various initializations (triangles) to a gradient descent algorithm for the unperturbed problem yields, approximately, the hyperbola $p_{\text{eff}} = 1$ (circles, colored by initialization). This behavior persists qualitatively for $\varepsilon = 0.2$ despite the existence of a unique minimizer $p^*$, because $\delta(p)$ remains within tolerance over extended, almost neutral sets around $p^*$ that approximately trace the level sets of $p_{\text{eff}}$. (b) Learning $p_{\text{eff}}$ by applying DMAPS, with an output-only-informed metric (see the SI), to input–output data of the unperturbed model. For $\varepsilon = 0$, points on any level curve of $p_{\text{eff}}$ are indistinguishable under this metric, since $f_0$ maps them to the same output. DMAPS, applied to the depicted oval point cloud, recovers those level curves as level sets of the single leading nontrivial DMAPS eigenvector $\phi_1$.
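Both panels can be reproduced in spirit with a short NumPy sketch. It rests on assumptions the caption does not fix: panel (a) is mimicked by fixed-step gradient descent on $\tfrac12\delta(p)^2$, and panel (b) uses a Gaussian kernel on output distances $\lVert f_0(p) - f_0(p') \rVert$ (median bandwidth, $\alpha = 1$ density normalization) as a stand-in for the output-only-informed metric defined in the SI. The function names (`model`, `jacobian`, `gradient_descent`, `dmaps_phi1`), the step size, the oval's size, and the sampling are all hypothetical choices for illustration.

```python
import numpy as np

def model(p, eps):
    """f_eps(p1, p2) = (p1 p2 + 2 eps (p1 - p2), ln(p1 p2), (p1 p2)^2); requires p1 p2 > 0."""
    p1, p2 = p
    q = p1 * p2
    return np.array([q + 2.0 * eps * (p1 - p2), np.log(q), q ** 2])

def jacobian(p, eps):
    """Analytic 3x2 Jacobian of the model with respect to (p1, p2)."""
    p1, p2 = p
    q = p1 * p2
    return np.array([
        [p2 + 2.0 * eps, p1 - 2.0 * eps],
        [1.0 / p1, 1.0 / p2],
        [2.0 * q * p2, 2.0 * q * p1],
    ])

def gradient_descent(p0, f_star, eps, lr=0.005, tol=1e-6, max_iter=20000):
    """Minimize 0.5 * delta(p)^2 with a fixed step (assumed); stop once delta(p) < tol."""
    p = np.array(p0, dtype=float)
    for _ in range(max_iter):
        r = model(p, eps) - f_star          # residual f_eps(p) - f*
        if np.linalg.norm(r) < tol:
            break
        p -= lr * jacobian(p, eps).T @ r    # gradient of 0.5 * ||r||^2 is J^T r
    return p

def dmaps_phi1(outputs, scale=None):
    """Leading nontrivial diffusion-maps eigenvector for a Gaussian kernel on outputs."""
    d2 = np.sum((outputs[:, None, :] - outputs[None, :, :]) ** 2, axis=-1)
    if scale is None:
        scale = np.median(d2)               # assumed bandwidth heuristic
    W = np.exp(-d2 / scale)
    deg = W.sum(axis=1)
    K = W / np.outer(deg, deg)              # alpha = 1 density normalization
    d = K.sum(axis=1)
    S = K / np.sqrt(np.outer(d, d))         # symmetric conjugate of the Markov matrix D^-1 K
    vals, vecs = np.linalg.eigh(S)
    order = np.argsort(vals)[::-1]          # eigenvalues in decreasing order
    phi = vecs[:, order] / np.sqrt(d)[:, None]  # right eigenvectors of D^-1 K
    return phi[:, 1]                        # skip the trivial constant eigenvector

# (a) Data fitting from scattered initializations in the unperturbed model.
f_star = np.array([1.0, 0.0, 1.0])          # data generated by (p1, p2) = (1, 1)
rng = np.random.default_rng(0)
inits = rng.uniform(0.5, 1.5, size=(20, 2))
ends = np.array([gradient_descent(p0, f_star, eps=0.0) for p0 in inits])
print("max |p1 p2 - 1| at endpoints:", np.abs(ends.prod(axis=1) - 1.0).max())

# (b) DMAPS on a hypothetical oval point cloud, using only the outputs f_0(p).
n = 400
angles = rng.uniform(0.0, 2.0 * np.pi, n)
radii = np.sqrt(rng.uniform(0.0, 1.0, n))   # uniform sampling inside the ellipse
cloud = np.column_stack([1.0 + 0.5 * radii * np.cos(angles),
                         1.0 + 0.3 * radii * np.sin(angles)])
outputs = np.array([model(p, 0.0) for p in cloud])
phi1 = dmaps_phi1(outputs)

def ranks(x):
    return np.argsort(np.argsort(x))

# Spearman rank correlation between phi1 and p_eff = p1 p2.
rho = np.corrcoef(ranks(phi1), ranks(cloud.prod(axis=1)))[0, 1]
print("|Spearman(phi1, p1 p2)|:", abs(rho))
```

Because $f_0$ depends on $(p_1, p_2)$ only through $p_1 p_2$, the gradient $J^\top r$ is always parallel to $(p_2, p_1)$, normal to the level curves of $p_{\text{eff}}$, so each run stops on the hyperbola rather than at a common point. In the same way, the kernel cannot distinguish points with equal $p_1 p_2$, which is why $\phi_1$ ends up being a monotone function of $p_{\text{eff}}$ in this sketch.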