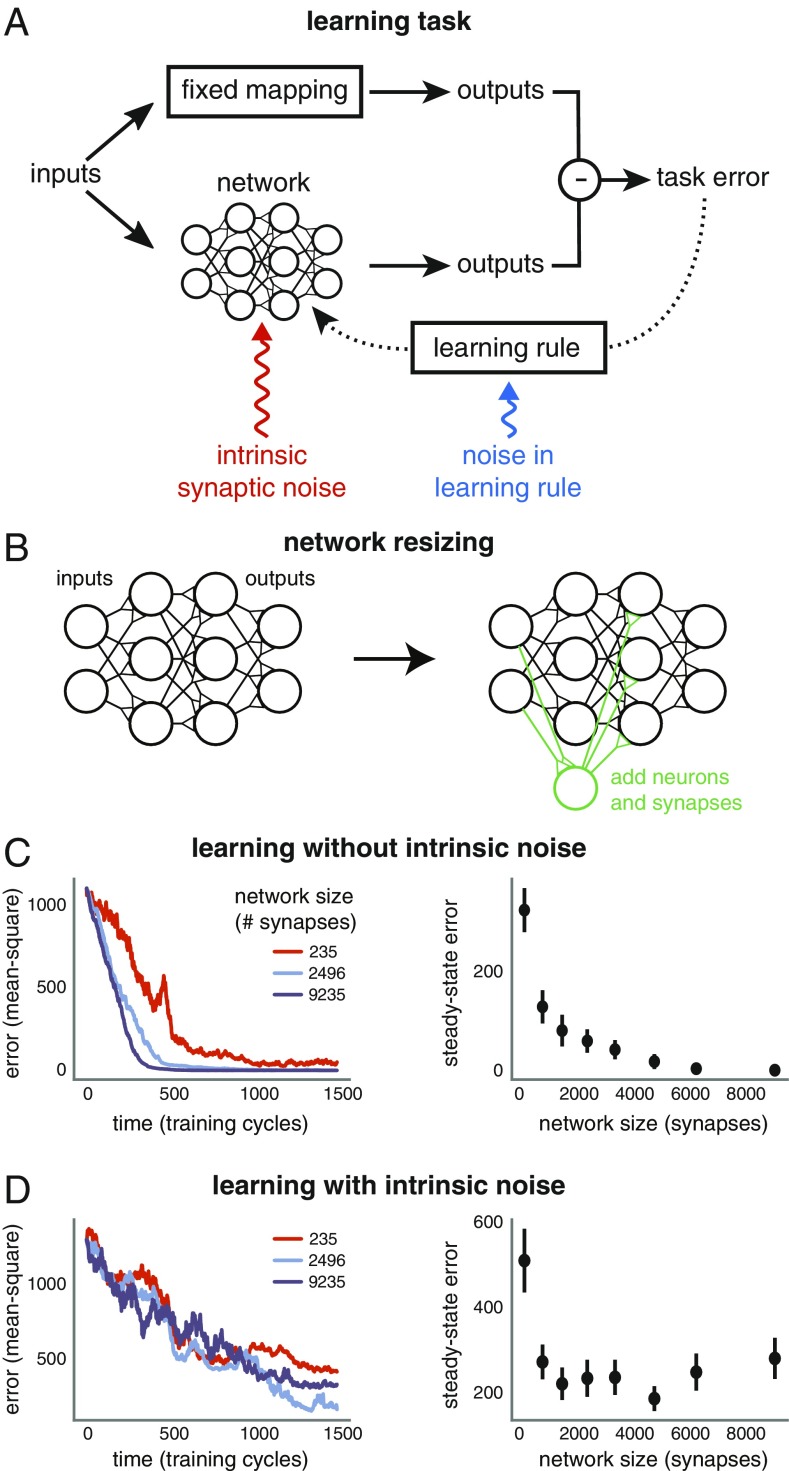

Fig. 2.

(A) Learning task. Neuronal networks are trained to learn an input–output mapping using feedback error and a gradient-based learning rule to adjust synaptic strengths. The feedback is corrupted with tunable levels of noise (blue), reflecting imperfect sensory feedback, imperfect learning rules, and task-irrelevant changes in synaptic strengths. Synaptic strengths are additionally subject to independent internal noise (red), reflecting their inherent unreliability. (B) Network size is increased by adding neurons and synapses to inner layers. (C) Three differently sized networks are trained on the same task, with the same noise-corrupted learning rule. A learning cycle consists of a single input (drawn from a Gaussian distribution) being fed to the network. The gradient of the feedback error for this input, taken with respect to the synaptic strengths (i.e., the stochastic gradient), is then calculated and corrupted with noise (blue component in A). All networks have five hidden layers of equal size. We vary this size from 5 neurons to 45 neurons across the networks. (C, Right) Mean task error after 1,500 learning cycles, computed over 12 simulations. Error bars depict SEM. (C, Left) Task error over time for a single simulation of each network. (D) The same as C, but each synapse is subject to independent internal noise fluctuations in addition to noise in the learning rule (red component in A).
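The learning cycle described in this caption can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' simulation code: the input dimension, the `tanh` nonlinearity, the random linear-`tanh` target mapping, the learning rate, and the noise scales `grad_noise` and `internal_noise` are all hypothetical choices, since the caption does not specify them. Only the five equal-sized hidden layers, the Gaussian inputs, and the 1,500 learning cycles are taken from the caption.

```python
import numpy as np

def init_net(sizes, rng):
    """Random weight matrices for a fully connected network (assumed scaling)."""
    return [rng.normal(0.0, 1.0 / np.sqrt(m), size=(n, m))
            for m, n in zip(sizes[:-1], sizes[1:])]

def forward(weights, x):
    """Return activations of every layer; hidden units use tanh, output is linear."""
    acts = [x]
    for i, W in enumerate(weights):
        z = W @ acts[-1]
        acts.append(z if i == len(weights) - 1 else np.tanh(z))
    return acts

def stochastic_gradient(weights, x, y_target):
    """Backpropagated gradient of E = 0.5 * ||y - y_target||^2 w.r.t. each weight matrix."""
    acts = forward(weights, x)
    delta = acts[-1] - y_target              # dE/dz at the linear output layer
    grads = [None] * len(weights)
    for i in reversed(range(len(weights))):
        grads[i] = np.outer(delta, acts[i])
        if i > 0:                            # propagate through the tanh of layer i
            delta = (weights[i].T @ delta) * (1.0 - acts[i] ** 2)
    return grads

def train(hidden_size, cycles=1500, lr=0.01, grad_noise=0.0,
          internal_noise=0.0, seed=0, task_seed=123):
    """Run noisy online gradient learning and return the task error per cycle."""
    rng = np.random.default_rng(seed)
    n_in, n_out, n_hidden_layers = 5, 1, 5   # n_in and n_out are assumptions
    # Hypothetical target mapping, shared by all network sizes via task_seed.
    teacher = np.random.default_rng(task_seed).normal(size=(n_out, n_in)) / np.sqrt(n_in)
    weights = init_net([n_in] + [hidden_size] * n_hidden_layers + [n_out], rng)
    errors = np.empty(cycles)
    for t in range(cycles):
        x = rng.normal(size=n_in)            # single Gaussian input per cycle
        y_target = np.tanh(teacher @ x)
        errors[t] = 0.5 * np.sum((forward(weights, x)[-1] - y_target) ** 2)
        grads = stochastic_gradient(weights, x, y_target)
        for W, g in zip(weights, grads):
            # Learning-rule noise corrupts the gradient (blue component in A).
            W -= lr * (g + grad_noise * rng.normal(size=g.shape))
            # Independent internal noise perturbs each synapse (red component in A).
            W += internal_noise * rng.normal(size=W.shape)
    return errors
```

Calling `train(5, grad_noise=0.5)` and `train(45, grad_noise=0.5)` would correspond loosely to the setting of panel C, and passing a nonzero `internal_noise` to that of panel D. Averaging the final error over several values of `seed` mirrors the caption's mean over 12 simulations. Because every numerical choice here is illustrative, the resulting curves should not be expected to match the figure.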