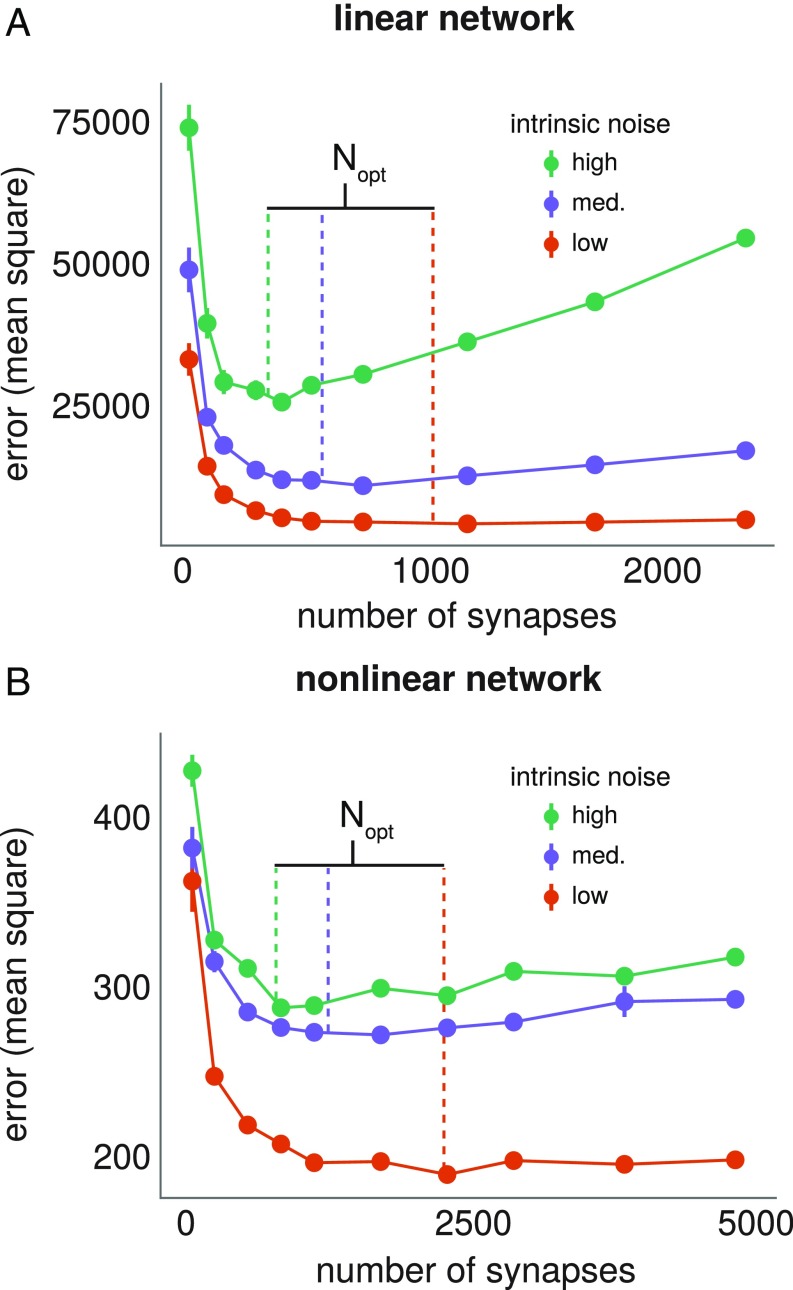

Fig. 6.

Testing the analytic prediction of optimal network size for linear and nonlinear networks. (A and B) Linear (A) and nonlinear (B) networks of different sizes are trained for 1,500 learning cycles of length . Mean steady-state error over 12 repeats is plotted against network size. Error bars denote SEM. Colored lines mark the optimal network sizes predicted a priori from Eqs. 17 and 19 for the linear and nonlinear examples, respectively. (A) All linear networks have a 2:1 ratio of inputs to outputs. On each repeat, networks of all considered sizes learn the same mapping, embedded in the appropriate input/output dimension (detailed in SI Appendix, Learning in a Linear Network). The learning rule uses (low intrinsic noise), (medium intrinsic noise), and (high intrinsic noise). (B) Nonlinear networks have sigmoidal nonlinearities at each neuron and a single hidden layer (Materials and Methods). All networks have 10 input and 10 output neurons and learn the same task. The number of neurons in the hidden layer is varied from 5 to 120. The learning rules all use and . The value of is set to 0.03, 0.04, and 0.05 in the low, medium, and high intrinsic noise cases, respectively.
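As an illustration of the panel B setup and of how each plotted point is summarized, the following is a minimal sketch, not the authors' code. It assumes a plain 10-H-10 network with a logistic sigmoid at every hidden and output neuron, and a Gaussian weight initialization whose scale is a hypothetical choice. The learning rule and its noise parameters, which are not recoverable from this caption, are deliberately not modeled. The helper names `init_network`, `forward`, and `mean_and_sem` are hypothetical.

```python
import math
import random
import statistics


def sigmoid(x: float) -> float:
    """Logistic sigmoid, applied at every neuron (hidden and output)."""
    return 1.0 / (1.0 + math.exp(-x))


def init_network(n_in: int, n_hidden: int, n_out: int, rng: random.Random):
    """Random weights for a single-hidden-layer network.

    The 1/sqrt(fan-in) Gaussian scale is a hypothetical choice, not from the source.
    """
    w1 = [[rng.gauss(0.0, 1.0 / math.sqrt(n_in)) for _ in range(n_in)]
          for _ in range(n_hidden)]
    w2 = [[rng.gauss(0.0, 1.0 / math.sqrt(n_hidden)) for _ in range(n_hidden)]
          for _ in range(n_out)]
    return w1, w2


def forward(w1, w2, x):
    """Propagate input x through the sigmoidal hidden layer and sigmoidal output layer."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w1]
    return [sigmoid(sum(w * hi for w, hi in zip(row, h))) for row in w2]


def mean_and_sem(errors):
    """Mean and standard error of the mean (SEM) of steady-state errors across repeats."""
    m = statistics.mean(errors)
    s = statistics.stdev(errors) / math.sqrt(len(errors))
    return m, s


if __name__ == "__main__":
    rng = random.Random(0)
    # Hidden-layer sizes within the 5 to 120 range of panel B (these particular values are illustrative).
    for n_hidden in (5, 40, 120):
        w1, w2 = init_network(10, n_hidden, 10, rng)
        y = forward(w1, w2, [0.5] * 10)
        print(n_hidden, len(y))
```

In a full reproduction, each hidden-layer size would be trained 12 times, and the 12 steady-state errors would be passed to `mean_and_sem` to obtain one point and its error bar.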