Abstract

Brain areas that control gaze are also recruited for covert shifts of spatial attention1–9. In the external space of perception, there is a natural, ecological link between the control of gaze and of spatial attention, since information sampled at covertly attended locations can inform where to look next2,10,11. Attention can also be directed internally to representations held within the spatial layout of visual working memory12–16. In such cases, the incentive for using attention to direct gaze disappears since there are no external targets to scan. Here we investigate whether the brain’s oculomotor system also participates in attention within the internal space of memory. Paradoxically, we reveal this participation through gaze behaviour itself. We demonstrate that selecting an item from visual working memory biases gaze in the direction of the memorised location of that item – despite there being nothing to look at and even though location memory was never explicitly probed. This retrospective ‘gaze bias’ occurs only when an item is not already in the internal focus of attention, and predicts the performance benefit associated with the focusing of internal attention. We conclude that the oculomotor system also participates in the focusing of attention within memorised space, leaving traces all the way to the eyes.

We report four complementary experiments investigating the recruitment of the brain’s oculomotor system during attentional focusing within the spatial layout of visual working memory. In each experiment, we probed this involvement in the most direct way possible: by investigating gaze behaviour itself. In doing so, we capitalised on the observation that recruitment of the brain’s oculomotor system leaves peripheral traces, even during covert task demands (17 for discussion of such peripheral traces).

Each experiment involved keeping multiple coloured and oriented bars in memory in order to reproduce the orientation (experiments 1-4) or colour (experiment 4) of one of them after a short memory delay in which only the fixation cross remained on the screen. The bar to be reported was always indicated by a change in the colour (experiments 1-4) or shape (experiment 4) of the central fixation cross (the memory probe). Response initiation (a key-press in experiment 1, and a movement of the computer mouse in experiments 2-4) prompted the appearance of a response dial which also appeared centrally. In all four experiments, the spatial locations of the memory items were purely incidental and were not strictly required for task performance at any point (colour-orientation bindings were sufficient). Because the probe and response-dial always occurred centrally, the anticipation and processing of these stimuli did not depend on item location either.

In experiment 1 (Fig. 1a), each trial contained one left and one right item that were equally likely to be probed for report after a 2 to 2.5 s memory delay. On average, participants required 758.41 ± 56.69 ms (mean ± s.e.m.) after probe onset to initiate their response, and reproduced the probed item’s orientation with an average absolute error of 14.22 ± 0.91 degrees.

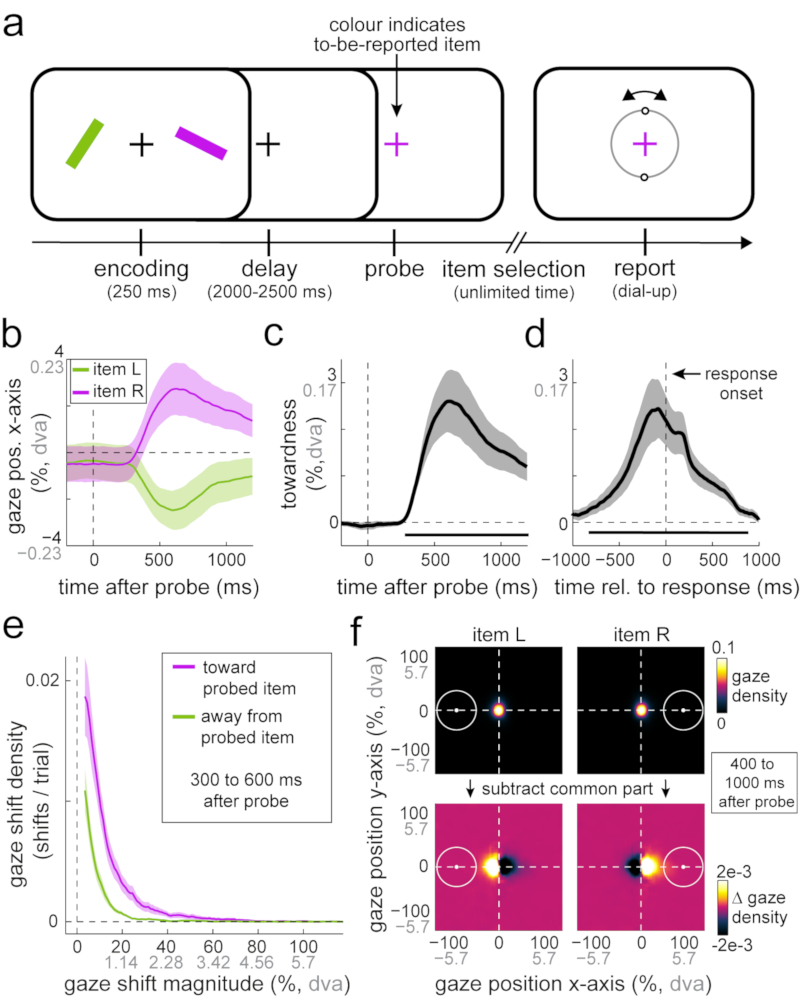

Fig 1. Selection from working memory biases gaze toward the location of memorised visual items.

a) Task schematic of experiment 1. Participants memorised two differently-coloured oriented bars in order to reproduce the orientation of either bar after a 2 to 2.5 s delay. A colour-change of the central fixation cross prompted participants to report the orientation of the colour-matching memory item. The central response dial appeared on the screen upon response initiation. b) Average gaze position (in the horizontal plane) after the probe, as a function of the memorised location of the probed item. c) Gaze bias toward the probed item’s memorised location relative to probe onset. d) Same as c, but relative to response onset. Horizontal bars indicate significant temporal clusters; cluster-based permutation tests of the sum of t-values across time points; probe-locked data in panel c: ∑T= 3732.1, 95% of permutations between -864.1 and 826.6, P < 0.001; decision-locked data in panel d: ∑T = 5824.8, 95% of permutations between -1262.1 and 1224.4, P < 0.001. e) Density of gaze shifts toward and away from the probed item’s memorised location, as a function of shift magnitude (see also Supplementary Fig. 2). f) Density of two-dimensional gaze position following probes of left and right items, before and after subtraction of the density values that were shared between left and right item probes. White circles indicate the area occupied by the probed item at encoding. Percentages are defined relative to the items’ centre locations at encoding, with 100% corresponding to approximately 5.7 degrees visual angle (denoted “dva”). To enhance interpretability, we depict both metrics on all axes that involve gaze position or gaze shift magnitude information (percentage in black; degrees of visual angle in grey). Shaded areas in all panels represent ± 1 s.e.m (n = 23).

Though item location was never probed, we observed a clear systematic gaze shift in the direction of the original (encoded) location of the probed item. When the probed memory item had previously occupied the left position on the screen, gaze became biased to the left; whereas when the probed memory item had been on the right, gaze became biased to the right (Fig. 1b). To increase sensitivity and interpretability, we combined these time courses in a single metric of ‘towardness’ (Fig. 1c). A cluster-based permutation analysis of this time course (which deals with the multiple comparisons along the time axis18) showed that this effect was highly robust (horizontal black line in Fig. 1c; ∑T= 3732.1, 95% of permutations between -864.1 and 826.6, n = 23 participants, P < 0.001). Though the average magnitude of this bias was relatively small (peaking at 2.62% toward the item’s memorised location, corresponding to approximately 0.15 degrees visual angle; Fig. 1c), this gaze bias was positive (toward>away) in every participant (Supplementary Figs. 1 and 2b).
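To make the ‘towardness’ metric and the two units concrete, the following is a minimal sketch rather than the analysis code used in the study. It assumes that positive horizontal gaze values lie to the right of fixation and that towardness is half the difference between the right-probe and left-probe time courses; both the function names and this exact definition are illustrative assumptions. The conversion factor (100% corresponding to approximately 5.7 degrees visual angle) is taken from the Fig. 1 caption.

```python
# 100% corresponds to approximately 5.7 degrees of visual angle (Fig. 1 caption).
ITEM_CENTRE_DVA = 5.7


def percent_to_dva(percent):
    """Convert a gaze value in % of the item-centre distance to degrees visual angle."""
    return percent / 100.0 * ITEM_CENTRE_DVA


def towardness(gaze_left_probe, gaze_right_probe):
    """Combine two horizontal gaze time courses into a single towardness time course.

    Assumed (hypothetical) definition: +x is right of fixation, so gaze toward the
    probed item is +x after right-item probes and -x after left-item probes.
    """
    return [(r - l) / 2.0 for l, r in zip(gaze_left_probe, gaze_right_probe)]


# Made-up illustrative values in %, not data from the study.
left = [0.0, -1.0, -2.5]
right = [0.0, 1.5, 2.5]
bias = towardness(left, right)

# The reported peak bias of 2.62% expressed in degrees visual angle.
peak_dva = percent_to_dva(2.62)
```

Under these assumptions, a positive towardness value at a given time point means that gaze was, on average, displaced in the direction of the probed item’s memorised location.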

To characterise the temporal window of this gaze bias in relation to our task, we additionally re-aligned the data to response onset (Fig. 1d). This revealed that the bias is particularly prominent just before response onset and dissipates soon after the dial-up of the selected item’s orientation commences. This timing thus coincides with the period of attentional item selection (during which only the fixation cross was present on the screen). Indeed, its onset and time course are well in line with the time course of the voluntary deployment of attention (as, for example, in 19–21).

To understand how the frequency and magnitude of gaze shifts contributed to the overall bias, we zoomed into the window during which the bias emerged (300 to 600 ms post-probe; the window in which the putative shifts of gaze occurred; Supplementary Fig. 2a) and constructed a density plot for gaze shifts arranged by magnitude (Fig. 1e). This confirmed a higher proportion of gaze shifts toward (vs. away from) the probed item’s memorised location. At the same time, this revealed that this bias is composed of gaze shifts of relatively small magnitude. As Figure 1e makes clear, participants virtually never looked all the way to the probed item’s original location (denoted 100% in Fig. 1e, and corresponding to 5.7 degrees visual angle; Supplementary Fig. 3 for the relevant calibration data). The gaze bias reported here therefore differs from earlier reports of gaze re-visits to previously occupied locations during long-term memory retrieval or imagery of visual images22–26. Instead, it is in line with the increased propensity for small gaze shifts (microsaccades) to occur in the direction of covertly attended locations17,27–29 – here shown for attended locations within the internal space of memory. A complementary quantification of average gaze shift frequency, shift magnitude, and duration of ensuing fixations (Supplementary Fig. 2b) confirmed that the identified gaze position bias primarily reflected an increased number of gaze shifts toward (vs. away from) the memorised location of the probed item (Mtoward = 0.245 detected shifts per trial vs. Maway = 0.096 shifts per trial; t(22) = 8.058, P < 0.001, d = 1.68, 95%CI of d between 1.032 and 2.313), supplemented by a modest increase in their average magnitude (Mtoward = 12.47%, 0.711 dva vs. Maway = 10.382%, 0.592 dva; t(22) = 3.576, P = 0.002, d = 0.746, 95%CI of d between 0.275 and 1.203). We did not find evidence for longer fixation durations following gaze shifts toward vs. away from the memorised location of the probed item (Mtoward = 363.99 ms vs. Maway = 360.434 ms; t(22) = 0.25, P = 0.805, d = 0.052, 95%CI of d between -0.357 and 0.461; Supplementary Fig. 2b for a graphical depiction of these data).
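The paired comparisons above can be sketched as follows. This is a minimal illustration, not the authors’ analysis code: it assumes that Cohen’s d is the mean of the paired differences divided by their standard deviation, a definition under which d equals t divided by the square root of the number of participants. The reported values appear consistent with that reading (for example, 8.058 / √23 ≈ 1.68, with n = 23 as given in the Fig. 1 caption). The input values are made up.

```python
import math


def paired_t_and_d(a, b):
    """Paired-samples t-value, degrees of freedom, and Cohen's d for a minus b.

    Assumed definition: d = mean(differences) / sd(differences), so t = d * sqrt(n).
    """
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    mean = sum(diffs) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in diffs) / (n - 1))
    d = mean / sd
    t = d * math.sqrt(n)
    return t, n - 1, d


# Made-up shift rates (detected shifts per trial) for five hypothetical participants.
toward = [0.30, 0.22, 0.25, 0.28, 0.20]
away = [0.10, 0.12, 0.05, 0.08, 0.10]
t, df, d = paired_t_and_d(toward, away)

# Consistency check on a reported value, assuming d = t / sqrt(n) with n = 23.
d_from_report = 8.058 / math.sqrt(23)
```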

Because this bias is carried by gaze shifts of relatively small magnitude, it may have often gone unnoticed in previous work – such as in control analyses that only consider larger gaze shifts, or gaze shifts whose end-location is in the vicinity of the item’s memorised location. This is nicely illustrated by the heat maps (2D density plots) of gaze position in the post-probe window in which the gaze bias is most pronounced (400 to 1000 ms; note that this window is different than the 300 to 600 ms window used for the analysis of the shifts-of-gaze that inevitably precede the position-of-gaze bias that we depict here). When considering only overall fixation within one experimental condition (Fig. 1f, top row), it appears that participants are maintaining fixation really well, and similarly after probes of left and right items. However, merely subtracting the common part (average) from both heat maps (leaving only the difference in gaze density following left vs. right item probes; Fig. 1f, bottom row) reveals that this is not the case: gaze visits clearly prevail in the direction of the probed item’s memorised location.
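The common-part subtraction described above can be sketched in a few lines. This is an illustrative sketch under the assumption that the ‘common part’ is the bin-wise average of the density maps across probe conditions; the function name and the toy 1 × 3 maps are hypothetical and do not come from the study.

```python
def subtract_common_part(maps):
    """Subtract the across-condition average from each 2D density map.

    maps: dict mapping a condition name to a 2D list (rows x columns) of densities.
    Returns a dict with the same keys holding the difference maps.
    """
    names = list(maps)
    n_rows = len(maps[names[0]])
    n_cols = len(maps[names[0]][0])
    # Bin-wise average over conditions: the part shared by all maps.
    common = [[sum(maps[k][r][c] for k in names) / len(names)
               for c in range(n_cols)] for r in range(n_rows)]
    return {k: [[maps[k][r][c] - common[r][c] for c in range(n_cols)]
                for r in range(n_rows)] for k in names}


# Made-up densities for three bins: left of fixation, at fixation, right of fixation.
maps = {
    "left_probe": [[0.25, 0.625, 0.125]],
    "right_probe": [[0.125, 0.625, 0.25]],
}
difference = subtract_common_part(maps)
```

In this toy example both maps are dominated by the central bin, so fixation looks equally good in the two conditions; only the difference maps expose the small excess of density on the probed side.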

If the identified gaze bias reflects the attentional selection and focusing of memory items, then this bias should occur whenever an item is selected, even if the selected item has to be held in memory for another delay before a response is prompted. Moreover, it should only occur when the probed item is not already in the focus of attention.

To investigate these predictions, experiment 2 employed a retro-cue task with a four-item array (Fig. 2a). Informative (coloured) retro-cues during the retention period informed participants, with 100% validity, which item would be probed after a subsequent delay period. In contrast, neutral (grey) retro-cues also constituted a transient change of the fixation cross, but gave no information regarding which item would most likely be probed after the second delay period.

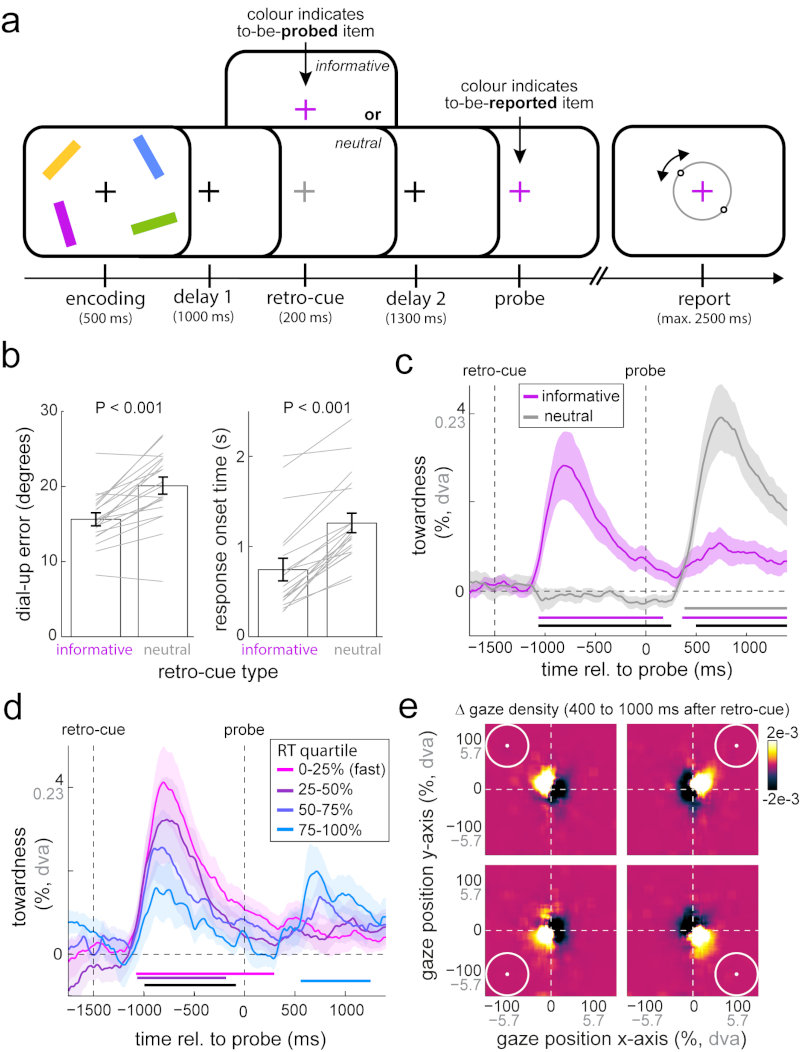

Fig 2. Gaze bias reflects attentional focusing of currently unfocused memory items.

a) Experiment 2 involved the same orientation reproduction working memory task as experiment 1, but this time with four memory items and with a retro-cue manipulation during the delay period. Informative (coloured) retro-cues informed which bar would be probed after another delay period, whereas neutral (grey) retro-cues were uninformative. b) Average reproduction errors and response onset times for trials with informative and neutral retro-cues. Grey lines indicate individual participant data. Paired-samples t-tests; errors: t(19) = -6.324; P < 0.001, d = -1.414, 95%CI of d between -2.031 and -0.779; response onset times: t(19) = -9.978; P < 0.001, d = -2.231, 95%CI of d between -3.052 and -1.394. c) Gaze bias toward the probed item’s memorised location for trials with informative and neutral retro-cues. Horizontal bars denote significant clusters for both retro-cue conditions separately, as well as for their difference in black; cluster-based permutation tests of the sum of t-values across time points; informative (purple): ∑T left cluster = 3986.6, ∑T right cluster = 2781, 95% of permutations between -1313.4 and 1427.5, P left cluster < 0.001, P right cluster = 0.006; neutral (grey): ∑T = 5144.6, 95% of permutations between -1430.1 and 1466, P < 0.001; difference (black): ∑T left cluster = 3884.4, ∑T right cluster = -4516.7; 95% of permutations between -1593 and 1561.6, P < 0.001 (both clusters). d) Gaze bias in informative retro-cue trials as a function of response onset time after the probe. The black horizontal bar denotes the parametric effect; cluster-based permutation test of the sum of regression t-values across time: ∑T = -3067.3, 95% of permutations between -1091.1 and 1129.3, P = 0.002. e) Density of gaze position after informative retro-cues as a function of the memorised location of the cued item, following subtraction of the common part of all four maps (Supplementary Fig. 4 for data before and after subtraction). Conventions as in Fig. 1. Error bars and shaded areas in all panels represent ± 1 s.e.m (n = 20).

Retro-cues were highly effective in facilitating behavioural performance (Fig. 2b). Average reproduction errors were vastly reduced in trials with informative vs. neutral retro-cues (errors: t(19) = -6.324; P < 0.001, d = -1.414, 95%CI of d between -2.031 and -0.779) and this facilitation was even clearer in response onset times (t(19) = -9.978; P < 0.001, d = -2.231, 95%CI of d between -3.052 and -1.394).

Having established the utility of the retro-cues, we turned to the gaze data. For simplicity, we again focused on the aggregate measure of towardness. Informative retro-cues also elicited a robust gaze bias (Fig. 2c; cluster-based permutation test of the sum of t-values across time points: ∑T= 3986.6, 95% of permutations between -1313.4 and 1427.5, n = 20 participants, P < 0.001). Thus this bias is not strictly linked to responding, but also occurs when items are brought into focus during the retention period. No systematic bias could be observed after neutral retro-cues, because these did not afford selection of any particular item. At the time of the probe, a robust gaze bias was measured in trials with neutral retro-cues, but this was substantially reduced in trials with an informative retro-cue (black horizontal line in Fig. 2c; cluster-based permutation test of the sum of t-values across time points: ∑T= -4516.7, 95% of permutations between -1593 and 1561.6, n = 20 participants, P < 0.001). Having previously focused on the item to be retrieved, in informative retro-cue trials, the probe provided only redundant information for selecting the relevant memorandum. Thus, this gaze bias appears to reflect the process of item selection and focusing that occurs when the to-be-selected item is not already in the internal focus of attention. This is also in line with the observation that the observed gaze bias is relatively transient, even after informative retro-cues that require the item to be held in the sustained focus of attention throughout the second retention period (Fig. 2c). This gaze bias thus marks the process of focusing of attention rather than the focused attentional status of an item per se.
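For readers unfamiliar with cluster-based permutation tests on the sum of t-values across time points, the following is a simplified sketch of the general idea, not the pipeline used for the reported statistics. Several choices are assumptions made for illustration: a one-sample t-test against zero at each time point, a fixed cluster-forming threshold, a null distribution built by randomly sign-flipping each participant’s whole time course, and a P-value computed as (exceedances + 1) / (permutations + 1). All function names and the toy data are hypothetical.

```python
import math
import random


def t_values(data):
    """One-sample t-value against zero at each time point (data: participants x time)."""
    n = len(data)
    out = []
    for t in range(len(data[0])):
        col = [row[t] for row in data]
        mean = sum(col) / n
        var = sum((v - mean) ** 2 for v in col) / (n - 1)
        out.append(mean / math.sqrt(var / n))
    return out


def cluster_sums(tvals, threshold):
    """Sum t-values within runs of adjacent, same-sign, supra-threshold time points."""
    sums, current, sign = [], 0.0, 0
    for tv in tvals:
        s = 1 if tv > threshold else -1 if tv < -threshold else 0
        if s != 0 and s == sign:
            current += tv
        else:
            if sign != 0:
                sums.append(current)
            current, sign = (tv, s) if s != 0 else (0.0, 0)
    if sign != 0:
        sums.append(current)
    return sums


def cluster_permutation_test(data, threshold, n_perm=1000, seed=0):
    """Return (largest observed cluster sum, permutation P-value)."""
    rng = random.Random(seed)
    observed = cluster_sums(t_values(data), threshold)
    obs_max = max(observed, key=abs) if observed else 0.0
    exceed = 0
    for _ in range(n_perm):
        # Flip the sign of each participant's entire time course at random.
        flipped = []
        for row in data:
            flip = rng.choice((-1.0, 1.0))
            flipped.append([flip * v for v in row])
        null = cluster_sums(t_values(flipped), threshold)
        null_max = max((abs(c) for c in null), default=0.0)
        if null_max >= abs(obs_max):
            exceed += 1
    return obs_max, (exceed + 1) / (n_perm + 1)


# Made-up towardness data: 8 participants x 6 time points, effect at time points 2-4.
data = []
for i in range(8):
    noise = 0.1 * (i - 3.5)
    effect = 1.0 + 0.1 * i
    data.append([noise, -noise, effect, effect + 0.2, effect - 0.1, 0.5 * noise])

# 2.365 is the two-sided 5% critical t-value for 7 degrees of freedom.
obs_max, p = cluster_permutation_test(data, threshold=2.365)
```

Because the statistic is the largest cluster sum over the whole time axis, a single test covers all time points, which is how this family of tests handles multiple comparisons along time.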

Under this focusing account, the observation of residual gaze bias after the probe (in informative retro-cue trials) may reflect the occasional failure to use the retro-cue effectively to place the cued item in the focus of attention. To investigate this possibility, we sorted informative retro-cue trials into quartiles according to cue benefit, as indexed by response-onset times – given that the largest effect of informative retro-cues was observed on response times (Fig. 2b). Figure 2d shows that slower trials indeed showed a reduced average gaze bias (peak-bias of 1.585% for the slowest trials, compared to 4.12% for the fastest trials) following the informative retro-cues (cluster-based permutation test of the sum of t-values of the parametric effect across time points: ∑T= -3067.3, 95% of permutations between -1091.1 and 1129.3, n = 20 participants, P = 0.002; black horizontal line in Fig. 2d). Moreover, as predicted, only the slowest trials showed a notable and significant gaze bias following the probe (cluster-based permutation test of the sum of t-values across time points: ∑T= 1893.9, 95% of permutations between -1094.3 and 1010.8, n = 20 participants, P = 0.004; light blue horizontal line in Fig. 2d). The gaze bias after the retro-cue thus marks the success of this cue to facilitate subsequent performance by reducing the need to (re-)focus the to-be-reported item after the probe.
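The quartile sorting described above can be sketched as follows. This is a minimal illustration with made-up trials; it assumes that trials are ranked by response onset time and divided into four bins of near-equal size, and the function names are hypothetical. Whether binning was done within each participant is not specified here and is not modelled.

```python
def split_into_quartiles(trials, key):
    """Sort trials by `key` and split them into four near-equal bins (fastest first)."""
    ordered = sorted(trials, key=key)
    n = len(ordered)
    edges = [round(q * n / 4) for q in range(5)]
    return [ordered[edges[q]:edges[q + 1]] for q in range(4)]


def mean(values):
    """Arithmetic mean of a non-empty list."""
    return sum(values) / len(values)


# Made-up trials: (response onset time in ms, towardness after the retro-cue in %).
trials = [(520, 4.0), (910, 1.0), (640, 3.0), (480, 5.0),
          (1020, 2.0), (700, 3.0), (830, 1.0), (590, 3.0)]
bins = split_into_quartiles(trials, key=lambda trial: trial[0])
bias_per_bin = [mean([bias for _, bias in b]) for b in bins]
```

In this toy data set the average bias falls from the fastest to the slowest bin, mirroring the direction of the parametric effect reported in Fig. 2d.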

Heat maps of the retro-cue-induced gaze bias in the 400 to 1000 ms window after the informative retro-cue (Fig. 2e) confirmed the same nature of this bias as in experiment 1. They further demonstrate that this bias occurs along both the horizontal and the vertical plane, thus adhering to a two-dimensional spatial-layout of visual working memories. As in Figure 1f, this only became clear after subtracting the common part from all four heat maps (Supplementary Fig. 4 for a comparison of heat maps before and after common-part subtraction).

Experiments 1 and 2 show that attentional selection from working memory leads to involuntary shifts in gaze. In experiment 3 we asked whether the reverse can be demonstrated too: whether involuntary (by which we mean not deliberate or goal-directed) shifts in gaze can also trigger the attentional selection of an item in visual working memory. To this end, we introduced a task manipulation to induce a small gaze shift during the retention period. We did this by temporarily displacing the central fixation (for 500 ms; before repositioning it in the centre) slightly toward or away from the memorised location of the item that would subsequently be probed (Fig. 3a). Fixation displacements were never predictive of which item would be probed. We chose the magnitude of this fixation displacement (approximately 0.43 degrees visual angle; 7.5% toward the centre of either bar) to render shifts in gaze comparable to those observed during attentional selection of items from working memory (see Fig. 1e and Supplementary Fig. 2). If gaze shifts of this order were sufficient to trigger attentional selection of the side-congruent item, then we predicted that congruent fixation displacements should act similarly to informative retro-cues: facilitating performance (compared to incongruent trials) and reducing the subsequent gaze bias after the probe (as following informative retro-cues in experiment 2).

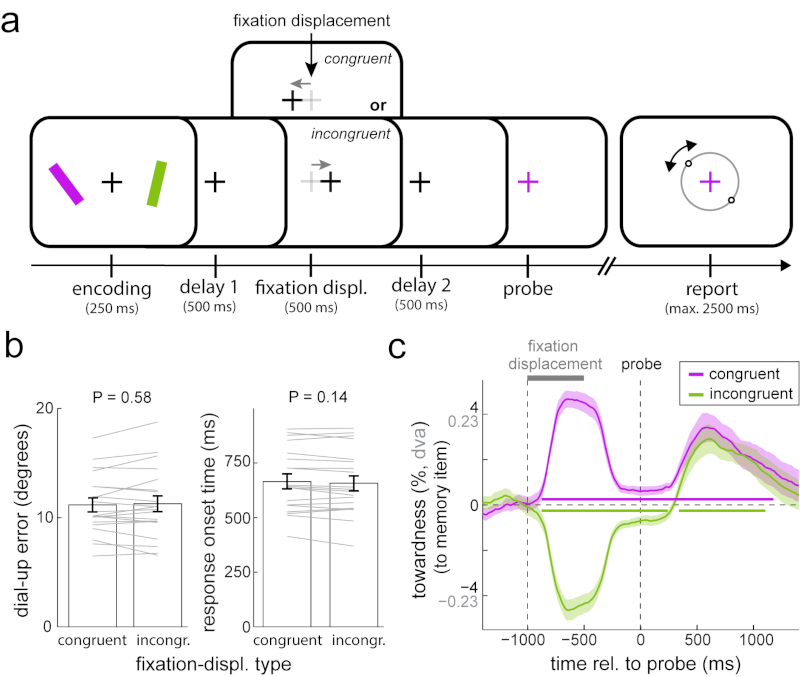

Fig 3. Involuntary gaze shifts are insufficient to trigger attentional facilitation in visual working memory.

a) Experiment 3 involved the same orientation reproduction working memory task as experiments 1 and 2, but this time with a fixation displacement manipulation during the delay period. Fixation displacements were equally often in the same (congruent) or opposite (incongruent) direction as the memorised location of the to-be-probed item, and were therefore uninformative. b) Average reproduction errors and response onset times for trials with congruent and incongruent fixation displacements. Paired-samples t-tests; errors: t(19) = -0.564, P = 0.579, d = -0.126, 95%CI of d between -0.565 and 0.316; response onset times t(19) = 1.553, P = 0.137, d = 0.347, 95%CI of d between -0.109 and 0.795. c) Gaze bias toward the probed item’s memorised location for trials with congruent and incongruent fixation displacements. Horizontal bars indicate significant temporal clusters; cluster-based permutation tests of the sum of t-values across time points; congruent (purple): ∑T= 11700, 95% of permutations between -1506.8 and 1549.3, P < 0.001; incongruent (green): ∑T left cluster = -7360.3, ∑T right cluster = 3037.8, 95% of permutations between -1629.8 and 1575.3, P left cluster < 0.001, P right cluster = 0.002. Conventions as in Fig. 1. Error bars and shaded areas in all panels represent ± 1 s.e.m (n = 20).

Figure 3c shows that the fixation displacement manipulation yielded a reliable shift in gaze toward (congruent) or away from (incongruent) the item that would subsequently be probed (e.g. comparing the average ‘towardness’ in the period between fixation displacement and probe onset yielded a highly reliable difference: t(19) = 11.197; P < 0.001, d = 2.504, 95%CI of d between 1.593 and 3.39). This was the case even though participants were instructed to ignore this displacement. Despite this successful implementation of our manipulation, we did not observe either of the predicted effects. Performance was virtually indistinguishable between congruent and incongruent trials (Fig. 3b; errors: t(19) = -0.564, P = 0.579, d = -0.126, 95%CI of d between -0.565 and 0.316) and, if anything, response times were slightly (though not significantly) longer for congruent trials (t(19) = 1.553, P = 0.137, d = 0.347, 95%CI of d between -0.109 and 0.795). Complementing this absence of the predicted performance effect, we also found no evidence for the predicted reduction of gaze bias following the congruent probe (Fig. 3c).

These data thus suggest that involuntary shifts of gaze, of comparable magnitude to those observed during attentional item selection, may be insufficient to cause the type of attentional shifts that bring an item into the focus of attention and keep it there to benefit subsequent performance. Of course, though, it remains possible that these predicted effects would have been manifest with particular variations of our fixation displacement manipulation, such as with different displacement timing or magnitude, or with explicit instructions to follow this displacement. In line with the latter, goal-directed gaze shifts of larger magnitude have previously been shown to facilitate memory performance for gaze-congruent items30,31 (see also 32 for related results).

These data nevertheless help convey our main contribution, which is the demonstration of a directional gaze bias during the attentional focusing of items in visual working memory. Namely, they re-confirm that this bias is highly replicable and show that it occurs even when the eyes have only just returned from a prior shift of gaze.

In the three experiments described so far, participants always received a colour probe that indexed the relevant memory item, and were asked to retrieve and report its memorised orientation. Though item orientation was independent of item location (the latter being the key variable in our gaze analyses), it may be that the gaze-bias effect is specific to when individuals are asked to retrieve and report orientation. For example, orientation could be particularly strongly coupled to information about spatial location, provided orientation is itself also a spatial feature. Alternatively, the gaze bias may reflect a general phenomenon that occurs whenever individuals retrieve and report features of items in visual working memory, even when these features are intrinsically non-spatial, such as colour.

To test the generality of the gaze bias effect, in experiment 4 (Fig. 4a) we added a condition in which we reversed the roles of item colour and orientation as features used for cueing and reporting. In the new ‘colour blocks’ participants viewed two bars of distinct orientations and reproduced the colour of the item that was probed through its orientation. We compared this with the ‘orientation blocks’ in which participants reported the orientation of the item probed through its colour (as in experiments 1-3). For comparability, items in orientation blocks (Fig. 4a, top) had 2 unique colours (green, purple) and were drawn from 180 unique orientations, while items in colour blocks (Fig. 4a, bottom) had 2 unique orientations (vertical, horizontal) and were drawn from 180 unique colours. We found no statistically significant difference in reproduction errors between orientation and colour reports (Fig. 4b; t(19) = -0.565, P = 0.579, d = -0.126, 95%CI of d between -0.565 and 0.315) though response onset times for colour reports were slower than for orientation reports (Fig. 4b; t(19) = 6.935, P < 0.001, d = 1.551, 95%CI of d between 0.885 and 2.198).

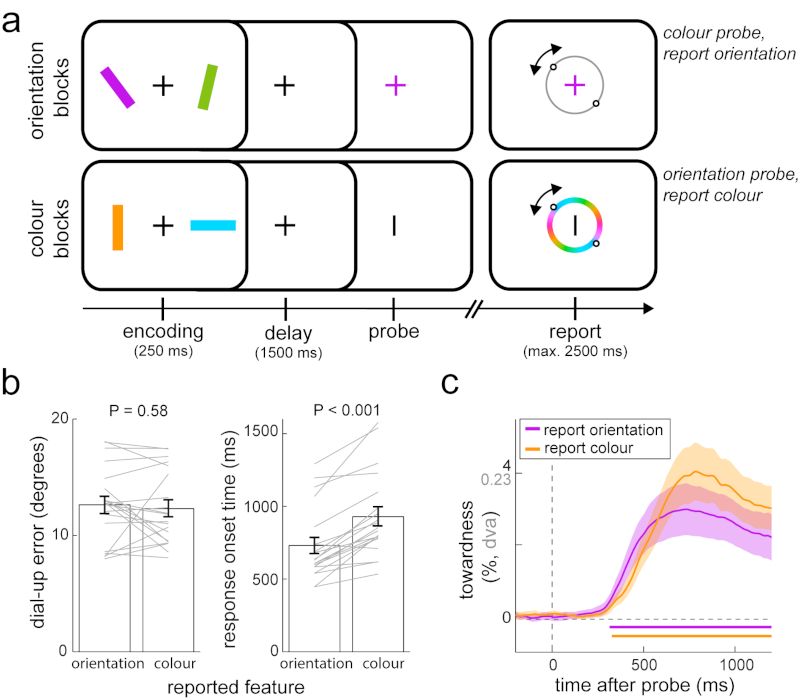

Fig 4. Gaze bias generalises across visual features.

a) Experiment 4 consisted of two types of blocks. Orientation blocks involved the same orientation reproduction working memory task as experiments 1-3. In colour blocks we reversed the roles played by item colour and orientation – we used orientation (vertical or horizontal) to probe the to-be-selected memory item, of which participants were required to then report the colour. b) Average reproduction errors and response onset times for blocks with orientation and colour reports. Paired-samples t-tests; errors: t(19) = -0.565, P = 0.579, d = -0.126, 95%CI of d between -0.565 and 0.315; response onset times: t(19) = 6.935, P < 0.001, d = 1.551, 95%CI of d between 0.885 and 2.198. c) Gaze bias toward the probed item’s memorised location for blocks with orientation and colour reports. Horizontal bars indicate significant temporal clusters; cluster-based permutation tests of the sum of t-values across time points; orientation reports: ∑T = 3522.5, 95% of permutations between -1000.6 and 1053.8, P < 0.001; colour reports: ∑T = 4065.3, 95% of permutations between -988.6 and 978.1, P < 0.001. Conventions as in Fig. 1. Error bars and shaded areas in all panels represent ± 1 s.e.m (n = 20).

The main result in experiment 4 is that the identified gaze bias was similarly observed when participants reported memorised colour (orange time course in Fig. 4c; cluster-based permutation test of the sum of t-values across time: ∑T= 4065.3, 95% of permutations between -988.6 and 978.1, n = 20 participants, P < 0.001). Moreover, this gaze bias was at least as prominent and robust for colour as it was for orientation reports (Fig. 4c), with no significant differences between block types (zero clusters found). The identified gaze bias – that depends on the memorised location of the probed item – thus generalises across the retrieval of orientation or colour of the memory item at that location.

Our results show that focusing attention on an item within the spatial layout of working memory involves the brain’s oculomotor system, with consequences that can be traced all the way to the eyes, and that can predict subsequent performance benefits. These findings expand the attentional role of the oculomotor system to the internal space of memory and carry relevant implications for the study as well as our understanding of the neural mechanisms by which our brains flexibly prioritise information in memory to serve adaptive behaviour.

In the domain of perception, the deployment of spatial attention has previously been shown to increase the propensity of small fixational gaze shifts (microsaccades) in the direction of covertly attended locations outside of current fixation27,28 that may be critical for attentional facilitation to occur29. This has been interpreted as an inadvertent spillover effect17,27 from activating oculomotor brain areas (such as the Frontal Eye Field and Superior Colliculus) that are recruited for both spatial attention and gaze. In the context of perception, however, it remains possible that such gaze behaviour reflects the sub-threshold consequence of the urge to look at the attended (or expected) item when explicitly instructed not to do so. Here, we demonstrate a similar gaze bias within the context of visual working memory, where there was nothing to look at (nor expected) in the direction of the bias. These data therefore not only imply a role for the oculomotor system in focusing attention within the internal space of memory, but also show that such oculomotor engagement leaves peripheral traces (17 for discussion of such ‘peripheral traces’) even when there is no incentive for them.

Memory-based ‘looking at nothing’ (33 for review), has previously been reported in the contexts of long-term memory retrieval26, visual imagery22,24,25, and semantic comprehension23,34. Our data provide a clear example of memory-based gaze behaviour in the context of visual working memory. In contrast to prior work, however, gaze behaviour in our task did not involve re-visiting previously occupied locations, but was constituted by gaze shifts of much smaller magnitudes (thus being more in line with the microsaccade-propensity biases discussed above; though we do not rule out the possibility that ocular drifts35,36 may contribute to the observed gaze position bias as well). In this sense, our observations reflect a looking-toward-nothing, rather than a looking-at-nothing, phenomenon. Moreover, unlike previous accounts, our data revealed that the identified gaze bias was not the consequence of the automatic co-activation of the ‘spatial tag’ of an item that occurs whenever an item is retrieved (probed) from memory. Instead, our bias depended on the need to bring the item into the internal focus of attention. Whether internal focusing may also account for previous demonstrations of looking at nothing remains an interesting possibility to be investigated.

We observed the spatial gaze bias even though item location was never asked about. Participants were asked to reproduce the orientation of the item that was probed through its colour, or the colour of the item that was probed through its orientation. In principle, colour-orientation bindings were thus sufficient to perform well on our working memory tasks. The fact that gaze became biased in the direction of incidental memory locations implies that these locations were nevertheless retained in memory and were used to select the appropriate item and to place it into the focus of attention. This thus provides compelling evidence for a grounding role of spatial location in organising as well as accessing and prioritising visual working memories (see also 16,37–43 for further evidence supporting such a grounding role). It also shows that the oculomotor system is utilised for visual working memory (as also demonstrated in 44–47) even when spatial location is not the target memory attribute.

In addition to these conceptual advances, these data also carry relevant practical implications. They show that gaze behaviour can provide a reliable and real-time proxy for attentional focusing in visual working memory, and can even predict the degree to which one benefits from such focusing for guiding subsequent behaviour. The real-time nature of this measure – capturing attentional focusing while it occurs – complements traditional measures of accuracy and reaction time that only provide a single value at the end of each trial. This provides a new, non-invasive way to investigate the involvement of the human oculomotor system in visual working memory, as well as the associated spatial grounding of this fundamental memory system.

Methods

Experimental procedures were reviewed and approved by the Central University Research Ethics Committee of the University of Oxford.

Participants

We present eye-tracking data from four complementary experiments. Data from experiment 1 came from an electroencephalography (EEG) study originally designed to investigate electrophysiological brain activity associated with working-memory-guided action (van Ede et al., accepted48). Here, we report the complementary eye-tracking data from this experiment that were not part of the original article. Twenty-five human volunteers participated in experiment 1 (age range 19-36, 11 male, 2 left handed). Sample size for experiment 1 was set based on our planned EEG analysis. No statistical methods were used to pre-determine sample size, but our sample size was chosen to be similar to those reported in previous publications from the lab that focused on similar neural signatures (e.g. 16). For the current eye-tracking analysis, data from two participants had to be excluded because of poor eye-tracking quality, leaving 23 participants. Experiments 2-4 were specifically designed to follow up the eye-tracking results of experiment 1. Because the identified gaze bias in experiment 1 was so robust, we set the sample size to 20 in experiments 2-4 (experiment 2: age range 22-40, 9 male, 0 left handed; experiment 3: age range 19-32, 8 male, 2 left handed; experiment 4: age range 18-37, 6 male, 0 left handed). One participant kept closing the eyes during experiment 3 and was replaced. Participant sampling was performed separately for each task. All participants had normal or corrected-to-normal vision. Participants provided written consent before participation and were reimbursed £15/hour for experiment 1 (which included EEG) and £10/hour for experiments 2-4.

Task essentials

All four experiments involved the same basic visual working-memory task in which participants remembered the orientation and colour of multiple visual bars in order to reproduce the orientation (experiments 1-4) or colour (experiment 4) of one of them after a working-memory delay. Bars were centred at an offset of 5.7 degrees visual angle from fixation (horizontally in the two-bar displays, and both horizontally and vertically in the four-bar displays of experiment 2) and were 5.7 degrees in length and 0.8 degrees in width. The to-be-reported bar was indicated by a change in colour (experiments 1-4) or shape (experiment 4) of the central fixation cross (the memory probe). In experiments 1-3, bars had unique colours that were drawn from a set of four (green, purple, orange, blue), whereas in experiment 4 bars were drawn either from a set of two colours (green, purple; report-orientation blocks) or from a set of 180 colours (report-colour blocks, detailed below). Every bar in a display was equally likely to be probed (or retro-cued before the probe, as in experiment 2), independent of its colour, orientation, or location. Participants had unlimited time after the memory probe before response initiation. The response dial consisted of a circle (5.7 degrees visual angle in diameter) with two small circular handles that had to be re-aligned to match the memorised orientation (experiments 1-4) or colour (experiment 4) of the probed bar. Dial-up was performed with either the keyboard (experiment 1) or the mouse (experiments 2-4), as further detailed below. The response dial appeared on the screen only at response initiation and was always positioned around the central fixation cross. Bar colour and orientation were always independent of bar location, and bar location was never explicitly asked about. Participants received feedback immediately after response termination: the fixation cross turned green (for 200 ms) for reproduction errors of less than 20 degrees, and red otherwise.

A custom gaze-calibration module was inserted after every task block. Participants were instructed to look at a small white calibration point that was re-positioned every 1 to 1.5 s to one out of 7 positions that were visited in randomised order. The positions we used were: left-top, left-middle, left-bottom, right-top, right-middle, right-bottom, as well as the centre of the screen (see also Supplementary Fig. 3). Calibration positions were set to 5.7 degrees visual angle in the horizontal and the vertical axes, corresponding to the centres of the bars used in the memory tasks.

Task variations

Experiment 1 (Fig. 1a) involved the most basic task with no additional manipulations. Experiment 2 additionally incorporated a retro-cue manipulation (Fig. 2a), experiment 3 additionally incorporated a fixation-displacement manipulation (Fig. 3a), and experiment 4 additionally included blocks in which participants reported the colour of the probed item as opposed to its orientation (Fig. 4a). The retro-cue manipulation in experiment 2 involved a transient (200 ms) colour change of the fixation cross that occurred in the middle of the retention interval (see Fig. 2a for exact intervals). The fixation cross either changed from black to a colour that matched one of the four bars (informative retro-cue) or from black to grey (neutral retro-cue). Informative retro-cues informed with 100% validity that the colour-matching bar would be probed after the second delay. Neutral cues were uninformative. Informative and neutral retro-cues were equally likely and were randomly intermixed across trials. The fixation-displacement manipulation in experiment 3 also occurred in the middle of the retention interval (Fig. 3a) and involved a temporary (500 ms) displacement (0.5 degrees visual angle) of the central fixation cross to either the left or the right. After displacement, the fixation cross was repositioned in the centre. Fixation displacements were not predictive of which bar would subsequently be probed, as they were equally likely to be in the same (congruent) or opposite (incongruent) direction as the memorised location of the to-be-probed bar. Congruent and incongruent trials were randomly intermixed. Finally, experiment 4 consisted of two types of blocks, which were randomly intermixed. In ‘orientation blocks’, participants were asked to report memorised bar orientation following a colour change of the central fixation cross (as per experiments 1-3). One bar was always green and the other one purple, and bar orientations were randomly drawn between 0 and 180 degrees. Conversely, in ‘colour blocks’, one bar was always vertical and the other horizontal, while bar colours were now drawn from a circular (CIELAB-based) colour space with 180 distinct colour values. In these blocks, the probe consisted of a shape change (turning the fixation cross to a “|” or “–”) and participants reported the colour of the shape-matching item on a mirror-symmetrical colour wheel (Fig. 4a). We used horizontal and vertical bar and probe orientations because these are each ‘neutral’ with respect to left-right gaze biases, and we used a mirror-symmetrical colour wheel to increase comparability with the orientation report.

There were also more subtle differences in settings between experiments. However, because we observed the same qualitative gaze bias across the four experiments, these variations do not appear essential for the bias to manifest, and we therefore mention them only briefly. In experiment 1, bars were oriented between ±20 and ±70 degrees (avoiding 20 degrees from horizontal and vertical), whereas in experiments 2-4 they spanned from 0 to ±90 degrees (full circle). In experiment 1, one bar was always oriented to the left and the other oriented to the right (though bar orientation and location remained orthogonal across trials), whereas in experiments 2-4 bar orientations were drawn independently from each other. Experiments 1, 3, and 4 always contained two bars that were positioned to the left and right of fixation (at 5.7 degrees visual angle), whereas experiment 2 contained four bars positioned in the four quadrants of the screen (at 5.7 degrees visual angle in the horizontal and the vertical axis). Bars were presented at encoding for 250 ms in experiments 1, 3, and 4, but for 500 ms in experiment 2. In experiment 1, orientation dial-up was performed by holding down one of two keys on the keyboard (the “\” key to rotate the dial leftward and the “/” key to rotate the dial rightward) and the response was terminated at key release. In experiments 2-4, the mouse (operated with the dominant hand) was used for dial-up, and the response was terminated when the left mouse button was pressed (or after the maximum dial-up time of 2500 ms after response initiation). The dial always started from the vertical (upright) orientation in experiment 1, but from a random orientation in experiments 2-4. Exact intervals differed between experiments too (see task schematics in Figs. 1-4).

Experiment 1 contained two consecutive sessions of one hour (with a 15-minute break), while experiments 2-4 each involved one session that lasted between one and one-and-a-half hours. In experiments 1-3, sessions always contained 10 blocks, while experiment 4 contained 14 blocks (7 orientation blocks and 7 colour blocks). Blocks contained 60 trials in experiments 1, 3, and 4, and 50 trials in experiment 2. In total, we collected 1200 trials per participant in experiment 1, 500 trials in experiment 2, 600 trials in experiment 3, and 840 trials in experiment 4.

Randomisation

All four experiments used within-subjects designs. We did not compare results across experiments. Within each experiment, probed item location was always randomised across trials, and so was retro-cue style (informative vs. neutral) in experiment 2 and the congruency of fixation displacement in experiment 3. The colour task and the orientation task in experiment 4 were randomised across blocks. Before randomisation, all conditions of interest were set to have equal trial numbers. Data collection and analysis were not performed blind to the conditions of the experiments.

Eye tracking acquisition

Participants sat in front of a monitor (100-Hz refresh rate) at a viewing distance of approximately 95 cm with their head resting on a chin rest. An eye tracker (EyeLink 1000, SR Research) was positioned on the table approximately 15 cm in front of the monitor. During the task, gaze was continuously tracked for both eyes simultaneously, at a sampling rate of 1000 Hz. Before acquisition, we calibrated the eye tracker using the built-in calibration and validation protocols from the EyeLink software.

Eye tracking analysis

Eye tracking data were first converted from the edf to the asc format, and subsequently read into Matlab using the Fieldtrip analysis toolbox49. Eye blinks were detected and interpolated (± 100 ms around identified blinks) based on a spline interpolation procedure, using custom code. While blink correction increased signal quality, we observed the identified gaze bias even before any such correction. After blink correction, data from the left and the right eye were averaged, yielding two channels per trial: one containing the time course of horizontal gaze position (X channel) and the other of vertical gaze position (Y channel). We epoched data around probe onset, response onset, and calibration point onset.

Our main analysis focused on gaze position. Position data were normalised using the data from the custom calibration modules. We obtained the median gaze position values (in both X and Y channels) that were associated with each of our 7 gaze calibration positions (Supplementary Fig. 3), considering gaze position values from 500 to 1000 ms after each calibration point displacement. These empirical gaze values corresponded to the values that would be obtained if participants looked at the centre of the memory items. Accordingly, we defined these values as ±100 percent (corresponding to ±5.7 degrees visual angle). Calibration values were obtained separately for each participant and were used to normalise the single-trial gaze position data during the task. To enhance interpretability, we report both percentage values and degrees visual angle (“dva”) values in all relevant graphs.
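The normalisation described above can be illustrated with a minimal sketch for a single axis. All function and parameter names are hypothetical, and the use of a separate linear scaling on each side of the centre is an assumption made for illustration; the text only specifies that the calibration values were defined as ±100 percent. The caller is assumed to pass in only the samples from 500 to 1000 ms after each calibration point displacement.

```python
from statistics import median

# From the text: +/-100 percent corresponds to +/-5.7 degrees visual angle.
DVA_PER_100_PERCENT = 5.7


def calibration_anchor(samples):
    """Median raw gaze value recorded while fixating one calibration point."""
    return median(samples)


def normalise(raw, centre, negative_anchor, positive_anchor):
    """Map a raw gaze value to percent: centre -> 0, anchors -> -100 / +100.

    Assumption: separate linear scaling on each side of the centre.
    """
    if raw >= centre:
        return 100.0 * (raw - centre) / (positive_anchor - centre)
    return -100.0 * (raw - centre) / (negative_anchor - centre)


def percent_to_dva(percent):
    """Convert normalised percent units to degrees visual angle."""
    return percent / 100.0 * DVA_PER_100_PERCENT
```

In this sketch, a raw value halfway between the centre anchor and the positive anchor maps to 50 percent, which corresponds to 2.85 degrees visual angle.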

Task modulations of gaze position were first identified (in experiment 1) by comparing trial-average normalised gaze position time courses between conditions in which the probed memory item occupied the left or the right position during encoding. To increase sensitivity and interpretability, we also constructed a measure of ‘towardness’ that expressed the gaze bias toward the memorised location of the probed bar in a single value. In experiments 1, 3, and 4 (with 1 left and 1 right item) towardness was defined as the average gaze position in the X channel following probes of right memory items minus left memory items, divided by 2. For experiment 2 (with 1 item in each quadrant), this additionally involved the complementary quantification in the Y channel (for top and bottom item positioning), and the averaging of the X and Y channel towardness values. Before averaging across participants, gaze time courses were smoothed by a Gaussian kernel with a 10 ms s.d.
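As a minimal sketch of the towardness definition, the functions below operate on trial-averaged, normalised gaze time courses held in plain lists. The function names are hypothetical, and the sign conventions (positive X is rightward, positive Y is upward) are assumptions of the sketch. Smoothing is left out.

```python
def towardness(gaze_right_probe, gaze_left_probe):
    """Towardness per time point: (right-probe gaze minus left-probe gaze) / 2.

    Inputs are trial-averaged X-channel time courses of equal length.
    """
    return [(r - l) / 2.0 for r, l in zip(gaze_right_probe, gaze_left_probe)]


def towardness_quadrants(x_right, x_left, y_top, y_bottom):
    """Four-item variant: average of the X and Y channel towardness values.

    Assumes positive Y means upward in the normalised space.
    """
    tx = towardness(x_right, x_left)
    ty = towardness(y_top, y_bottom)
    return [(a + b) / 2.0 for a, b in zip(tx, ty)]
```

Dividing the right-minus-left difference by 2 keeps the measure in the same units as the gaze position itself: if gaze moves 2 percent toward the probed side in both conditions, towardness is 2 percent.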

For visualisation purposes, we also constructed heat maps of gaze density. Within a desired time window of interest, we created two-dimensional histograms of gaze position, aggregating gaze position values within this time window from all trials (not averaging over time points or trials). To express this as a density, gaze position counts were divided by the total number of gaze position samples that entered the analysis. Two-dimensional histograms were obtained at a 1x1 percent spacing, ranging from -150 to +150 percent (in normalised space). Before averaging across participants, density maps were smoothed by a 10x10 percent rectangular box-car. To zoom in on the gaze biases of interest that depended on memorised item location, we also subtracted gaze density values that were shared between conditions in which the probed memory item had different locations. This removed values associated with the average gaze density following probing of left and right items in experiment 1, and following probing of all quadrant items in experiment 2, in order to selectively focus on the differences between them.
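The density computation can be sketched as a two-dimensional histogram over normalised gaze samples. This is an illustrative sketch, not the analysis code: the function name and the sparse dictionary representation are hypothetical, and dividing by all supplied samples (including any that fall outside the -150 to +150 percent range) is an assumption. The box-car smoothing and the between-condition subtraction are not shown.

```python
import math
from collections import Counter


def gaze_density(xs, ys, lo=-150, hi=150, step=1):
    """Sparse 2D histogram of gaze samples, expressed as a density.

    xs, ys: normalised gaze positions (percent), pooled over all time points
    and trials in the window of interest. Returns {(x_bin, y_bin): density}.
    """
    counts = Counter()
    for x, y in zip(xs, ys):
        if lo <= x < hi and lo <= y < hi:
            x_bin = math.floor((x - lo) / step)
            y_bin = math.floor((y - lo) / step)
            counts[(x_bin, y_bin)] += 1
    total = len(xs)  # assumption: every supplied sample counts toward the total
    return {cell: n / total for cell, n in counts.items()}
```

With the default settings, bin index 150 on either axis covers normalised positions from 0 up to (but not including) 1 percent.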

In addition to gaze position, we also quantified gaze shifts, focusing on shifts along the horizontal axis (X channel) in experiment 1. To identify shifts, we took the absolute value of the temporal derivative of gaze position and defined samples that exceeded 10 times the median value as a shift. To avoid the same shift being counted multiple times, we imposed a minimum delay of 200 ms between successive gaze shifts. Shift magnitude was defined as the difference in normalised gaze position between the 50 ms period preceding the identified shift sample and the 50 to 100 ms period after this sample (after minus before). Because gaze shifts were directional, we could label them as toward or away from the memorised location of the probed memory item. Identified gaze shifts with an estimated magnitude smaller than 1 percent (i.e. smaller than 0.057 degrees visual angle) were considered noise and therefore not considered further (though these may still have contributed to the average gaze-position bias quantified in our main analyses). We calculated gaze shift density (quantified as number of shifts per trial, at a given magnitude) as a function of shift magnitude by including all gaze shifts identified within the desired time window for analysis. For magnitude sorting, we used successive magnitude bins of 5 percent (as defined in our normalised space), ranging from 1 to 120 percent in steps of 1 percent. Similarly, we also quantified gaze shift density as a function of time (Supplementary Fig. 2a), for which we used a sliding time window of 100 ms, that we advanced in steps of 20 ms. Though we are aware that many of these parameters are relatively arbitrary, we note that highly similar patterns were obtained when using different settings for gaze shift identification, gaze magnitude estimation, and gaze density quantification.
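The shift-identification steps above can be sketched as follows, assuming one sample per millisecond (the 1000 Hz sampling rate reported earlier) so that sample counts and milliseconds coincide. Function names are hypothetical. Skipping candidate samples that lie too close to the edges of the epoch, and treating positive X as rightward, are assumptions of the sketch rather than details given in the text.

```python
from statistics import mean, median


def detect_gaze_shifts(x, threshold_factor=10, min_gap=200, min_magnitude=1.0):
    """Return (sample_index, magnitude) for each identified gaze shift.

    x: normalised horizontal gaze position (percent), one sample per ms.
    """
    speed = [abs(b - a) for a, b in zip(x, x[1:])]  # absolute temporal derivative
    threshold = threshold_factor * median(speed)
    shifts = []
    last_onset = None
    for i, s in enumerate(speed):
        if s <= threshold:
            continue
        if last_onset is not None and i - last_onset < min_gap:
            continue  # same shift, or too soon after the previous one
        if i < 50 or i + 100 > len(x):
            continue  # assumption: skip shifts too close to the epoch edges
        before = mean(x[i - 50:i])        # 50 ms preceding the shift sample
        after = mean(x[i + 50:i + 100])   # 50 to 100 ms after the shift sample
        last_onset = i
        magnitude = after - before
        if abs(magnitude) >= min_magnitude:  # smaller shifts are treated as noise
            shifts.append((i, magnitude))
    return shifts


def direction(magnitude, probed_side):
    """Label a shift 'toward' or 'away' relative to the probed item's side.

    probed_side is 'left' or 'right'; positive magnitude is assumed rightward.
    """
    points_right = magnitude > 0
    return "toward" if points_right == (probed_side == "right") else "away"
```

Because the threshold scales with the median derivative, the sketch needs some sample-to-sample variation in the trace; a perfectly flat trace gives a threshold of zero.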

Statistical evaluation

Reproduction errors and response times were compared between conditions using paired samples t-tests. Reproduction errors were defined as the absolute difference (in degrees) between the probed item’s orientation and the reported orientation. Response onset times (in ms) were defined as the time from probe onset to response initiation. Trials with response times with a z score larger than 4 were removed before statistical evaluation.
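A minimal sketch of the two behavioural measures follows. The function names are hypothetical. Two details are assumptions not stated in the text: the orientation error is wrapped at 180 degrees (a bar at 10 degrees and one at 170 degrees differ by 20 degrees), and z scores are computed with the sample standard deviation over the set of trials passed in.

```python
from statistics import mean, stdev


def orientation_error(target_deg, report_deg):
    """Absolute orientation difference in degrees, in the range 0 to 90.

    Assumption: orientations are treated as 180-degree periodic.
    """
    d = abs(target_deg - report_deg) % 180.0
    return min(d, 180.0 - d)


def drop_slow_outliers(rts_ms, z_max=4.0):
    """Remove trials whose response-time z score is larger than z_max."""
    m = mean(rts_ms)
    sd = stdev(rts_ms)
    return [rt for rt in rts_ms if (rt - m) / sd <= z_max]
```

Under the feedback rule described under Task essentials, a trial with `orientation_error(...) < 20` would have been followed by a green fixation cross.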

Statistical evaluation of the gaze time courses was based on a cluster-based permutation approach18. This approach is ideally suited for evaluating physiological effects across multiple data points (in our case, gaze position data across time). This approach effectively circumvents the multiple-comparisons problem by evaluating clusters in the observed group-level data against a single permutation distribution of the largest clusters that are found after random permutations (or sign-flipping) of the condition-specific, trial-average data at the participant level. We used 10,000 permutations and FieldTrip’s default cluster settings. Specifically, we clustered adjacent time points whose univariate (uncorrected) t-statistic of interest was significant (two-sided, alpha level of 0.05), and calculated our cluster-statistic as the sum of all t-values in each cluster. After each permutation, the largest cluster was defined as the cluster with the largest summed t value. When the data before permutation contained more than one cluster, each observed cluster was evaluated under the same permutation distribution of the largest cluster.
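To make the logic of this test concrete, here is a simplified, self-contained sketch of a sign-flipping cluster permutation test on towardness time courses. It is not FieldTrip's implementation, and all names are hypothetical. It simplifies in two ways: observed and permuted clusters are compared by the absolute value of their summed t, and the critical t value must be supplied by the caller (for example, 2.093 for 20 participants, two-sided alpha of 0.05).

```python
import math
import random
from statistics import mean, stdev


def t_values(data):
    """One-sample t statistic against zero at each time point.

    data: one list per participant, each holding a towardness time course.
    """
    n = len(data)
    out = []
    for column in zip(*data):
        sd = stdev(column)
        # Guard for a degenerate column with no variance across participants.
        out.append(mean(column) / (sd / math.sqrt(n)) if sd > 0 else 0.0)
    return out


def cluster_sums(t, t_crit):
    """Summed t of each run of adjacent supra-threshold, same-sign samples."""
    sums, current, sign = [], 0.0, 0
    for value in t:
        s = (value > t_crit) - (value < -t_crit)  # +1, -1, or 0
        if s != 0 and s == sign:
            current += value
        else:
            if sign != 0:
                sums.append(current)
            current, sign = (value, s) if s != 0 else (0.0, 0)
    if sign != 0:
        sums.append(current)
    return sums


def cluster_permutation_test(data, t_crit, n_permutations=10000, seed=0):
    """Return (cluster_sum, p_value) for every observed cluster."""
    rng = random.Random(seed)
    observed = cluster_sums(t_values(data), t_crit)
    null = []
    for _ in range(n_permutations):
        flipped = []
        for row in data:
            sign = rng.choice((-1, 1))  # flip one participant's whole time course
            flipped.append([sign * v for v in row])
        sums = cluster_sums(t_values(flipped), t_crit)
        null.append(max((abs(s) for s in sums), default=0.0))
    return [(s, sum(m >= abs(s) for m in null) / n_permutations)
            for s in observed]
```

Every observed cluster is compared against the same null distribution of largest clusters, which is what keeps the family-wise error rate controlled across time points.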

Inferential statistical evaluations of gaze were all based on the towardness time courses of gaze position. We additionally quantified densities of gaze position and gaze shifts in selected time windows that were identified in the towardness analysis. These follow-up analyses served only to characterise (in a descriptive sense) the nature of the identified gaze position bias.

All reported measures of spread involve ± 1 s.e.m., calculated across participants. All inferences were two-sided at an alpha level of 0.05 (0.025 per side). Confidence intervals of effect sizes (Cohen’s d) were calculated using the toolbox described in ref. 50. Data distributions were assumed to be normal, but this was not formally tested.

Acknowledgements

This research was funded by a Marie Skłodowska-Curie Fellowship from the European Commission (ACCESS2WM) to F.v.E., a Wellcome Trust Senior Investigator Award (104571/Z/14/Z) and a James S. McDonnell Foundation Understanding Human Cognition Collaborative Award (220020448) to A.C.N, and by the NIHR Oxford Health Biomedical Research Centre. The Wellcome Centre for Integrative Neuroimaging is supported by core funding from the Wellcome Trust (203139/Z/16/Z). The funders had no role in study design, data collection and analysis, decision to publish or preparation of the manuscript. The authors also wish to thank Alexander Board and Rocio Silva Zunino for their help with the data collection of experiment 4.

Footnotes

Data availability All data are publicly available through the Dryad Digital Repository at https://doi.org/10.5061/dryad.m99r286 (ref. 51).

Code availability Code will be made available by the authors upon request.

Author Contributions F.v.E and A.C.N conceived and designed the experiments; F.v.E programmed the experiments; F.v.E and S.R.C acquired the data; F.v.E analysed the data; F.v.E, S.R.C, and A.C.N interpreted the data; F.v.E and A.C.N drafted and revised the manuscript.

Competing interests The authors declare no competing interests.

References

- 1. Rizzolatti G, Riggio L, Dascola I, Umiltá C. Reorienting attention across the horizontal and vertical meridians: Evidence in favor of a premotor theory of attention. Neuropsychologia. 1987;25:31–40. doi: 10.1016/0028-3932(87)90041-8.

- 2. Kustov AA, Robinson DL. Shared neural control of attentional shifts and eye movements. Nature. 1996;384:74–77. doi: 10.1038/384074a0.

- 3. Deubel H, Schneider WX. Saccade target selection and object recognition: Evidence for a common attentional mechanism. Vision Res. 1996;36:1827–1837. doi: 10.1016/0042-6989(95)00294-4.

- 4. Nobre AC, et al. Functional localization of the system for visuospatial attention using positron emission tomography. Brain. 1997;120:515–533. doi: 10.1093/brain/120.3.515.

- 5. Moore T, Armstrong KM. Selective gating of visual signals by microstimulation of frontal cortex. Nature. 2003;421:370–373. doi: 10.1038/nature01341.

- 6. Moore T, Armstrong KM, Fallah M. Visuomotor origins of covert spatial attention. Neuron. 2003;40:671–83. doi: 10.1016/s0896-6273(03)00716-5.

- 7. Muller JR, Philiastides MG, Newsome WT. Microstimulation of the superior colliculus focuses attention without moving the eyes. Proc Natl Acad Sci. 2005;102:524–529. doi: 10.1073/pnas.0408311101.

- 8. Lovejoy LP, Krauzlis RJ. Inactivation of primate superior colliculus impairs covert selection of signals for perceptual judgments. Nat Neurosci. 2010;13:261–266. doi: 10.1038/nn.2470.

- 9. Krauzlis RJ, Lovejoy LP, Zénon A. Superior colliculus and visual spatial attention. Annu Rev Neurosci. 2013;36:165–82. doi: 10.1146/annurev-neuro-062012-170249.

- 10. Schall JD, Hanes DP. Neural basis of saccade target selection in frontal eye field during visual search. Nature. 1993;366:467–9. doi: 10.1038/366467a0.

- 11. Zhou H, Desimone R. Feature-based attention in the frontal eye field and area V4 during visual search. Neuron. 2011;70:1205–17. doi: 10.1016/j.neuron.2011.04.032.

- 12. Griffin IC, Nobre AC. Orienting attention to locations in internal representations. J Cogn Neurosci. 2003;15:1176–94. doi: 10.1162/089892903322598139.

- 13. Landman R, Spekreijse H, Lamme VAF. Large capacity storage of integrated objects before change blindness. Vision Res. 2003;43:149–164. doi: 10.1016/s0042-6989(02)00402-9.

- 14. Murray AM, Nobre AC, Clark IA, Cravo AM, Stokes MG. Attention restores discrete items to visual short-term memory. Psychol Sci. 2013;24:550–556. doi: 10.1177/0956797612457782.

- 15. Souza AS, Oberauer K. In search of the focus of attention in working memory: 13 years of the retro-cue effect. Attention, Perception Psychophys. 2016;78:1839–60. doi: 10.3758/s13414-016-1108-5.

- 16. van Ede F, Niklaus M, Nobre AC. Temporal expectations guide dynamic prioritization in visual working memory through attenuated α oscillations. J Neurosci. 2017;37:437–445. doi: 10.1523/JNEUROSCI.2272-16.2016.

- 17. Corneil BD, Munoz DP. Overt responses during covert orienting. Neuron. 2014;82:1230–1243. doi: 10.1016/j.neuron.2014.05.040.

- 18. Maris E, Oostenveld R. Nonparametric statistical testing of EEG- and MEG-data. J Neurosci Methods. 2007;164:177–90. doi: 10.1016/j.jneumeth.2007.03.024.

- 19. Müller MM, Teder-Sälejärvi W, Hillyard SA. The time course of cortical facilitation during cued shifts of spatial attention. Nat Neurosci. 1998;1:631–4. doi: 10.1038/2865.

- 20. Busse L, Katzner S, Treue S. Temporal dynamics of neuronal modulation during exogenous and endogenous shifts of visual attention in macaque area MT. Proc Natl Acad Sci. 2008;105:16380–5. doi: 10.1073/pnas.0707369105.

- 21. van Ede F, de Lange FP, Maris E. Attentional cues affect accuracy and reaction time via different cognitive and neural processes. J Neurosci. 2012;32:10408–10412. doi: 10.1523/JNEUROSCI.1337-12.2012.

- 22. Brandt SA, Stark LW. Spontaneous eye movements during visual imagery reflect the content of the visual scene. J Cogn Neurosci. 1997;9:27–38. doi: 10.1162/jocn.1997.9.1.27.

- 23. Spivey MJ, Geng JJ. Oculomotor mechanisms activated by imagery and memory: Eye movements to absent objects. Psychol Res. 2001;65:235–241. doi: 10.1007/s004260100059.

- 24. Martarelli CS, Mast FW. Eye movements during long-term pictorial recall. Psychol Res. 2013;77:303–309. doi: 10.1007/s00426-012-0439-7.

- 25. Laeng B, Bloem IM, D’Ascenzo S, Tommasi L. Scrutinizing visual images: The role of gaze in mental imagery and memory. Cognition. 2014;131:263–283. doi: 10.1016/j.cognition.2014.01.003.

- 26. Johansson R, Johansson M. Look here, eye movements play a functional role in memory retrieval. Psychol Sci. 2014;25:236–242. doi: 10.1177/0956797613498260.

- 27. Hafed ZM, Clark JJ. Microsaccades as an overt measure of covert attention shifts. Vision Res. 2002;42:2533–2545. doi: 10.1016/s0042-6989(02)00263-8.

- 28. Engbert R, Kliegl R. Microsaccades uncover the orientation of covert attention. Vision Res. 2003;43:1035–1045. doi: 10.1016/s0042-6989(03)00084-1.

- 29. Lowet E, et al. Enhanced neural processing by covert attention only during microsaccades directed toward the attended stimulus. Neuron. 2018;99:207–214.e3. doi: 10.1016/j.neuron.2018.05.041.

- 30. Hanning NM, Jonikaitis D, Deubel H, Szinte M. Oculomotor selection underlies feature retention in visual working memory. J Neurophysiol. 2016;115:1071–1076. doi: 10.1152/jn.00927.2015.

- 31. Ohl S, Rolfs M. Saccadic eye movements impose a natural bottleneck on visual short-term memory. J Exp Psychol Learn Mem Cogn. 2017;43:736–748. doi: 10.1037/xlm0000338.

- 32. Williams M, Pouget P, Boucher L, Woodman GF. Visual-spatial attention aids the maintenance of object representations in visual working memory. Mem Cogn. 2013;41:698–715. doi: 10.3758/s13421-013-0296-7.

- 33. Ferreira F, Apel J, Henderson JM. Taking a new look at looking at nothing. Trends Cogn Sci. 2008;12:405–410. doi: 10.1016/j.tics.2008.07.007.

- 34. Richardson DC, Spivey MJ. Representation, space and Hollywood Squares: Looking at things that aren’t there anymore. Cognition. 2000;76:269–295. doi: 10.1016/s0010-0277(00)00084-6.

- 35. Martinez-Conde S, Macknik SL, Hubel DH. The role of fixational eye movements in visual perception. Nat Rev Neurosci. 2004;5:229–40. doi: 10.1038/nrn1348.

- 36. Ahissar E, Arieli A, Fried M, Bonneh Y. On the possible roles of microsaccades and drifts in visual perception. Vision Res. 2016;118:25–30. doi: 10.1016/j.visres.2014.12.004.

- 37. Awh E, Jonides J. Overlapping mechanisms of attention and spatial working memory. Trends Cogn Sci. 2001;5:119–126. doi: 10.1016/s1364-6613(00)01593-x.

- 38. Kuo B-C, Rao A, Lepsien J, Nobre AC. Searching for targets within the spatial layout of visual short-term memory. J Neurosci. 2009;29:8032–8. doi: 10.1523/JNEUROSCI.0952-09.2009.

- 39. Dell’Acqua R, Sessa P, Toffanin P, Luria R, Jolicœur P. Orienting attention to objects in visual short-term memory. Neuropsychologia. 2010;48:419–428. doi: 10.1016/j.neuropsychologia.2009.09.033.

- 40. Eimer M, Kiss M. An electrophysiological measure of access to representations in visual working memory. Psychophysiology. 2010;47:197–200. doi: 10.1111/j.1469-8986.2009.00879.x.

- 41. Theeuwes J, Kramer AF, Irwin DE. Attention on our mind: The role of spatial attention in visual working memory. Acta Psychol. 2011;137:248–251. doi: 10.1016/j.actpsy.2010.06.011.

- 42. Foster JJ, Bsales EM, Jaffe RJ, Awh E. Alpha-band activity reveals spontaneous representations of spatial position in visual working memory. Curr Biol. 2017;27:3216–3223. doi: 10.1016/j.cub.2017.09.031.

- 43. Schneegans S, Bays PM. Neural architecture for feature binding in visual working memory. J Neurosci. 2017;37:3913–3925. doi: 10.1523/JNEUROSCI.3493-16.2017.

- 44. Umeno MM, Goldberg ME. Spatial processing in the monkey frontal eye field. II. Memory responses. J Neurophysiol. 2001;86:2344–2352. doi: 10.1152/jn.2001.86.5.2344.

- 45. Theeuwes J, Belopolsky A, Olivers CNL. Interactions between working memory, attention and eye movements. Acta Psychol. 2009;132:106–114. doi: 10.1016/j.actpsy.2009.01.005.

- 46. Merrikhi Y, et al. Spatial working memory alters the efficacy of input to visual cortex. Nat Commun. 2017;8:15041. doi: 10.1038/ncomms15041.

- 47. van der Stigchel S, Hollingworth A. Visuospatial working memory as a fundamental component of the eye movement system. Curr Dir Psychol Sci. 2018;27:136–143. doi: 10.1177/0963721417741710.

- 48. van Ede F, Chekroud SR, Stokes MG, Nobre AC. Concurrent visual and motor selection during visual working memory guided action. Nat Neurosci. 2019. doi: 10.1038/s41593-018-0335-6.

- 49. Oostenveld R, Fries P, Maris E, Schoffelen JM. FieldTrip: Open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Comput Intell Neurosci. 2011;2011:156869. doi: 10.1155/2011/156869.

- 50. Hentschke H, Stüttgen MC. Computation of measures of effect size for neuroscience data sets. Eur J Neurosci. 2011;34:1887–1894. doi: 10.1111/j.1460-9568.2011.07902.x.

- 51. van Ede F, Chekroud SR, Nobre AC. Data from: Human gaze tracks attentional focusing in memorised visual space. Dryad Digital Repository. 2019. doi: 10.5061/dryad.m99r286.
